
This paper compiles the essential aspects of deep learning for future research. Deep learning has gained massive popularity in computing research and application development, and many cognitive problems involving unstructured data are solved using this technology. Deep learning and artificial intelligence are the underlying paradigms of popular applications such as Google Translate. Machine learning and deep learning are both subsets of artificial intelligence. Deep learning algorithms are built from multiple neural network layers that replicate the functioning of the human brain. These layers learn repeatedly from training data drawn from structured datasets and use what they learn to make predictions on unstructured data. A deep learning network has three kinds of layers: the input layer, the hidden layer and the output layer. Neural networks are often used for image recognition. Big Data combined with deep learning can drive innovations beyond imagination in the future.

Deep learning is a subgroup of machine learning (Kaur et al., 2020; Liu et al., 2020) that emulates the working of the human brain through neural networks capable of making decisions. It belongs to the broader family of artificial intelligence, which uses computer systems to simulate the human brain. It simulates the action of the neurons present in the human brain to process information. Machine learning (ML) and deep learning (DL) are subsets of artificial intelligence (AI). Once a deep neural network has been trained, the application works on its own to finish the task (Kaur et al., 2020). The relationship between artificial intelligence, machine learning and deep learning is shown in Figure 1.

Figure 1. Comparison of Artificial Intelligence, Machine Learning and Deep Learning

Deep learning performs remarkably well in computer vision and language processing (Liu et al., 2020). The term refers to networks that have several deep layers for efficient learning. A deep learning model can be pictured as a set of decision points that respond according to the input. It is similar to the biological nervous system and works from already known information. In a neural network, each node works like a neuron in the human brain. Conventional machine learning works on an image by first extracting features such as height and shape and then applying them in an algorithm to obtain the output. In deep learning, the raw images are fed directly into the algorithm, without manual feature extraction, to obtain the output. Thus deep learning takes less time for execution.

The biological nervous network consists of neurons. Each neuron consists of a cell body, dendrites and an axon. The dendrites are mainly used for receiving signals from other neurons. The end of each axon is connected to the dendrites of other neurons (Lynn et al., 2019). The neuron generates an electrochemical pulse called an action potential, which travels along the axon and activates the neurons it reaches. Neural networks can solve tasks that conventional machine learning is unable to complete. A deep neural network is one with a large number of hidden layers, and its layers can learn directly from the given raw data on their own (Rafi et al., 2021). Artificial neural networks are made up of connected nodes that are organised like a human brain. Each neuron in the structure holds a numerical value, for example 3.4, 7.8, 2.33 or 0.49. The link between two artificial neurons corresponds to an axon in a biological network, and weights are used to represent these connections. Every task has a different set of numerical weights (Abiodun et al., 2019), and the weight values for a task cannot be predicted in advance. The goal of neural networks is to tackle tasks similar to those performed by the human brain.

The two main sorts of neural networks are feed-forward networks and feedback networks. Feed-forward networks consist of input, hidden and output layers. After calculations are performed on the input data, the newly generated values are passed forward to the next layer to determine the output. In feedback networks, signals travel in both directions through loops in the network.
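
The forward flow of values through a feed-forward network can be illustrated with a minimal sketch. The weights, biases, layer sizes and the choice of a sigmoid activation below are hypothetical values chosen only for illustration; they are not taken from any model discussed in this paper.

```python
import math

def sigmoid(x):
    """Logistic activation that squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per node, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def feed_forward(inputs, layers):
    """Pass values forward through each (weights, biases) layer in turn."""
    values = inputs
    for weights, biases in layers:
        values = dense(values, weights, biases)
    return values

# Hypothetical network: 2 inputs -> 2 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, 0.1]),
    ([[1.2, -0.7]], [0.05]),
]
output = feed_forward([1.0, 2.0], layers)
print(output)
```

Each layer only ever receives the values produced by the layer before it, which is what distinguishes this arrangement from a feedback network.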

The depth of its neural networks is what distinguishes deep learning. Compared with multilinear regression, artificial neural networks predict more accurate output.

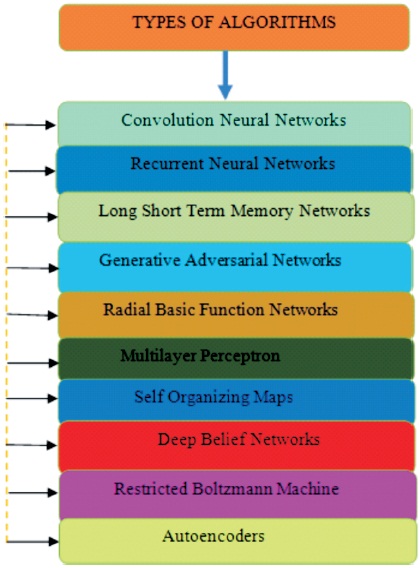

Deep learning algorithms are extensively used to solve problems. Each type uses a different neural network to solve complex problems. Ten types of algorithms are shown in Figure 2.

Figure 2. Taxonomy of Deep Learning Algorithms

Convolutional neural networks (CNNs) are mainly used for image processing, satellite imaging, medical image analysis, video recognition, etc. A CNN is a multi-layer algorithm that collects features from the given data and uses them to recognise objects (Aly & Almotairi, 2020; dos Santos et al., 2018). Its layers are the convolution layer, the rectified linear unit (ReLU) and the pooling layer. The convolution layer has filters that perform the convolution operation. The resulting elements are then passed through the rectified linear unit, which outputs a rectified feature map. The pooling layer, also known as down-sampling, reduces the dimensionality of the feature map (Yin et al., 2021). The two-dimensional layers are then flattened into a single layer, and the flattened matrix is used as input to identify the object.
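
The sequence of convolution, rectification, pooling and flattening described above can be sketched as follows. The 5x5 image and the 2x2 filter are made-up values for illustration, and the sketch assumes a stride of 1 with no padding; in a real network the filter values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the filter over the image (no padding, stride 1).

    As in most CNN implementations, the filter is not flipped, so this is
    strictly a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Rectified linear unit: negative values become zero."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Down-sample by keeping the largest value in each size x size window."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

def flatten(feature_map):
    """Turn the two-dimensional map into a single flat list."""
    return [v for row in feature_map for v in row]

# Hypothetical 5x5 grayscale image and 2x2 filter.
image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 0],
    [2, 1, 0, 1, 1],
    [0, 3, 1, 0, 2],
]
kernel = [[1, 0], [0, -1]]

features = flatten(max_pool(relu(convolve2d(image, kernel))))
print(features)
```

The flat list `features` is what a subsequent fully connected layer would take as input to classify the object.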

Recurrent neural networks are used for time-related data. They have a loop, called feedback, through which the output is given back to the input. The basic neuron has been adapted into more complex structures for greater performance (Lynn et al., 2019; Roy et al., 2020).

In recurrent neural networks, the input data is passed into a cell that activates and persists its state, and the output is fed into the feedback loop (Liu et al., 2021). The network then filters the data using its own set of weights.
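
A minimal sketch of this feedback loop is given below, using a single scalar state. The weight values `w_x`, `w_h` and `b` and the tanh activation are assumptions chosen for illustration only.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, feeding each state back in."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# A single pulse followed by zeros: the state persists after the input ends.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

Because the previous state is fed back at every step, the later states remain non-zero even though the later inputs are zero, which is how the cell carries information forward in time.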

Long short-term memory (LSTM) is a type of recurrent neural network that is used to remember past data for a longer period of time. Its principal applications are speech recognition and music composition. Data is sent at random intervals (Liu et al., 2020, 2021). First, irrelevant data from the prior state is removed. Second, the cell state values are updated. Finally, the output is generated from the new cell state.
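
The three phases above correspond to the forget, input and output gates of a standard long short-term memory (LSTM) cell. The sketch below shows one step of such a cell with scalar values; the parameter dictionary `p` and its gate names are hypothetical, introduced only for this illustration.

```python
import math

def sigmoid(x):
    """Logistic function, used here as a gate value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell.

    p maps each gate name to a hypothetical (w_x, w_h, bias) triple.
    """
    def gate(name, squash):
        w_x, w_h, b = p[name]
        return squash(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)        # phase 1: how much old state to keep
    i = gate("input", sigmoid)         # phase 2: how much new data to write
    g = gate("candidate", math.tanh)   # phase 2: the new candidate values
    c = f * c_prev + i * g             # updated cell state
    o = gate("output", sigmoid)        # phase 3: how much state to expose
    h = o * math.tanh(c)               # output based on the new cell state
    return h, c

# Hypothetical parameters for illustration.
params = {
    "forget": (0.5, 0.1, 0.0),
    "input": (0.6, 0.2, 0.0),
    "candidate": (0.9, 0.3, 0.0),
    "output": (0.4, 0.1, 0.0),
}
h, c = lstm_step(1.0, 0.0, 0.0, params)
print(h, c)
```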

Generative adversarial networks (GANs) are deep learning algorithms that generate new data resembling the training data. A GAN has two main components: the generator and the discriminator. The generator produces false data. The discriminator learns from the false data so that it can distinguish real data from false data. GANs solve many problems in computer vision and image processing (Shao et al., 2020). Generative adversarial networks are used to increase the period time and are mainly used in video games to upscale low-resolution images to higher resolution through image training. Finally, the result is sent back to both the generator and the discriminator.
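
The opposing goals of the two components can be made concrete through their loss functions. The sketch below assumes the commonly used binary cross-entropy formulation, with the generator loss in its non-saturating form; the function names are illustrative, and the probabilities passed in stand for the discriminator's estimate that a sample is real.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12
    prob = min(max(prob, eps), 1.0 - eps)  # avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated ones 0."""
    return bce(d_real, 1) + bce(d_fake, 0)

def generator_loss(d_fake):
    """The generator wants its samples to be scored as real (label 1)."""
    return bce(d_fake, 1)

# d_real / d_fake: discriminator outputs for a real and a generated sample.
print(discriminator_loss(0.9, 0.1))  # a discriminator that separates well
print(generator_loss(0.1))           # a generator that is easily caught
```

During training, both losses are computed from the same discriminator output, so each update to one component changes the loss of the other.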

Radial basis function networks (RBFNs) are a special type of network whose activation is computed with the help of radial functions. RBFNs are used in speech recognition, pattern recognition and image processing (Guo et al., 2020). An RBFN has three layers (input layer, hidden layer and output layer) and is used for classification and time-series prediction. It measures the input value against the training data. The hidden layer has a Gaussian transfer function whose output is inversely related to the distance from the center of the neuron.
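
The behaviour of the Gaussian hidden layer can be sketched as follows. The centers, the output weights and the `width` parameter are hypothetical; in practice the centers are usually derived from the training data.

```python
import math

def gaussian_rbf(x, center, width=1.0):
    """Gaussian response: 1 at the center, falling off with distance."""
    dist_sq = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist_sq / (2.0 * width ** 2))

def rbfn_output(x, centers, out_weights, width=1.0):
    """Hidden layer of Gaussian units followed by a weighted sum."""
    hidden = [gaussian_rbf(x, c, width) for c in centers]
    return sum(w * h for w, h in zip(out_weights, hidden))

# Hypothetical network with two hidden units.
centers = [[0.0, 0.0], [2.0, 2.0]]
out_weights = [1.0, -1.0]
print(rbfn_output([0.1, 0.2], centers, out_weights))
```

An input close to a center produces a hidden activation near 1, while a distant input produces an activation near 0, so each hidden unit effectively measures similarity to one stored prototype.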

A multilayer perceptron (MLP) has activation functions and consists of fully connected input and output layers. The same number of input and output layers is used to build speech and image recognition systems. The MLP feeds data to the input layer and performs computations between the input layer and the hidden layer. A large database has been constructed for testing (Mokbal et al., 2019). The MLP uses activation functions such as sigmoid and tanh to determine the output of its nodes.

Self-organizing maps (SOMs) help in understanding high-dimensional data (Aly & Almotairi, 2020). A self-organizing map initializes the weights of its nodes from the training data. Each node is examined and compared with the input vector. The winning node is called the Best Matching Unit.
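
Finding the Best Matching Unit amounts to a nearest-neighbour search over the node weights. The sketch below assumes squared Euclidean distance as the comparison measure. The `update_node` helper shows the usual follow-up step of pulling the winner toward the input, which is an addition for illustration and not described in the text above; the node weights and learning rate are made-up values.

```python
def best_matching_unit(nodes, x):
    """Return the index of the node whose weights are closest to x."""
    def dist_sq(weights):
        return sum((w - xi) ** 2 for w, xi in zip(weights, x))
    return min(range(len(nodes)), key=lambda k: dist_sq(nodes[k]))

def update_node(weights, x, lr=0.5):
    """Move a node's weights a fraction lr of the way toward the input."""
    return [w + lr * (xi - w) for w, xi in zip(weights, x)]

# Hypothetical map with three nodes and one two-dimensional input vector.
nodes = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
x = [0.9, 1.2]

bmu = best_matching_unit(nodes, x)
nodes[bmu] = update_node(nodes[bmu], x)
print(bmu, nodes[bmu])
```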

Deep belief networks (DBNs) are used for accuracy and detection (Wang et al., 2021). A DBN has multiple layers of latent variables, whose binary values are often called hidden units. Each layer is connected to both the previous and the subsequent layer, and DBNs are used for image recognition and motion capture data. A DBN finds the values in each layer in a bottom-up pass.

Deep belief networks are built on the restricted Boltzmann machine model (Rathore et al., 2019) and are mainly used to generate images, to recognize images and to capture data.

The restricted Boltzmann machine (RBM) is a deep learning algorithm used for dimensionality reduction that learns from sets of inputs. It has two layers, called visible units and hidden units (Li et al., 2018), and every visible unit is connected to all hidden units. The RBM collects the set of inputs and encodes them in a forward pass, passing the output to the hidden layer. In the backward pass, the activations of the individual hidden units are passed back to the visible layer. In the visible layer, the RBM compares the reconstruction with the original value and analyzes the result.
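
The forward pass, the pass back to the visible layer and the comparison with the original input can be sketched as below. This is a simplified, deterministic illustration that propagates activation probabilities instead of sampling binary states, and the weight matrix and biases are hypothetical.

```python
import math

def sigmoid(x):
    """Logistic function giving a unit's activation probability."""
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probs(visible, weights, hidden_bias):
    """Forward pass: weights[i][j] links visible unit i to hidden unit j."""
    return [
        sigmoid(sum(v * weights[i][j] for i, v in enumerate(visible)) + hb)
        for j, hb in enumerate(hidden_bias)
    ]

def visible_probs(hidden, weights, visible_bias):
    """Backward pass: reconstruct the visible layer from the hidden layer."""
    return [
        sigmoid(sum(h * weights[i][j] for j, h in enumerate(hidden)) + vb)
        for i, vb in enumerate(visible_bias)
    ]

def reconstruction_error(visible, weights, hidden_bias, visible_bias):
    """Compare the reconstruction with the original input (squared error)."""
    hidden = hidden_probs(visible, weights, hidden_bias)
    rebuilt = visible_probs(hidden, weights, visible_bias)
    return sum((v - r) ** 2 for v, r in zip(visible, rebuilt))

# Hypothetical RBM with 3 visible units and 2 hidden units.
weights = [[0.8, -0.2], [-0.5, 0.4], [0.7, 0.1]]
error = reconstruction_error([1, 0, 1], weights, [0.0, 0.0], [0.0, 0.0, 0.0])
print(error)
```

Training an RBM adjusts the weights so that this reconstruction error becomes small for inputs resembling the training data.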

Autoencoders are a special type of neural network in which the input and output are identical. The network maps the input data to the output layer (Bellini et al., 2019). Autoencoders are mainly used in image processing and popularity prediction. An autoencoder receives the input and transforms it into a different representation. It first encodes the image, reducing the size of the input, and finally decodes the encoded form to reconstruct the image.
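
The encode and decode steps can be illustrated with a tiny linear example. The weight matrices below are hand-picked for illustration (the encoder averages neighbouring pairs and the decoder copies each code value back out); a real autoencoder learns its weights by minimizing the reconstruction error and usually includes non-linear activations.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def encode(x, enc_weights):
    """Compress the input into a smaller code."""
    return mat_vec(enc_weights, x)

def decode(code, dec_weights):
    """Expand the code back to the original input size."""
    return mat_vec(dec_weights, code)

# Hypothetical hand-picked weights: 4 inputs -> 2 code values -> 4 outputs.
enc_weights = [
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 0.5],
]
dec_weights = [
    [1.0, 0.0],
    [1.0, 0.0],
    [0.0, 1.0],
    [0.0, 1.0],
]

x = [2.0, 2.0, 6.0, 6.0]
code = encode(x, enc_weights)
rebuilt = decode(code, dec_weights)
print(code, rebuilt)
```

The code is half the size of the input, yet for inputs with this pairwise structure the decoder recovers the original values, which is the sense in which the input and output of an autoencoder match.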

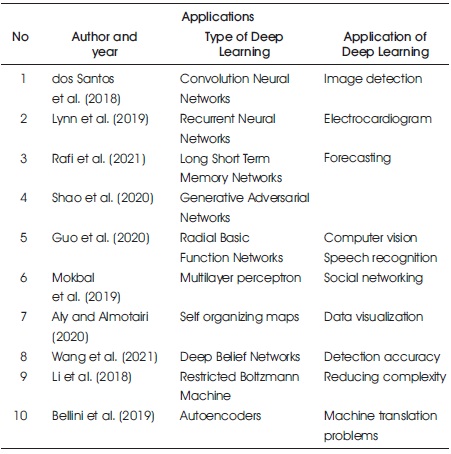

Applications of deep learning are found in image detection, forecasting, computer vision, speech recognition, social networking, data visualization, detection accuracy, complexity reduction and machine translation problems. A summary of some of the applications of deep learning is tabulated in Table 1.

Table 1. Some of the Applications of Deep Learning

Feature learning is a fundamental task that provides knowledge about the problem and improves accuracy. In deep learning, feature learning is performed automatically by the algorithms: they scan the data, identify the features and promote learning. With neural networks, the work is completed in a short period of time, and mistakes are corrected by repeating the task more than a thousand times. Deep learning algorithms try to grasp high-level features and therefore eliminate the need for domain expertise and manual feature extraction. The neural networks can be reused for other applications and data types, which makes deep learning more flexible for newer applications.

Deep learning requires a very large amount of time to achieve good performance on any given task, and the cost of training complex models is high. It is also quite difficult for a less experienced person to adopt, because there is no specific theory for training, for selecting the proper network topology, or for choosing strategies to complete the specified tasks.

This study has addressed deep learning and its various kinds. Ten types of deep learning algorithms were covered, along with their applications. In the near future, neural networks will not only be deep but will also evolve gradually. The right design and development of a network will improve both efficiency and problem solving. Deep neural networks are effective at dealing with unstructured data due to their vast number of features. Deep learning has become an indispensable component of technology.