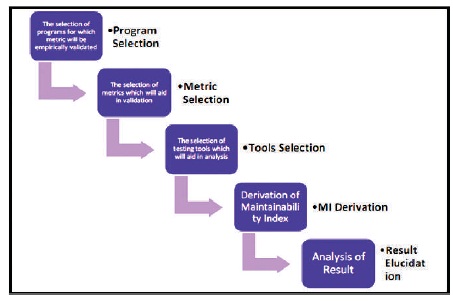

Figure 1. Methodology Elaborated

The main goal of software engineering is to develop economical, high-quality software products. The quality of a software product plays a critical role in decision making and affects the success of a business. To build quality into a software product, its qualitative attributes need to be identified and then correlated with quantitative measures; software metric values thus indicate the structural complexity of a software product. In this research paper, six metrics, namely Lines of Code (LOC), Cyclomatic Complexity (MVG), Lines of Comment (COM), Number of Methods (NOM), Coupling Between Objects (CBO) and Halstead Volume (HV), have been applied to investigate two searching and five sorting programs written in Java. Three software measurement tools have been applied to these programs to draw conclusions about their implementation with respect to the referenced metrics. Additionally, the Maintainability Index has been derived from the base metrics to compare the maintainability of the source code. This comparative study analyses the results obtained for the same programs. Correctness, fault-proneness, modularity and threats to validity are also discussed.

In software engineering, quality measurement and conformance guide the design of a product. Evaluating software quality requires attributes that can be defined and tracked throughout the development process. As the software industry grows, software quality is gaining importance. The software industry as a whole recognizes the significance of quality, expects it in the end product and is not willing to compromise on it. Software metrics are the means by which we can track and assess the development of a product and make the changes necessary for it to conform to its design.

It is somewhat difficult to define the word quality. In simple terms, quality can be defined as follows: “In business, engineering and manufacturing, quality has a pragmatic interpretation as the non-inferiority or superiority of something; it is also defined as being suitable for its intended purpose while satisfying customer expectations.”

IEEE defines software quality as “The degree to which a system, component, or process meets specified requirements and/or customer expectations.”

The previous standard for software quality measurement was ISO/IEC 9126. It categorized software quality into six characteristics (factors), namely functionality, reliability, usability, efficiency, maintainability and portability, which were further subdivided into sub-characteristics (criteria). The newer standard for software quality measurement is ISO/IEC 25010, which introduces two additional characteristics. The difference between the two lies mainly in how they categorize and define these non-functional characteristics. ISO/IEC 25010 categorizes software quality into eight characteristics: functional suitability, performance efficiency, compatibility, usability, reliability, security, maintainability and portability (ISO/IEC 25010, 2011).

Software metrics are tools for collecting valuable information in the form of quantitative measures, which can support the necessary decisions at the various stages of the software development life cycle.

Measurement is a necessity in software engineering, and software metrics are its instruments. Many researchers have investigated the relationship between metrics and software quality. Studies have been carried out on the distribution of faults during development and their relationship with metrics such as size and complexity metrics. Akiyama (1971) made the first attempt to use metrics for software quality prediction, using KLOC as a surrogate measure for program complexity. The Chidamber and Kemerer (1994) metric suite can serve as an early internal indicator of externally visible product quality. Dagpinar & Jahnke (2003) investigated the significance of different metrics for predicting the maintainability of software. Nagappan et al. (2006) focused on mining metrics to predict component failures. Ambu et al. (2006) addressed the evolution of quality metrics in an agile/distributed project and investigated how the distribution of the development team affected code quality. Lee et al. (2007) provide an overview of open-source software evolution with software metrics. Xie et al. (2009) conducted an empirical analysis of the evolution of seven open-source programs and also investigated Lehman's laws of evolution. Murgia et al. (2009) addressed software quality evolution in open-source projects using agile practices and concluded that no single metric can explain the bug distribution in the evolution of the analysed systems. Zhang and Kim (2010) used c-charts and patterns to monitor quality evolution over a long period, with the number of defects as the quality indicator. Yu et al. (2013) studied the possibility of using the number of bug reports as a software quality measure.

Software measurement can be performed in two ways: direct measurement, which does not depend on any other attribute, and indirect measurement, which is derived from other attributes. In this paper, we evaluate source code through metric-based analysis; Figure 1 explains the methodology. The first step is the selection of the programs for which the metrics will be empirically validated. We have selected two search algorithms, Linear Search and Binary Search, and five sorting algorithms, Bubble Sort, Heap Sort, Insertion Sort, Merge Sort and Selection Sort, all written in Java. The second step is the selection of the metrics that will help in their validation. The third step is the selection of the measurement tools that will help in the analysis. The fourth step is the derivation of the Maintainability Index from the metric values acquired with these tools. The fifth step is the analysis and interpretation of the results.


We have selected three software metric tools for the analysis of the Java programs: C and C++ Code Counter (CCCC), Source Monitor (SM) and JHawk (JHK). Several tools were used so that the similarities and differences among their results could be checked and analysed. From the metrics these tools report, we selected those required to derive the Maintainability Index, namely Lines of Code (LOC), Cyclomatic Complexity (MVG), Halstead Volume (HV) and Lines of Comment (COM). A few further quality aspects are derived later.

These metrics aid decision making by providing knowledge of code attributes and their potential applications. A brief description of each follows.

Lines of Code (LOC) is the oldest and most widely used size metric. The simplest way to measure the size of a software program is to count the number of lines in the text of its source code. LOC is used to predict the effort required to develop a program, as well as to estimate programming productivity or effort once the software is produced. Several cost, schedule and effort estimation models use LOC as an input parameter (Fenton & Pfleeger, 1996).
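Tools differ in what exactly they count as a "line", which is one reason their LOC values can disagree. The following minimal sketch, assuming two simple definitions (physical lines, and non-blank lines), shows how such counts can be computed in Java 11 or later; the class and method names are hypothetical and the counts will not necessarily match CCCC, Source Monitor or JHawk.

```java
/**
 * Hypothetical helper: naive line counts for a piece of source text.
 * These are simplified definitions, not the exact rules of CCCC, Source Monitor or JHawk.
 */
final class LocCounter {

    /** Physical lines: every line of the text, including blank ones. */
    static int physicalLines(String source) {
        return (int) source.lines().count();
    }

    /** Non-blank lines: lines with at least one non-whitespace character. */
    static int nonBlankLines(String source) {
        return (int) source.lines().filter(line -> !line.isBlank()).count();
    }

    public static void main(String[] args) {
        // Hypothetical linear search method, used only as input text.
        String program = String.join("\n",
                "int linearSearch(int[] a, int key) {",
                "",
                "    for (int i = 0; i < a.length; i++) {",
                "        if (a[i] == key) return i;",
                "    }",
                "    return -1;",
                "}");
        System.out.println(physicalLines(program)); // 7
        System.out.println(nonBlankLines(program)); // 6
    }
}
```

A real tool would additionally decide whether comment-only lines count towards LOC; that choice alone can change the reported size noticeably for small programs such as the ones studied here.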

Cyclomatic complexity is a software metric developed by Thomas J. McCabe to indicate the complexity of a program. It directly measures the number of linearly independent paths through a program's source code. Cyclomatic complexity is defined with reference to the control-flow graph of a program: a directed graph whose nodes are the program's basic blocks, with an edge between two basic blocks if control may pass from the first to the second (McCabe, 1976). The complexity is then defined as

$$M = E - N + 2P$$

where

M = cyclomatic complexity

E = the number of edges of the graph

N = the number of nodes of the graph

P = the number of connected components

It is used to limit the complexity of routines during program development and to evaluate the risk associated with an application or program.
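The formula M = E - N + 2P can be applied directly once a control-flow graph is available. The sketch below assumes the graph is already given as a node count and an edge list (extracting it from Java source, as the tools do, is out of scope); it counts connected components with a small union-find and then evaluates McCabe's formula. The class name and the example graph are hypothetical.

```java
/** Sketch: McCabe's M = E - N + 2P for a control-flow graph given as an edge list. */
final class CyclomaticComplexity {

    /** Counts connected components (edge direction ignored) with a simple union-find. */
    static int connectedComponents(int nodeCount, int[][] edges) {
        int[] parent = new int[nodeCount];
        for (int i = 0; i < nodeCount; i++) parent[i] = i;
        int components = nodeCount;
        for (int[] e : edges) {
            int a = find(parent, e[0]);
            int b = find(parent, e[1]);
            if (a != b) {          // edge joins two previously separate components
                parent[a] = b;
                components--;
            }
        }
        return components;
    }

    private static int find(int[] parent, int x) {
        while (parent[x] != x) {
            parent[x] = parent[parent[x]]; // path halving keeps the trees shallow
            x = parent[x];
        }
        return x;
    }

    /** M = E - N + 2P. */
    static int complexity(int nodeCount, int[][] edges) {
        int p = connectedComponents(nodeCount, edges);
        return edges.length - nodeCount + 2 * p;
    }

    public static void main(String[] args) {
        // Hypothetical CFG of a single if/else: 0 = condition, 1 = then, 2 = else, 3 = exit.
        int[][] ifElse = { {0, 1}, {0, 2}, {1, 3}, {2, 3} };
        System.out.println(complexity(4, ifElse)); // 4 - 4 + 2 * 1 = 2
    }
}
```

For a single routine P = 1, so M reduces to E - N + 2; analysing several routines together as one graph with P components gives the sum of their individual complexities.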

Comment lines enhance the readability of source code, although not all comments contribute equally: each comment serves a specific purpose. Some comments are written for end-user developers, whereas others are written for internal developers. The most evident kind of comment is one that summarizes the purpose of the code, which underlines the significance of research on automatically generating such comments. During software maintenance, task performance depends on the quality of both code and comments. If comments are neglected, maintenance performance depends on the skill of the developers and the complexity of the code; when well-written comments are available, maintenance becomes easier (Pascarella et al., 2019).
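To make the COM metric concrete, the following sketch counts lines that contain at least part of a Java comment (`//` line comments and `/* ... */` or Javadoc block comments). It is a naive heuristic under stated assumptions: it does not recognise string or character literals, so text such as a URL inside a string would be miscounted, and the tools used in this study may apply different rules. The class name is hypothetical.

```java
/**
 * Hypothetical helper: counts lines that contain (part of) a Java comment.
 * Naive sketch: string and char literals are not recognised.
 */
final class CommentLineCounter {

    static int commentLines(String source) {
        int count = 0;
        boolean inBlock = false; // true while inside an unterminated block comment
        for (String line : source.split("\\R")) {
            boolean hasComment = inBlock;
            int i = 0;
            while (i < line.length()) {
                if (inBlock) {
                    int end = line.indexOf("*/", i);
                    if (end < 0) {
                        i = line.length();  // block comment continues on the next line
                    } else {
                        inBlock = false;    // block comment closes on this line
                        i = end + 2;
                    }
                } else if (line.startsWith("//", i)) {
                    hasComment = true;      // the rest of the line is a comment
                    break;
                } else if (line.startsWith("/*", i)) {
                    hasComment = true;      // block or Javadoc comment opens
                    inBlock = true;
                    i += 2;
                } else {
                    i++;
                }
            }
            if (hasComment) count++;
        }
        return count;
    }

    public static void main(String[] args) {
        String program = String.join("\n",
                "/**",
                " * Returns the index of key, or -1.",
                " */",
                "int find(int[] a, int key) { // linear scan",
                "    for (int i = 0; i < a.length; i++) if (a[i] == key) return i;",
                "    return -1;",
                "}");
        System.out.println(commentLines(program)); // 4
    }
}
```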

The number of methods (NOM) is defined as the total number of methods in a class, including all public, private and protected methods. This metric counts the methods per class to measure the size of classes in terms of their implemented operations. It is used to identify the potential reuse of a class: in general, classes with a large number of methods are harder to reuse, because they are more likely to be less cohesive (Lorenz & Kidd, 1994). Thus, it is recommended that a class not have an excessive number of methods (Fraser et al., 1997).
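For compiled Java classes, a count close to this definition can be obtained through reflection. The sketch below is an illustration under assumptions, not how CCCC, Source Monitor or JHawk work (they parse source code): `Class.getDeclaredMethods()` returns the methods declared in the class itself, of any visibility, excluding inherited methods and constructors, and compiler-generated (synthetic) methods such as lambda bodies are filtered out. The `Sorter` class is hypothetical.

```java
import java.util.Arrays;

final class NumberOfMethods {

    /** Counts methods declared in the class itself (any visibility), skipping compiler-generated ones. */
    static long nom(Class<?> type) {
        return Arrays.stream(type.getDeclaredMethods())
                .filter(m -> !m.isSynthetic())
                .count();
    }

    // Hypothetical class used only to demonstrate the count.
    static class Sorter {
        public void sort(int[] a) { }
        protected void swap(int[] a, int i, int j) { }
        private boolean less(int x, int y) { return x < y; }
    }

    public static void main(String[] args) {
        System.out.println(nom(Sorter.class)); // 3 (public, protected and private methods)
    }
}
```

Whether constructors should be included is a definitional choice that varies between tools; this sketch leaves them out.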

The coupling between objects (CBO) metric, part of the Chidamber and Kemerer (1994) suite, was empirically evaluated by Harrison et al. (1998). It measures how much an object of one class uses the methods or properties of objects of other classes. Coupling between classes is required for a system to do useful work, but excessive coupling makes the system more difficult to maintain and reuse.
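Once the "uses" relationships between classes are known, CBO reduces to counting distinct coupled classes. The sketch below assumes those relationships have already been extracted into a map (real tools obtain them by parsing the code) and counts coupling in both directions, i.e. classes a class uses plus classes that use it, excluding itself. The class names in the example are hypothetical.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

final class CouplingBetweenObjects {

    /**
     * CBO of a class: the number of distinct other classes it uses or is used by.
     * 'uses' maps each class name to the classes whose methods or fields it references.
     */
    static int cbo(String className, Map<String, Set<String>> uses) {
        Set<String> coupled = new HashSet<>(uses.getOrDefault(className, Set.of()));
        for (Map.Entry<String, Set<String>> e : uses.entrySet()) {
            if (e.getValue().contains(className)) coupled.add(e.getKey()); // incoming coupling
        }
        coupled.remove(className); // self-references do not count
        return coupled.size();
    }

    public static void main(String[] args) {
        // Hypothetical dependencies between four classes.
        Map<String, Set<String>> uses = Map.of(
                "SearchApp", Set.of("LinearSearch", "BinarySearch"),
                "BinarySearch", Set.of("ArrayUtils"),
                "LinearSearch", Set.of(),
                "ArrayUtils", Set.of());
        System.out.println(cbo("SearchApp", uses));    // 2
        System.out.println(cbo("BinarySearch", uses)); // 2 (ArrayUtils and SearchApp)
        System.out.println(cbo("ArrayUtils", uses));   // 1
    }
}
```

Single-class programs like the searching and sorting examples in this study would typically have a CBO of zero or close to it, which is consistent with all tools reporting the same value.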

Halstead's metrics, developed by Maurice Halstead, characterize a module or program by its operators and operands, and define the minimal (potential) numbers of operators and operands needed to express it (Szulewski et al., 1984). They are strong indicators of code complexity; because they are computed from code, they are most often used as maintenance metrics (Halstead, 1977). Halstead volume is the size of a program's implementation when its vocabulary is encoded in a uniform binary encoding, so volume is measured in the common unit of size, bits (Albrecht, 1979). It is calculated as the program length N (the total number of operator and operand occurrences) times the base-2 logarithm of the vocabulary size n (the number of distinct operators and operands):

$$V = N \log_2 n$$
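Given the four basic Halstead counts, the volume follows directly from the formula. The sketch below assumes the operator and operand counts have already been obtained (deciding what counts as an operator or operand in Java is tool-specific); the class name and the example counts are hypothetical.

```java
final class HalsteadVolume {

    /**
     * V = N * log2(n), where N = N1 + N2 (total operator and operand occurrences)
     * and n = n1 + n2 (distinct operators and operands).
     */
    static double volume(int totalOperators, int totalOperands,
                         int distinctOperators, int distinctOperands) {
        int length = totalOperators + totalOperands;           // N
        int vocabulary = distinctOperators + distinctOperands; // n
        if (vocabulary < 1) throw new IllegalArgumentException("empty vocabulary");
        return length * (Math.log(vocabulary) / Math.log(2)); // change of base to log2
    }

    public static void main(String[] args) {
        // Hypothetical counts: N1 = 10, N2 = 6, n1 = 5, n2 = 3, so N = 16 and n = 8.
        System.out.println(volume(10, 6, 5, 3)); // approximately 48 bits (16 * log2 8)
    }
}
```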

CCCC was developed as freeware by Tim Littlefair and is released in source code form. It was produced as an artefact of an academic research project whose primary motivation was to provide a platform for exploring issues related to metrics. CCCC analyses source code in various languages and generates an HTML report on various measurements of the code processed. To use CCCC, one simply runs it with the names of a selection of files on the command line. CCCC processes each of the files specified, running the appropriate parser on each one. As each file is parsed, recognition of certain constructs causes records to be written into an internal database, from which summary and detailed reports are generated in HTML and XML format. The HTML report normally consists of six tables plus a table of contents, and the metrics displayed include LOC, MVG and COM.

Source Monitor is a freeware tool developed at Campwood Software. It is a standard Windows GUI tool that gathers cyclomatic complexity statistics and other metrics for many languages, collecting them in a single fast pass through the source files. It measures metrics for source code written in C++, C, C#, VB.NET, Java or HTML, including method- and function-level metrics, and saves metrics in checkpoints for comparison during software development projects. It provides metrics such as the number of lines, classes and interfaces, methods per class and statements, as well as aggregated measures such as average method size and the percentage of comments. It can display and print metrics in tables and charts and export them to XML or CSV files for further processing with other tools.

JHawk is a standalone code analysis tool. It takes the source code of a project and calculates metrics based on numerous aspects of the code, such as volume, complexity, relationships between classes and packages, and relationships within classes and packages. JHawk also allows new metrics to be created. Its results can be viewed in the application itself or exported to HTML, CSV and XML formats.

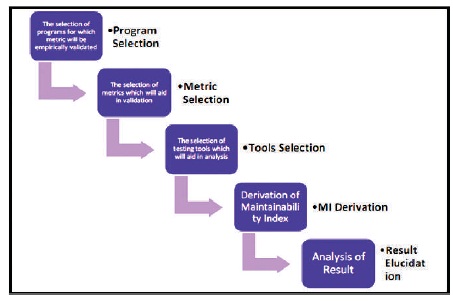

After preliminary investigation and setup, the code analyses were performed. The tools described above were used to analyse two Java programs implementing two searching techniques, Linear Search and Binary Search. Table 1 gives a brief description of the source programs.

Table 1. Source Program Description

Both programs were evaluated with these tools and the results were analysed. The tools also calculated other metrics, but we recorded only those required for this study, as shown in Table 2.

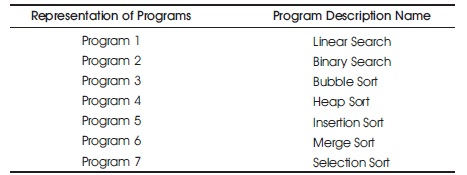

Table 2 shows that metrics such as coupling between objects and number of methods have identical values for the same program across all tools, which suggests that the tools compute these metrics consistently. Lines of comment have the same values for the same program in CCCC and JHK; only SM reports them as a percentage. Cyclomatic complexity also has nearly the same values for the same program across the tools. Overall, the table shows that different software measurement tools produce nearly the same values of the same metrics for the same program. A comparative analysis of HV is not possible, as only one of the tools measures it. The results can be understood further through visual representations (charts). Figure 2 displays the results of the C and C++ Code Counter tool as a column chart; similarly, Figures 3 and 4 display the results of the Source Monitor and JHawk tools, respectively.

Figure 2. Result of C and C++ Code Counter Tool

Figure 3. Result of Source Monitor Tool

Figure 4. Result of JHawk Tool

Further analysis of the above experiment is summarized in Table 3.

Empirical evidence shows that software quality is related to metrics that measure the complexity of a program, the size of the program, the number of pages of documentation generated, CBO (Coupling Between Objects) and NOM (Number of Methods). These metrics help in maintaining software quality.

The Maintainability Index (MI) is a single-value indicator of the maintainability of a software system, proposed by Oman and Hagemeister (1992). It is evaluated by combining four metrics: it is a weighted composition of Cyclomatic Complexity, average Lines of Code and computational complexity based on the Halstead Volume function, together with the proportion of comment lines.

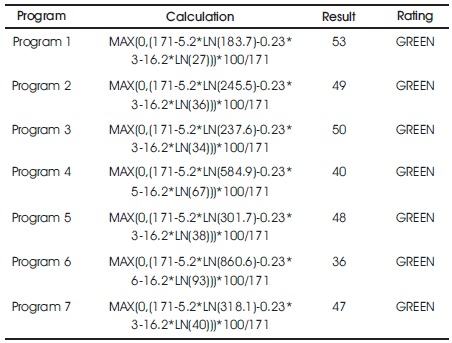

The MI is calculated with the following formula, which is derived from the original one:

$$MI^{*} = \max\left(0,\; 171 - 5.2\ln(\text{AverageV}) - 0.23\,\text{AverageMVG} - 16.2\ln(\text{AverageLOC})\right) \times \frac{100}{171}$$
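The derived formula can be evaluated directly from the three averaged metric values. The sketch below is a straightforward transcription, assuming the averages have already been obtained from the tools (LN is the natural logarithm, `Math.log` in Java); the class name and the example values are hypothetical.

```java
final class MaintainabilityIndex {

    /**
     * MI* = max(0, 171 - 5.2 ln(AverageV) - 0.23 AverageMVG - 16.2 ln(AverageLOC)) * 100 / 171.
     * Inputs are the averaged metric values reported by the tools (must be positive for the logs).
     */
    static double mi(double avgVolume, double avgCyclomatic, double avgLoc) {
        double raw = 171
                - 5.2 * Math.log(avgVolume)
                - 0.23 * avgCyclomatic
                - 16.2 * Math.log(avgLoc);
        return Math.max(0, raw) * 100 / 171; // clamp at 0, then rescale to the 0..100 range
    }

    public static void main(String[] args) {
        // Hypothetical averages: V = 100, MVG = 3, LOC = 10.
        System.out.println(mi(100, 3, 10)); // about 63.78
    }
}
```

Because of the logarithms, doubling the average volume or the average LOC lowers the index by a fixed amount rather than halving it, so the index degrades gradually as programs grow.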

The Maintainability Index takes a value between 0 and 100; the higher the value, the easier the code is to maintain. There are three rating levels for this index:

GREEN: This is a rating between 20 and 100, and indicates code that should be maintainable.

YELLOW: This is a rating between 10 and 19, and indicates the code may be moderately difficult to maintain.

RED: This is a rating between 0 and 9, and indicates the code may be very difficult to maintain.
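The three rating levels above can be expressed as a simple threshold function. Since the levels are stated as integer ranges, the sketch assumes that a non-integer MI such as 19.5 falls into the lower band (YELLOW); the class and enum names are hypothetical.

```java
final class MaintainabilityRating {

    enum Rating { GREEN, YELLOW, RED }

    /** Maps an MI value in the range 0..100 to the three rating levels. */
    static Rating rate(double mi) {
        if (mi >= 20) return Rating.GREEN;  // 20..100: should be maintainable
        if (mi >= 10) return Rating.YELLOW; // 10..19: may be moderately difficult to maintain
        return Rating.RED;                  // 0..9: may be very difficult to maintain
    }

    public static void main(String[] args) {
        for (double mi : new double[] {63.8, 15.0, 4.2}) {
            System.out.println(mi + " -> " + rate(mi));
        }
    }
}
```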

Table 5 lists the Maintainability Index (MI) calculated for all seven programs.

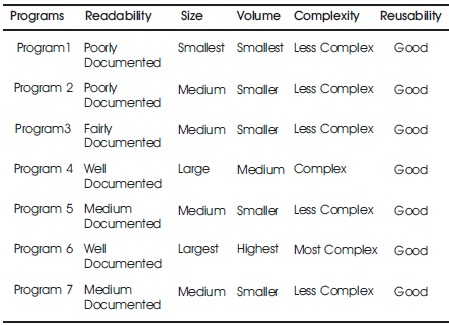

Table 4. Program Attributes

Table 5. Maintainability Index Calculation

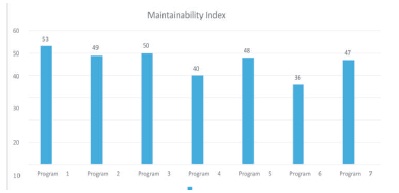

Figure 5 visualizes the Maintainability Index of all seven programs.

Figure 5. Maintainability Index of all the Seven Programs

According to Figure 5, Program 1 (Linear Search) has the highest maintainability among the seven programs, and Program 6 (Merge Sort) is the most difficult to maintain. The values in Table 4 agree with those in Table 5: Program 6 (Merge Sort) has the most complex source code and the largest volume and hence the lowest Maintainability Index, whereas Program 1 (Linear Search) is the easiest to program, the least complex and the smallest in volume, and scores the highest Maintainability Index. Hence, the algorithmic traits of the programs are consistent with the resulting metric attributes, indicating that the programs were successfully assessed against the mentioned measures.

Although the properties estimated in Section 7 may not characterize quality directly, they can be used to determine parameters that indicate the potential changes to be made in the end product. Some of the quality factors indicated by these code characteristics are summarized below.

LOC is a size-oriented metric that focuses on the size of the software and is usually expressed in thousands of lines of code (KLOC). Normalized measures based on LOC, such as errors per KLOC, defects per KLOC or cost per KLOC, support important decisions and help assess correctness, which is a main component of software quality.
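Normalizing by KLOC is simple arithmetic: the measured quantity is divided by the size in thousands of lines. The sketch below, with a hypothetical class name and hypothetical figures, works for errors, defects or cost alike; it multiplies by 1000 before dividing so that exact cases stay exact in floating point.

```java
final class DefectDensity {

    /** amount per KLOC = amount / (LOC / 1000), e.g. defects, errors or cost. */
    static double perKloc(double amount, int linesOfCode) {
        if (linesOfCode <= 0) throw new IllegalArgumentException("LOC must be positive");
        return amount * 1000.0 / linesOfCode;
    }

    public static void main(String[] args) {
        // Hypothetical project: 12 defects found in 4,800 lines of code.
        System.out.println(perKloc(12, 4_800)); // 2.5 defects per KLOC
    }
}
```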

Using the complexity metric (MVG), developers and testers can determine the independent execution paths and ensure that each of them has been tested at least once, which helps ensure that the system is thoroughly tested. It is also used to evaluate the risk associated with an application or program, and thus its fault-proneness, which is an element of software quality.

The NOM metric helps detect problems easily. It is useful when developers reuse prewritten code, which saves cost and makes further software development more manageable; it therefore extends to reusability. Both modularity and reusability contribute to software quality.

The study performed in this paper should be replicated with other software programs, programming languages and software tools to draw more general conclusions about the ability to test programs for different metrics and to reflect the evolution of the quality of software systems. Some limitations may affect the results of the study or limit their interpretation and generalization. The results obtained depend on the type of programs, the programming language and the software tools used, and we do not claim that they can be generalized. It is also possible that factors such as the development style of the developers who wrote the code affect the results or produce different results for specific programs.

In this paper, we performed an empirical analysis by assessing six software metrics on a set of two searching and five sorting techniques using three software analysis tools. This was followed by deriving the Maintainability Index from its constituent metrics and briefly assessing other quality factors that can be inferred. Software analysis tools are affordable instruments available to decision makers, enabling them to act decisively in the event of an impending software crisis by providing early indicators of risk-prone issues. However, project leaders should design their own customized metrics programme focused on the organization's planned goals, priorities, customers' specific requirements and objectives in order to make full use of their resources. Our empirical results add to earlier empirical research on software measurement, supporting the relationship between software metrics and the quality characteristics derived from them, and highlighting the strengths and weaknesses to consider when selecting among the many software tools available. Further investigations are, however, needed to draw more general conclusions. Like other studies, our investigation has some limitations: it covers only a subset of software metrics and tools. It should be extended to a larger number of larger software programs to assess many more aspects of implementation.