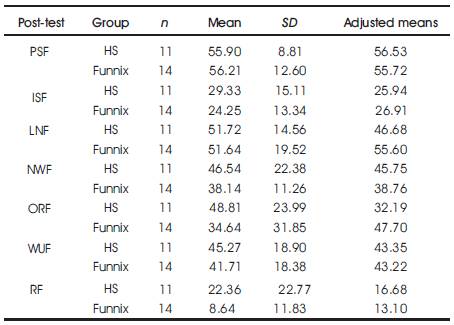

Table 1. Means, Standard Deviation, and Adjusted Means on the Post-tests for the Two Groups

The ability to read is essential to school-based learning and skilled responding in an information-rich society. Unfortunately, many students in today's schools do not become skilled readers. Many reading researchers (Blachman, 1996, 1997; Felton, 1993; Fletcher & Lyon, 1998; Torgesen, 1997) agree that the vast majority of problems experienced by early readers can be prevented through appropriate, explicit, and comprehensive early instruction. Previous research also indicates that parents/families can have a positive influence on children's reading abilities. However, parents may not have the skills to teach reading in an explicit and systematic manner. It is here that computer-based reading programs, which are systematic and explicit in their instruction, hold great promise.

This preliminary investigation examined the effects of two parent-implemented computer-based reading programs (Funnix and Headsprout) on the reading skills of 25 students at-risk for reading failure. All students were pre- and post-tested on the Dynamic Indicators of Basic Early Literacy Skills. All parents were provided one-on-one training by the researchers. Treatment fidelity data were collected. Further, a questionnaire was administered to parents and students to elicit their perceptions of the effectiveness and desirability of the programs. The results indicated that the computer-based programs were effective in increasing certain basic early literacy skills of students at-risk for reading failure. A description of the computer programs, the results (effect sizes and statistically significant results), the implications, and the limitations of the study are discussed.

Recent national assessments of students' progress in the US indicate that many students in today's schools do not develop basic reading skills. For a majority of these students, reading difficulties begin early, when they are first introduced to reading. Snow, Burns, and Griffin (1998) identify three primary obstacles that hinder the development of reading skills. These are (i) difficulty with understanding and applying the alphabetic principle, (ii) failure to transfer comprehension skills of spoken language to reading, and (iii) an initial absence or eventual loss of motivation to read.

The above obstacles to reading can be removed through effective reading instruction. One way to overcome the existing barriers in meeting students' needs is to supplement classroom instruction with computer-based reading programs that provide systematic and explicit reading instruction in the five basic reading skill areas. These five areas, as identified by the National Reading Panel, are (i) phonemic awareness, (ii) phonics, (iii) fluency, (iv) vocabulary, and (v) comprehension (NRP, 2000). Computer-based programs may be particularly useful in teaching basic reading skills such as phonemic awareness and the alphabetic principle (phonics). According to the National Reading Panel, many teachers do not know how to teach phonemic awareness and phonics skills in an explicit and systematic way (NRP, 2000). It is here that computer-based reading programs, which are systematically and explicitly designed, hold great promise.

Previous research also indicates that parents/families can positively impact their child's reading abilities (Baker, Serpell, & Sonnenschein, 1995; Burns & Casbergue, 1992; Clay, 1975; Dever, 2001; Jongsma, 2001; Jordan, Snow, & Porche, 2000; Snow & Tabors, 1996; Taylor, 1983; Taylor & Dorsey-Gaines, 1988). However, a majority of parents do not have the knowledge and skills to teach reading in an explicit and systematic manner. One way to overcome this barrier is to use computer-based reading programs. Well-designed computer-based reading programs can assist parents in meeting the reading needs of their children.

In spite of the growing number of computer-based reading programs on the market, very few independent investigations have examined the feasibility, effectiveness, and efficacy of computer-based reading programs (NRP, 2000). The current study is part of a series of independent studies undertaken simultaneously in the US and funded by the Office of Special Education Programs at the US Department of Education to address the limited literature on the effectiveness of computer-based reading programs. The participants in each of the studies were independently selected, and one of the two parental studies conducted in Utah is reported here.

The purpose of the study was to evaluate two computer-based reading programs: Funnix and Headsprout. The primary purpose was to evaluate the effects of the parent-implemented Funnix and Headsprout reading programs on the acquisition of basic early literacy skills by students with reading difficulties. Further, (a) parental perceptions of the effectiveness, ease of implementation, and desirability of the programs and (b) students' perceptions of the effectiveness and desirability of the programs were investigated.

Participants for the study consisted of students with reading difficulties in grades K-2 and their parents. Participants were selected with the assistance of classroom teachers working in grades K, 1, and 2 in Northern Utah and Southern Idaho. The teachers were asked (i) to identify students scoring below the 15th percentile on a local, state, or national test of reading achievement and (ii) to send research participation packets (provided by the authors) home with students. A total of 34 parents expressed interest and initially participated in the study.

Participants in the study resided in suburban/rural communities in the states of Idaho and Utah. Students from both communities were assigned to both the Funnix and Headsprout programs. At the beginning of the study, project personnel contacted parents and visited the homes of the participants to evaluate their computer systems and ensure that the basic system requirements for the Headsprout and Funnix programs were met. Parents in both groups implemented the computer-based programs at home on their personal computers during the summer break.

At the beginning of the study and after providing consent, parents completed a demographic questionnaire that asked for (a) family demographics, (b) interventions or services received by their child during the school year and during the summer, (c) information on their home computer (i.e., operating system, availability of internet, etc.), and (d) parents' ability to implement the program for eight weeks during the summer break (questions covered their travel plans, etc.). After the consent form and the demographic questionnaire were obtained, the students were assigned to one of the two groups. The authors could not randomly assign the parent-child dyads in this study because the Headsprout program required an internet connection to access the program lessons and not all parents had internet connections. Parents who had an internet connection were assigned to either the Headsprout or the Funnix group, whereas parents who did not have an internet connection were assigned to the Funnix group. Using the demographic information, however, the project personnel made sure that students from the same schools and parents with similar demographics were assigned to both groups.

Two computer-based reading programs (Headsprout and Funnix) were selected for this study. The criteria used to identify computer-based reading programs were that (a) the program addressed the instructional targets identified by the National Reading Panel report (i.e., phonemic awareness, phonics, fluency, vocabulary, and reading comprehension); (b) the curriculum targeted in the programs was appropriate for kindergarten to second-grade students; and (c) the programs required similar lengths of time to complete a computer-based lesson/episode (i.e., 25-30 minutes).

Funnix is a CD-based Direct Instruction (DI) reading program and consists of two levels: Funnix Beginning Reading and Funnix 2. Funnix Beginning Reading consists of 120 lessons on two CDs, a parent CD with an instructional guide on how to use the program, and a consumable workbook for the student to complete after each lesson. Funnix 2 contains lessons on two CDs and a reading book. Funnix has a placement test to place students at different levels in the program (see http://www.funnix.com/ for more details). Funnix has a built-in DI curriculum and a narrator who models the skills. It requires an adult to navigate the program and make appropriate choices to deliver the instruction based on the student's responses. For example, an adult can (a) briefly stop the instructions delivered by the narrator, (b) repeat the instructions of the narrator, and (c) repeat the exercises if the student is making multiple errors. The Funnix parent training CD provides instructions for parents on correct sound pronunciations, when to repeat exercises, how to navigate through lessons and the exercises in a lesson, and how to praise students for correct responses. The program requires students/children to produce oral responses to stimuli presented on screen, complete workbook exercises, or read from a hardback reader.

Headsprout is a web-based reading program consisting of 80 lessons/episodes for students in grades K-2. Headsprout does not use a placement test (see http://www.headsprout.com/school/ for more details). Hence, all students start on episode/lesson 1. Headsprout requires that the student navigate through the program by correctly responding to the stimuli presented on the screen. It also has supplemental material, such as the Sprout Stories that students read after certain lessons/episodes. The program directly teaches/models the skills and requires students to demonstrate skill acquisition by manipulating and clicking the computer mouse. Headsprout recommends that parents/adults supervise the students to ensure that the student produces correct oral responses and to provide corrective feedback for reading errors while the student reads the Sprout Stories.

Students' reading skills were measured using the Dynamic Indicators of Basic Early Literacy Skills (DIBELS) progress monitoring probes. DIBELS consists of seven subtests: Initial Sound Fluency (ISF), Letter Naming Fluency (LNF), Word Use Fluency (WUF), Phoneme Segmentation Fluency (PSF), Nonsense Word Fluency (NWF), Retell Fluency (RF), and Oral Reading Fluency (ORF). DIBELS probes were individually administered in one-minute time intervals (Good, Simmons, & Kameenui, 2001). For purposes of this study, progress monitoring probe 19 was used as the pre-test and progress monitoring probe 20 was used as the post-test for all subtests except LNF. For LNF, the K-3 benchmark assessment was used as the pre-test and the first benchmark at grade 1 was used as the post-test, because progress monitoring probes are not available for this subtest.

At the completion of the study, a social validity questionnaire was administered to parents and students to elicit their perceptions of the computer-based programs. Parents were asked about their previous experiences in delivering reading instruction, the ease of navigating the computer-based reading program (CBRP), the perceived effectiveness of the CBRP, whether they would recommend the CBRP to other parents, and whether they would use the CBRP in the future. Students/children were asked about their general computer use, their satisfaction with the assigned CBRP, their perception of specific elements (e.g., stories, graphics, activities) of the CBRP, and the perceived effectiveness of the CBRP in teaching them how to read.

Project personnel met with each parent to train the parent on how to use the CBRP. Training consisted of (a) reviewing program-related materials, (b) modeling overall use of the programs, (c) discussing child and parental roles during instructional sessions, (d) reviewing and practicing procedures for properly correcting reading errors, (e) monitoring parental use of the programs, and (f) discussing weekly progress reporting procedures. After the orientation and training meeting, parents were asked to deliver reading instruction five days a week for eight weeks. Each parent was also observed two times while implementing the CBRP. Further, each parent was provided with self-addressed envelopes and progress sheets and was instructed to send the daily log sheets to the project personnel on a weekly basis.

At the end of the initial home visit, Dynamic Indicators of Basic Early Literacy Skills (DIBELS) progress monitoring probes (pre-tests) were administered to the student in a quiet location. The entire session (training and pre-test) lasted approximately 1½ hours, with one hour for the overview and training of parent and approximately 30 minutes for the administration of DIBELS measures.

After eight weeks of CBRP implementation, the students were tested again at their homes using DIBELS. At this time, each parent was asked to complete the social validity questionnaire independently, and the students were asked the questions on the social validity forms by the project personnel. Students answered the questions orally, and their answers were recorded on the form by the project personnel. At the end of the study, each parent received a $50 honorarium for his/her participation.

Four project coordinators were trained using DIBELS materials and administered assessments with the second author until they demonstrated the probe administration skills specified in the DIBELS training materials. During the course of the study, inter-observer agreement (IOA) data were collected on 20% of probe administrations and on at least one DIBELS pre-test and one post-test for each coordinator. Inter-observer agreement for the dependent measures was calculated using item-by-item agreement. The average IOA ranged from 80.46% (on the PSF) to 99.85% (on the LNF) on the pre-tests and from 84.43% (on the RF) to 99.50% (on the LNF) on the post-tests.
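The item-by-item agreement calculation can be illustrated with a minimal sketch. It assumes the common definition of item-by-item agreement (the number of items scored identically by two scorers, divided by the total number of items, multiplied by 100). The function name and the sample scores are hypothetical and are not data from this study.

```python
def item_by_item_ioa(scorer_a, scorer_b):
    """Percent agreement between two scorers, compared item by item.

    Assumes both scorers rated the same items in the same order.
    """
    if len(scorer_a) != len(scorer_b) or not scorer_a:
        raise ValueError("Both scorers must rate the same non-empty set of items.")
    agreements = sum(1 for a, b in zip(scorer_a, scorer_b) if a == b)
    return 100.0 * agreements / len(scorer_a)


# Hypothetical item scores (1 = correct, 0 = incorrect) from two scorers.
coordinator = [1, 1, 0, 1, 0]
second_scorer = [1, 0, 0, 1, 0]
print(item_by_item_ioa(coordinator, second_scorer))  # 80.0
```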

The initial sample consisted of 34 parent-child dyads. However, nine parent-child dyads did not complete the minimum of 40 lessons or dropped out of the study. Of the 25 remaining participants, 11 were in the Headsprout group and 14 were in the Funnix group. The Headsprout group consisted of three students completing 1st grade and eight students completing 2nd grade. The Funnix group consisted of six students completing kindergarten, four students completing 1st grade, and four students completing 2nd grade. All students had difficulties with reading and were functioning below their grade levels on the reading assessments.

To evaluate the effects of the programs on the basic early reading skills of the students, two types of analysis were undertaken. First, a one-way analysis of covariance was used to compare the groups; second, paired-samples t tests were used to measure the pre-post gains on the DIBELS probes for each group. The one-way analysis of covariance was conducted using the computer-based program as the independent variable, the post-tests as the dependent variable, and the pre-tests as the covariate. All null hypotheses were tested at the .05 significance level (two-tailed tests).
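The structure of a one-way analysis of covariance with a single covariate can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis: it assumes equal regression slopes across groups, uses only the Python standard library, and does not compute a p value (which would require the F distribution). The function name `one_way_ancova` and the scores are hypothetical.

```python
from statistics import mean


def one_way_ancova(groups):
    """One-way ANCOVA with one covariate.

    `groups` maps a group label to (pre_scores, post_scores).
    Returns (F, (df1, df2), adjusted post-test means).
    """
    all_pre = [x for pre, _ in groups.values() for x in pre]
    all_post = [y for _, post in groups.values() for y in post]
    n, k = len(all_pre), len(groups)
    grand_pre, grand_post = mean(all_pre), mean(all_post)

    # Total sums of squares and cross-products (reduced model: post ~ pre).
    sxx_t = sum((x - grand_pre) ** 2 for x in all_pre)
    sxy_t = sum((x - grand_pre) * (y - grand_post) for x, y in zip(all_pre, all_post))
    syy_t = sum((y - grand_post) ** 2 for y in all_post)

    # Pooled within-group sums (full model: post ~ pre + group).
    sxx_w = sxy_w = syy_w = 0.0
    for pre, post in groups.values():
        m_pre, m_post = mean(pre), mean(post)
        sxx_w += sum((x - m_pre) ** 2 for x in pre)
        sxy_w += sum((x - m_pre) * (y - m_post) for x, y in zip(pre, post))
        syy_w += sum((y - m_post) ** 2 for y in post)

    slope = sxy_w / sxx_w                      # common within-group slope
    sse_full = syy_w - sxy_w ** 2 / sxx_w      # error after group and covariate
    sse_reduced = syy_t - sxy_t ** 2 / sxx_t   # error after covariate only
    df1, df2 = k - 1, n - k - 1
    f_value = ((sse_reduced - sse_full) / df1) / (sse_full / df2)

    # Post-test means adjusted to the grand mean of the pre-test.
    adjusted = {
        label: mean(post) - slope * (mean(pre) - grand_pre)
        for label, (pre, post) in groups.items()
    }
    return f_value, (df1, df2), adjusted


# Hypothetical scores, not data from this study.
example = {
    "A": ([1, 2, 3, 4], [2, 3, 5, 4]),
    "B": ([1, 2, 3, 4], [5, 6, 6, 9]),
}
f_value, dfs, adjusted = one_way_ancova(example)
print(f"F{dfs} = {f_value:.2f}", adjusted)
```

With two groups and 25 students, the error degrees of freedom in this formulation are 25 - 2 - 1 = 22, which agrees with the degrees of freedom reported for the ANCOVA results in this paper.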

The one-way analysis of covariance (ANCOVA) was conducted for the seven DIBELS measures, with the pre-test as the covariate. The results indicated that the ANCOVA was significant at the .05 level for the LNF (F(1, 22) = 5.99, p = .023, partial η² = .21) and the ORF (F(1, 22) = 16.85, p = .001, partial η² = .42). The estimated marginal mean on the LNF measure was 55.6 for the Funnix group and 46.68 for the Headsprout group. The estimated marginal mean on the ORF measure was 47.70 for the Funnix group and 32.19 for the Headsprout group. Means on the post-tests, standard deviations, and estimated marginal means are provided in Table 1.

Table 1. Means, Standard Deviation, and Adjusted Means on the Post-tests for the Two Groups

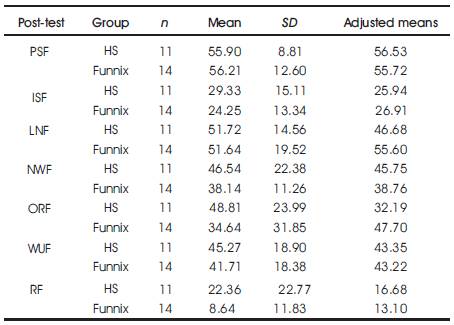
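For readers who wish to relate an F statistic to its effect size, partial eta squared can be recovered from F and its degrees of freedom with the standard identity partial η² = (F × df1) / (F × df1 + df2). The sketch below applies this identity to the LNF result reported above; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# LNF result reported in the text: F(1, 22) = 5.99.
print(f"{partial_eta_squared(5.99, 1, 22):.2f}")  # 0.21
```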

Paired-samples t tests were also conducted for each group to examine the pre-post gains on the basic literacy skills after eight weeks of intervention (Table 2). For the Headsprout group, the post-test means were greater than the pre-test means on three measures: PSF, WUF, and RF. These differences were not statistically significant at the .05 level. As the sample size was small, effect sizes were calculated. The standardized effect size index, d, was small (.44) for the PSF measure and medium (.60) for the WUF measure. The results from the paired-samples t tests for the Funnix group indicated that the post-test means were higher on four measures: LNF, PSF, WUF, and ORF. The pre-post differences were statistically significant at the .05 level only for the ORF measure (p = .004). Effect sizes were again calculated because of the small sample size. The standardized effect size index, d, was small for the PSF measure (.45) and the WUF measure (.42) and large for the ORF measure (.94).

Table 2. Means and Standard Deviations on the Pre- and Post-tests for the Two Groups

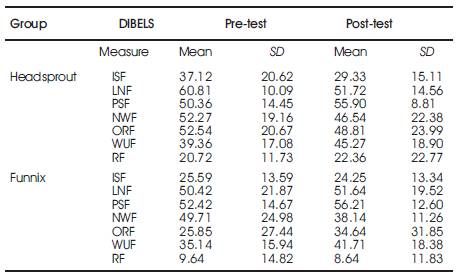
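The paired-samples t statistic and a standardized effect size can be sketched as follows. The paper does not state which formula was used for d; this sketch assumes one common definition for paired designs, the mean pre-post difference divided by the standard deviation of the differences. The function name and the scores are hypothetical and are not data from this study.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_and_d(pre, post):
    """Paired-samples t statistic, its degrees of freedom, and effect size d.

    Assumes d = mean difference / SD of the differences (an assumption;
    other definitions of d for paired data exist).
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("Need matched pre and post scores for at least two students.")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)              # sample SD of the differences
    t_value = mean_diff / (sd_diff / sqrt(n))
    d = mean_diff / sd_diff
    return t_value, n - 1, d


# Hypothetical pre-test and post-test scores for four students.
t_value, df, d = paired_t_and_d([10, 12, 14, 16], [12, 13, 17, 18])
print(f"t({df}) = {t_value:.2f}, d = {d:.2f}")
```

Under this definition, d equals t divided by the square root of the number of pairs, so it does not grow with sample size the way t does. This is one reason effect sizes are informative when samples are small.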

Approximately 50% of the parents in the Funnix group (n = 14) indicated that they had previous experience using computer-based software, and 64% indicated that they had previous experience with teaching reading. Regarding the Funnix program, (a) all (100%) parents indicated that they were comfortable using the program, (b) 93% indicated that the program was useful in teaching reading skills, (c) 100% felt that the overall quality of the program was very good, (d) 100% indicated that they would recommend Funnix to a friend, (e) 93% indicated that they would use Funnix to help another child, and (f) 64% indicated that they would choose Funnix when given a choice of Funnix, another computer program, and a print-based program.

The results from the social validity questionnaire administered to the parents in the Headsprout group (n = 11) indicated that 36% of the parents had previous experience using computer-based software and 73% had previous experience with teaching reading. Regarding the Headsprout program, (a) all parents (100%) indicated that they were comfortable using the program, (b) 82% indicated that the program was useful in teaching reading skills, (c) 73% felt that the overall quality of the program was very good, (d) 91% indicated that they would recommend Headsprout to a friend, (e) 91% indicated that they would use Headsprout to help another child, and (f) 73% indicated that they would choose Headsprout when given a choice of Headsprout, another computer program, and a print-based program (see Table 3).

Table 3. Parental Perceptions of Computer-based Reading Programs

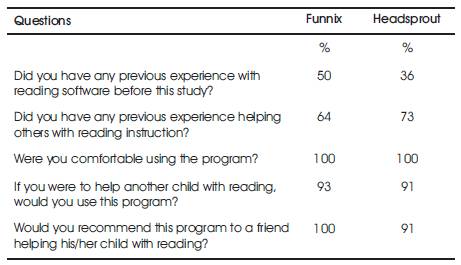

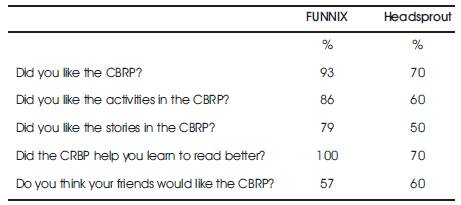

The results from the social validity questionnaire administered to the students in the Funnix group indicated that (a) 93% of the respondents liked the Funnix program, (b) 86% liked the sounding-out activities, (c) 79% liked the stories, (d) 100% indicated that Funnix helped them learn to read, and (e) 57% felt that their friends would like the Funnix program. The results from the social validity questionnaire administered to the students in the Headsprout group indicated that (a) 70% of the respondents liked the Headsprout program, (b) 60% liked the sounding-out activities, (c) 50% liked the stories, (d) 70% indicated that Headsprout helped them learn to read, and (e) 60% felt that their friends would like the Headsprout program (Table 4).

Table 4. Students' Perceptions of Computer-based Reading Programs

To summarize, this preliminary investigation examined the effects of two computer-based reading programs on the basic early literacy skills of students at-risk for reading failure. The ANCOVA results indicated that there were statistically significant differences between the two groups on two measures: LNF and ORF. On both measures, the students in the Funnix group had higher scores than the students in the Headsprout group. As the students were not randomly assigned and there was differential attrition of the participants (six students in the Headsprout group vs. three in the Funnix group), we examined the pre-post gains on the DIBELS measures for each group. The pre-post analysis indicated that students in both groups had higher scores (with small effect sizes) on the PSF measure, a measure of phonemic awareness and a crucial component of the reading process. Further, students in the Headsprout group showed medium gains (medium effect size) on the WUF measure, a measure of vocabulary, which is one of the five critical skill areas identified by the National Reading Panel. Similarly, students in the Funnix group showed small gains (small effect size) on the WUF measure and large gains (large effect size) on the ORF measure, a measure of fluent reading ability.

A majority of the parents who participated in the study reported that they had previous experience with reading instruction and all the parents indicated that they were comfortable using the CBRPs. Almost all the parents, in both groups, indicated that they would use the CBRP to help another child and would recommend it to a friend. However, a higher percentage of students in the Funnix group indicated that they liked the reading program and all the students in the Funnix group indicated that the program helped them read better. Similarly, a higher percentage of students in the Funnix group indicated that they liked the activities and stories in the Funnix program than the students in the Headsprout group.

A significant finding of this study is that both programs facilitated gains on the phonemic awareness measure, a crucial component of the reading process, after eight weeks of intervention. It is interesting to note that the Headsprout group showed gains on only two of the measures (as evidenced by effect size measure) and the Funnix group showed gains across three measures with a large effect size on the ORF measure. This could be due to many factors. For example, given that all students in the Headsprout group start on lesson 1 and the students in the Funnix group are placed by their performance on the placement test, we hypothesize that the skills targeted by the program (to be more specific, the 40 episodes or lessons) could have been one significant factor for the differential results.

Almost all the parents and a majority of the children/students indicated that they liked the programs they had used. We asked both direct and indirect questions to elicit participants' opinions about the programs. For example, we asked the parents whether they would recommend the program to a friend, whether the program was a good program to teach reading, and whether they would use it to help another child. We were not surprised by the parents' responses: we think that parents who were interested participated in the study and that parents who did not like the programs may have dropped out (nine dropped out of the study). However, we were surprised by the students' perceptions, especially regarding the likability of the Funnix program. Ninety-three percent of the students indicated that they liked the Funnix program, but only 57% indicated that their friends would like it. This discrepancy might be due to students' perceptions that their friends do not require reading interventions or that the skills targeted by Funnix might be too simple for their friends. Similarly, only 70% of the students in the Headsprout group indicated that they liked the program. We think that this might have to do with the instructional level/skills targeted in the program, as most of the students were in 1st or 2nd grade and Headsprout requires everyone to start at the first lesson. The first lessons target many prerequisite skills that many of the students might have mastered already.

First, the study adds to the small literature base on computer-based reading programs and their effectiveness in promoting basic early literacy skills, especially phonemic awareness. Second, the present study measured the perceptions of parents and students regarding the desirability and effectiveness of the programs. We strongly believe that collecting social validity data on ease of use and desirability is very important for the sustainability of a program in practice. Information on the above components provides both practitioners and parents with a comprehensive picture of the programs and will aid in their selection and use of programs.

There are some limitations to the study, and the results should be interpreted as tentative because of them. These limitations are (a) small sample size and attrition, (b) lack of a control group, and (c) non-random assignment to the groups.

First, the authors tried to recruit as many parents as possible (more than 150 packets were sent to parents of students at-risk for reading failure) and ended up with 25 participants for the final analysis (34 initially). As the sample size for the study was small (fewer than 20 students in each group), the power of the statistical tests to reject the null hypotheses may be weak. The authors tried to increase the power of the statistical tests by testing each null hypothesis at the .05 significance level (two-tailed test) in spite of conducting multiple tests. They also tried to overcome the issue of limited power by computing effect sizes. The effect sizes computed did indicate that the results of this preliminary investigation are promising.

Second, even though the effect sizes indicate skill growth in the area of phonemic awareness, the lack of a control group makes it difficult to understand the relative significance of the results. Further, a control group would have helped in understanding the lower scores on the ISF and the NWF measures. For example, Cooper, Nye, Charlton, Lindsay, and Greathouse (1996) examined the effects of summer vacation on students' standardized achievement scores. Based on their meta-analysis, they concluded that students' scores were lower by at least one month as measured by grade-level equivalents. A control group would have provided data for understanding whether the lower scores were due to summer learning loss (i.e., lack of opportunities to practice) or to other issues (for example, motivation of the students at post-testing or difficulty of the post-test probes).

Third, the authors were not able to randomly assign the students (in a true fashion) because some families lacked internet access. The lack of random assignment makes the current investigation a quasi-experimental one. The authors tried to make sure that students from different elementary schools were assigned to both groups. Similarly, they collected information on family demographics to make sure that parents with higher education were assigned to both groups. They also collected daily logs to monitor that parents in both groups were implementing the lessons every day for 30 minutes. Further, they used the pre-tests as the covariate to control for initial differences among the students. However, due to the lack of random assignment, the results should be considered tentative.

Further research in the area should be conducted to replicate the tentative findings of the study. Such studies should include random assignment of participants, a larger sample, and a control group.

To conclude, this quasi-experimental study demonstrates that the Funnix and Headsprout computer-based reading programs, implemented over an eight-week period (40 sessions), facilitated small gains in the phonemic awareness and vocabulary skills of students at-risk for reading failure. Further, the Funnix group had large gains on the ORF measure. The results of this preliminary study extend the small literature base on computer-based reading programs and suggest that the two computer-based programs can be used as supplemental programs by parents during the summer break to facilitate the phonemic awareness and vocabulary skills of students at-risk for reading failure.