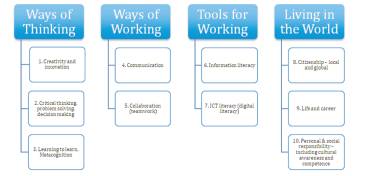

Figure 1. Components of ATC21S “KSAVE” Framework of 21st Century Skills, Encompassing Knowledge, Skills, Attitudes, Values and Ethics

With the onset of Web 2.0 and 3.0 (the social and semantic webs), a new wave of educational technology integration is reaching the classroom. This paper shows how some teachers are increasingly bringing collaboration and shared meaning-making through technology environments into learning environments (Evergreen Education Group, 2014). The purpose is to present a case study of how teachers include student-to-student online collaboration in their Technology Integration Practices (TIP), and of how some research projects are examining useful methodologies for incorporating evaluation, assessment, and reflection into these approaches (Wilson et al., 2012; Wilson, Scalise, & Gochyyev, 2014). Results from the use of TIP collaborative math/science notebooks in the Assessment and Teaching of 21st Century Skills (ATC21S) project are presented. The recommendations aim to help teachers answer key questions about how to assess and evaluate collaborative work online, and how to employ such techniques in the classroom.

For many teachers, the idea of teaching 21st century standards such as digital collaboration is challenging (Partnership for 21st Century Skills & American Association of Colleges of Teacher Education, 2010; Schrum & Levin, 2014). Teachers ask how they should go about helping students build these skills, and wonder what a successful performance looks like in a collaborative digital space. They want to know how such skills can be effectively assessed, and whether and how students should be expected to improve over time. Instructors have a lot of experience recognizing more traditional work products in the classroom, but sometimes don't know if they can effectively recognize increasing student proficiency in an area such as digital collaboration. They haven't seen many examples.

This study examines a single case of collaborative work products from the Assessment and Teaching of 21st Century Skills (ATC21S) project. The case centers on one type of collaborative team notebook activity in a math/science learning environment.

ATC21S is an alliance launched by Cisco, Intel, and Microsoft that includes government, school, and university partners across numerous countries. Its goals include encouraging the development of 21st century learners and enhancing the skills of tomorrow's workforce (Griffin, McGaw, & Care, 2012).

The ATC21S project describes today's international and national educational standards as primarily measuring performance in core subjects such as mathematics, science, and reading. Although some schools include 21st century skills in innovative ways, limited mainstream implementation means that many teachers must develop their own approaches to both teaching and assessment. Like the Partnership for 21st Century Skills (2009, 2010), ATC21S notes that few curriculum and assessment tools are in place to help schools succeed at teaching critical 21st century skills (Griffin et al., 2012; Partnership for 21st Century Skills, 2009; Partnership for 21st Century Skills & American Association of Colleges of Teacher Education, 2010).

For the case study, a digital collaboration in an ATC21S Science and Mathematics activity is examined (Scalise, 2013; Wilson & Scalise, 2012). The case study introduces ways of viewing and interpreting collaborative performances online. It discusses some key attributes of successful digital collaboration that are easy for teachers to recognize in classroom work products.

One key question teachers ponder in digital collaboration is how to effectively evaluate collaborative work in an online setting (McFarlane, 2003). They often feel confident evaluating work products in their own subject matter areas; for instance, they can “grade” and provide feedback on language, math, or science competencies in a given assignment. But what factors might they tap as indicators of growing student proficiency (Wilson et al., 2012) in collaborative online digital literacy more generally? Without such indicators, it can be difficult for teachers to gauge how well they are helping students improve in this type of educational practice.

Digital literacy as a domain encompasses a wide range of subtopics, including learning in networks, information literacy, digital competence, and technological awareness, all of which contribute to learning to learn through the development of enabling skills (Wilson & Scalise, 2012). For the ATC21S project, sets of 21st century skills were identified from an analysis of twelve relevant frameworks drawn from countries and international organizations around the world (Binkley et al., 2012), including the OECD and countries in Europe, North America, Asia, and Australia. The resulting framework, called “KSAVE,” encompasses not only skills but, as the acronym implies, Knowledge (K), Skills (S), Attitudes (A), Values (V), and Ethics (E). KSAVE organizes its ten components into four conceptual groupings; the components have been redrawn in a new diagram, shown in Figure 1.


As shown in Figure 1, KSAVE introduces four overall groupings for the 21st century domain: Ways of Thinking, Ways of Working, Tools for Working, and Living in the World. According to KSAVE, the first grouping, Ways of Thinking, which includes creativity, problem solving, and metacognition, represents a push forward in the conceptualization of thinking. Its components contribute to higher order thinking skills and require cognitive processes such as recall and inference, but they are not always a focus of formal schooling. Giving them more emphasis in schools requires an appreciation of how students employ focus and reflection, as in problem solving, and of how they take on multiple perspectives, value transfer, and apply generative association, as is evident in creativity and innovation.

The other KSAVE groupings are organized in a similar way. For instance, the second category, Ways of Working, groups communication and collaboration skills together as essential components of 21st century work requirements; team contributions, knowledge sharing, and dissemination are increasingly central to how we work. The third category, Tools for Working, includes information literacy and digital literacy in the knowledge economy. Finally, Living in the World focuses on the broader perspectives of personal, social, local, and global citizenship responsibilities and skills.

The ATC21S case study focuses on a digital literacy portion of Tools for Working called learning in networks. The ATC21S digital literacy domain comprises four strands of a learning progression, or set of progressively more proficient levels. The four strands are:

Given the broad reach of these four strands, planning for Technology Integration Practices (TIP) that support collaboration is not the domain of any one subject matter area. Rather, examples of effective technology integration strategies can be found across many content areas, including language arts/foreign languages, mathematics/science, social studies, art/music, physical education/health, and special education.

Thus one way of approaching school use of digital literacy is to consider it a practice, or a way of working across domains through new tools (Scalise, 2014). Friedman (2007) describes such practices as part of a major shift toward technology that educators need to address. He argues that it may be counterproductive to ask students to power down or give up their social media when they enter the school doors. Rather, students should actively engage in digital literacy practices in formal learning, including using the tools, networks, and bodies of expertise available to them virtually (Scalise, 2013). This approach both underscores the development of ICT knowledge and skills as an important practice in schools and allows educators to teach and model appropriate use while supporting subject matter learning.

When considering the relationship of the KSAVE learning in networks objectives to actual classrooms, it is important to consider the technology integration that educators carry out in schools (Roblyer, 2004, 2006). Technology integration in education is often defined simply as using technology as a tool for teaching and learning (Barron, Kemker, Harmes, & Kalaydjian, 2003). Scholars in recent years have described ICT literacy as often best achieved through learning integrated with technology rather than through stand-alone instruction (Ridgway & McCusker, 2003; Somekh & Mavers, 2003). In integration, technology use is embedded in subject matter areas, authentic tasks, real-world problems, or other applications. By contrast, stand-alone instruction can focus more narrowly on learning a tool than on its application. Kozma, Jewitt, and others have called for rethinking both what digital literacy requires and how technology can better contribute to its teaching and assessment (Kozma, 2003; Quellmalz & Kozma, 2003).

For technology integration planning in the classroom, best practices include understanding how, when, and why technology can be infused into education to improve learning outcomes. Poor technology integration planning can involve too much technology in the classroom as well as too little. For instance, using technology for its own sake, rather than strategically to support specific learning outcomes, can be a problem in some schools. Scaffolded hands-on experiences with technology, and teachers modeling technology use for one another, help bring about more effective technology integration planning.

Roblyer describes technology integration planning, or TIP, for teachers as encompassing five phases:

Teachers use digital collaboration in the classroom today in many different ways. One teacher may ask her students to compose in Google Docs, an online document that all of the authors can access so that they can write simultaneously (Wessling, 2012). Students grouped into teams of three or four work on individual laptops while sitting together in table groups. They can talk face-to-face and discuss the developing shared writing in person, but they also compose together on the computer for both draft and final documents. The online environment affords a variety of virtual tools, such as those for annotation, tagging, and in-line comments, as well as for tracking prior drafts and the contribution of each student.

Teachers observe that students will need to manage these skills productively later in life. This will involve collaboration skills such as turn-taking, affirmation, constructive critique, and etiquette. Students can share feedback and ponder solutions together, and teachers can see from the online products which students are contributing and how.

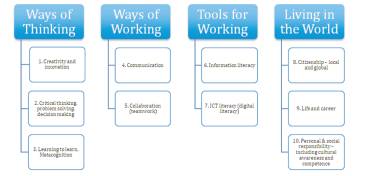

One potential mechanism for the assessment of student ability in the learning network aspect of ICT literacy is to model assessment practice through a set of exemplary classroom materials. An ATC21S module that has been developed for this is called the “Arctic Trek” scenario and is based on the Go North/Polar Husky information website (www.polarhusky.com), a project originally of the University of Minnesota. The Go North website is an online adventure learning project based around arctic environmental expeditions. The website is a learning hub with a broad range of information and many different mechanisms to support networking with students, teachers and experts.

The Arctic Trek scenario, whose opening screen is shown in Figure 2, views social networks through ICT as an aggregation of different tools, resources, and people that together build community in areas of interest. Through a series of screens, the ATC21S demonstration scenario tours the site as a "collaboration contest," or virtual treasure hunt. In this task, small teams of students consider tools and approaches for unraveling clues on the Go North site by touring the scientific and mathematics expeditions of actual scientists. The example activities discussed here are items from the Arctic Trek scenario (Wilson & Scalise, 2014).

Figure 2. Opening screen of the ATC21S Arctic Trek Scenario, a Collaborative Digital Literacy task in a Science and Mathematics Content Area

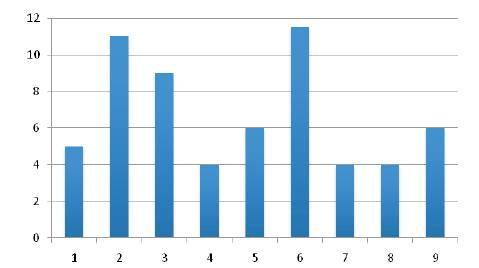

A digital collaborative notebook was employed in different trials of the Arctic Trek task in four countries. Nine team notebooks are examined in this case study, as shown in Figures 3 and 4. The Arctic Trek task includes a collaborative math/science lab “notebook” shared by teams of four students in the classroom. The notebook focuses especially on the portion of the ATC21S KSAVE framework concerned with developing and sustaining Intellectual Capital through Networks (ICN). As a work product, the shared notebook is similar to other collaborative online work products that teachers describe using in the classroom. The notebook allows groups to “construct” a collaboration online. Note that no face-to-face collaboration opportunities are available in the Arctic Trek scenario, so the notebook and its associated tools form the full record of the collaborative work product. Thus a major purpose of the Arctic Trek notebook example was to yield generative information on the fourth, or ICN, strand of the ICT literacy framework: what do the nine teams of students do when faced with opportunities to employ collective intelligence?

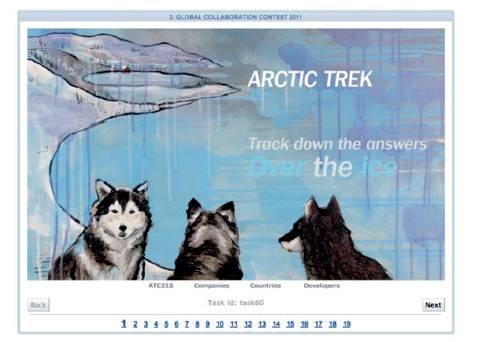

Figure 3. Table showing attributes achieved for the Nine Case Study Notebooks Sampled. Teachers can use simple data displays like this as Rubrics for Student Teams to self-evaluate their Collaboration Efforts

Figure 4. Graph displaying Sum Total of Attributes achieved for each of the Case Study Notebooks Sampled. Visualizations such as these are easy ways to see differences in student work products, and can serve as useful Resources for Teachers working on evaluating their Student Work during Digital Collaboration Efforts

The notebook was either a Microsoft OneNote document or a collaborative Google document online. In the Arctic Trek activity, student teams were given a link and a “secret code” to log in to their shared document with their assigned team members. They then had an “open canvas,” a mostly blank collaborative work space, with some associated tools such as a chat box to use for collaboration and communication.

Containing just a few lines of instruction, the notebook was intentionally left as a simple, unstructured device through which students could collaborate and share work across their team. Because of this unstructured approach, each team could employ the tool in the manner it thought best, and student work across the team could be evaluated for attributes capturing how effectively the team chose to use its opportunity to collaborate. Without substantial scaffolding or support telling them how to go about the collaboration, the teams were left to draw on their own working knowledge to bring the team together and work through the activities of the task.

To develop attributes for interpreting the collaborative performances, a set of Arctic Trek notebooks representing a purposive range of performance was sampled from the ATC21S project. The notebooks were then reviewed using integrative research methods to identify core ideas about the patterns seen in the student work. These included both common ground and divergent characteristics by which teachers could recognize important attributes of ICN performance in the collaborative artifact. Identifying such attributes can help build a bridge between using such approaches in the classroom and evaluating how well students are learning to collaborate and employ intellectual capital effectively.

As shown in Figure 3, twelve attributes were identified. At initial levels, they range from accessing the digital tool used for collaboration and attempting to identify team members to posing initial questions and sharing simple answers. At intermediate levels, students also work on at least some role allocation, planning strategies, and shared thinking about the collaborative process. The highest level performances involve effective evidence sharing, systematic execution, flexible adjustment and analysis during the activities, and attempts to reach a shared understanding of tasks across the team.

The twelve attributes were analyzed by subject matter experts and then sorted, using a cognitive diagnostic technique, from “easier” attributes to “harder” ones based on the number of sampled notebooks in which each attribute appeared, with ties ranked by the subject matter experts. Figure 3 shows this sorting of attributes in the columns, and the notebooks' performances sorted from low to high in the rows. A zero in the table records that the attribute was not present in the notebook; a one records that it was present. The fairly regular diagonal pattern of zeros and ones in the table indicates that teams with lower performing notebooks tended to consistently display the same few attributes, while higher performing teams compiled both more attributes and higher level attributes on the ICN strand. Figure 4 shows sum scores across the attributes for each sampled notebook.
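The ordering step described above can be sketched in a few lines of Python for teachers who keep their tallies in a spreadsheet or script. This is a minimal illustration under stated assumptions, not the ATC21S procedure itself: the notebook IDs, the six attribute labels (a paraphrased subset of the twelve), and the 0/1 entries are hypothetical and are not the Figure 3 data; the `tie_break` dictionary is a hypothetical stand-in for the subject matter experts' judgment on ties; and the error count and coefficient of reproducibility are a common Guttman scalogram check that the case study does not itself report, offered only as one way to quantify how regular the diagonal pattern is.

```python
"""Order a notebook-by-attribute presence table and check its staircase pattern.

A minimal sketch. The notebook IDs, attribute labels, and 0/1 entries used
below are hypothetical illustrations, not the ATC21S Figure 3 data.
"""


def order_table(table, tie_break=None):
    """Return (attribute_order, notebook_order) for a 0/1 presence table.

    table: dict of notebook ID -> dict of attribute -> 1 (present) or 0 (absent).
    tie_break: optional dict of attribute -> rank; a hypothetical stand-in for
        the expert judgment used to order attributes that appear equally often.
    """
    tie_break = tie_break or {}
    attributes = list(next(iter(table.values())))

    def frequency(attr):
        # Number of notebooks in which the attribute is present.
        return sum(row[attr] for row in table.values())

    # "Easier" attributes appear in more notebooks, so sort by descending count.
    attribute_order = sorted(
        attributes, key=lambda a: (-frequency(a), tie_break.get(a, 0))
    )
    # Notebooks run from lowest to highest sum score (a Figure 4 style total).
    notebook_order = sorted(table, key=lambda nb: sum(table[nb].values()))
    return attribute_order, notebook_order


def guttman_errors(table, attribute_order):
    """Count cells that depart from a perfect staircase (Guttman) pattern.

    A notebook with sum score s would ideally show exactly the s easiest
    attributes; every cell that differs from that ideal counts as one error.
    """
    errors = 0
    for row in table.values():
        s = sum(row.values())
        for position, attr in enumerate(attribute_order):
            expected = 1 if position < s else 0
            if row[attr] != expected:
                errors += 1
    return errors


def reproducibility(table, attribute_order):
    """Guttman-style coefficient of reproducibility: 1 - errors / cells."""
    cells = len(table) * len(attribute_order)
    return 1 - guttman_errors(table, attribute_order) / cells


if __name__ == "__main__":
    # Hypothetical attribute labels and notebook rows, for illustration only.
    labels = ["access tool", "identify members", "pose questions",
              "role planning", "evidence sharing", "shared understanding"]
    rows = {
        "NB-A": [1, 1, 0, 0, 0, 0],
        "NB-B": [1, 1, 1, 0, 0, 0],
        "NB-C": [1, 1, 1, 1, 0, 0],
        "NB-D": [1, 1, 1, 0, 1, 0],
        "NB-E": [1, 1, 1, 1, 1, 1],
    }
    table = {nb: dict(zip(labels, values)) for nb, values in rows.items()}

    attrs, notebooks = order_table(
        table, tie_break={"role planning": 0, "evidence sharing": 1}
    )
    for nb in notebooks:
        cells = " ".join(str(table[nb][a]) for a in attrs)
        print(f"{nb}: {cells}  sum={sum(table[nb].values())}")
    print("Guttman errors:", guttman_errors(table, attrs))
    print(f"Reproducibility: {reproducibility(table, attrs):.2f}")
```

With these hypothetical rows, NB-D is the only notebook that breaks the staircase (it shows evidence sharing without role planning), so the script reports 2 Guttman errors and a reproducibility of 0.93. Because Python's `sorted` is stable, notebooks or attributes with equal scores keep their input order, which leaves room for a teacher's own judgment on ties.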

Of course, the small sample size of a case study allows generative work on new constructs, but larger samples and additional methodologies are needed to confirm and more broadly generalize interpretations in the 21st century skill domains (Wilson et al., 2012). This is a limitation of the case study method. For readers interested in generalizing from the case study observations to their own contexts (Scalise, 2010), however, the case study format serves the goal of showing teachers some potentially effective paths to evaluating collaborative tasks in their own classrooms. Teachers often have small local samples from which they can draw a representative range of work, like the ATC21S nine-notebook set. As in a digital case study, teachers can then use their usual review of student work and their TIP Phase 5 approaches to evaluate the patterns in the results. By identifying a small set of helpful, high-utility attributes, they gain mechanisms to guide their own work and to provide feedback and guidance to students that can be used in both current and future evaluations.

Initial proficiency shown by student teams in the notebook case study sample indicated, as described in Figure 3, the ability to access the collaborative space, begin to identify team members, and attempt to pose at least some initial questions and answers to the team. Of the nine notebooks sampled that represented a range of performance, all teams were able to progress this far in their shared efforts.

These indicators are at the ICN1 level of the framework.

Two teams were additionally able to extend to a mid-range of collaboration that involved establishing at least some partial roles or turn-taking, and sharing not only their initial questions and answers but also some of the evidence and evaluation that the team collected or completed during its information foraging in the task. These indicators are at the ICN2 level of the framework.

Three teams in the higher performing range of proficiency on the 12 attributes of Figure 3 show more systematic and complete progress in employing intellectual capital effectively. Their role planning efforts were more thorough and showed evidence of being carried out as agreed, including occasional adjustments during the task. The teams independently developed ways to systematically identify contributions from different team members, and engaged in evidence reconciliation. This included identifying signal versus noise in the information, interrogating data for meaning, and sharing aspects of their mental models during their visualizations of scientific data.

As illustrative examples, three of the notebooks in the case study sample are used to show how teachers can explore some degree of benchmarking for digital collaboration work products. The three notebooks again represent the range of performance shown in Figures 3 and 4, with one selected from each of the levels ICN1 to ICN3.

Figures 5(a)-(c) show excerpts from three different Arctic Trek notebooks that illustrate different ranges of performance seen in the team activities, and how teachers can recognize differences in digital collaboration performance.

Example 1 in Figure 5(a) is an excerpt from Notebook ID 4. This notebook is at the lower end of the collaborative range, but it still shows valuable skills that should be reinforced in student digital collaboration. Teachers can help such students by pointing out what they are already doing effectively while continuing to work with them to build additional understandings. Achieving four of the twelve attributes listed in Figure 3, the team's notebook shows proficiencies at the first level, or ICN1, of the ATC21S digital literacy “intellectual capital” strand. Students correctly access the collaborative tool, make simple efforts to identify their team members (“who is this?”; “yes it is me!!”), and begin to pose some initial but relevant questions and answers to each other (“you go which website??? ”, “i will click 3. now u post ur ans. so I can copy for what they ask.”).

In one part of the task, students are expected to find the colors used to describe the bear population in an online table. Because it requires both identifying signal versus noise in information and interrogating data for meaning, a fully successful performance on the “color” task maps onto the ICN3 level (“Proficient builder”) of the ICN strand (Wilson & Scalise, 2014), although partial credit is also possible. On the color question, the team using Notebook 4 does not review the correct representation, so its color interpretation is far off. Yet the students still show evidence of attempting to share their thinking at a level appropriate to ICN1. They post simple questions about the color chart they believe they have correctly selected, and they puzzle together over some initial answers. However, their postings include little or no evidence to support their thinking, and the team members do not evaluate shared results or attempt strategic thinking across the group to resolve discrepancies (“i saw black and grey… ya maybe but I thought also got white”; no further commentary on this topic follows the discrepant comment).

By the end of the task, the team shows some basic success in accessing and using a digital tool for collaboration, begins to establish an ability to “tag” the identity of a fellow team member, and succeeds in posting some relevant questions and answers digitally. Team members also show some frustration in their attempts to collaborate; for instance, answers are posted without explanation or evidence. “but we must explain,” one student tries to correct the group. “i try my best ok?” another student responds. Another student reverses the temporal order of answer and evidence, telling the team, “my answer is 3. Then i will do my research to accompany my answer.”

In Example 2 in Figure 5(b), from Notebook ID 9, students achieve six of the twelve attributes in Figure 3. This team is emerging into the behaviors of the next level of the framework's intellectual capital strand, ICN2. Compared with Team 4 above, it shows somewhat more knowledge of the mechanics of collecting and assembling data in a digital collaboration, and of knowing when to draw on collective intelligence. These are attributes of ICN2. For instance, the students make some attempts at role planning, which Team 4 did not do (“We need to decide who will do what task,” one student says. “ok do you want to decide,” a teammate replies. Other data collection planning comments include “Can I pick what I want to do?” and “i would like to do the coloring task.”).

However, the team members never systematically agree on and execute the planning decisions as a whole. So while this team is moving beyond ICN1 and into ICN2, its members are just beginning to master the skill set of more strategic collaboration. These students also begin to acknowledge multiple perspectives by sharing evidence, not just answers, across the team during their information foraging. On the color task, one student reports, “i chose four because the graph showed four colors to show the living conditions.” Another student disagrees and advances a claim with concrete details as her evidence for five colors: “They use red for declining populations. They use orange for reduced populations. They use green for not reduced populations. They use light green for stable populations. Then they use yellow for moderate.” A third student checks this answer and then confirms the five-color evidence statement. The team goes on to have a lengthy discussion about other questions and activities in the task.

In Example 3 in Figure 5(c), from Notebook ID 6, students achieve nearly all of the 12 attributes identified in Figure 3 (11.5 of 12). Team behaviors show not only ICN2 traits but a substantial degree of the systematic effort associated with the next level of the framework, ICN3. In their responses to the “color” question, for instance, the team members identify signal versus noise in the information as they arrive at an answer of not only five colors but six, the sixth coding for missing data, a source of discrepancy in the task for advanced teams to ponder. They record their consideration of whether white could be considered a color representing “data deficiency,” in other words the intended missing data, along with the five color codes for the bear populations actually identified in the color chart.

In other answers from the team, not shown in the Figure 5(c) excerpt since the notebook is extensive, the students from this team interrogate data for meaning. They explore how the scientific expedition data shows the species under consideration is “on the general decrease” and “facing high risks of being endangered” but share their thinking to introduce caveats. Team members describe areas they have discovered in the reporting from the scientific expedition that are not consistent with the overall trend, like “the Gulf of Boothia, Southern Beaufort Sea and M'Clintock Channel” where “risks of future decline are low.”

The students on this team also show evidence of sharing aspects of their mental models together. They describe their visualizations of data. To do this, they effectively fit data on bear population graphs with digital tools online and describe how they “control the gradient/frequency” of the display and “the y-intercept” so that they can come up with, record, and share with each other the best fitting curve for the scientific data.

However, even for this team, it is unclear whether, after discrepant opinions are reported, the students always consider whether they need to reframe their thinking. The group does not appear to reach a consensus or otherwise sum up the conclusions of the team as a whole. As they move between questions, they sometimes simply report a set of different answers. This could be due to insufficient time in the 45-minute task to reconcile answers. However, teams who are able to reconcile spontaneously, without prompting or scaffolding, might be considered to have a higher level of team situational awareness and to be more advanced in their ability to negotiate meaning and establish shared understanding during digital collaboration.

Especially in looking ahead to work settings where digital collaboration might be an important skill, the expectation is often to establish a shared product, such as a common recommendation, a report or presentation that synthesizes the knowledge of the group. Going beyond what the students exemplify in ICN3, this might be an important aspect to add to ICN4, as a distinguishing characteristic of the top level of the framework.

For many teachers, the idea of teaching 21st century skills such as digital collaboration is both exciting and challenging. Teachers want to know how to help students build such skills, which many believe will be important in the future work and lives of their students. But what does a successful performance in digital collaboration look like, teachers often ask, and how should they gauge and support learning?

The case study notebooks reveal that a range of performance during student collaboration with technology can be identified by a set of twelve digital collaboration attributes aligned with ICN levels 1-3 of the ATC21S framework. Three anchor examples illustrate this range.

Recommendations include that, by examining the attributes of digital collaboration and using simple rubrics, displays, and tabulations of results such as those shown here, teachers should learn to readily recognize traits for building more successful digital collaboration in their students' work. Opportunities for learning in social networks support the student experience and enhance students' growing capabilities for effective collaboration. Furthermore, as teachers embrace digital tools and collaboration in their classrooms, it is recommended that they extend these initial approaches and customize them for the contexts in which they teach.

Teachers who increasingly want students to work together in digital environments need to provide avenues for success. This includes evidence and feedback on what successful collaboration is expected to look like and to produce. As the digital age advances, teachers increasingly want rigorous learning contexts that also employ digital tools and learning in social networks for their students. Such contexts are expected to contribute to academic success and social/emotional learning, as well as to 21st century skills important for college and career readiness. To support this, the use of assessment and evaluation rubrics for these new products in the classroom is recommended. Rubrics are needed to support both teacher and student understanding: teachers need to know what to look for and what to teach toward in new digital collaborative and socially shared learning activities, and students need to know how learning takes place effectively in new learning environments and academic situations.

By mastering productive collaboration in school projects, students can gain skills that are valuable for a lifetime. Teachers and school systems can recognize and support these skills by identifying important attributes of collaborative problem solving and digital literacy in student work.