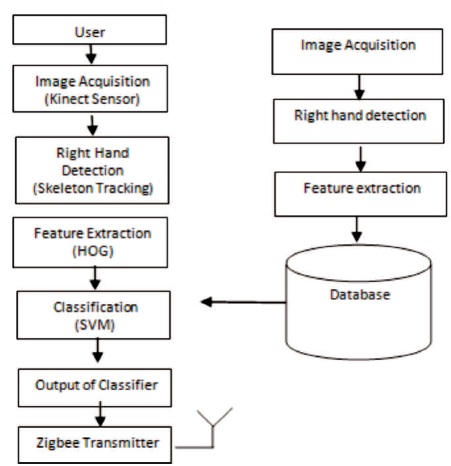

Figure 1. Block Diagram of Processing of Gesture Recognition

Hand controllers and electromechanical devices have long been used to control robots and machines, but they constrain the interaction in several respects. Pattern recognition and gesture recognition are growing fields of research, and hand gesture recognition is particularly significant for Human-Computer Interaction (HCI). In this work, the authors present a novel real-time method for robot control using hand gesture recognition. In human-robot interaction, the user needs to communicate with and control a device in a natural and efficient way. The implementation uses a Kinect sensor and the Matlab environment, and the robot arm is mounted on a Firebird V robot. The authors have implemented a prototype that uses gestures as a means of communicating with the machine: command signals are generated by a gesture control algorithm and then sent to the robot to perform a set of tasks. The Kinect sensor captures the hand gestures, and the system recognizes them and assigns a function to be performed by the robot for each gesture.

The terms gesture and gesture recognition are frequently encountered in Human-Computer Interaction. Gestures are motions of the body, or physical actions, made by the user to convey meaningful information. Gesture recognition is the process by which a gesture made by the user is recognized by the system. Through computer vision, there is great emphasis on using hand gestures as a new input modality in a broad range of applications. With the development of virtual environments, current user-machine interaction tools and methods, including the mouse, joystick, keyboard, and electronic pen, are no longer sufficient.

Gestures are one of the natural ways of Human-Computer Interaction (HCI) and offer intuitive control. Since Microsoft released Kinect (Agrawal & Gupta, 2016; Ansari, 2017), a somatosensory camera, in 2010, a number of methods based on it have been proposed. Earlier gesture systems could not reliably identify and locate hand positions, whereas Kinect makes these problems much easier to solve. Kinect provides three kinds of data: RGB data, depth data, and audio data, and developers can choose the data suited to their own application. There are two main Kinect-based approaches to gesture recognition. The first obtains depth images of the human body and separates the hand region by thresholding the depth values. The second obtains the human skeleton through Kinect skeleton tracking and then uses the skeleton data for further gesture recognition. In this paper, an effective gesture recognition system based on Kinect is proposed.
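The first approach, separating the hand by thresholding depth, can be illustrated with a minimal NumPy sketch. It assumes a depth map in millimetres with 0 marking pixels that have no reading, and a hand depth supplied from elsewhere (for example, from a tracked hand joint). The ±100 mm band and the toy depth values are illustrative assumptions, not parameters from this paper or the cited works.

```python
import numpy as np

def segment_hand(depth_mm, hand_depth_mm, band_mm=100):
    """Boolean mask of pixels within +/- band_mm of the hand depth.

    depth_mm      : 2-D array of depth values in millimetres (0 = no reading)
    hand_depth_mm : assumed depth of the hand, e.g. taken from a tracked joint
    band_mm       : half-width of the depth band (illustrative value)
    """
    # Cast to a signed type so the subtraction cannot wrap around for uint16 input
    depth = np.asarray(depth_mm).astype(np.int32)
    valid = depth > 0
    in_band = np.abs(depth - hand_depth_mm) <= band_mm
    return valid & in_band


# Toy depth map: a hand at about 800 mm in front of the body (about 1100 mm)
# and a wall (about 2500 mm); one pixel has no depth reading.
depth = np.array([
    [2500, 2500, 1100, 1100],
    [2500,  810,  790, 1100],
    [   0,  805, 1100, 1100],
], dtype=np.uint16)

mask = segment_hand(depth, hand_depth_mm=800)
print(int(mask.sum()))  # 3 hand pixels
```

Using a band relative to the hand depth, rather than one fixed global threshold, keeps the body and the background out of the mask as the user moves closer to or farther from the sensor.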

This century has witnessed an unprecedented expansion of robotics. The prospect of most physical and machine work being done by machines has changed the way the world functions. As a high-end technology, robotics has also influenced other areas of human activity: industries have automated their processes, increasing efficiency and reducing time and human labor. The overall aim of this research is the recognition of human body language by computer, thereby linking humans and machines through Human-Machine Interaction. Human-machine interaction can often be improved by using hand gesture recognition instead of relying on standard input devices such as keyboards and mice.

The main goal of this work is to design and develop a hand gesture recognition system using a Kinect sensor, with the following objectives.

In this section, previous work on hand gesture recognition is discussed in detail.

Ma and Peng (2018) presented a gesture recognition method using a Kinect sensor, based on an improved threshold segmentation technique. Their experimental results show that the hand position is obtained quickly, the recognition distance ranges from 0.5 m to 2.0 m, and the average fingertip detection rate reaches 98.5%. However, the gesture recognition distance is limited to 2.0 m.

Chen, Kim, Liang, Zhang, and Yuan (2014) describe a hand gesture recognition method in which the hand region is extracted by background subtraction. The palm and fingers are then segmented so that the fingers can be detected and recognized. Finally, a rule classifier is applied to predict the labels of the hand gestures. A limitation of this paper is that it addresses only gesture recognition and does not report the recognition distance.

Ren, Yuan, Meng, and Zhang (2013) focus on building a robust part-based hand gesture recognition system using the Kinect sensor, which can differentiate hand gestures with slight variations. Once a hand shape is detected, it is represented as a time-series curve. A disadvantage of this approach is that the user has to wear a black belt on the wrist of the gesturing hand.

Agrawal and Gupta (2016) present a novel method to recognize hand gestures for Human-Computer Interaction using computer vision and image processing techniques, with a Senz3D camera used to capture the data. The number of open fingers and their motions are used to perform various functions.

Xu (2017) designed a real-time Human-Computer Interaction system based on hand gestures. The system consists of three components, namely hand detection, gesture recognition, and human-computer interaction, and achieves robust control of mouse and keyboard events with high gesture recognition accuracy. However, because a monocular camera is used, the recognition distance is very short, and a special blue marker has to be placed on the hand.

A review of gesture recognition using Kinect is given in (Jais, Mahayuddin, & Arshad, 2015). The Kinect camera captures the movements or gestures made by the user; the data are then processed and the specified commands are sent to a system, device, or application. The paper presents the Hidden Markov Model and classifiers such as the Support Vector Machine, Decision Tree, and Backpropagation Neural Network for this purpose.

Sankpal, Chawan, Shaikh, and Tisekar (2016) implemented a prototype that uses gestures as a tool for communicating with a machine. The gesture image is captured by the camera on the laptop, and useful information is extracted from it by performing image segmentation and image morphology on the gesture. The resulting gesture command can be used to operate any robotic system, which helps to simplify human tasks. The disadvantages are that the range of the laptop camera is limited and appropriate lighting is required for red color detection.

Wang, Song, Qiao, Zhang, Zhang, and Wang (2013) proposed a method to control the movement of a robot based on Kinect, which provides skeleton data with low computational cost, acceptable performance, and low financial cost. A Khepera III robot is used as the controlled object to verify the effectiveness of the proposed technique, and the corresponding command is sent via Bluetooth to control the robot's direction of movement.

Ingle, Patil, and Patel (2018) presented an interactive adaptive module that moves the robot in various directions. The paper gives a brief description of the Firebird V robot and of the modularity offered by its interchangeable microcontroller adaptor boards, with an emphasis on the basics of the Firebird V robot, its components, the Embedded C programming language, and ICs.

Pagi and Padmajothi (2017) present a system composed of a Kinect sensor and a robotic arm manipulator that can mimic human torso motion in real time. Using the processed positions of the human skeleton joints from the Kinect sensor, commands are generated directly to control the robot arm so that it tracks different joint angles.

This section presents the structure of the proposed framework. The entire system consists of two modules: the first performs gesture recognition for moving the robotic arm, and the second consists of a robotic arm together with the Firebird V robot (Kishore, Rao, Kumar, Kumar, & Kumar, 2018; Ma & Peng, 2018). Each module is composed of a sensor part and a controller part. The sensor part senses and analyzes data from the human body, and the controller collects and processes the data from the sensor. Each module also contains a ZigBee module for wireless transmission and reception of data.

The block diagram of the gesture recognition processing is shown in Figure 1. The human body is detected by the Kinect sensor, and the right hand is tracked through skeleton tracking (Charayaphan & Marble, 1992; Jones & Rehg, 1999). After the right hand is detected, features are extracted using the Histogram of Oriented Gradients (HOG). During training, the extracted HOG features are stored in the database. During testing, HOG features are extracted from the test data, and a Support Vector Machine (SVM) trained on the stored features finds the best-matching class for the test data and generates the command associated with the gesture performed by the user. The generated command is sent through a wireless ZigBee transmitter module.

Figure 1. Block Diagram of Processing of Gesture Recognition

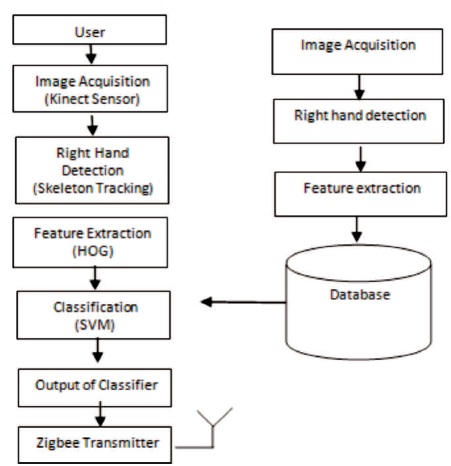

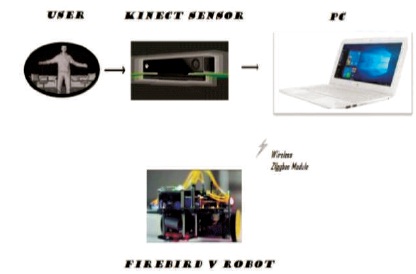

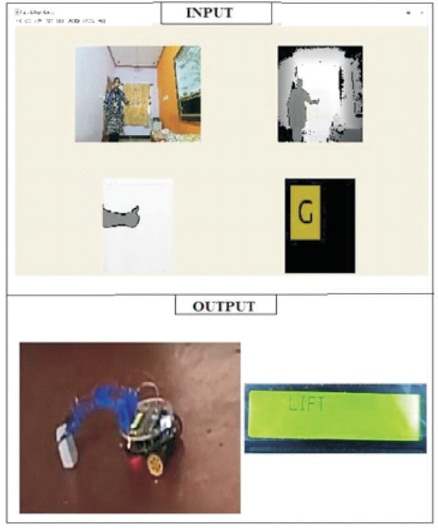

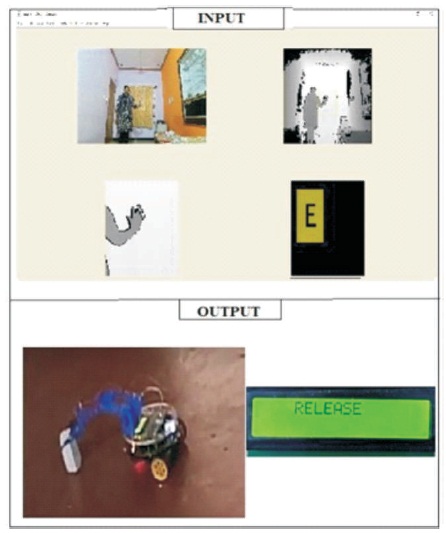

The block diagram of the Firebird V robot and robotic arm system unit is shown in Figure 2. The system is programmed so that whenever the ZigBee receiver receives data, the controller unit sends a signal to the servo motor unit to perform the action corresponding to the classifier output. At the same time, the action is displayed on the LCD. Objects in the surroundings are sensed by an IR sensor; if a nearby object is detected, a stop command is issued to the controller of the Firebird V robot.

Figure 2. Block Diagram of Firebird V Robot and Robotic Arm System Unit

This section describes the framework of hand gesture recognition using the Kinect sensor and the robot unit control system.

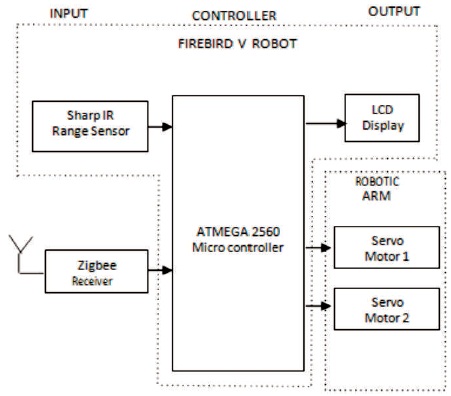

The proposed system enables a human to navigate a robot using hand gestures. An overview of the proposed system is shown in Figure 3. The gesture commands are generated using Matlab as the image processing platform. As soon as a gesture is detected from the Kinect sensor data, the corresponding command is generated for the robot to carry out its function.

Figure 3. Proposed System Overview

The methodology of the proposed system is as follows:

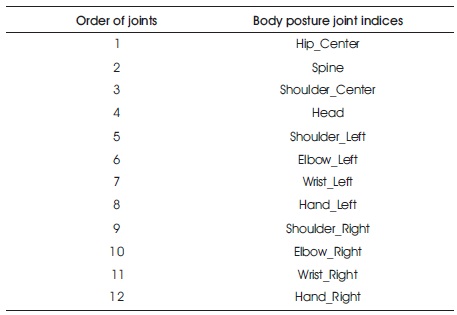

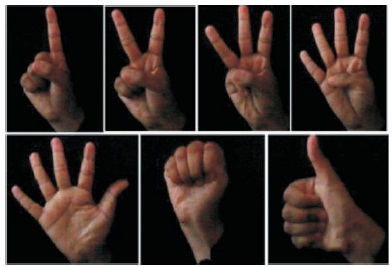

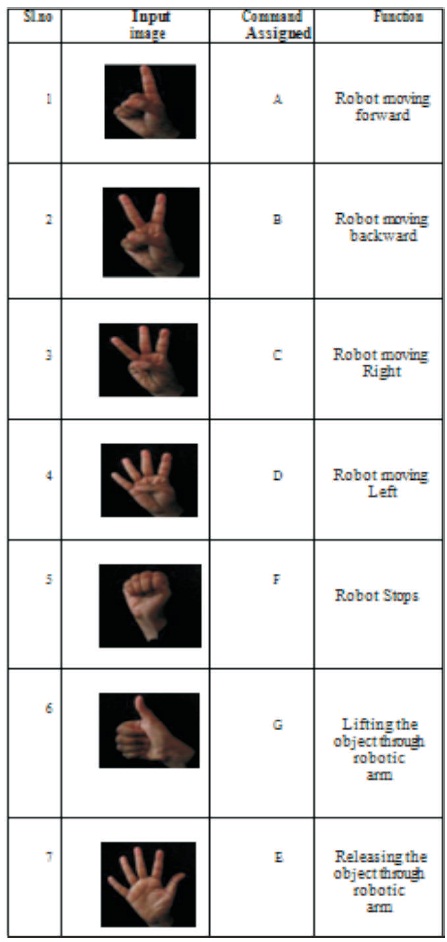

Images are captured with a Kinect sensor (Ansari, 2017) connected to the PC through a USB port. For hand gesture recognition, the user's right hand is tracked using skeleton tracking. The order of the joints returned by the Kinect adaptor in Matlab is listed in Table 1; the right hand is joint 12 in this order, and this index is used for right-hand detection (Ingle et al., 2018). The detected right hand is cropped so that the performed gesture is clearly captured. For training, 100 images are used per gesture across 7 gesture classes; the set of hand gesture images used in the experiments is shown in Figure 4.
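The cropping step can be sketched as follows. The sketch assumes the skeleton joints have already been mapped to pixel coordinates in the colour image and are stored one row per joint in the Matlab joint order (right hand = joint 12, i.e. row 11 in zero-based Python indexing). The 64 × 64 crop size is hypothetical; the paper does not state it.

```python
import numpy as np

RIGHT_HAND = 12  # 1-based index of the right-hand joint in the Kinect joint order

def crop_right_hand(image, joints_px, size=64):
    """Crop a size x size window centred on the right-hand joint.

    image     : H x W or H x W x C array, with H and W at least `size`
    joints_px : N x 2 array of (x, y) pixel coordinates, rows in joint order
    size      : side length of the crop in pixels (hypothetical value)
    Near the border the window is shifted, not shrunk, so every crop has the same size.
    """
    h, w = image.shape[:2]
    x, y = np.round(joints_px[RIGHT_HAND - 1]).astype(int)
    half = size // 2
    # Clamp the top-left corner so the whole window stays inside the image
    x0 = min(max(x - half, 0), w - size)
    y0 = min(max(y - half, 0), h - size)
    return image[y0:y0 + size, x0:x0 + size]


frame = np.zeros((480, 640, 3), dtype=np.uint8)  # one 640 x 480 colour frame
joints = np.zeros((20, 2))                         # e.g. 20 skeleton joints, all at (0, 0) ...
joints[RIGHT_HAND - 1] = (630, 20)                 # ... except the right hand, near the top-right corner
frame[20, 630] = 255                               # mark the hand pixel
patch = crop_right_hand(frame, joints)
print(patch.shape)  # (64, 64, 3)
```

Shifting the window instead of shrinking it keeps every crop the same size, which simplifies the resizing step that follows.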

Table 1. Order of Body Posture Joint Indices in Matlab

Figure 4. The Set of Hand Gesture Images used in the Experiments

Before features are extracted, all images must have the same resolution. Images of different sizes would produce feature vectors of different dimensions, which would be difficult to compare. Therefore, all images are resized to the same number of rows and columns. Resizing also reduces the processing time on the PC and helps to improve the accuracy of the system. The RGB image is then converted to a grayscale image.
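A minimal NumPy sketch of this normalization step follows. The grayscale weights are the ones documented for Matlab's rgb2gray; the nearest-neighbour interpolation and the 64 × 64 target size are assumptions for illustration, since the paper states neither.

```python
import numpy as np

def to_gray(rgb):
    """Weighted sum of R, G and B with the weights documented for Matlab's rgb2gray."""
    rgb = np.asarray(rgb, dtype=float)
    return 0.2989 * rgb[..., 0] + 0.5870 * rgb[..., 1] + 0.1140 * rgb[..., 2]

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize to out_h x out_w (works for 2-D and 3-D arrays)."""
    h, w = img.shape[:2]
    rows = (np.arange(out_h) * h / out_h).astype(int)  # source row for each output row
    cols = (np.arange(out_w) * w / out_w).astype(int)  # source column for each output column
    return img[rows[:, None], cols]


# Two hand crops of different sizes end up on the same 64 x 64 grid
a = to_gray(resize_nearest(np.ones((100, 80, 3)), 64, 64))
b = to_gray(resize_nearest(np.ones((50, 120, 3)), 64, 64))
print(a.shape, b.shape)  # (64, 64) (64, 64)
```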

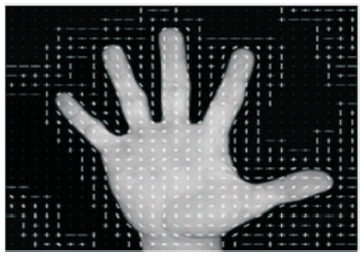

Image feature extraction is the process of computing a set of features, or image characteristics, that describe an image. The HOG feature vector is shown in Figure 5.

Figure 5. Gesture Feature Extraction

Steps followed for HOG:

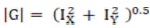

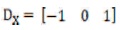

The horizontal and vertical gradients Ix and Iy of the image I, shown in Figure 6, are obtained by a convolution operation as given by equations (3) and (4).

where Dx and Dy are 1D-filter kernels,

Figure 6. Image Divided into Cells and Horizontal and Vertical Gradient Representation

Figure 7. Image Divided into Cells and Horizontal and Vertical Gradient Representation
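The gradient and binning steps can be illustrated with a simplified NumPy sketch. It assumes the common kernels Dx = [-1 0 1] and Dy = Dx^T, unsigned orientations in [0°, 180°), and 9 bins over 8 × 8 pixel cells as used in this work. Each pixel's magnitude goes into a single bin; full HOG implementations typically interpolate votes between neighbouring bins and cells and then normalize over blocks, which this sketch omits.

```python
import numpy as np

def gradients(img):
    """Centred differences: Ix = I(x+1) - I(x-1), Iy = I(y+1) - I(y-1).

    This is correlation with Dx = [-1, 0, 1] and Dy = Dx^T; true convolution
    flips the sign, which does not matter for the unsigned orientations used here.
    Border rows/columns are left at zero for simplicity.
    """
    img = np.asarray(img, dtype=float)
    ix = np.zeros_like(img)
    iy = np.zeros_like(img)
    ix[:, 1:-1] = img[:, 2:] - img[:, :-2]
    iy[1:-1, :] = img[2:, :] - img[:-2, :]
    return ix, iy

def cell_histograms(img, cell=8, bins=9):
    """Per-cell orientation histograms weighted by gradient magnitude."""
    img = np.asarray(img, dtype=float)
    ix, iy = gradients(img)
    mag = np.hypot(ix, iy)
    ang = np.degrees(np.arctan2(iy, ix)) % 180.0            # unsigned, in [0, 180)
    idx = np.minimum((ang // (180.0 / bins)).astype(int), bins - 1)
    n_y, n_x = img.shape[0] // cell, img.shape[1] // cell   # partial cells are dropped
    hist = np.zeros((n_y, n_x, bins))
    for cy in range(n_y):
        for cx in range(n_x):
            rows = slice(cy * cell, (cy + 1) * cell)
            cols = slice(cx * cell, (cx + 1) * cell)
            # Hard assignment: each pixel adds its magnitude to a single bin
            hist[cy, cx] = np.bincount(idx[rows, cols].ravel(),
                                       weights=mag[rows, cols].ravel(),
                                       minlength=bins)
    return hist


ramp = np.tile(np.arange(16, dtype=float), (16, 1))  # intensity rises left to right
hist = cell_histograms(ramp)
print(hist.shape)  # (2, 2, 9): 2 x 2 cells of 8 x 8 pixels, 9 bins each
```

For this ramp the gradient is purely horizontal, so every cell's magnitude lands in the first (0° to 20°) bin.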

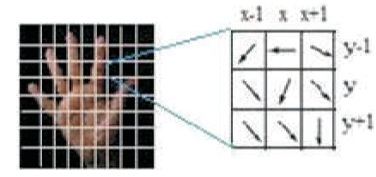

The extracted feature values are stored in a feature vector. The HOG parameters used for extraction and visualization are a cell size of [8 8], a block size of [2 2], and 9 orientation bins. The HOG features extracted from the input image of each gesture are listed in Table 2.
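The length of the HOG feature vector follows directly from these parameters, which is also why all images must first be resized to a common size. The sketch assumes a block overlap of one cell (Matlab's default for a [2 2] block); the image sizes in the usage lines are illustrative only.

```python
def hog_length(height, width, cell=8, block=2, overlap=1, bins=9):
    """Number of HOG features for a height x width image.

    cell    : cell size in pixels ([8 8] in this work)
    block   : block size in cells ([2 2] in this work)
    overlap : block overlap in cells (assumed: 1)
    bins    : orientation bins per cell (9 in this work)
    """
    cells_y, cells_x = height // cell, width // cell
    step = block - overlap                      # block stride in cells
    blocks_y = (cells_y - block) // step + 1
    blocks_x = (cells_x - block) // step + 1
    # Each block contributes block*block cell histograms of `bins` values
    return blocks_y * blocks_x * block * block * bins


print(hog_length(64, 64))    # 1764 (7 x 7 blocks x 36 values)
print(hog_length(64, 72))    # 2016 (7 x 8 blocks): a different size gives a different length
print(hog_length(128, 128))  # 8100
```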

Table 2. HOG Feature Extraction of the Hand Gestures

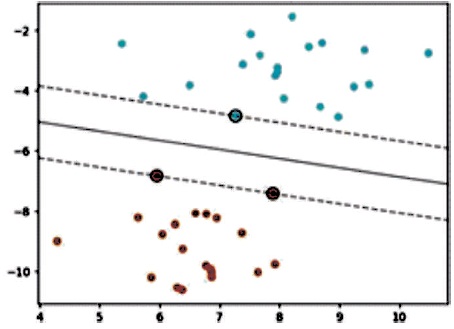

The final stage of the gesture recognition system is classification, performed with an SVM (Ansari, 2017; Ingle et al., 2018). SVMs have been used successfully for pattern recognition and are based on the principle of structural risk minimization. The SVM is a simple and fast classifier that copes well with high-dimensional feature vectors. A Support Vector Machine is a discriminative classifier formally defined by a separating hyperplane (Nagashree, Michahial, Aishwarya, Azeez, Jayalakshmi, & Rani, 2005). The SVM constructs a hyperplane that can be used for classification and regression, as shown in Figure 8. It finds the training vectors nearest to the decision boundary, called Support Vectors (SV), and classifies a new test vector using only these support vectors (Patil & Subbaraman, 2012).
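A single SVM separates only two classes, so the 7 gesture classes need a multiclass scheme, which the text does not specify. One common choice (and, as far as we know, the default coding of Matlab's fitcecoc) is one-vs-one: one binary SVM per pair of classes, 7 × 6 / 2 = 21 in total, with the class that wins the most pairwise votes chosen. The sketch below assumes each binary SVM is wrapped in a hypothetical callable returning a decision value whose sign picks the winner; the toy decision function stands in for trained SVMs.

```python
from itertools import combinations

CLASSES = ["A", "B", "C", "D", "E", "F", "G"]  # the 7 gesture commands

def one_vs_one_predict(x, binary_decision, classes=CLASSES):
    """Predict a label by majority vote over all pairs of classes.

    binary_decision(x, a, b) is a hypothetical callable wrapping the binary
    SVM trained on classes a and b: a value >= 0 is a vote for a, otherwise for b.
    """
    votes = {c: 0 for c in classes}
    for a, b in combinations(classes, 2):      # 7 * 6 / 2 = 21 pairs
        winner = a if binary_decision(x, a, b) >= 0 else b
        votes[winner] += 1
    # max() keeps the first class in `classes` order when votes are tied
    return max(classes, key=lambda c: votes[c])


# Toy stand-in for the 21 trained SVMs: each class has a 1-D "centre",
# and the pairwise decision favours whichever centre is closer to x.
centres = {c: i for i, c in enumerate(CLASSES)}

def toy_decision(x, a, b):
    return abs(x - centres[b]) - abs(x - centres[a])


print(len(list(combinations(CLASSES, 2))))    # 21
print(one_vs_one_predict(3.2, toy_decision))  # D
```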

Figure 8. The Optimal Hyperplane

Steps followed for SVM:

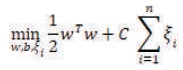

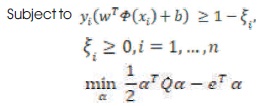

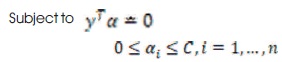

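Given training vectors $x_i \in \mathbb{R}^p$, $i = 1, \dots, n$, in two classes, and a label vector $y \in \{1, -1\}^n$, SVC solves the following primal problem:

$$\min_{w, b, \zeta} \; \frac{1}{2} w^T w + C \sum_{i=1}^{n} \zeta_i \quad \text{subject to} \quad y_i \left( w^T \phi(x_i) + b \right) \geq 1 - \zeta_i, \; \zeta_i \geq 0, \; i = 1, \dots, n.$$

Its dual is

$$\min_{\alpha} \; \frac{1}{2} \alpha^T Q \alpha - e^T \alpha \quad \text{subject to} \quad y^T \alpha = 0, \; 0 \leq \alpha_i \leq C, \; i = 1, \dots, n,$$

where $e$ is the vector of all ones, $C > 0$ is the upper bound, and $Q$ is an $n \times n$ positive semidefinite matrix with $Q_{ij} \equiv y_i y_j K(x_i, x_j)$, where $K(x_i, x_j) = \phi(x_i)^T \phi(x_j)$ is the kernel. Here the training vectors are implicitly mapped into a higher (possibly infinite) dimensional space by the function $\phi$.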

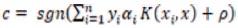

The decision function is:

$$c = \sum_{i} y_i \alpha_i K(x_i, x) + b$$

where $x_i$ are the support vectors, $y_i$ their labels, $\alpha_i$ the weights, $b$ the bias, and $K$ the kernel function. In the case of a linear kernel, $K$ is the dot product. If $c \geq 0$, $x$ is classified as a member of the first group (label $1$); otherwise it is classified as a member of the second group (label $-1$).
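The decision rule can be checked numerically. The sketch evaluates c = Σ y_i α_i K(x_i, x) + b for labels y_i ∈ {1, −1}, non-negative weights α_i and bias b (some formulations fold y_i into signed weights instead). The two support vectors and their weights are a hand-solved toy problem, not values from this work: for the points (1, 1) with label 1 and (−1, −1) with label −1, the hard-margin linear SVM has w = (0.5, 0.5) and b = 0, which corresponds to α_1 = α_2 = 0.25. The RBF kernel and its gamma value are illustrative alternatives.

```python
import numpy as np

def linear_kernel(u, v):
    """K(u, v) = u . v"""
    return float(np.dot(u, v))

def rbf_kernel(u, v, gamma=0.5):
    """Gaussian kernel exp(-gamma * ||u - v||^2); gamma is an illustrative value."""
    d = np.asarray(u, dtype=float) - np.asarray(v, dtype=float)
    return float(np.exp(-gamma * np.dot(d, d)))

def decision_value(x, support_vectors, labels, alphas, bias, kernel=linear_kernel):
    """c = sum_i y_i * alpha_i * K(x_i, x) + b"""
    return sum(y * a * kernel(sv, x)
               for sv, y, a in zip(support_vectors, labels, alphas)) + bias

def classify(x, *model, kernel=linear_kernel):
    """Label 1 (first group) if c >= 0, otherwise -1 (second group)."""
    return 1 if decision_value(x, *model, kernel=kernel) >= 0 else -1


# Hand-solved toy model: w = 0.25*(1, 1) - 0.25*(-1, -1) = (0.5, 0.5), b = 0
model = ([np.array([1.0, 1.0]), np.array([-1.0, -1.0])],  # support vectors x_i
         [1, -1],                                          # labels y_i
         [0.25, 0.25],                                     # weights alpha_i
         0.0)                                              # bias b

print(decision_value(np.array([2.0, 0.0]), *model))  # 1.0
print(classify(np.array([0.0, -3.0]), *model))       # -1
```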

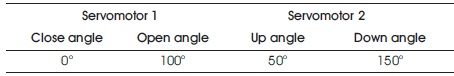

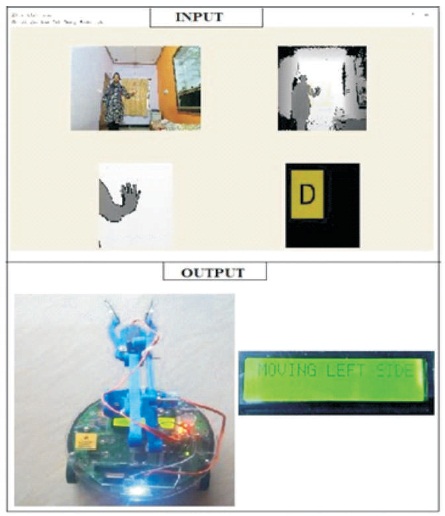

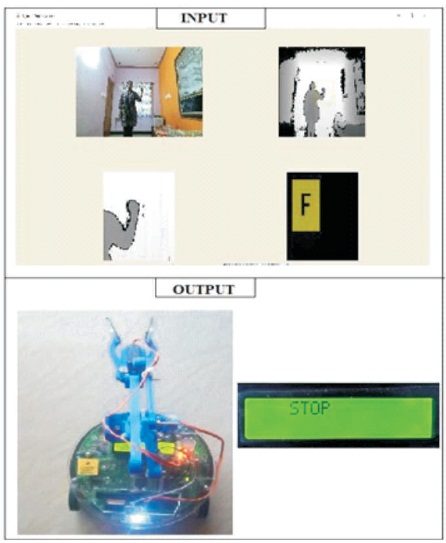

The command assigned to each recognized gesture is shown in Table 4. The command from the ZigBee transmitter is received by the wireless ZigBee receiver module mounted on the Firebird V robot, and the functions and movements of the robotic unit are controlled according to the received command. Pins 11 and 13 of the ATmega2560 microcontroller of the Firebird V robot are connected to the PWM inputs of servomotors 1 and 2, respectively. Servomotor 1 serves as the gripper motor and servomotor 2 as the movement motor; their angle positions are listed in Table 3.
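Driving a servomotor to one of the angle positions in Table 3 comes down to choosing a PWM pulse width. The sketch below is written in Python for consistency with the other sketches, whereas the robot firmware itself runs on the ATmega2560. It assumes a typical 50 Hz hobby-servo signal with a 1.0 ms pulse at 0° and a 2.0 ms pulse at 180°; these limits vary between servo models and are not taken from the Firebird V documentation.

```python
PERIOD_MS = 20.0     # 50 Hz servo frame (assumed)
MIN_PULSE_MS = 1.0   # pulse width at 0 degrees (assumed)
MAX_PULSE_MS = 2.0   # pulse width at 180 degrees (assumed)

def angle_to_pulse_ms(angle_deg):
    """Linearly map a servo angle in [0, 180] degrees to a pulse width in ms."""
    if not 0.0 <= angle_deg <= 180.0:
        raise ValueError("servo angle must be between 0 and 180 degrees")
    return MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * angle_deg / 180.0

def duty_cycle_percent(angle_deg):
    """Share of each 20 ms frame during which the PWM output is high."""
    return 100.0 * angle_to_pulse_ms(angle_deg) / PERIOD_MS


for angle in (0, 90, 180):
    print(angle, angle_to_pulse_ms(angle), duty_cycle_percent(angle))
# 0 1.0 5.0
# 90 1.5 7.5
# 180 2.0 10.0
```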

Table 3. Servomotor Angle Positions

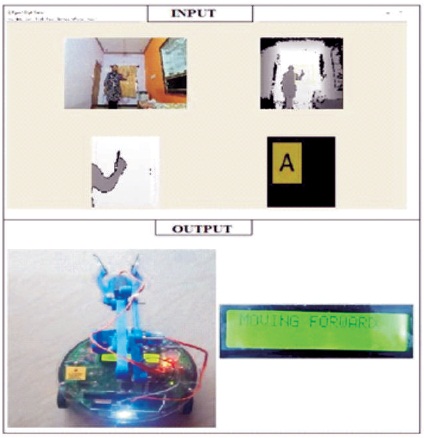

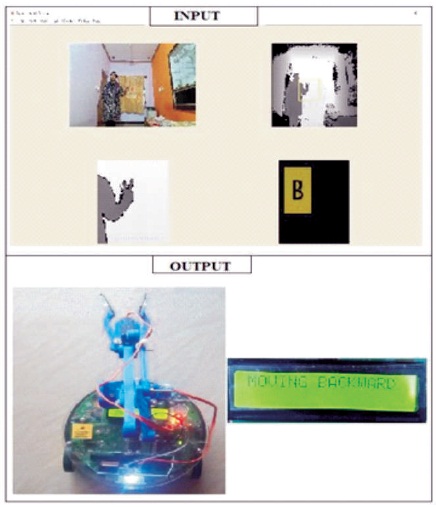

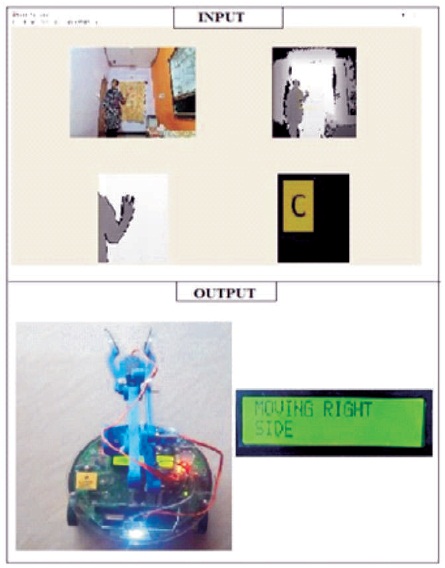

The function being performed by the robotic unit is displayed on a 16×2 LCD. The robot acts according to the recognized gesture: when the assigned command is A, the robot moves forward; command B moves it backward, command C turns it to the right, and so on. The remaining robot functions are listed in Table 4. Whenever the robot encounters an obstacle, it stops immediately.
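The command handling on the robot side can be modelled in a few lines. The letter-to-function mapping below follows Figures 14 to 20; everything else is an assumption for illustration: the action names, treating unknown commands as STOP, and letting an IR obstacle override only the drive commands while the arm commands (E and G) still execute. It is Python for consistency with the other sketches, not the Firebird V firmware.

```python
# Command letters and robot functions as reported in Figures 14 to 20
COMMANDS = {
    "A": "MOVE FORWARD",
    "B": "MOVE BACKWARD",
    "C": "TURN RIGHT",
    "D": "TURN LEFT",
    "E": "RELEASE OBJECT",
    "F": "STOP",
    "G": "LIFT OBJECT",
}
DRIVE_ACTIONS = {"MOVE FORWARD", "MOVE BACKWARD", "TURN RIGHT", "TURN LEFT"}
LCD_COLS = 16  # characters per line on the 16 x 2 LCD

def handle_command(cmd, obstacle_detected):
    """Return (action, lcd_text) for one command received over ZigBee.

    Assumptions: unknown commands map to STOP, and an IR obstacle reading
    overrides drive commands only, so the arm can still lift or release.
    """
    action = COMMANDS.get(cmd, "STOP")
    if obstacle_detected and action in DRIVE_ACTIONS:
        action = "STOP"
    lcd_text = action[:LCD_COLS].ljust(LCD_COLS)  # pad or truncate to one LCD line
    return action, lcd_text


print(handle_command("A", obstacle_detected=False))
print(handle_command("A", obstacle_detected=True))
```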

Table 4. Command Assigned For Gesture Recognition and Function of Robot

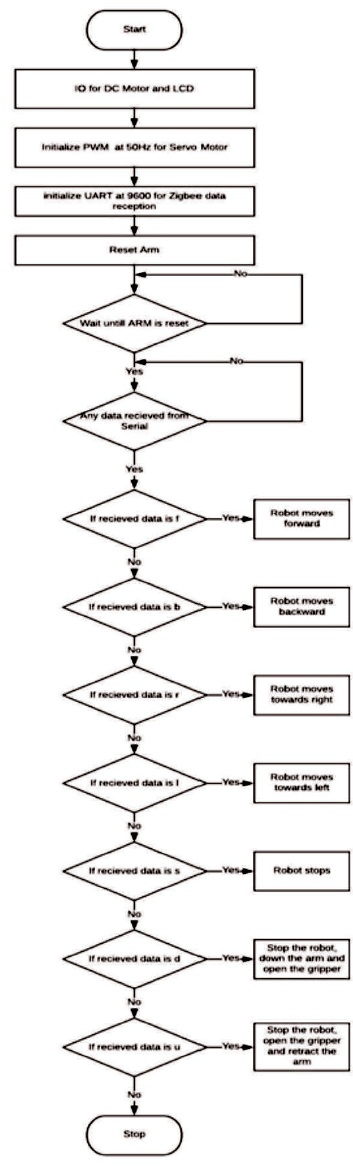

Flow charts are widely used for analyzing, designing, and documenting programs. This section illustrates the flow charts for hand gesture recognition of the training database, hand gesture recognition of the testing data, and the robot unit control system.

Figure 11. Flow Chart of Robot Unit Control System

The proposed system, “Hand Gesture Recognition using the Kinect Sensor”, is developed using a microcontroller, sensors, a robot arm, and ZigBee modules. This section presents the results, the conclusions drawn from the proposed work, and the scope for future work in this direction.

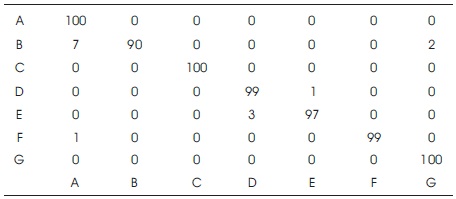

To evaluate the system, the authors set up an environment in which a hand gesture dataset is collected using the Kinect sensor and all the functions of the Firebird V robot are controlled. All experiments are performed on a standard laptop with an Intel Core i7 processor. The dataset consists of 100 samples for each of 7 gestures, so in total 7 gestures × 100 samples = 700 gesture cases have been evaluated in real time.

In this work, right-hand gestures are used to control the Firebird V robot and the robotic arm. The main concept behind the robot control is to detect right-hand gestures through the Kinect sensor. The performance of the system is evaluated in terms of the following aspects:

5.2.1 Robustness to Cluttered Backgrounds

Because the hand gestures are recognized using depth information, the background can be easily removed, and HOG yields features that are robust to cluttered backgrounds (Ansari, 2017; Sheenu, Joshi, & Vig). Thus the hand gesture recognition system is robust to cluttered backgrounds. Figure 12(a) shows an example in which a hand cluttered by the background is detected accurately, and Figure 12(b) shows a hand cluttered by the body that is detected accurately.

Figure 12. (a) The Hand that is Cluttered by Background can be Detected Accurately (b) The Hand that is Cluttered by Body can be Detected Accurately

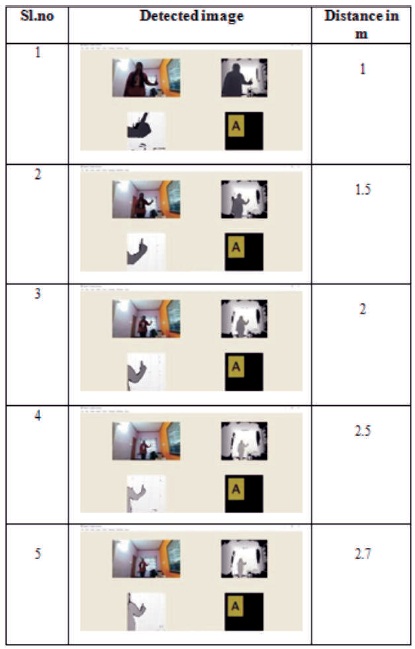

5.2.2 Robustness to Variation in Distance with Respect to Hand Gesture Recognition

In a real-time environment, detecting hand gestures from a distance is essential. The proposed system uses the Kinect sensor, which detects hand gestures more effectively than other cameras, and, together with the SVM classifier, HOG feature extraction provides a consistent way to extract gesture features irrespective of distance (Nagashree, Michahial, Aishwarya, Azeez, Jayalakshmi, & Rani, 2005). As a result, the system is able to recognize hand gestures between a minimum distance of 0.5 m and a maximum distance of 2.7 m. Table 5 shows hand gesture recognition with varying distance.

Table 5. Recognition of Hand Gestures with the Variation of Distance

From Table 6, it can be seen that the hand gesture is identified between 0.5 m and 2.7 m. The correct detection rate at varying distances is listed in Table 6: 98% between 0.5 m and 0.8 m, 100% between 0.8 m and 1.2 m, 100% between 1.2 m and 1.6 m, 96% between 1.6 m and 2.0 m, 100% between 2.0 m and 2.2 m, 98% between 2.2 m and 2.5 m, and 98% between 2.5 m and 2.7 m. Thus the proposed system detects gestures at distances greater than 2 m with high accuracy.

Table 6. Experiment Results in Gesture Detection

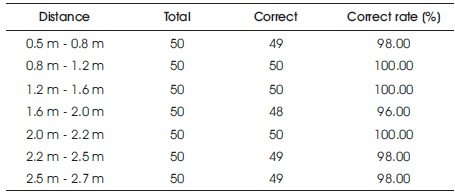

5.2.3 Robustness to Different Orientation of Hand Gesture

Hand gestures in three different orientations and the corresponding Matlab results are shown in Table 7. The features of the hand gestures in each orientation are extracted using HOG, and the SVM classifier then compares the HOG output with the trained database to assign a class. The gestures in all three orientations are classified into the same class. Thus the system correctly recognizes hand gestures in different orientations, and the Matlab results, shown in Table 7, are identical.

Table 7. Hand Gestures with Orientation Changes

5.2.4 Robustness to Distortion

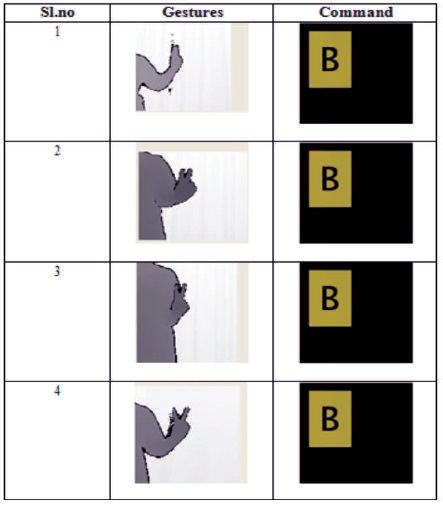

In a real-life environment, hand orientation can vary. In addition, because of the limited resolution of the depth map, hand shapes are often distorted or ambiguous. However, the results demonstrate that the proposed gesture extraction using HOG with the SVM classifier (Ansari, 2017; Ingle et al., 2018) is not only robust to orientation but also insensitive to distortion (Anju & Surendran, 2014). The hand shapes in Table 8 are heavily distorted; however, as illustrated in the left column of Table 8, by detecting the finger parts, the extracted features lead to the same classification, so the Matlab output command is the same, as listed in Table 8.

Table 8. The System is Insensitive to the Distortions

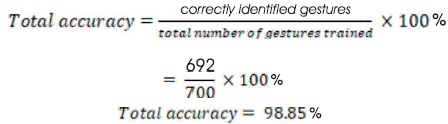

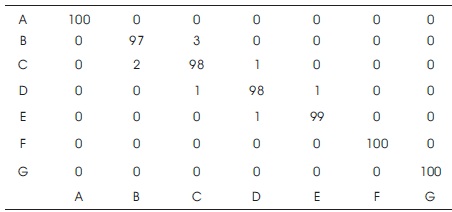

To evaluate the accuracy and efficiency of the system, 700 gesture cases have been used as the dataset. The results for all 700 pictures are summarized with a confusion matrix in Table 9. In the confusion matrix, the first column and the last row are the gesture labels. The other entries record the numbers of gesture pictures predicted as the corresponding labels. For example, in the first row, the number 100 is in the column corresponding to label A, which means that all 100 pictures of gesture A are predicted as label A. Hence the accuracy for gesture A is 100%. The overall accuracy on the 700 test images is 98.86%.
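The accuracy figures follow from the confusion matrix by simple counting: overall accuracy is the sum of the diagonal divided by the total number of images, and per-gesture accuracy divides each diagonal entry by its row total (rows being the true gestures, as described above). With 700 images, the reported 98.86% corresponds to 692 correctly classified images (692/700 ≈ 98.857%), i.e. 8 errors. The 3 × 3 matrix in the usage lines is a hypothetical example, not data from Table 9.

```python
def overall_accuracy(cm):
    """Correct predictions (diagonal) divided by all predictions."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total

def per_class_accuracy(cm):
    """Diagonal entry over row total; rows are true labels, columns predictions."""
    return [row[i] / sum(row) for i, row in enumerate(cm)]


# Reported result: 692 of 700 images correct
print(round(100 * 692 / 700, 2))  # 98.86

# Hypothetical 3-class confusion matrix (rows: true label, columns: predicted label)
toy = [[3, 1, 0],
       [0, 4, 0],
       [1, 0, 1]]
print(overall_accuracy(toy))      # 0.8
print(per_class_accuracy(toy))    # [0.75, 1.0, 0.5]
```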

The confusion matrix of the proposed method is shown in Table 9, and that of the rule classifier (Anju & Surendran, 2014) in Table 10. The accuracy of the proposed method is 98.86%, compared with 97.85% for the rule classifier; thus the proposed method outperforms the rule classifier.

Table 9. The Confusion Matrix of Proposed Method

Table 10. The Confusion Matrix of Rule Classifier (Anju & Surendran, 2014)

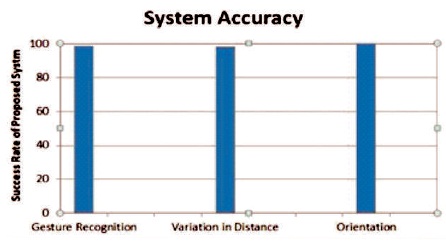

Figure 13 shows the accuracy of the proposed system for hand gesture recognition and under variations in distance and orientation.

Figure 13. The Accuracy of Hand Gesture Recognition, Variation in Distance and Orientation

The proposed system performs in real time, achieving a high gesture recognition rate with successful robot control. It is robust to changes in distance (0.5 m to 2.7 m) and orientation. In most cases, the proposed system produces better results than existing methods. Recognition of the various hand gestures, with the corresponding Matlab commands and robot movements, is shown in Figures 14 to 20.

Figure 14. Matlab Generates Command A and Firebird V Robot Moving Forward

Figure 15. Matlab Generates Command B and Firebird V Robot Moving Backward

Figure 16. Matlab Generates Command C and Firebird V Robot Moving Right Side

Figure 17. Matlab Generates Command D and Firebird V Robot Moving Left Side

Figure 18. Matlab Generates Command F and Firebird V Robot Stops Moving

Figure 19. Matlab Generates Command G and Robotic Arm Mounted on Firebird V Robot Lifts the Object

Figure 20. Matlab Generates Command E and Robotic Arm Mounted on Firebird V Robot Releases the Object

Table 11 compares the proposed system with existing work in terms of the sensors used and the communication techniques employed. It can be seen that the proposed system provides user-friendly services with well-known technologies compared with the existing works.

In this work, the authors have developed a real-time hand gesture recognition system using the Kinect sensor to control a robot unit. The developed system relies on an SVM classifier to learn features and recognize gestures. A series of steps is employed to process the image and detect the right-hand gestures before feeding them to the SVM classifier, in order to improve its performance. In total, 700 gesture images are collected to test the SVM classifier, and the Matlab implementation generates commands that perform the corresponding actions through the Firebird V robot and robot arm. The proposed real-time hand gesture recognition system achieves high accuracy and efficiency and can be used in real-world scenarios for industrial applications.

Future research work includes the following aspects.

The authors wish to thank the Principal, Professor, and all Technical Staff of the Department of Electronics and Communication at Sri Jayachamarajendra College of Engineering, Mysuru, Karnataka, India.