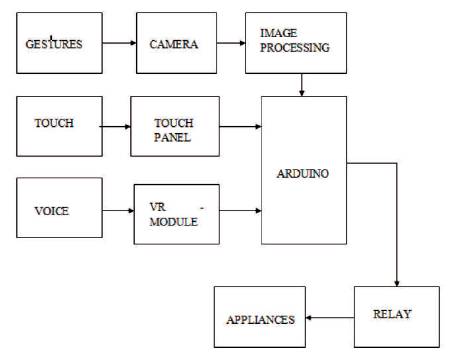

Figure 1. Block Diagram of NUI System

A Natural User Interface (NUI) system uses available technology to control household devices and systems automatically. To keep the system easy to use, control of all the devices is brought into one place, which makes the home more convenient to operate. A variety of home devices can be controlled with the help of an NUI system.

The Natural User Interface aims to provide the simplest yet highly efficient form of user interface, with touch, voice, and human movement as its key elements. Traditional switches are upgraded using Capacitive Touch Technology. Voice input is processed with a Voice Recognition (VR) module, and gestures are processed with OpenCV-Python using the Convex Hull algorithm. These controls make the system usable by any category of people with minimal effort. The system has a wide variety of applications and can also be implemented in industrial, educational, medical, and military settings.

Language is the interface through which people communicate. Between a device and a user, the interface is again a language, one that the system understands. When two devices have to communicate, there must likewise be some interface between them. Using a Natural User Interface, the authors aim to make two devices communicate with each other without physical intervention by the user, using instead the user's gestures, voice commands, and touch.

An interface is a platform where two or more systems are bridged together to make data exchange between them easy and convenient. An interface is a tool that connects humans with machines and helps in building user-friendly systems. An interface can receive, transfer, read, and write data to or from a system, and it can establish a better connection between hardware and software.

A User Interface, generally referred to as a UI, connects a user and a system. Through the user interface, the user holds complete control of the system. The aim of building a user interface is to help the user gain easy access to and control over the system. User interfaces are developed to achieve the maximum efficiency and best performance possible. A good user interface is one where the effort required from the user is minimal and the system produces the desired output. Interfaces are usually built to respond to inputs intuitively, which gives a user-friendly experience.

There are several User Interfaces; one of them is given in brief.

A Natural User Interface is often referred to as an 'NUI'. An NUI is a user interface that responds to natural human behavior such as touch, voice, and general body movements or gestures. Natural User Interfaces are highly advanced: they convert human actions to data, which is processed and then used to perform the respective functions.

Natural interfaces are likely to be compact and quickly become invisible to the user. NUIs can be operated in many ways, depending on the requirements and the objective of the system. NUIs are among the most interactive advanced technologies and can be used for any type of application that requires human-machine interaction.

Speech is the most fundamental signal used by human beings to convey information. A speech interface is one in which the system takes data in the form of voice and processes it to perform a specific set of tasks already stored in the system [7]. The system continuously receives data and compares it with the recorded standard set, on the basis of which the interface provides the desired output from the system [1], [6].

In a touch interface, the touch of the user is taken as the data for the processing system. The touch is tracked and mapped to recognize the data the user wishes to give to the system, and that data is used to perform specific actions [10].

Gestures are basic actions performed by humans in the regular routine of life to communicate information such as sleep, food, well-being, etc. Gestures are one of the effective ways of communicating between humans. A Gesture Interface is a tool that helps the user interact with a machine using gestures. It is also referred to as a Gesture User Interface (GUI) [5]. The Gesture Interface takes human actions as data, which the system processes to perform a certain set of functions. The system is implemented without any device in physical contact with the user and is invisible to the user, which makes it automatic.

The objectives of the paper are listed below.

The block diagram in Figure 1 shows the complete process of this paper, which contains three sections, one per interface. The first interface is based on voice, the second on touch, and the third on gestures. In the Voice Interface, every word spoken by a person is recorded and tested against the trained data. In the Touch Interface, the touch of a person on a panel is identified and the corresponding actions are performed. In the Gesture Interface, the actions made by a person are captured and compared with previously recorded gestures, and the respective functions are carried out.

The design covers extracting signals in the form of sound, touch, and actions of a person from the VR module, touch panel, and camera, respectively. These signals are transmitted to the microcontroller, from which commands are passed to the system for further processing.

The goal of this work is to embed three types of NUI onto a single chip. First, in Voice Recognition, a speech command can be determined from the power of the speech signal, which can be captured by microphones connected to the computer itself. Using the VR module, the voice commands are captured and trained in advance [1], [6]. The general block diagram of the interfacing is shown in Figure 2. The voice output from the VR module is fed to the Arduino for processing, and the signals are then sent to the respective devices through a relay [3], [8].

Figure 2. Block Diagram of Interfacing

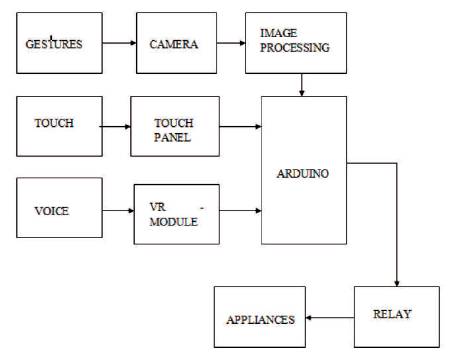

A touch user interface is an interface that works on the sense of touch by the user and passes it to the machine in the form of data. In this system, a touch panel based on the capacitive touch principle is used. As soon as the panel senses a touch, the capacitive junction voltage changes; this change acts as a trigger signal to the system and is processed further to cause a specific action. The touch panel consists of a number of patterns, each of which corresponds to a different device such as a light, music system, fan, television, radio, etc. The circuit is rigged up as shown in the circuit diagram in Figure 3.

Figure 3. Touch Circuit

When a touch is sensed, the capacitor shows a difference in voltage in the form of a small pulse. This small voltage is not enough to trigger the relay through the microcontroller, so 555 timers are used to amplify the signal from the transistor. The timer increases the pulse voltage to 3.3 V, which is enough to drive the appliance through the relay via the Arduino. This amplified voltage signal is given to a D-latch. The D-latch records the previous state of the appliance, i.e., whether the appliance is ON or OFF. Whenever a signal is given to the data input of the latch, it checks the previous state of the respective appliance and changes the state from ON to OFF or from OFF to ON. The appliances are driven through relays, and the relays are controlled by the microcontroller, which takes the signal from the D-latch as its input and operates the relay, and hence the appliance, accordingly.
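The toggle behaviour of the latch stage can be modelled in software. The following is a minimal sketch in Python, not the authors' circuit or firmware; the class name `ToggleLatch` and the method `pulse` are hypothetical names introduced only for illustration.

```python
class ToggleLatch:
    """Software model of the latch-based toggle (illustrative only)."""

    def __init__(self) -> None:
        # Remembered appliance state: False = OFF, True = ON.
        self.state = False

    def pulse(self) -> bool:
        """Apply one amplified touch pulse and return the new state."""
        # Each pulse flips the remembered state, ON -> OFF or OFF -> ON.
        self.state = not self.state
        return self.state


latch = ToggleLatch()
first = latch.pulse()   # first touch switches the appliance ON
second = latch.pulse()  # second touch switches it OFF again
print(first, second)
```

Each call to `pulse` stands for one touch on the panel pattern assigned to an appliance, so one latch instance per appliance would be needed under this model.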

Hand gesturing, or gesturing using bodily actions, has been used by humans since the dawn of civilization as a means of communicating messages, and is also used in parallel with spoken words. The meaning of a hand gesture varies depending on geographical and cultural background. Gesturing can also be profession specific, such as the hand gestures used by military or navy personnel to communicate very specific information. Gestures are an important aspect of human interaction, both interpersonally and in the context of man-machine interfaces. A gesture is a form of non-verbal communication in which visible bodily actions communicate particular messages, either in place of speech or together and in parallel with words. Gestures include movement of the hands, face, or other parts of the body.

The image processing part of this paper was developed using OpenCV. OpenCV is an open source computer vision library originally developed by Intel Corporation. OpenCV is written in C++, which allows for fast image processing, and it has a library of over 2500 different algorithms. OpenCV has interfaces that allow programming in C++, C, Python, and Java, and it can be executed on different platforms such as Windows, Linux, and Android. One major drawback is that GUI interfaces cannot be developed directly using OpenCV. Python was chosen among the languages supported by OpenCV to work with the different features of contours, such as area, perimeter, centroid, bounding box, etc. OpenCV provides many functions related to contours. One of these functions, the convex hull, is applied to the detected contours.

The convex hull is computed on a contour curve. It may look similar to the Contour Approximation method, but it is a different operation. A convex curve is one that always bulges out; wherever the curve bulges in, the deviation is considered a convexity defect. cv2.convexHull() checks a curve for convexity defects and corrects it [4], [9].
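To make the idea of "bulged in" points concrete, the sketch below computes a convex hull in plain Python using Andrew's monotone chain algorithm. This is a stand-in chosen for illustration, not the implementation behind cv2.convexHull(); the function names and the sample contour are hypothetical.

```python
def cross(o, a, b):
    """Z-component of the cross product of vectors OA and OB."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Return hull vertices in counter-clockwise order (monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower = []
    for p in pts:
        # Drop the last point while it does not make a left turn.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each half is the first point of the other half.
    return lower[:-1] + upper[:-1]


# Hypothetical contour: a square with one point pushed inward.
contour = [(0, 0), (4, 0), (4, 4), (2, 1), (0, 4)]
hull = convex_hull(contour)
bulged_in = [p for p in contour if p not in hull]
print(hull)
print(bulged_in)
```

Contour points that are not hull vertices, such as `(2, 1)` here, lie where the contour bulges in; for a hand contour these regions correspond to the valleys between fingers.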

Figure 4 is used to illustrate the Convex Hull algorithm.

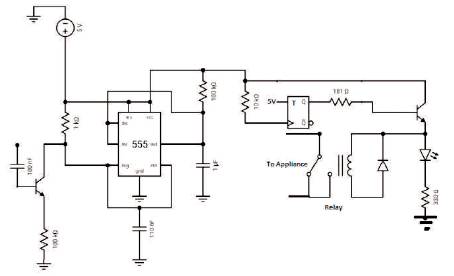

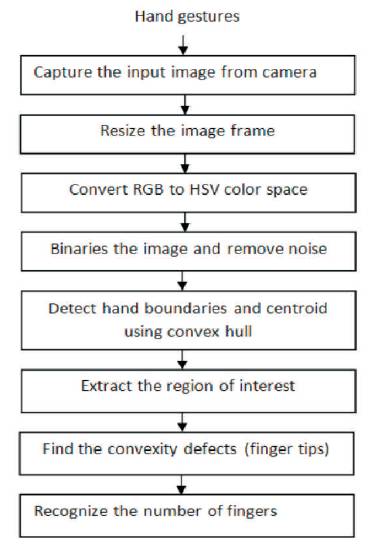

Figure 4. Convex Hull

The Convex Hull algorithm is used in the following procedure to detect the hand gesture. Figure 5 shows the flow of methodology for gesture recognition. The first step is locating the hand using skin tone, because it is hard to locate the hand from motion, as the interval between movements may vary from person to person. The second step is to use the hand state to extract the hand features needed for the finger recognition process. Once the hand is separated, a contour boundary is created. The contour consists of a number of vectors of the hand. When the contour of a finger is processed, the fingertip is recognized. By joining the minimum and maximum points, a bounding rectangle is defined, within which the convex hull will be present.

Figure 5. Flow of Methodology for Gesture Recognition
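The bounding-rectangle step of this procedure can be sketched as follows. This is a simplified geometric version in plain Python, assuming the hand contour is available as a list of (x, y) points; the function name `bounding_rect` and the sample points are hypothetical.

```python
def bounding_rect(contour):
    """Return (x, y, width, height) of the axis-aligned box around a contour."""
    if not contour:
        raise ValueError("contour must contain at least one point")
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    x_min, y_min = min(xs), min(ys)
    # Width and height span from the minimum to the maximum coordinate.
    return x_min, y_min, max(xs) - x_min, max(ys) - y_min


# Hypothetical hand contour points.
contour = [(3, 9), (5, 2), (8, 6), (6, 10), (2, 7)]
x, y, w, h = bounding_rect(contour)
print(x, y, w, h)
```

Because every contour point lies inside this rectangle, the convex hull of the contour lies inside it as well.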

There are other points of convexity in the contour. The deviation of the contour from each edge of the hull is called a convexity defect. The defect points are mostly located at the center of the finger valleys. The average of all the defects is then computed, which lies approximately at the center of the palm, and from this the radius of the palm is obtained. The ratio of the palm radius to the length of the finger triangle should be the same, so the number and location of the fingertips can be found, with the AdaBoost algorithm and Haar-like features used for detecting and recognizing the hand [2].
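A minimal sketch of the palm-center estimate is given below. The text does not state how the radius is derived from the defects, so taking it as the mean distance from the center to the defect points is an assumption made here for illustration; the function name and sample points are hypothetical.

```python
import math


def palm_center_and_radius(defects):
    """Estimate the palm center as the mean of the defect points.

    The radius is taken as the mean center-to-defect distance, which is
    an assumption of this sketch and is not specified in the paper.
    """
    if not defects:
        raise ValueError("at least one defect point is required")
    n = len(defects)
    cx = sum(x for x, _ in defects) / n
    cy = sum(y for _, y in defects) / n
    radius = sum(math.hypot(x - cx, y - cy) for x, y in defects) / n
    return (cx, cy), radius


# Hypothetical defect points arranged symmetrically around the origin.
defects = [(3, 0), (0, 3), (-3, 0), (0, -3)]
center, radius = palm_center_and_radius(defects)
print(center, radius)
```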

However, when all the gestures are combined in the same program, they get mixed up and create confusion for the real-time hardware. The authors have therefore changed the representation to a rectangular one so that the fingertips and connecting lines are clearly visible.

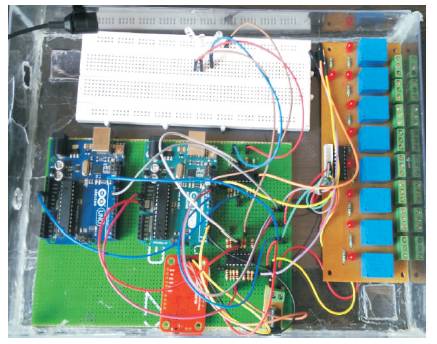

The core of NUI technology lies in how efficiently and easily it controls the appliances. NUI technology is made robust by combining all of its sub-technologies. The NUI is built in three phases. The prototype of the NUI is shown in Figure 6.

Figure 6. Prototype of NUI

Voice samples are loaded to the VR module following the procedure shown in Figure 7. The voice commands from the speaker are fed to the VR module and compared with the training set. If the signal matches the recorded or trained set of commands, the hardware interface executes the code written to control the appliances, as shown in Figure 8. Thus the voice interface strengthens the NUI technology.
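The match-then-act step can be sketched in Python as shown below. This is an illustrative model only: the command table, the record indices, the function name `handle_voice`, and the choice to toggle the relay on each match are all assumptions, not details taken from the VR module or from the authors' code.

```python
# Hypothetical table of trained commands: record index -> appliance.
TRAINED_COMMANDS = {0: "light", 1: "fan", 2: "music system"}


def handle_voice(record_index, relay_states):
    """Toggle the relay of the appliance matched by a recognized command.

    Returns the appliance name, or None when the command is not in the
    trained set (in which case no relay is changed).
    """
    appliance = TRAINED_COMMANDS.get(record_index)
    if appliance is None:
        return None
    # Assumption: each recognized command flips the relay state.
    relay_states[appliance] = not relay_states.get(appliance, False)
    return appliance


relays = {}
matched = handle_voice(1, relays)    # trained command: fan switches ON
unmatched = handle_voice(9, relays)  # untrained command: ignored
print(matched, unmatched, relays)
```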

Figure 8. Training VR module 2

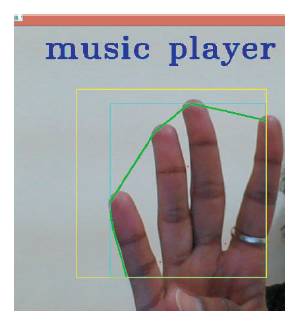

Figure 9 shows the output when the gesture shown is two. Here, the Convex Hull algorithm is employed to find the number of defects. If two fingers are detected, the number of defects is one. The system is programmed to control the light when the number of defects is one. Figure 10 shows the output when the gesture shown is four. If four fingers are detected, the number of defects is three. The system is programmed to control the music player when the number of defects is three.

Figure 9. Gesture Pattern 2

Figure 10. Gesture Pattern 4
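The mapping from defect count to appliance described for Figures 9 and 10 can be written as a small lookup. The two table entries come from the text; the general relation "fingers = defects + 1" is an extrapolation from those two cases and is assumed here only for counts of one or more. The function names are hypothetical.

```python
# Defect count -> appliance, as stated for gesture patterns 2 and 4.
GESTURE_ACTIONS = {1: "light", 3: "music player"}


def fingers_from_defects(defect_count):
    """Assumed relation for one or more defects: fingers = defects + 1."""
    if defect_count < 1:
        raise ValueError("relation is only assumed for one or more defects")
    return defect_count + 1


def action_for_defects(defect_count):
    """Return the appliance to control, or None if no gesture is mapped."""
    return GESTURE_ACTIONS.get(defect_count)


for count in (1, 3):
    print(fingers_from_defects(count), action_for_defects(count))
```

Defect counts without an entry in the table return `None`, so unmapped gestures leave all appliances unchanged in this sketch.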

The proposed system architecture is reliable because three technologies are embedded into a single system. The touch part of the system has been designed with different patterns using capacitive touch technology to drive the appliances at a faster rate. The voice recognition system improves the acceptance rate with the help of the VR module, which has voice commands trained in advance. This makes the system flexible enough to be used by elderly or disabled people. By using the Convex Hull algorithm, the appliances can be controlled in a very effective way. The proposed and implemented system is advantageous, as it involves three different types of NUI, i.e., voice, touch, and gestures. If an appliance has been turned ON by the touch part of the system, it can be turned OFF by either the voice or the gesture part of the circuit.

The authors feel it a great pleasure to express their deep sense of gratitude and profound thanks to their internal guide Mr. Suresh K., Assistant Professor, Department of Electronics and Communication Engineering, for his valuable guidance, unrelenting assistance, and support throughout the research work.