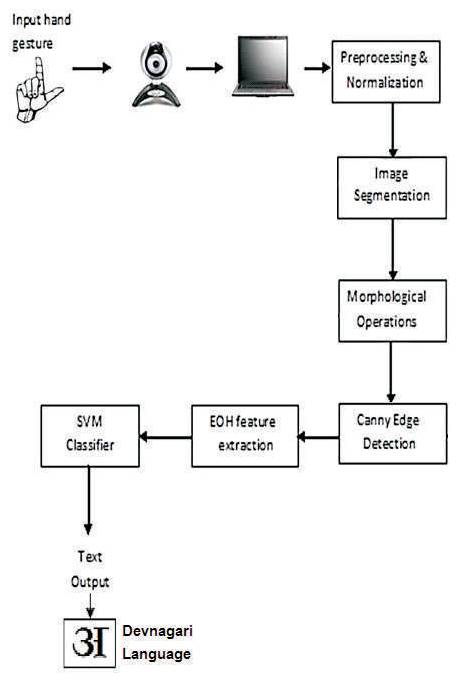

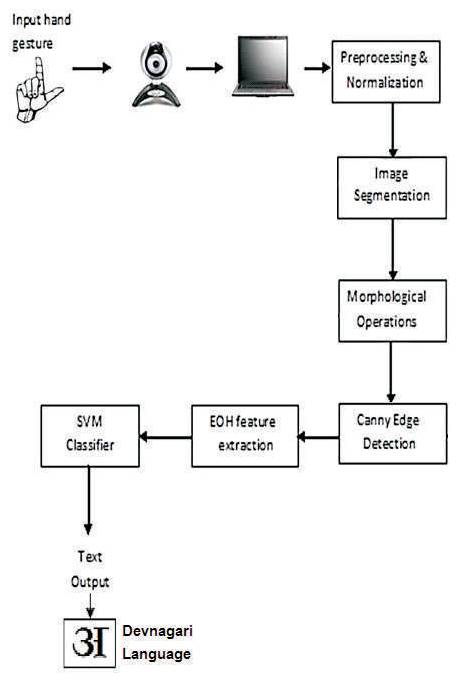

Figure 1. General Architecture of Hand Gesture Recognition System

Hand gesture based sign language is a distinct means of communication among deaf-mute and physically impaired people, performed using specific hand gestures. Deaf-mute people struggle to express their feelings to others, which creates a communication gap between hearing and deaf-mute people. This paper, based on hand gesture based Devnagari Sign Language recognition approaches, aims to provide a means of communication between the deaf-mute community and the rest of society. To this end, the authors use static hand gesture based sign language recognition for a deaf-mute communication system. Many researchers use only American Sign Language or Indian Sign Language to create their databases. In this paper, recent research on sign language based on manual communication and body language is reviewed, and a hand gesture based Devnagari Sign Language system is developed for recognizing Hindi characters from the hand gestures of deaf-mute people. The Devnagari Sign Language recognition system is explained in five steps: image acquisition, pre-processing, segmentation, feature extraction, and classification.

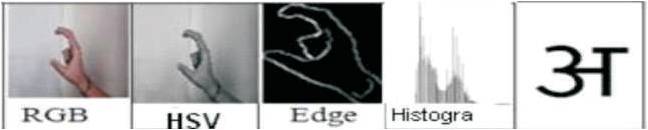

Hand gesture based sign language uses gestures instead of speech to communicate. Hand gestures are a form of body language, or non-verbal communication, and sign language combines the shape, pattern, orientation, and movement of the hands with facial expressions and lip patterns. Sign language recognition is mostly treated as hand gesture recognition, an area of research involving hand pattern recognition, computer vision, and natural language processing. Implementing Devnagari Sign Language recognition with hand gestures still faces many problems, because hand gesture recognition is complex and because the way users perform gestures varies widely with their physical features and with environmental conditions. Hand gestures are of two types: static and dynamic. A static hand gesture is a configuration or pose of the hand represented as an image, while a dynamic hand gesture is a moving hand sign represented by an image sequence or video data. Sign language is currently widely used as a communication medium by hearing- and speech-impaired people, and hand gestures also have uses in many areas, such as HCI (Human Computer Interaction), robotic movement control, gaming, and computer vision.

A Devnagari Sign Language translation system using an image histogram matching algorithm is proposed in this paper for recognizing Devnagari Sign Language (DSL) characters. The steps of the algorithm are image capture, image preprocessing, feature extraction from the hand gesture images, edge detection on the morphologically filtered images, and histogram matching with SVM classification. The image is captured in the RGB color space using a good-quality webcam placed on top of the laptop.
In the image preprocessing stage, operations such as image blurring (smoothing) and noise removal are performed together with morphological operations. The next stage is feature extraction, in which the histogram of the cropped hand image is computed. In this stage, the hand gesture region is extracted and its edges are detected using Canny edge detection. The gesture image histogram obtained at this stage is compared with the histogram of each image in the MATLAB training dataset, and a similarity factor is calculated for each comparison. The training image with the highest similarity factor is taken as the matching hand gesture. In this paper, hand gestures for some of the vowels (swar) and consonants (vyanjan) of Devnagari Sign Language (DSL) are used.
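A minimal sketch of the histogram matching step, in Python with only the standard library. The paper does not say which similarity factor it uses, so this sketch assumes normalized histogram intersection (1.0 for identical histograms, 0.0 for disjoint ones). Images are assumed to be lists of rows of 8-bit gray values, and the function names and the labels `ka` and `kha` are hypothetical.

```python
from typing import Dict, List

Image = List[List[int]]  # rows of 8-bit gray values (0-255)


def gray_histogram(image: Image, bins: int = 256) -> List[float]:
    """Normalized gray-level histogram: each bin holds the fraction of pixels in it."""
    counts = [0] * bins
    total = 0
    for row in image:
        for v in row:
            counts[v * bins // 256] += 1
            total += 1
    return [c / total for c in counts]


def similarity(h1: List[float], h2: List[float]) -> float:
    """Assumed similarity factor: histogram intersection of two normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def best_match(query: Image, training: Dict[str, List[float]]) -> str:
    """Return the training label whose stored histogram is most similar to the query's."""
    hq = gray_histogram(query)
    return max(training, key=lambda label: similarity(hq, training[label]))
```

Because each histogram is normalized by its pixel count, the score does not depend on image size; it does, however, ignore where in the image the pixels lie.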

The main aim of this paper is to design a system that helps hearing-impaired people communicate with others, so that conventional sign language can be replaced with a computer-recognizable sign language.

Much research has been done on vision-based hand gesture recognition during the past two decades. Another application of gesture language is human-computer interaction, in which hand gestures are given to the computer as input data through a webcam. In HCI, a visual interface is created to provide a natural way of communication between humans and machines [4]. A novel real-time system uses SIFT and SURF feature extraction techniques for interacting with a video game via hand gestures. Hand gesture recognition systems have been built for both uniform and complex backgrounds. Skin color segmentation is used for segmenting the hand from a complex background [6]. A system using the Euclidean distance measure is used to recognize 26 static hand gestures for ASL alphabets [1]. A back-propagation neural network based hand gesture recognition system was designed to recognize American Sign Language [7]. In [8], after the hand is segmented from the input image, a neural network using the Lab color space and extracted peaks and valleys as features classifies the hand gestures for alphabets. A background subtraction method [9] is used to segment the hand from the image against both uniform and complex backgrounds. An ANN system [10] that is invariant to scaling and translation of the gesture signs is implemented to recognize them. A method combining SIFT features with PCA and template matching is presented in [11] for recognizing 36 different gestures.

A few works have also been done on the recognition of Indian Sign Language. A classification technique based on eigenvalue-weighted Euclidean distance has been developed for recognizing ISL gestures [4]. In another approach, images are convolved with Gabor filters, the resulting feature space is reduced, and gestures are recognized by an SVM operating on the reduced Gabor features. The Discrete Wavelet Transform is used in training a neural network for Persian Sign Language recognition [9]. Various color models are also used in the segmentation of the hand for gesture recognition systems; a perceptual color space is used in [8] for wireless robot control applications. Finger spelling [11] is used for sign language recognition in the sign languages of several countries, such as Indian, American, British, Chinese, Persian, Arabic, and Malaysian.

The method consists of five steps: image acquisition, image preprocessing, segmentation, feature extraction, and classification. The basic architecture of the proposed hand gesture recognition system is shown in Figure 1.


The first step, image capture, is the main step of the Devnagari Hand Gesture Recognition System. The image is captured using a good-quality 8-megapixel webcam. A webcam can capture images in different color spaces, such as RGB; this system uses the RGB color space model.
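Later stages (thresholding, filtering, edge detection) operate on a grayscale image rather than the RGB capture. The paper does not state how it converts between the two, so the sketch below assumes the common ITU-R BT.601 luma weights (0.299, 0.587, 0.114); `rgb_to_gray` is a hypothetical name and the image is assumed to be rows of `(R, G, B)` tuples.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def rgb_to_gray(image: List[List[Pixel]]) -> List[List[int]]:
    """Convert an RGB image to 8-bit grayscale with BT.601 luma weights (assumed)."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in image]
```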

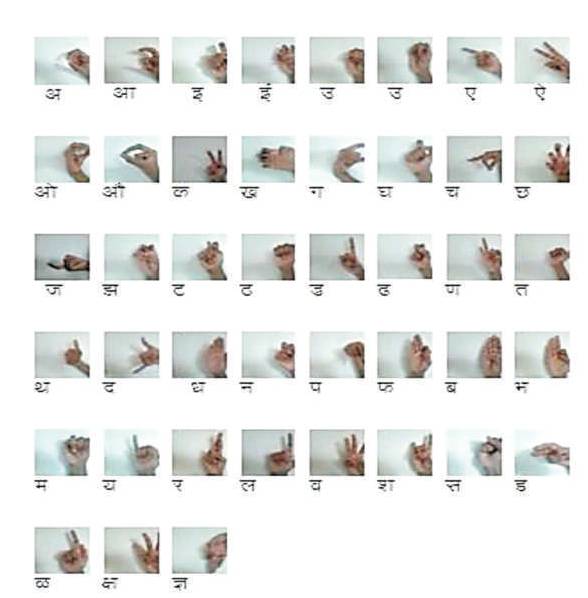

In this paper, the hand gestures of Devnagari Sign Language alphabets were used for recognition. Following [6], all the static hand gesture images were captured in real time at good resolution using a webcam, and the dataset shown in Figure 2 was prepared. The images were captured by the webcam in real time without the person's arm projecting into the frame and were saved in .jpg or .png format.

Figure 2. Hand Gesture of Devnagari Sign Language Alphabets

All the gestures in the Devnagari Sign Language database are static in nature except two alphabets [5].

Since these two gestures involve movement of the fingers, they are outside the scope of this study and are not included in the dataset.

Image preprocessing is an essential task in a hand gesture based Devnagari Sign Language recognition system. A large, standard gesture recognition database is considered, with 20 signs and 10 images per sign (200 images in total). Preprocessing is applied to the images before features are extracted from them. Image preprocessing consists of two stages: segmentation and morphological filtering.

Good segmentation requires selecting an adequate gray-level threshold for extracting the hand from the background, i.e., no part of the hand should be labeled as background and no part of the background should be labeled as hand. In general, the choice of segmentation algorithm depends mostly on the type of images and the application. Thresholding is used to obtain a binary image from the normalized grayscale image. Although many threshold-based techniques exist, a global thresholding technique is used in this work to segment the hand region from all input images. Because the background is simple, the threshold separates foreground and background pixels quickly and easily, and the hand is segmented from the image. After segmentation, the hand region is white and the background is black.
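The paper uses a global threshold and also mentions the Otsu algorithm [2]. As a minimal pure-Python sketch, Otsu's method picks the single global threshold that maximizes the between-class variance of the gray-level histogram. It assumes the hand is brighter than the simple background, so pixels above the threshold become white (255); for a bright background the comparison in the hypothetical `binarize` helper would be inverted.

```python
from typing import List

Gray = List[List[int]]  # rows of 8-bit gray values


def otsu_threshold(gray: Gray) -> int:
    """Return the level t maximizing between-class variance (class 1: <= t, class 2: > t)."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_b, sum_b = 0, 0  # pixel count and gray-level sum of the lower class
    for t in range(256):
        w_b += hist[t]
        if w_b == 0:
            continue
        w_f = total - w_b
        if w_f == 0:
            break
        sum_b += t * hist[t]
        mean_b = sum_b / w_b
        mean_f = (sum_all - sum_b) / w_f
        between = w_b * w_f * (mean_b - mean_f) ** 2  # unnormalized between-class variance
        if between > best_var:
            best_var, best_t = between, t
    return best_t


def binarize(gray: Gray, t: int) -> Gray:
    """Assumes a bright hand: pixels above t become white (255), the rest black (0)."""
    return [[255 if v > t else 0 for v in row] for row in gray]
```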

Because the segmented hand image still contains noise, the morphological operations erosion and dilation [12] are performed on it. Connected components smaller than 300 pixels are removed from the background, and only the largest connected component, i.e., the hand, is retained in the image using labeling. A filter is then applied to the labeled image for noise removal. Noise reduction is a typical preprocessing step used to improve the results of later processing, such as edge detection. A median filter, which is a non-linear filter, is used to remove noise and smooth the hand image before edge detection in the next stage. A morphological filtering approach based on a sequence of dilation and erosion is then applied to obtain a closed, smooth, and complete contour of the gesture.

The conversion from a grayscale to a binary image is done by segmentation, so that only two objects, the hand and the background, are present in the image. The Otsu algorithm [2] is also used to segment grayscale images into binary images consisting of hand and background. After conversion, no noise should remain in the image, which is why the morphological filtering technique is used. Morphological filtering is built from four basic operations: dilation, erosion, opening, and closing.
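The cleanup steps above can be sketched in pure Python on a binary image (1 = hand, 0 = background). This is an illustration under assumptions, not the paper's MATLAB code: it uses a 3x3 square structuring element, 8-connectivity for labeling, and clips windows at the image border; only the 300-pixel minimum size comes from the text, and all function names are hypothetical.

```python
from collections import deque
from typing import List, Tuple

Binary = List[List[int]]  # 1 = hand pixel, 0 = background pixel


def _window(img: Binary, y: int, x: int) -> List[int]:
    """Values in the 3x3 neighbourhood of (y, x), clipped at the image border."""
    h, w = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))]


def median3(img: Binary) -> Binary:
    """3x3 median filter (upper median where a border window has an even count)."""
    out = []
    for y in range(len(img)):
        row = []
        for x in range(len(img[0])):
            vals = sorted(_window(img, y, x))
            row.append(vals[len(vals) // 2])
        out.append(row)
    return out


def dilate(img: Binary) -> Binary:
    """Binary dilation: a pixel becomes 1 if any pixel in its 3x3 window is 1."""
    return [[int(any(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def erode(img: Binary) -> Binary:
    """Binary erosion: a pixel stays 1 only if every pixel in its 3x3 window is 1."""
    return [[int(all(_window(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def opening(img: Binary) -> Binary:
    """Erosion then dilation: removes small specks."""
    return dilate(erode(img))


def closing(img: Binary) -> Binary:
    """Dilation then erosion: fills small holes and gaps in the contour."""
    return erode(dilate(img))


def keep_largest_component(img: Binary, min_size: int = 300) -> Binary:
    """Label 8-connected components, ignore those below min_size, keep only the largest."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    best: List[Tuple[int, int]] = []
    for sy in range(h):
        for sx in range(w):
            if not img[sy][sx] or seen[sy][sx]:
                continue
            comp: List[Tuple[int, int]] = []
            queue = deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:  # breadth-first flood fill of one component
                y, x = queue.popleft()
                comp.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and img[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if len(comp) >= min_size and len(comp) > len(best):
                best = comp
    out = [[0] * w for _ in range(h)]
    for y, x in best:
        out[y][x] = 1
    return out
```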

Canny edge detection is an edge detection method that uses a multistage algorithm to detect a wide range of edges in the morphologically filtered images. Among the many edge detection methods in image processing, the Canny edge detector is one of the most effective. Because it satisfies general criteria for accurate edge detection and is simple to implement, it has become widely used.
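A compact pure-Python sketch of the four classic Canny stages: Gaussian smoothing, Sobel gradients, non-maximum suppression, and hysteresis thresholding. It is an illustration only: the paper does not give its Canny parameters, so the 3x3 binomial kernel (standing in for a tunable Gaussian) and the caller-chosen thresholds `low` and `high` are assumptions.

```python
import math
from typing import List

Gray = List[List[float]]


def _convolve3(img: Gray, k: List[List[float]]) -> Gray:
    """3x3 correlation with edge-replicated borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for j in range(3):
                for i in range(3):
                    yy = min(max(y + j - 1, 0), h - 1)
                    xx = min(max(x + i - 1, 0), w - 1)
                    s += k[j][i] * img[yy][xx]
            out[y][x] = s
    return out


def canny(img: Gray, low: float, high: float) -> List[List[int]]:
    """Return a binary edge map (1 = edge) of a grayscale image."""
    h, w = len(img), len(img[0])
    # 1. Smoothing with a 3x3 binomial approximation of a Gaussian.
    smooth = _convolve3(img, [[1/16, 2/16, 1/16], [2/16, 4/16, 2/16], [1/16, 2/16, 1/16]])
    # 2. Sobel gradients and gradient magnitude.
    gx = _convolve3(smooth, [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]])
    gy = _convolve3(smooth, [[-1, -2, -1], [0, 0, 0], [1, 2, 1]])
    mag = [[math.hypot(gx[y][x], gy[y][x]) for x in range(w)] for y in range(h)]
    # 3. Non-maximum suppression along the gradient direction quantized to 4 bins.
    nms = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            angle = math.degrees(math.atan2(gy[y][x], gx[y][x])) % 180
            if angle < 22.5 or angle >= 157.5:
                n1, n2 = mag[y][x - 1], mag[y][x + 1]
            elif angle < 67.5:
                n1, n2 = mag[y - 1][x - 1], mag[y + 1][x + 1]
            elif angle < 112.5:
                n1, n2 = mag[y - 1][x], mag[y + 1][x]
            else:
                n1, n2 = mag[y - 1][x + 1], mag[y + 1][x - 1]
            if mag[y][x] >= n1 and mag[y][x] >= n2:
                nms[y][x] = mag[y][x]
    # 4. Hysteresis: keep strong pixels and weak pixels connected to them.
    edges = [[0] * w for _ in range(h)]
    stack = [(y, x) for y in range(h) for x in range(w) if nms[y][x] >= high]
    for y, x in stack:
        edges[y][x] = 1
    while stack:
        y, x = stack.pop()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and not edges[ny][nx] and nms[ny][nx] >= low:
                    edges[ny][nx] = 1
                    stack.append((ny, nx))
    return edges
```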

After the edges of the hand gesture image are detected with the Canny technique, histogram features based on the detected edges are extracted from the images. The feature vector is formed from the centroid $(C_x, C_y)$ of the hand gesture image and the area of the static hand edge region.
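The paper's exact centroid and area formulas did not survive in this copy. The sketch below assumes the standard moment definitions on a binary region $B$ (1 on hand pixels): $A=\sum_{x,y}B(x,y)$, $C_x=\frac{1}{A}\sum_{x,y}x\,B(x,y)$, and $C_y=\frac{1}{A}\sum_{x,y}y\,B(x,y)$, with $x$ the column and $y$ the row index. `centroid_area_features` is a hypothetical name.

```python
from typing import List, Tuple


def centroid_area_features(region: List[List[int]]) -> Tuple[float, float, int]:
    """Return (Cx, Cy, A) for a binary region: A is the pixel count and
    (Cx, Cy) the mean column and row of the foreground pixels (assumed definitions)."""
    area, sum_x, sum_y = 0, 0, 0
    for y, row in enumerate(region):
        for x, v in enumerate(row):
            if v:
                area += 1
                sum_x += x
                sum_y += y
    if area == 0:
        raise ValueError("region has no foreground pixels")
    return sum_x / area, sum_y / area, area
```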

Feature vectors of the training images are stored in MATLAB .mat database files, and the feature vector of an input Devnagari hand gesture image is calculated at run time.

A Support Vector Machine (SVM) is applied for hand gesture image classification, as represented in Figure 3. The basic SVM scheme classifies an input gesture image pattern into one of two classes; a multi-class extension of SVM uses a one-vs-all scheme, in which each class is separated from all the other classes. An input Devnagari hand gesture image is to be assigned to one of the character classes, where each class label corresponds to an alphabet.

Figure 3. SVM Scheme Input Pattern of [6] Gesture Images
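The paper trains its SVM in MATLAB and does not report the kernel or training parameters. As a hedged illustration of the one-vs-all idea only, the sketch below trains one linear SVM per class by full-batch subgradient descent on the L2-regularized hinge loss, then assigns an input feature vector to the class with the highest score. The regularization `lam`, learning rate `lr`, epoch count, and the toy labels are all assumptions.

```python
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]
Model = Tuple[List[float], float]  # (weights, bias)


def train_linear_svm(X: List[Vector], y: List[int], lam: float = 0.01,
                     lr: float = 0.1, epochs: int = 2000) -> Model:
    """Binary linear SVM (labels +1/-1), minimizing
    lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b))) by subgradient descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        gw = [lam * wj for wj in w]  # gradient of the regularizer (bias not regularized)
        gb = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # hinge loss is active for this sample
                for j in range(d):
                    gw[j] -= yi * xi[j] / n
                gb -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, gw)]
        b -= lr * gb
    return w, b


def train_one_vs_rest(X: List[Vector], labels: List[str]) -> Dict[str, Model]:
    """One binary SVM per class: that class is +1, every other class is -1."""
    return {c: train_linear_svm(X, [1 if lab == c else -1 for lab in labels])
            for c in sorted(set(labels))}


def predict(models: Dict[str, Model], x: Vector) -> str:
    """Assign x to the class whose SVM gives the largest decision value."""
    def score(c: str) -> float:
        w, b = models[c]
        return sum(wj * xj for wj, xj in zip(w, x)) + b
    return max(models, key=score)
```

Subgradient descent keeps the example dependency-free; a practical system would more likely use a library solver and first scale the features (for example the centroid coordinates and the area) to comparable ranges, since linear scores are sensitive to feature scale.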

This technique is based on static gesture recognition for Devnagari Sign Language [3]. Every hand gesture in the Devnagari Sign Language database is static in nature except some of the alphabets of the Hindi language. Since these gestures involve movement of the fingers, those alphabets are not included in the database.

This system requires a webcam for input, so people need to carry a webcam wherever they go. The webcam must have good resolution, which in turn makes the system costly. The system cannot convert a sequence of signs that expresses a whole sentence, such as ‘I want to drink a glass of water’; it can convert only a single sign into the corresponding single Devnagari character.

The authors have designed a helpful system for hearing-impaired Indian people. Hearing-impaired people at many institutions who cannot communicate easily were surveyed; they can use this sign language recognition system instead. A new, efficient algorithm was therefore designed to detect their signs and convert them into text characters. After conversion, a character can be passed to a speech synthesizer for text-to-speech conversion. This system helps hearing-impaired people communicate with hearing people.

This paper proposes a novel and simple approach to hand gesture recognition of Devnagari characters for Devnagari Sign Language recognition. The proposed work focuses only on hand gesture recognition of some characters. Since hand tracking is the primary step in hand gesture recognition, hand tracking has been accomplished using various MATLAB image processing functions. In future work, the system will be extended to hand gesture recognition with both hands for the Devnagari language.

The author would like to express her gratitude to a number of people who have helped her in different ways toward the successful completion of the thesis. She takes this opportunity to express a deep sense of gratitude towards her guide and co-author Mr. Sandeep B. Patil, Assistant Professor (E&I), Faculty of Engineering & Technology, SSTC-SSGI, Bhilai, for providing excellent guidance, encouragement, and inspiration throughout the project work. Without his invaluable guidance, this work would never have been successful. She is thankful to Mr. Chinmay Chandrakar, HOD (ET&T), and Dr. P. B. Deshmukh, Director, SSTC-SSGI, Faculty of Engineering & Technology, Bhilai, for their kind help and cooperation. She would also like to thank Mr. Chandrashekhar Kamargaonkar, M.E. In-charge, SSTC-SSGI, and Mr. Sharad Mohan Shrivastava, Faculty of Engineering & Technology, Bhilai, for their kind support and helpful suggestions. She feels immensely moved in expressing her indebtedness to her parents, whose sacrifice, guidance, and blessings helped her complete the work.