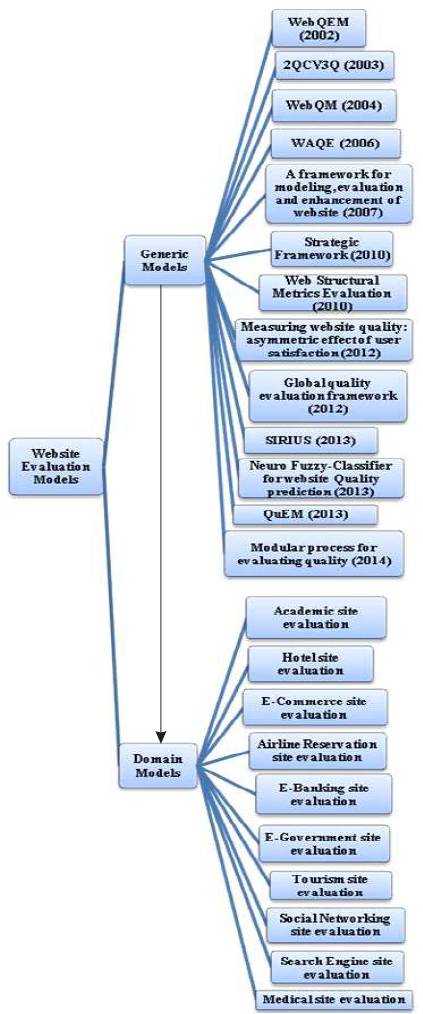

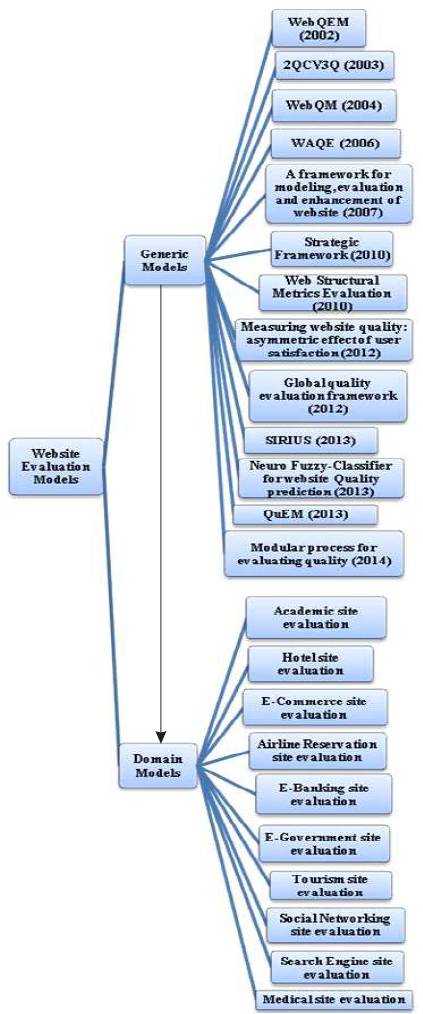

Figure 1. Classification of Web Evaluation Studies

The rapid development of the web makes it necessary to understand the main issues involved in the design, evaluation, and enhancement of a website. These issues are equally important to designers, users, and managers, and they have significant implications for researchers in the discipline of web evaluation. User satisfaction plays a major role in the success of a website. This paper discusses the issues related to web development and enhancement. An in-depth study of reputed research papers has been conducted to determine the persons involved in website design, to identify their goals, and to find the different domains of websites. Evaluation criteria and evaluation methods have also been gathered from previous studies in the discipline. The paper concludes by proposing a few guidelines that should be kept in mind during website construction and evaluation. A modular approach to website evaluation is proposed that recognizes the role of each person involved in achieving a quality website.

Today, website development is one of the leading professions in the world. People rely on web services even for their basic needs. They shop online and pay electricity and telephone bills online. They use social networking sites to entertain themselves, to share their data, and to find more friends. They access sites to play games as well as for education: online classes are organized on the web, and many sites even provide full lecture notes. People have moved away from hard copies of documents and rely on the internet because it is accessible everywhere, including on mobile devices. They can store their data on online drives and access it at any time. Providing such services has become mandatory for a website to survive in a competitive world. So, the main goal of a website is to provide enhanced usability.

The usability discipline has been evolving for the last three decades. Its main goal is to develop user interfaces that can be operated easily. Web developers can build proper interfaces only if they can measure usability against some guidelines. Very high-level usability principles were provided by Damodaran et al. (1980) and Preece (1994). The first ISO standard was then established as ISO/IEC 9126 in 1991. These standards have been enhanced from time to time as ISO 8402 (1994); ISO 9241-11 (1998); ISO 13407 (1999); ISO/TR 18529 (2000); ISO/TR 16982 (2002); ISO 15504 (2004); ISO 9241-151 (2008); and ISO/IEC 25010 (2011).

Because numerous methods, tools, and models exist to measure usability, the current scenario has become very cumbersome. None of these models has been approved as a universal standard model, so it is very difficult to decide which method should be followed. The second problem in this field has been defined by Kano et al.’s model (Kano et al., 1984). The main challenge in usability evaluation is the selection of a refined method that can assure successful measurement, which is hampered by a lack of awareness of the multiple issues involved in web design. So, this paper intends to classify and discuss the chief models expressed in the literature along with their limitations, and to address the shortage of appropriate and simple guidelines for identifying the goals of a website on a quality basis. The proposed guidelines follow a modular approach and define the relationship between the various persons involved, along with their roles in identifying and evaluating the objectives of the website. The existing website evaluation criteria and methods are elaborated in Section 2. The outcomes of applying these criteria and methods, i.e. models, are presented in Section 3. Finally, this paper recommends certain ethics along with a general strategy to be followed during website construction and evaluation.

Various academicians have proposed their own evaluation criteria, methods, and even models to evaluate websites. All of these are goal oriented as well as domain oriented. For systematic study, the research in this discipline has been divided into two classes: one class comprises general models, whereas the other encompasses studies in ten particular domains. To determine the reputed studies in the field of web evaluation, the research methodology has been defined in two steps. The first step determines the different domains of websites from related studies and retrieves the different evaluation studies. The second step analyzes the web-evaluation approaches followed by these studies. Zviran et al. (2006) classified websites on the basis of traffic volume into five categories: informational, shopping, e-banking, trading, and business to business. Lee and Koubek (2010) classified sites according to the usability aspect into four categories: entertainment, information, communication, and commerce. Taking the functionality and services offered by websites to users as the deciding factors, the websites have been divided into ten domains: academic, hotel, e-commerce, airline reservation, e-banking, e-government, tourism, social, search engine, and medical sites. The generic models and web domains selected for in-depth study are portrayed in Figure 1.

Academicians have enriched the literature with many criteria to evaluate websites. Design aspects have been well defined and assessed qualitatively by Cebi (2013b) for predicting the quality of shopping sites. Torrente et al. (2013), however, divided ten aspects into eighty-three criteria. Zhu (2004) used three quality dimensions with thirteen evaluation criteria to evaluate web source quality. Aladwani and Palvia (2002) assessed four aspects, viz. technical adequacy, specific content, content quality, and web appearance, measuring twenty-five parameters. Bai et al. (2008) evaluated responsiveness, competence, information quality, empathy, web assistance, and callback systems. The criteria taken for evaluation depend upon the goal of the study as well as the methods and techniques used for evaluation.

Depending upon the type of assessor involved, methods are divided into two categories. In the first category, evaluation is performed by experts who check the interface against an existing set of design principles (Alsmadi et al., 2010; Malhotra and Sharma, 2013; Yen et al., 2007; Zhu, 2004). In the other category, users' opinions are taken to estimate the usability criteria (Cebi, 2013b; Chiou et al., 2010; Kincl and Strach, 2012; Mavromoustakos and Andreou, 2006). The main method adopted by many studies is heuristic evaluation (Nielsen and Molich, 1990; Petrie and Bevan, 2009). Experts decide on a set of heuristics for checking the selected criteria, and an inspection process follows. Heuristic evaluation thus helps developers to correct problems during the design process and to evaluate design quality. Other methods are performed by potential users and involve surveys using questionnaires, interviews, case studies, etc. (Chinthakayala et al., 2004; Joo et al., 2011). These are basically employed to check websites against managerial goals, so that organizations can outperform their competitors, and to evaluate operational quality features.

Some models have elucidated a step-by-step procedure for the evaluation of a website (Becker et al., 2012), whereas a few models have been framed according to ISO guidelines but are too general to implement directly (Olsina and Rossi, 2002). So, two types of models exist in the literature. The first category involves general models, which describe a particular strategy to follow and can be applied to any site. The other category comprises domain-oriented models, which are practical in nature and work on a specific objective. These models are derived in refined form from the first-category models and are dynamic in nature.

Of the two types of models, the first are those that describe a general layout for website evaluation and can be applied to any website. These models are the milestones of web evaluation, as domain-specific models cannot be constructed without them. They are also competing for standardization. Because they are domain independent, they are not very dynamic and rarely become obsolete. The main models under this category have been summarized in Table 1. Generic models are mostly designed according to software engineering principles and prescribe a limited number of steps that seem easy. In reality, they impose many decision-making problems on the analyst during implementation: to evaluate a website, one needs to gather requirements from various types of unknown users, which is a very cumbersome task (Denning et al., 1989). There is also a lack of proper tools, activities, and techniques to fully implement them.

The prescribed methodology is used to systematically assess the characteristics, sub-characteristics, and attributes that influence product quality. Its main aim is to classify web metrics for web evaluation. The model can be applied to different domains, as its prerequisite is to define quality attributes, sub-attributes, and measuring indicators. It can be applied from the developer, user, and managerial points of view. It focuses on user-perceptible product features such as navigation, interface, and reliability rather than product attributes such as code quality or design. It can discover features that are absent from the website. Requirements that are poorly implemented from the interface design and implementation point of view, and drawbacks or problems with navigation, accessibility, search mechanisms, content, reliability, and performance, can be easily outlined. However, the model becomes complex to implement, as too many metrics are involved with weight discrepancies. Furthermore, the metrics have to be decided by the web designer at the time of implementation (Olsina and Rossi, 2002).

The 2QCV3Q model helps developers to evaluate website quality from both the owner and user viewpoints. It highlights elements that, when suitably combined, permit thorough site assessment and also guide website development. Site owners and developers can therefore use this model in every project phase, which ensures that the developing site matches the constantly updated requirements to achieve total quality. By its nature, the model's application cannot be completely automated; however, numerous tools can effectively support it. Developers can also use 2QCV3Q to design an integrated support environment. For its implementation, however, quantitative metrics and detailed discussion are not provided (Mich et al., 2003).

WebQM has been proposed to evaluate web resource quality by using some critical features such as autonomy and dynamics, openness and heterogeneity of contents. It also considers the feasibility of the model, its effectiveness, and its fitness for web quality issues. This model has been further formally specified and validated (Zhu, 2014).

The proposed model assesses the quality of a web application by taking the quality factors of ISO 9126. The developed questionnaires have been administered to internal experts as well as end users, so that quality can be evaluated from two different perspectives, i.e. from the organization's goals and objectives, and from user-perceived quality. The collected data have been analyzed using the statistical measures of frequency and median. The relative importance of certain features of the web application can then be easily determined, which facilitates enhancement of the application (Mavromoustakos and Andreou, 2006).
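The frequency-and-median analysis described above can be illustrated with a minimal sketch. The factor names are taken from ISO 9126, but the 5-point rating scale, the response values, and the `summarize` helper are assumptions made purely for illustration; they are not data or code from the cited study.

```python
from collections import Counter
from statistics import median

# Hypothetical 5-point ratings (1 = poor, 5 = excellent) for three ISO 9126 factors.
responses = {
    "functionality": [4, 5, 3, 4, 4, 2, 5],
    "usability": [3, 3, 4, 2, 3, 4, 3],
    "reliability": [5, 4, 4, 5, 3, 4, 4],
}


def summarize(scores):
    """Return the frequency of each rating and the median rating."""
    frequency = dict(sorted(Counter(scores).items()))
    return {"frequency": frequency, "median": median(scores)}


summary = {factor: summarize(scores) for factor, scores in responses.items()}

# Order factors by median so that the weakest-rated factors come first.
ranked = sorted(summary, key=lambda factor: summary[factor]["median"])

for factor in ranked:
    print(factor, summary[factor])
```

Ordering the factors by median rating is one simple way to see which features respondents rate lowest and which might therefore be prioritized for enhancement.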

A three-layered framework (application analysis layer, generic website design layer, and graph modeling layer) with the necessary mappings between the layers has been presented. It can be applied across different domains or sectors. While current practice is mostly qualitative and ad hoc, formal modeling methods and analytical techniques have been adopted here to evaluate and improve website performance. The framework has not been designed to predict usability, as its central measures are content and design only. Both investigation and validation of the categorization of website design objectives and constraints are required at the conceptual as well as the empirical level (Yen et al., 2007).

This strategic framework is based on a review of the web evaluation literature from 1995 to 2006. In this study, twelve unified factors have been presented together with the percentage of studies supporting each. Evaluation criteria with five factors and various sub-factors have been presented, and finally a five-step web evaluation process has been identified for e-commerce studies. Very limited research has explored the strategic issue in website evaluation. Practical implementation of the model has not been demonstrated in this study; only the conceptual method has been presented, so a web analyst needs high expertise to implement it (Chiou et al., 2010).

The study has recommended navigability features in order to evaluate the popularity of a website. The number of inlinks, i.e. the links received by the site from other sites, and outlinks, which are hyperlinks originating within a website, have been measured to evaluate popularity. An HTML parser has been developed to measure some features that evaluate structural complexity (Alsmadi et al., 2010).
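As a rough illustration of parser-based link measurement, the sketch below counts the hyperlinks that originate on a single page and separates those pointing within the same host from those pointing elsewhere. It uses Python's standard `html.parser` module; the `LinkCounter` class, the sample markup, and the example URLs are hypothetical and do not reproduce the parser built in the cited study. Counting links received from other sites would additionally require crawling those sites or using external link data, which is outside this sketch.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCounter(HTMLParser):
    """Collect hyperlinks on a page and split them into internal and external."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.internal = []
        self.external = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Ignore missing targets, in-page anchors, and non-navigational schemes.
        if not href or href.startswith(("#", "mailto:", "javascript:")):
            return
        target = urljoin(self.page_url, href)  # resolve relative links
        if urlparse(target).netloc == self.host:
            self.internal.append(target)
        else:
            self.external.append(target)


# Hypothetical page content used only to exercise the parser.
sample_html = """
<html><body>
  <a href="/about.html">About</a>
  <a href="courses/index.html">Courses</a>
  <a href="https://partner.example.org/">Partner</a>
  <a href="#top">Back to top</a>
</body></html>
"""

counter = LinkCounter("https://www.example.edu/index.html")
counter.feed(sample_html)
print(len(counter.internal), "internal links;", len(counter.external), "external links")
```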

This study gives more weight to the user's point of view and hence identifies content and navigation as the key ingredients of a quality website. It suggests that web designers and website evaluators should consider these attributes more closely. The study also aims to identify practical measurement tools that are easy to administer. Various attributes have been compared and contrasted according to usability guidelines, with the upshot that content and navigation are the key ingredients for evaluation. Websites of various business schools have been evaluated by assigning a random yet typical task, and the responses before and after completion of the task have been calibrated and presented graphically (Kincl and Strach, 2012).

Three quality criteria, i.e. content, service, and technical, have been taken along with sub-criteria as parameters for the quality evaluation of a website. The evaluation results can be used for comparison or enhancement of the site (Rocha, 2012).

This is a recent general method whose aim is to evaluate the usability of a website according to its type. The very first step in this method is to find the category of the website according to some aspects and criteria. The method then decides the evaluation values at the lower level. After that, the weighing criterion for these values is determined, which is distinct for each type of website. In the final step, usability metrics are reported that help analysts to analyze the site well. A notable property of this method is that its layout is general, but it becomes domain specific in operation. Its main limitation is the need for in-depth requirement gathering and decision making during implementation. Another constraint is that it evaluates only usability; even so, it measures accessibility first and then usability, as most researchers think that usability can be increased only if the site is more accessible to its users (Medina et al., 2010; Petrie and Kheir, 2007; Watanabe, 2009; Torrente et al., 2013).

The main aim of this model is to predict whether the quality of a website is good or bad. It uses a Fuzzy Inference System based on two techniques, ANFIS-Subtractive clustering and ANFIS-FCM, and validates these techniques using pixel-awards data. Only nine parameters are used for web evaluation, and all of them are rooted in webpage structure. This model is complex to use (Malhotra and Sharma, 2013).

This model places its main emphasis on the weighing criteria of low-level parameters by using the Decision Making Trial and Evaluation Laboratory (DEMATEL) method. By applying fuzzy set theory, it evaluates the relative degree of interaction among parameters, which helps in applying weighing constants with more accuracy (Cebi, 2013a). It also uses the Choquet integral to evaluate the expected design quality of the website. The model is presented in applied form and is finally used to evaluate a shopping site. It gives no indication of how to measure lower-level metrics, and it depends heavily upon experts’ decisions in linguistic form (Cebi, 2013b).
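To make the aggregation step more concrete, the following is a minimal sketch of the discrete Choquet integral, which combines criterion scores using a fuzzy measure defined over groups of criteria rather than a single weight per criterion. The criterion names, scores, and measure values are hypothetical and are not taken from Cebi's studies; the function only assumes that the measure is given for every coalition it encounters.

```python
def choquet_integral(scores, measure):
    """Discrete Choquet integral of `scores` with respect to a fuzzy measure.

    scores:  dict mapping criterion name -> score
    measure: dict mapping frozenset of criterion names -> value in [0, 1],
             where the set of all criteria has measure 1
    """
    ordered = sorted(scores, key=scores.get)  # criteria by ascending score
    total = 0.0
    previous = 0.0
    for i, criterion in enumerate(ordered):
        # Coalition of all criteria whose score is at least the current one.
        coalition = frozenset(ordered[i:])
        total += (scores[criterion] - previous) * measure[coalition]
        previous = scores[criterion]
    return total


# Hypothetical design-quality scores and fuzzy measure for two criteria.
scores = {"navigation": 0.6, "layout": 0.9}
measure = {
    frozenset(["navigation"]): 0.5,
    frozenset(["layout"]): 0.3,
    frozenset(["navigation", "layout"]): 1.0,
}

design_quality = choquet_integral(scores, measure)
print(round(design_quality, 2))
```

When the measure is additive (the value of each group equals the sum of its members' values), the Choquet integral reduces to an ordinary weighted average; non-additive measures allow interaction among criteria to be expressed.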

The model has been designed by addressing quality gaps from five quality modules, i.e. the innovation gap from unexpected quality, the knowledge gap from requested quality, the policy gap from expected quality, the user gap from quality in use, and the communication gap from perceived quality. Different evaluation methods are involved in evaluating these modules. The main aim of the model is to reduce the gap between the evaluated results for these five modules by enhancing the site features. In addition, priorities can be assigned to these quality modules and a linear process can then be adopted for their evaluation. This model computes the total quality but needs a lot of previous data and complex methods to implement (Mich, 2014).

The second class comprises process models that are very easy to implement, but the way they prescribe assumptions, instructions, metrics, tools, and techniques (Torrente et al., 2013; Triacca et al., 2004) makes them domain and task specific, and hence they lose generality. In the ever-changing technical world, they become obsolete within a short time in their own domain. Because the web keeps evolving, new domains are created very frequently, and new models are needed for them. All these models, however, are developed on generic model guidelines as a base with minor amendments. So, there is a trade-off between the development of generic models and domain models. The core fact is that none of these models has been accepted as the standard process model for website evaluation.

These models are basically a polished form of generic models. When generic models are applied in a specific domain, their features are reorganized according to the objectives of evaluation. Some evaluation metrics are not needed or preferred for a site in one domain, whereas for another type of site the same metric can be the most significant. For example, high-quality images can be the top-priority requirement for a shopping site, where the final product needs better visibility than on a banking or educational site. Although the domain of websites is an emerging field, some evaluation studies in specific domains are explained below.

Joo et al. (2011) have devised a model to evaluate the usability of academic library websites based on a literature review and expert consultation. The model measures three parameters with sub-parameters from a user survey and then applies various statistical techniques for validation. Another model, proposed by Afonso et al. (2012), measures weblog data, i.e. the number of unique visitors, total visitors, hits, and bytes accessed, to predict the usability of a higher school of education. Some studies aim to evaluate e-learning (Buyukozkan et al., 2007; Hwang et al., 2004), while others evaluate just one or two parameters, i.e. service quality (Tate et al., 2007) and usability (Gullikson et al., 1999). One study has proposed evaluation criteria (Santos, 2015), whereas another has found the priorities among them (Suwawi et al., 2015).
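The weblog measures mentioned above can be sketched as follows, assuming the server writes its access log in the Common Log Format. The sample log lines, the `summarize_log` function, and the use of distinct client hosts as an approximation of unique visitors are all assumptions for illustration; the cited study's actual tooling is not described in this paper. Counting visits (sessions) would additionally need a session timeout rule, which is omitted here.

```python
import re
from collections import Counter

# Common Log Format: host ident user [time] "request" status bytes
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def summarize_log(lines):
    """Return hits, unique visitors (distinct hosts), and total bytes served."""
    hits = 0
    total_bytes = 0
    hosts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip malformed lines
        hits += 1
        hosts[match["host"]] += 1
        if match["bytes"] != "-":  # "-" means no response body was sent
            total_bytes += int(match["bytes"])
    return {"hits": hits, "unique_visitors": len(hosts), "bytes": total_bytes}


# Hypothetical log entries using documentation-reserved IP addresses.
sample_log = [
    '192.0.2.10 - - [10/Oct/2012:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '192.0.2.10 - - [10/Oct/2012:13:55:40 +0000] "GET /logo.png HTTP/1.1" 200 5120',
    '198.51.100.7 - - [10/Oct/2012:14:02:11 +0000] "GET /courses.html HTTP/1.1" 200 4010',
    '198.51.100.7 - - [10/Oct/2012:14:02:15 +0000] "GET /missing.html HTTP/1.1" 404 -',
]

stats = summarize_log(sample_log)
print(stats)
```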

The model by Alhelalat et al. (2008) has tested the quality, usability, and benefits of hotel websites. The resultant model demonstrates the interrelationships among the main conceptual parts, including the specific hotel website features. Another study, conducted by Baloglu and Pekcan (2006), has used binary variables to evaluate the design and marketing characteristics of hotel websites by content analysis, but it is limited in that it cannot fully express the performance of each criterion.

Many studies have been conducted in this domain. The chief goal is to evaluate the site from the business-to-consumer point of view, and multiple models exist in the literature for this purpose. A set of refined metrics developed by Gabriel (2007) can be easily adopted by designers and developers, but the model is time-consuming as it determines too many metrics. Another model, proposed by Sun and Lin (2009), has employed 3 quality dimensions and 12 parameters to evaluate online shopping sites for competitive purposes. SITEQUAL extracts nine dimensions from fifty-four criteria to measure four factors (Yoo and Donthu, 2001). In another study by Orehovacki et al. (2013), five dimensions have been used for evaluating a commercial website, and exploratory factor analysis has been performed for factorial structures. Clickstream and data mining approaches have been used to study the performance of relationship marketing activities (Schafer and Kummer, 2013), while Bayesian networks along with ISO 9126 quality factors have been used by Stefani and Xenos (2008) to evaluate website quality.

The model presented by Naeimeh et al. (2013) helps airline website decision makers to understand the desired quality from the customer's point of view. Three sets of ESERVQUAL criteria, i.e. performance, information, and online-service quality, have been chosen following a review of prior airline website studies in order to map all three levels. In another work (Shchiglik and Barnes, 2004), an instrument to evaluate website quality has been developed and an online survey has been conducted to validate it.

This domain is enriched with many studies. A recent study in Poland compared banking websites to determine which bank is best in e-services (Chmielarz and Zborowski, 2016), whereas a study in Tunisia analyzed the different facilities that can be availed through internet banking (Achour and Benesdrine, 2005). In Nigeria, bank sites have been evaluated for functionality, interactivity, and effective communication (Chiemeke et al., 2006); these parameters have also been analyzed with relationship diagrams. Wenham and Zaphiris (2003) analyzed different methods to determine an effective user interface evaluation method, whereas Kaur and Dani (2013) evaluated structural complexity to reveal the relationship between navigation and popularity.

A lot of research has been done in this domain with different aims. Different instruments have been designed to measure usability and user satisfaction by using different methods (Barnes and Vidgen, 2006; Garcia et al., 2005; Henriksson et al., 2007; Jati and Dominic, 2009; Papadomichelaki and Mentzas, 2012; Verdegem and Verleye, 2009), whereas some researchers (Barnes and Vidgen, 2004) have focused on particular sub-domains such as e-taxing and audit official sites. Grimsley and Meehan (2007) have measured the public value of sites, whereas Alomari et al. (2012) have determined the critical factors for the adoption of e-government websites. Sivaji et al. (2011) have determined the effectiveness of the heuristic evaluation technique for evaluating an e-government website.

An excellent review covering ten years has been done by Law and Buhalis (2010) to describe the different evaluation criteria and techniques in this domain. Different researchers have taken different objectives in evaluating tourism sites. Corigliano and Baggio (2006) have presented the bootstrapping method for evaluation, whereas Bastida and Huan (2014) have evaluated four destination brands by heuristic evaluation. Bauernfeind and Mitsche (2008) have developed an application based on Data Envelopment Analysis (DEA) for evaluation, whereas some researchers (Chiou et al., 2011; Ip et al., 2011; Lu and Lu, 2004; Lu et al., 2007; Morrison et al., 2005) have worked on evaluation frameworks and measurement scores.

Different evaluation criteria and methods have again been adopted in this domain. Keenan and Shiri (2009) have evaluated the sociability features of four social sites, whereas Greene et al. (2011) have assessed content posted by communities regarding diabetes. Some researchers have studied the relationship between tie strength, trust, homophily, and normative and informational interpersonal influence using hypotheses (Chu and Kim, 2011), whereas others have worked on on-site optimization for maximal usage (Korda and Itani, 2013). Some studies have been performed on motivation factors (Lin and Lu, 2011), enhancement features (Silius et al., 2011), and metrics for evaluation quality (Ellahi and Bokhari, 2013; Neiger et al., 2012).

Very few evaluation studies exist in the literature, as the main concern of this domain is to optimize searchability across World Wide Web (WWW) databases. Asmaran (2016) has compared three search engines in terms of the quality of search results and the speed of providing them. Another study by Vaughan (2004) has proposed measurement criteria to evaluate the performance stability of a search engine, whereas Jansen and Spink (2004) have compared search behaviour in different regions.

Medical sites cover a very wide domain but are mainly oriented towards providing content regarding precautions and therapies for various health issues. Hence, Martins and Morse (2005) have evaluated content quality on the internet, whereas Perez-Lopez (2004) has evaluated the popularity of a website along with the content it provides. Moreno et al. (2010) proposed a methodology for evaluating the quality of websites, and Howitt et al. (2002) have described a tool and evaluated 108 randomly selected UK general practice websites.

It can be concluded that many studies have been performed to evaluate sites in different domains, each with a separate objective and a varying set of evaluation criteria and methods. So, a lot of heterogeneity exists in the literature, and each study has been fully oriented towards its specific mission. Because of the geographical and cultural differences among users, an existing domain model cannot be applied everywhere, even to websites in the same domain with a similar evaluation objective.

The issues that should be kept in mind while designing and evaluating a website are discussed in this section. The first task is to determine the objectives of the website and categorize them. The next step is to determine the persons involved and their roles in designing the website and evaluating its quality. After that, quality objectives are related to the different persons so that a modularised approach can be followed and responsibilities for providing a quality website can be assigned to individuals.

Determining the website objectives is the very first task, and a cumbersome one. It becomes easier if the objectives are categorized in terms of the quality aspects to be achieved. Owners of the website have complete responsibility for defining its objectives, which should be partitioned into different quality aspects. The major categorization involves three types of quality, which are as follows.

Information is the basis for a website to exist. Owners of the site have the sole responsibility for deciding the contents and providing them to web developers. Contents must be structured, accurate, timely, and complete.

The web developers share the responsibility once they have decided the layout for presenting the information to the user. This layout must be communicated to and approved by the site owners. Experienced web developers can provide the expected design quality of the website by recognizing its objectives and domain. The next priority is to consider the end users of the site and their age groups; e.g. shopping sites are meant for all age groups, but entertainment and game sites are mostly used by younger individuals. Design quality also acts as a bridge between information quality and the operational quality achieved by users.

Operational quality is concerned with the output quality of the site, as it is the quality that an end user receives. Information quality and design quality can be estimated before the final site is built, but operational quality can be predicted only partly, as the main assessors of this quality measure are the end users. Mich (2014) proposed a well-defined strategy to measure this quality by categorizing it as unexpected, requested, expected, and perceived quality as well as quality in use.

Determining the different persons who work together during the design and evaluation of the site is the next step in the process. By recognizing their roles, the goals of the website must be partitioned and shared among these personnel. These are the persons who actually measure website quality.

Website owners are the persons who originate the need for a website. A website is designed only if someone has a need for it. So, before designing, it is necessary to determine the objectives of the website from the owner's point of view. The main aims are to convey information online and to provide some services.

Website developers are the persons who actually design the website. They decide the layout of the home page as well as the other pages. They are responsible for fulfilling the design quality objectives. They write the script for the website.

To test the website, website evaluators first determine the objectives of the website and then the objectives of evaluation. The evaluation may target the design quality during the development phase or the site after implementation. So, they recognize the actual need and refine the objectives of evaluation accordingly. They also decide the criteria, methods, and weighing procedures to follow.

Two types of users exist. The first type are administrators, who are responsible for providing services to end users; the others are the end users themselves. The end users are the persons who should be most satisfied with the perceived quality of the website.

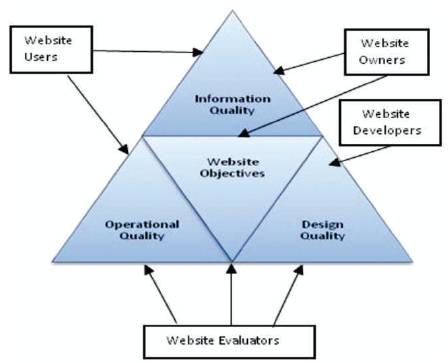

Determining the responsibilities for providing and evaluating website quality is the next step in the process. By recognizing the roles of the different personnel in website development, the relationships for attaining and measuring the various website quality objectives have been presented in Figure 2. Website owners can measure the overall website objectives, as they are the persons who originate the need for the site. They are also the content providers and hence are directly responsible for the current information quality. Website developers are responsible for the design quality of the website. They take the information and objectives from the website owners and write the script for the website by structuring its layout. Website evaluators can be separate professionals or the website developers themselves; large organizations prefer professionals. They are the persons who actually evaluate the site from the objectives, design, and operational points of view. They can instruct the web developers to enhance the design quality in order to achieve the best quality in use. End users can provide feedback directly by rating the site as well as indirectly by gaining or losing interest in the site. They can indicate the information quality as well as the operational quality by filling in online questionnaires.

Figure 2. Relationship between Different Personnel vs Quality Objectives

Determining the criteria and method for evaluation is the fourth step in the process. When the objectives are clear, evaluators have the responsibility to study the existing criteria and extract the dimensions for evaluating the various quality measures. They can obtain data from weblogs as well as from questionnaires or interviews to assess operational quality. To predict design quality, web crawlers and parsers can be used. Determining a proper weighing strategy is the next responsibility of the evaluators. As the parameters are not equally important, proper weighing is necessary to attain the final index value. Existing weighing methodologies can be studied and applied, or the weighing criteria can be defined by persons drawing on their expertise, who can be website professionals or experienced users. For evaluation, the Delphi method, brainstorming, fuzzy TOPSIS, or fuzzy DEMATEL can be used.
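The role of weighing in reaching a final index value can be illustrated with a minimal sketch. It uses a simple normalized weighted average, which is an assumption chosen for clarity and not one of the methods named above (Delphi, fuzzy TOPSIS, fuzzy DEMATEL); the scores, weights, and the `quality_index` function are hypothetical.

```python
def quality_index(scores, weights):
    """Weighted average of criterion scores; weights are normalized to sum to 1."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[c] * weights[c] for c in scores) / total_weight


# Hypothetical scores on a 0-10 scale for the three quality measures,
# with hypothetical expert-assigned weights.
scores = {"information": 8.0, "design": 6.0, "operational": 7.0}
weights = {"information": 5, "design": 3, "operational": 2}

index = quality_index(scores, weights)
print(round(index, 2))
```

In practice the weights would come from whichever elicitation method the evaluators adopt; the aggregation step itself stays the same once the weights are fixed.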

Interpretation and benchmarking of the final performance figures is the final and necessary step for the enhancement of a website. Guidelines can be provided to site owners to improve content quality and to web developers to enhance design quality, so that the overall quality of the website can be improved.

The above five-step strategy is equally applicable to every website regardless of its domain, size, and users. In future, applications of this strategy will be verified in different domains of websites.

This study has provided insight into the various issues that must be considered while evaluating a website. First of all, the goals of evaluation must be clear and should be distributed among the three quality measures. The distribution need not be strictly discrete, as goals can overlap across the three quality measures. Goals of evaluation should also be distributed among the different personnel involved in the discipline. Except for website users, all other persons should identify the criteria and methodology to be adopted while evaluating the site. The criteria and methodology for usability evaluation should be agreed upon by the website owner and the website developer. All the evaluated results should be brought together during interpretation to eliminate the gaps between the different modules of quality. In future work, sub-themes can be developed for predicting information quality, design quality, and operational quality. Other measures, such as usability and aesthetics, can also be derived from the above quality measures. The proposed strategy should be exercised on academic, commercial, tourism, airline reservation, and e-government websites.

Website quality evaluation is one of the current and active disciplines in the field of software engineering. Many methodologies and strategies have been provided by academicians, but a universal model has not yet been attained. While reviewing the literature, two types of models have been observed in the field of website evaluation. The first is the general model, which can be applied to any website, whereas the other is applied to domain-oriented studies conducted for some specific objective. After extracting the limitations of previous studies, a general methodology for the evaluation of a website has been discussed. The main emphasis has been placed on the role of the various personnel who build and assess the website. The responsibilities can be distributed, and quality evaluation can be easily modularised. By selecting proper criteria and evaluation methods, a quality index can be achieved in each module. The evaluation process should be prioritized by focusing on the company's goals rather than those of the web consultant or agency. This strategy also facilitates better communication between web engineers, web analysts, and organization managers.