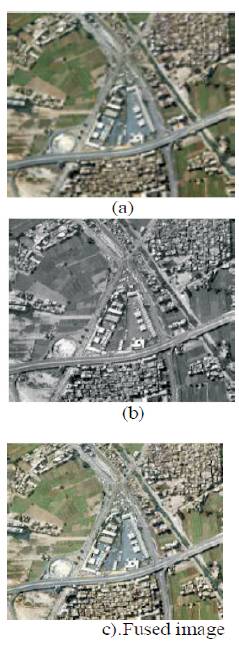

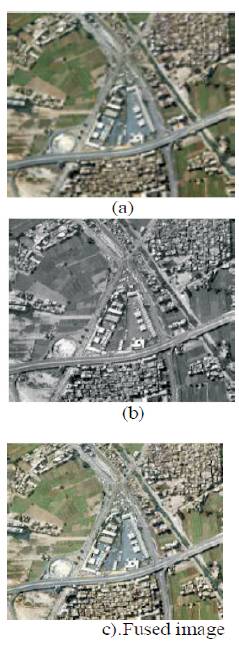

Figure 1. Commercial Earth Observation Satellite Fused image

Image fusion is the technique in which two or more images of the same scene are merged so that the resulting image is better suited for interpretation. The fused image retains the most useful information and has the characteristics of each input image. Various standard methods used for image fusion produce good results spatially but introduce spatial noise. To remove this distortion and enhance the quality of the fused image, various fusion schemes have been proposed. In this paper, the authors focus on fusion methods based on various standard techniques and compare them with the wavelet transform used for image fusion. The wavelet transform is widely used in image fusion because it provides better results in the temporal domain as well as the spatial domain. The performance of a fusion technique is measured by Mean and Standard Deviation, Entropy, Average Gradient, Peak Signal to Noise Ratio (PSNR) and Correlation Coefficient.

With the development of new imaging sensors arises the need for a meaningful combination of all employed imaging sources. The actual fusion process can take place at different levels of information representation; a generic categorization, sorted in ascending order of abstraction, is: signal, pixel, feature and symbolic level. Image fusion is the technique in which two or more images of the same scene are merged together. The input images are obtained from different types of sensors and therefore have different geometrical structures. The main aim of an image fusion technique is to retain all useful information from the input images.

The authors use the following examples to illustrate the purpose of image fusion:

Figure 1 shows the MS and PAN images provided by IKONOS, a commercial earth observation satellite, and the resulting fused image [1].

In poor weather conditions such as heavy rain or fog, helicopter navigation becomes very difficult for the pilot [16]. To help the pilot in such conditions, the helmet is fitted with two image sensors, one for low light and one for infrared detection [16]. The pilot can switch between the two sensors. When image fusion is applied, the pilot can clearly identify the path and objects.

When examining a human body, a doctor needs to take multiple pictures of it, usually with different sensors. To examine all the results correctly, these images need to be merged; hence image fusion is needed for this purpose.


This work is organized as follows: Section 2 presents a brief description of image fusion techniques and genetic algorithms, Section 3 describes the performance measures, Section 4 compares the various fusion techniques, and the conclusion is presented in the last section.

Genetic algorithms are based on the theory of natural selection given by Charles Darwin. They have proved very useful for optimization over large problem spaces. The fittest individual is used as the solution to the optimization problem [3]. In a genetic algorithm, only the fittest individuals are allowed to breed.

In the digital world, genetic algorithms may be used for image enhancement and segmentation. Natural selection cannot be applied directly, so genetic material is replaced by a string of bits and natural selection by a fitness function. Crossover and mutation operators represent breeding.
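The bit-string formulation above can be sketched as follows. This is a minimal illustration, not the method of any cited work: the fitness function (counting 1-bits), the population size, the mutation rate and all function names are assumptions chosen only to make the loop concrete. In an image application the fitness function would instead score image quality.

```python
import random


def fitness(bits):
    # Hypothetical fitness: number of 1-bits. A real application would
    # score the image produced by the parameters encoded in `bits`.
    return sum(bits)


def crossover(a, b, rng):
    # Single-point crossover: head of one parent, tail of the other.
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(bits, rate, rng):
    # Flip each bit independently with probability `rate`.
    return [1 - b if rng.random() < rate else b for b in bits]


def run_ga(n_bits=16, pop_size=20, generations=30, rate=0.02, seed=0):
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fitness, reverse=True)
        parents = pop[: pop_size // 2]   # only the fittest half may breed
        children = [parents[0]]          # keep the best individual unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rate, rng))
        pop = children
    return max(pop, key=fitness)
```

Because the best individual is always carried over unchanged, the best fitness in the population can never decrease from one generation to the next.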

Before the input images are fused, they have to undergo the following preprocessing steps:

1.2.1 Image registration

1.2.2 Image re-sampling

1.2.3 Histogram matching

Images obtained from different sensors are not geometrically aligned, i.e. they may be distorted by rotation, shearing or twisting. Image registration (IR) is the process of aligning different images into the same coordinate system. IR takes a given image as the reference and aligns the distorted images to it before fusion.
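As a minimal sketch of registration, the example below handles only the simplest case: the two images differ by a whole-pixel translation, and the best shift is found by exhaustive search over a small window. Rotation and shearing are not handled. The function names, the mean-squared-error criterion and the `max_shift` parameter are assumptions made for illustration.

```python
def shift_image(img, dy, dx, fill=0):
    """Shift img down by dy rows and right by dx columns, padding with fill."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sy, sx = y - dy, x - dx
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def register_translation(reference, moving, max_shift=3):
    """Return the (dy, dx) shift of `moving` that best matches `reference`.

    Assumes max_shift is smaller than both image dimensions, so that the
    two images always overlap.
    """
    h, w = len(reference), len(reference[0])
    best_score, best_shift = None, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, count = 0.0, 0
            for y in range(h):
                for x in range(w):
                    sy, sx = y - dy, x - dx
                    if 0 <= sy < h and 0 <= sx < w:
                        err += (reference[y][x] - moving[sy][sx]) ** 2
                        count += 1
            score = err / count  # mean squared error over the overlap
            if best_score is None or score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

The returned shift can be passed to `shift_image` to bring the second image into the coordinate system of the reference before fusion.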

Image re-sampling is used to alter the size of an image, i.e. to change its width and height. It is of two types: (a) up-sampling, which increases the size of an image, and (b) down-sampling, which decreases the size of an image. The re-sampling process should not change the spatial characteristics of an image, as doing so produces spatial distortion.
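A minimal sketch of both cases, assuming nearest-neighbour interpolation (one of several possible schemes, chosen here only because it is the shortest to write). The function name and the list-of-rows image representation are assumptions.

```python
def resample_nearest(img, new_h, new_w):
    """Resize a 2-D image (list of rows) with nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    return [
        [img[y * h // new_h][x * w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


small = [[1, 2],
         [3, 4]]
up = resample_nearest(small, 4, 4)    # up-sampling: each pixel is repeated
down = resample_nearest(up, 2, 2)     # down-sampling: every second pixel is kept
```

Smoother schemes such as bilinear or bicubic interpolation are usually preferred in practice because nearest-neighbour sampling produces blocky edges.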

Histogram matching is the process of adjusting the color of two images using their histograms. Histogram matching is necessary in image fusion because the images are obtained from two different sensors with different responses.
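One common way to match histograms is to map each grey level of the source image to the reference level with the same cumulative frequency. The sketch below assumes single-channel images with integer values in the range 0 to `levels - 1`; the function names are hypothetical.

```python
def cumulative_distribution(pixels, levels=256):
    """Cumulative distribution function of integer pixel values."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running / len(pixels))
    return cdf


def match_histogram(source, reference, levels=256):
    """Remap `source` so that its histogram resembles that of `reference`."""
    src_cdf = cumulative_distribution([v for row in source for v in row], levels)
    ref_cdf = cumulative_distribution([v for row in reference for v in row], levels)
    mapping, j = [], 0
    for i in range(levels):
        # Smallest reference level whose CDF reaches the source CDF.
        while j < levels - 1 and ref_cdf[j] < src_cdf[i]:
            j += 1
        mapping.append(j)
    return [[mapping[v] for v in row] for row in source]
```

For color images the same mapping would typically be applied to each channel, or to the intensity component only.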

The process of image fusion extracts the useful information from each of the given images and fuses it to form a resultant image whose quality is superior to that of any of the input images. Image fusion methods can be broadly classified into two groups: (1) spatial domain fusion methods and (2) frequency domain fusion methods [4].

In spatial domain techniques, we deal directly with the image pixels. The pixel values are manipulated to achieve the desired result. In frequency domain methods, the image is first transformed into the frequency domain, i.e. the Fourier transform of the image is computed first. All the fusion operations are performed on the Fourier transform of the image, and then the inverse Fourier transform is applied to obtain the resultant image. Image fusion is applied in every field where images need to be analysed. Techniques such as PCA and IHS work in the spatial domain, but this kind of fusion causes noise in the image. One main disadvantage of current image fusion techniques is that most algorithms simply merge the image data together without weighing or analysing the source images, and some techniques result in information loss and a change in the spectral characteristics of an image [5].

Therefore, compared with the ideal output of the fusion, these methods often produce poor results. This plays a very important role in optical remote sensing if the images to be fused were not acquired at the same time [6]. Over the past decades, various techniques have been proposed. The wavelet transform has become a very useful tool for image fusion. It has been shown that wavelet-based fusion techniques outperform the standard fusion techniques in spatial and spectral quality, especially in minimizing colour distortion [2]. Hybrid techniques involving IHS produce results superior to those of other standard methods; however, they are more complex.

Some of the other techniques are as follows [2].

It is a well-documented fact that regions of images that are in focus tend to have higher pixel intensity. This algorithm is a simple way of obtaining an output image with all regions in focus [5]. The values of the pixel P(i, j) in the two images are added, and the sum is divided by 2 to obtain the average. The average value is assigned to the corresponding pixel of the output image. This is repeated for all pixels:

$$K(i, j) = \frac{X(i, j) + Y(i, j)}{2} \tag{1}$$

where X(i, j) and Y(i, j) are the two input images.
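The averaging rule can be written directly as code. This is a minimal sketch that assumes two registered images of equal size, stored as lists of rows; the function name is hypothetical.

```python
def average_fusion(x, y):
    """Fuse two equally sized images by averaging corresponding pixels."""
    return [
        [(a + b) / 2 for a, b in zip(row_x, row_y)]
        for row_x, row_y in zip(x, y)
    ]


fused = average_fusion([[10, 20], [30, 40]],
                       [[30, 40], [50, 60]])
```

Because every pixel is weighted equally, detail that is sharp in only one input is halved in the output, which is why this method tends to reduce contrast.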

As the MS image is represented in RGB color space, we can separate the intensity (I) from the color information, hue (H) and saturation (S), by the IHS transform. The I component can be regarded as an image without color information. Because the I component resembles the PAN image, we match the histogram of the PAN image to the histogram of the I component. Then the I component is replaced by the high-resolution PAN image before the inverse IHS transform is applied [2].
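A minimal sketch of the substitution step, under two stated assumptions. First, a linear IHS model is used in which I = (R + G + B) / 3; with this model, replacing I by PAN and applying the inverse transform is equivalent to adding (PAN - I) to each band, so hue and saturation never need to be computed explicitly. Second, the PAN image is assumed to be already re-sampled to the MS size and histogram-matched to I. Other IHS variants will give different numbers, and the function name is hypothetical.

```python
def ihs_fusion(ms, pan):
    """Fuse an RGB multispectral image with a panchromatic image.

    ms  : rows of (r, g, b) tuples
    pan : rows of intensities, same size as ms
    """
    fused = []
    for ms_row, pan_row in zip(ms, pan):
        out_row = []
        for (r, g, b), p in zip(ms_row, pan_row):
            intensity = (r + g + b) / 3   # I component of the linear model
            delta = p - intensity         # detail injected by the PAN image
            out_row.append((r + delta, g + delta, b + delta))
        fused.append(out_row)
    return fused


result = ihs_fusion([[(30, 60, 90)]], [[80]])
```

After fusion, the mean of the three bands of each pixel equals the PAN value, while the differences between bands (the color information) are unchanged.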

Multi-focus image fusion is a type of image fusion used in medical imaging, surveillance and military applications [10] to obtain a single image that is in focus everywhere from multiple images, each of which is in focus in a different region. To make the input images more accurate before fusion, a Genetic Algorithm (GA) is used for image de-noising as a pre-processing step. Curvelet image fusion combined with a genetic algorithm is reported to produce better results than the other techniques.

This algorithm is based on contrast, using wavelet transform decomposition [11]. It is described as follows:

All the source images are decomposed into low- and high-frequency sub-bands, and the high-frequency sub-bands are then fused by means of directive contrast. Finally, the inverse wavelet packet transform is performed to reconstruct the fused image.
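The decompose, fuse and reconstruct pipeline can be sketched as below. This is a simplified stand-in, not the algorithm of [11]: it uses a single-level Haar wavelet transform instead of a wavelet packet transform, and it fuses the high-frequency sub-bands by keeping the coefficient with the larger absolute value instead of by directive contrast. Image dimensions are assumed to be even, and all names are hypothetical.

```python
BANDS = ("LL", "LH", "HL", "HH")


def haar_dwt2(img):
    """Single-level 2-D Haar transform on 2x2 blocks."""
    bands = {k: [] for k in BANDS}
    for y in range(0, len(img), 2):
        rows = {k: [] for k in BANDS}
        for x in range(0, len(img[0]), 2):
            a, b = img[y][x], img[y][x + 1]
            c, d = img[y + 1][x], img[y + 1][x + 1]
            rows["LL"].append((a + b + c + d) / 4)   # low-frequency average
            rows["LH"].append((a - b + c - d) / 4)   # horizontal detail
            rows["HL"].append((a + b - c - d) / 4)   # vertical detail
            rows["HH"].append((a - b - c + d) / 4)   # diagonal detail
        for k in BANDS:
            bands[k].append(rows[k])
    return bands


def haar_idwt2(bands):
    """Inverse of haar_dwt2."""
    out = []
    for r in range(len(bands["LL"])):
        top, bottom = [], []
        for col in range(len(bands["LL"][0])):
            ll, lh, hl, hh = (bands[k][r][col] for k in BANDS)
            top += [ll + lh + hl + hh, ll - lh + hl - hh]
            bottom += [ll + lh - hl - hh, ll - lh - hl + hh]
        out += [top, bottom]
    return out


def wavelet_fusion(x, y):
    """Average the low band, keep the stronger detail coefficient."""
    bx, by = haar_dwt2(x), haar_dwt2(y)
    fused = {}
    for k in BANDS:
        if k == "LL":
            pick = lambda p, q: (p + q) / 2
        else:
            pick = lambda p, q: p if abs(p) >= abs(q) else q
        fused[k] = [[pick(p, q) for p, q in zip(rp, rq)]
                    for rp, rq in zip(bx[k], by[k])]
    return haar_idwt2(fused)
```

Selecting the larger detail coefficient favours whichever input is sharper at each location, which is the same intent as a contrast-based rule, although the two rules do not generally give identical results.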

Pixel-level image fusion integrates the information from multiple images of one scene to get an informative image which is more suitable for human visual perception or further image processing. Sparse representation is a new signal representation theory which explores the sparseness of natural signals. Compared with the traditional multiscale transform coefficients, the sparse representation coefficients can represent the image information more accurately. Thus, this paper proposes a novel image fusion scheme using the signal sparse representation theory. Because image fusion depends on the local information of the source images, we conduct the sparse representation on overlapping patches instead of the whole image, so that only a small dictionary is needed. In addition, the simultaneous orthogonal matching pursuit technique is introduced to guarantee that different source images are sparsely decomposed into the same subset of dictionary bases, which is the key to image fusion. The proposed method is tested on several categories of images and compared with some popular image fusion methods. The experimental results show that the proposed method can provide a superior fused image in terms of several quantitative fusion evaluation indexes.

Unlike most image fusion algorithms, which treat pixels more or less independently, this paper proposes a region-based image fusion scheme using a Pulse-Coupled Neural Network (PCNN), which combines aspects of feature-level and pixel-level fusion [13]. The basic idea is to segment all the input images by PCNN and to use this segmentation to guide the fusion process. In order to determine the PCNN parameters adaptively, this paper brings forward an adaptive segmentation algorithm based on a modified PCNN with multiple thresholds determined by a novel water region area method. Experimental results demonstrate that the proposed fusion scheme has an extensive application scope and that it outperforms the multiscale decomposition based fusion approaches, both in visual effect and in objective evaluation criteria, particularly when there is movement in the objects or misregistration of the source images.

Dempster-Shafer (DS) theory provides a solution for fusing multi-sensor data, which are presented in a hypothesis space comprising mutually exclusive and exhaustive propositions and their unions [14]. The fusion result is a description of the proposition with the values of support, plausibility and uncertainty interval. However, in some applications, numerical values of a continuous function, instead of a Boolean value or a proposition, are expected. In this paper, a scheme based on DS reasoning and locally weighted regression is proposed to fuse the data obtained from the non-destructive inspection of aircraft lap joints for the estimation of the remaining thickness. The proposed approach uses a pairwise regression that is optimized by the DS method when multiple inputs are involved. The scheme is evaluated with experiments on fusing conventional eddy current and pulsed eddy current data obtained from aircraft lap joint structures for the characterization of hidden corrosion.
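The core of DS fusion is Dempster's rule of combination, sketched below for two sources. This illustrates only the basic rule, not the regression-based scheme of [14]. The hypothesis names and the mass values are invented for the example, and the function names are hypothetical.

```python
def combine(m1, m2):
    """Dempster's rule of combination for two mass functions.

    Each mass function maps a frozenset of hypotheses to its mass.
    """
    combined, conflict = {}, 0.0
    for b, mass_b in m1.items():
        for c, mass_c in m2.items():
            common = b & c
            if common:
                combined[common] = combined.get(common, 0.0) + mass_b * mass_c
            else:
                conflict += mass_b * mass_c   # contradictory evidence
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


def belief(m, a):
    # Support: total mass of all subsets of a.
    return sum(v for k, v in m.items() if k <= a)


def plausibility(m, a):
    # Plausibility: total mass of all sets that intersect a.
    return sum(v for k, v in m.items() if k & a)


# Invented example: two sensors report on whether a joint is corroded.
CORRODED, SOUND = frozenset({"corroded"}), frozenset({"sound"})
EITHER = CORRODED | SOUND
sensor_1 = {CORRODED: 0.6, EITHER: 0.4}
sensor_2 = {CORRODED: 0.5, SOUND: 0.3, EITHER: 0.2}
fused_mass = combine(sensor_1, sensor_2)
```

The interval between `belief(fused_mass, CORRODED)` and `plausibility(fused_mass, CORRODED)` is the uncertainty interval mentioned in the text.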

Wavelets are finite-duration oscillatory functions with zero average value [15]. They have finite energy and are suited to the analysis of transient signals. Their irregularity and good localization properties make them a better basis for the analysis of signals with discontinuities. Wavelets can be described using two functions, viz. the scaling function φ(t), also known as the "father wavelet", and the wavelet function ψ(t), or "mother wavelet". The mother wavelet ψ(t) undergoes translation and scaling operations to give self-similar wavelet families, as given by Equation (1).
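For reference, the standard textbook form of a wavelet family generated from a mother wavelet is sketched below. The symbols a (scale, with a > 0) and b (translation) are assumptions introduced here and are not notation taken from the source.

$$
\psi_{a,b}(t) = \frac{1}{\sqrt{a}}\,\psi\!\left(\frac{t - b}{a}\right), \qquad a > 0,\; b \in \mathbb{R}
$$

The factor 1/√a keeps the energy of every member of the family equal to that of the mother wavelet.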

There are five performance measures of the quality of fused images.

The definitions of these measures and their physical meanings are given as follows [11].

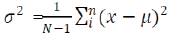

In statistical theory, the mean and standard deviation of an image are defined over its pixel values, where N is the total number of pixels in the image and x_i is the value of the i-th pixel.
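A minimal sketch of both quantities. It assumes the population form of the standard deviation (division by N instead of N - 1); the two forms are nearly identical for images of realistic size. The function name is hypothetical.

```python
import math


def mean_std(img):
    """Mean and population standard deviation of all pixel values."""
    pixels = [v for row in img for v in row]
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((v - mean) ** 2 for v in pixels) / n
    return mean, math.sqrt(variance)
```

A larger standard deviation indicates a wider spread of grey levels, which is usually read as higher contrast in the fused image.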

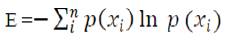

Entropy is a measure of the quantity of information contained in an image. If the entropy becomes higher after fusion, the information content of the image has increased. Mathematically, entropy is defined in terms of p(X_i), the probability of occurrence of the value X_i.
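A minimal sketch using the Shannon form H = -Σ p(X_i) log2 p(X_i), with probabilities estimated from the image histogram. The base-2 logarithm (entropy in bits) is an assumption, as is the function name.

```python
import math
from collections import Counter


def entropy(img):
    """Shannon entropy, in bits, of the pixel-value distribution."""
    pixels = [v for row in img for v in row]
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())
```

A constant image has zero entropy, while an 8-bit image in which all 256 grey levels are equally likely reaches the maximum of 8 bits.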

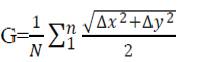

The average gradient is computed from ΔX and ΔY, the differences between adjacent pixel values in the x and y directions.
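A minimal sketch of one commonly used definition, in which the quantity sqrt((ΔX² + ΔY²) / 2) is averaged over all pixels that have both a right and a lower neighbour. This exact normalization is an assumption, since several variants exist; the function name is hypothetical.

```python
import math


def average_gradient(img):
    """Average gradient magnitude from forward differences."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = img[y][x + 1] - img[y][x]   # difference along x
            dy = img[y + 1][x] - img[y][x]   # difference along y
            total += math.sqrt((dx * dx + dy * dy) / 2)
    return total / ((h - 1) * (w - 1))
```

A higher average gradient indicates sharper edges and finer detail in the fused image.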

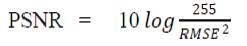

The PSNR indicates the similarity between two images. The higher the value of PSNR, the better the fused image.
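A minimal sketch using the usual definition PSNR = 10 log10(MAX² / MSE), where MSE is the mean squared error between the reference and the fused image. The peak value of 255 assumes 8-bit images, and the function name is hypothetical.

```python
import math


def psnr(reference, fused, max_value=255):
    """Peak signal-to-noise ratio in decibels."""
    squared = [(a - b) ** 2
               for row_r, row_f in zip(reference, fused)
               for a, b in zip(row_r, row_f)]
    mse = sum(squared) / len(squared)
    if mse == 0:
        return float("inf")   # identical images
    return 10 * math.log10(max_value ** 2 / mse)
```

Because PSNR needs a reference image, it is typically reported for test images where an ideal result is available.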

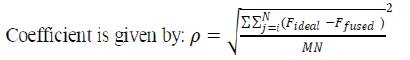

The correlation coefficient is a number in the range [−1, 1] that measures the degree to which two variables are linearly related. Here the correlation is computed between F_ideal, the ideal image, and F_fused, the fused image. Values of ρ closer to 1 indicate greater similarity between the images F_ideal and F_fused.
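A minimal sketch using the Pearson correlation coefficient computed over corresponding pixels. It assumes that neither image is constant, since the denominator would then be zero; the function name is hypothetical.

```python
import math


def correlation(ideal, fused):
    """Pearson correlation coefficient between two equally sized images."""
    a = [v for row in ideal for v in row]
    b = [v for row in fused for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return covariance / math.sqrt(var_a * var_b)
```

The coefficient is unaffected by a uniform change in brightness or contrast, so it measures structural agreement between the two images, not absolute intensity error.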

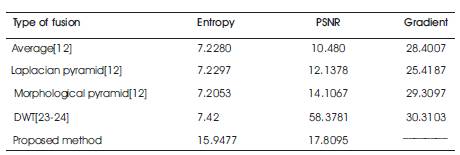

Table 1 shows that the values of entropy and PSNR for DWT are greater than those for the Average, Laplacian and Morphological methods, which indicates that the DWT method performs better than the other methods. This suggests that transform domain methods perform better than spatial domain methods.

Table 1. Results of the different methods used for fusion of the boat image

Table 1 shows the results of the various fusion methods.

In this paper, various fusion techniques are studied. The authors have tried to address the objective and definition of image fusion. From the study of these algorithms, we conclude that although standard image fusion techniques are good for spatial domain fusion, they create noise in the output image, which leads to poor interpretation of the information. It is found that the entropy and PSNR values of the frequency domain method DWT (Discrete Wavelet Transform) are better than those of the spatial domain fusion methods.

The authors have also found that DWT combined with PCA (Principal Component Analysis) gives better results. We also found that when contrast-based wavelet-packet image fusion is combined with a genetic algorithm, the performance of the wavelet transform improves automatically, as the genetic algorithm selects the best input images for fusion.