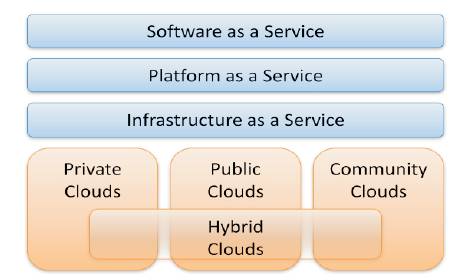

Figure 1. Cloud computing deployment and service models

Cloud computing has recently emerged as a new paradigm for hosting and delivering services over the Internet. It is attractive to business owners because it eliminates the need to plan ahead for provisioning and allows enterprises to start small and increase resources only when service demand rises. However, although cloud computing offers huge opportunities to the IT industry, the technology is still in its infancy, with many issues yet to be addressed. In this review paper, the author presents a survey of cloud computing, highlighting its key concepts and architectural principles. The aim is to provide a better understanding of the cloud computing environment and to identify important research directions in this increasingly important area.

With the rapid development of processing and storage technologies and the success of the Internet, computing resources have become cheaper, more powerful and more ubiquitously available than ever before. This technological trend has enabled the realization of a new computing model called cloud computing, in which resources (e.g., CPU and storage) are provided as general utilities that users can lease and release through the Internet on demand. In a cloud computing environment, the traditional role of service provider is divided into two: infrastructure providers, who manage cloud platforms and lease resources according to a usage-based pricing model, and service providers, who rent resources from one or many infrastructure providers to serve end users. The emergence of cloud computing has had a tremendous impact on the Information Technology (IT) industry over the past few years: large companies such as Google, Amazon and Microsoft strive to provide more powerful, reliable and cost-efficient cloud platforms, and business enterprises seek to reshape their business models to benefit from this new paradigm [1].

No up-front investment: Cloud computing uses a pay-as-you-go pricing model. A service provider does not need to invest in infrastructure to start benefiting from cloud computing. It simply rents resources from the cloud according to its own needs and pays for what it uses.

Lowering operating cost: Resources in a cloud environment can be rapidly allocated and de-allocated on demand. Hence, a service provider no longer needs to provision capacity for the peak load. This provides huge savings, since resources can be released to save on operating costs when service demand is low.

Highly scalable: Infrastructure providers pool large amounts of resources from data centers and make them easily accessible. A service provider can easily expand its service to large scales in order to handle rapid increases in service demand. This model is sometimes called surge computing [5].

Easy access: Services hosted in the cloud are generally web-based. Therefore, they are easily accessible through a variety of devices with Internet connections. These devices include not only desktop and laptop computers, but also cell phones and PDAs.
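The saving described under "No up-front investment" and "Lowering operating cost" can be illustrated with a small calculation. The following is a minimal sketch, not a pricing model from the literature: the demand trace, the price, and the function names are hypothetical, and it assumes for simplicity that an owned server and a rented server cost the same per hour.

```python
def peak_provisioned_cost(hourly_demand, price_per_server_hour):
    """Cost when capacity is fixed at the peak load for every hour."""
    peak = max(hourly_demand)
    return peak * len(hourly_demand) * price_per_server_hour


def pay_as_you_go_cost(hourly_demand, price_per_server_hour):
    """Cost when servers are rented only for the hours they are needed."""
    return sum(hourly_demand) * price_per_server_hour


# Hypothetical trace: servers needed in each of six consecutive hours.
demand = [2, 2, 3, 10, 4, 2]

print(peak_provisioned_cost(demand, 0.5))  # 10 servers * 6 hours * 0.5 = 30.0
print(pay_as_you_go_cost(demand, 0.5))     # 23 server-hours * 0.5 = 11.5
```

The gap between the two figures grows with the ratio of peak to average demand, which is why bursty workloads benefit most from usage-based pricing.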

This section presents a general overview of cloud computing, including its definition and a comparison with related concepts.

There has been much discussion in industry as to what cloud computing actually means. The term cloud computing seems to originate from computer network diagrams that represent the Internet as a cloud. Most of the major IT companies and market research firms, such as IBM, Sun Microsystems, Gartner and Forrester Research, have produced whitepapers that attempt to define the term. These discussions are mostly coming to an end and a common definition is starting to emerge. The US National Institute of Standards and Technology (NIST) has developed a working definition that covers the commonly agreed aspects of cloud computing. The NIST working definition summarizes cloud computing as: A model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction [1,2].

The NIST definition is one of the clearest and most comprehensive definitions of cloud computing and is widely referenced in US government documents and projects. This definition describes cloud computing as having five essential characteristics, three service models, and four deployment models. The essential characteristics are:

On-demand self-service: Computer services such as email, applications, network or server services can be provided without requiring human interaction with each service provider. Cloud service providers offering on-demand self-service include Amazon Web Services (AWS), Microsoft, Google, IBM and Salesforce.com.

Broad network access: Cloud capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms such as mobile phones, laptops and PDAs.

Resource pooling: The provider's computing resources are pooled to serve multiple consumers using a multi-tenant model, with different physical and virtual resources dynamically assigned and reassigned according to consumer demand. The resources include, among others, storage, processing, memory, network bandwidth, virtual machines and email services.

Rapid elasticity: Cloud services can be rapidly and elastically provisioned, in some cases automatically, to quickly scale out, and rapidly released to quickly scale in. To the consumer, the capabilities available for provisioning often appear to be unlimited and can be purchased in any quantity at any time.

Measured service: Cloud computing resource usage can be measured, controlled, and reported, providing transparency for both the provider and the consumer of the utilized service. Cloud computing services use a metering capability that enables resource use to be controlled and optimized. This implies that, just like air time, electricity or municipal water, IT services are charged according to usage metrics.

Pay per use: The more you use, the higher the bill. Just as utility companies sell power to subscribers, and telephone companies sell voice and data services, IT services such as network security management, data center hosting or even departmental billing can now be easily delivered as a contractual service.

Multi-tenancy: In a cloud environment, services owned by multiple providers are co-located in a single data center. The performance and management issues of these services are shared among service providers and the infrastructure provider. The layered architecture of cloud computing provides a natural division of responsibilities: the owner of each layer only needs to focus on the specific objectives associated with that layer. However, multi-tenancy also introduces difficulties in understanding and managing the interactions among the various stakeholders.

The above characteristics apply to all clouds but each cloud provides users with services at a different level of abstraction, which is referred to as a service model in the NIST definition. The three most common service models are:

Software as a Service (SaaS): This is where users simply use a web browser to access software that others have developed and offer as a service over the web. At the SaaS level, users do not have control of or access to the underlying infrastructure used to host the software. Salesforce's Customer Relationship Management software and Google Docs are popular examples that use the SaaS model of cloud computing.

Platform as a Service (PaaS): This is where applications are developed using a set of programming languages and tools that are supported by the PaaS provider. PaaS provides users with a high level of abstraction that allows them to focus on developing their applications. As in the SaaS model, users do not have control of or access to the underlying infrastructure used to host their applications at the PaaS level. Google App Engine and Microsoft Azure are popular PaaS examples.

Infrastructure as a Service (IaaS): This is where users acquire computing resources such as processing power, memory and storage from an IaaS provider and use the resources to deploy and run their applications. In contrast to the PaaS model, the IaaS model offers a low level of abstraction that allows users to access the underlying infrastructure through virtual machines. IaaS gives users more flexibility than PaaS, as it allows the user to deploy any software stack on top of the operating system. Amazon Web Services' EC2 and S3 are popular IaaS examples.

The service models described in the NIST definition are deployed in clouds, but there are different types of clouds depending on who owns and uses them. This is referred to as a cloud deployment model in the NIST definition and the five common models are:

Private cloud: A cloud that is used exclusively by one organization. The cloud may be operated by the organization itself or by a third party. The St Andrews Cloud Computing Co-laboratory and Concur Technologies are examples of organizations that have private clouds.

Public cloud: A cloud that can be used (for a fee) by the general public. Public clouds require significant investment and are usually owned by large corporations such as Microsoft, Google or Amazon.

Community cloud: A cloud that is shared by several organizations and is usually set up for their specific requirements. The Open Cirrus cloud test bed could be regarded as a community cloud that aims to support research in cloud computing.

Hybrid cloud: A cloud that is set up using a mixture of the above three deployment models. Each cloud in a hybrid cloud could be independently managed, but applications and data would be allowed to move across the hybrid cloud. Hybrid clouds allow cloud bursting to take place, which is where a private cloud bursts out to a public cloud when it requires more resources.

Virtual Private Cloud: An alternative solution that addresses the limitations of both public and private clouds is the Virtual Private Cloud (VPC). A VPC is essentially a platform running on top of public clouds. It leverages Virtual Private Network (VPN) technology, which allows service providers to design their own topology and security settings such as firewall rules.
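The cloud bursting behaviour mentioned under the hybrid cloud model can be sketched in a few lines. This is only an illustration under simplifying assumptions: demand and capacity are expressed in the same abstract units, and the function name `place_demand` is hypothetical rather than part of any cloud API.

```python
def place_demand(demand, private_capacity):
    """Split demand between a private cloud and a public cloud.

    The private cloud is filled first; only the overflow "bursts out"
    to the public cloud. Returns (private_share, public_share).
    """
    private_share = min(demand, private_capacity)
    public_share = demand - private_share
    return private_share, public_share


print(place_demand(80, 100))   # (80, 0): the private cloud is sufficient
print(place_demand(120, 100))  # (100, 20): 20 units burst to the public cloud
```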

Figure 1 provides an overview of the common deployment and service models in cloud computing, where the three service models could be deployed on top of any of the five deployment models.


Cloud computing is often compared to the following technologies, each of which shares certain aspects with cloud computing [1]:

Grid computing: Grid computing is a distributed computing paradigm that coordinates networked resources to achieve a common computational objective. The development of grid computing was originally driven by scientific applications, which are usually computation-intensive. Cloud computing is similar to grid computing in that it also employs distributed resources to achieve application-level objectives. However, cloud computing goes one step further by leveraging virtualization technologies at multiple levels to realize resource sharing and dynamic resource provisioning.

Utility computing: Utility computing represents the model of providing resources on demand and charging customers based on usage rather than a flat rate. Cloud computing can be perceived as a realization of utility computing.

Virtualization: Virtualization is a technology that abstracts away the details of physical hardware and provides virtualized resources for high-level applications. A virtualized server is commonly called a Virtual Machine (VM).

Autonomic computing: Originally coined by IBM in 2001, autonomic computing aims at building computing systems capable of self-management, i.e., reacting to internal and external observations without human intervention.

In summary, cloud computing leverages virtualization technology to achieve the goal of providing computing resources as a utility. It shares certain aspects with grid computing and autonomic computing but differs from them in other aspects. Therefore, it offers unique benefits and imposes distinctive challenges to meet its requirements.

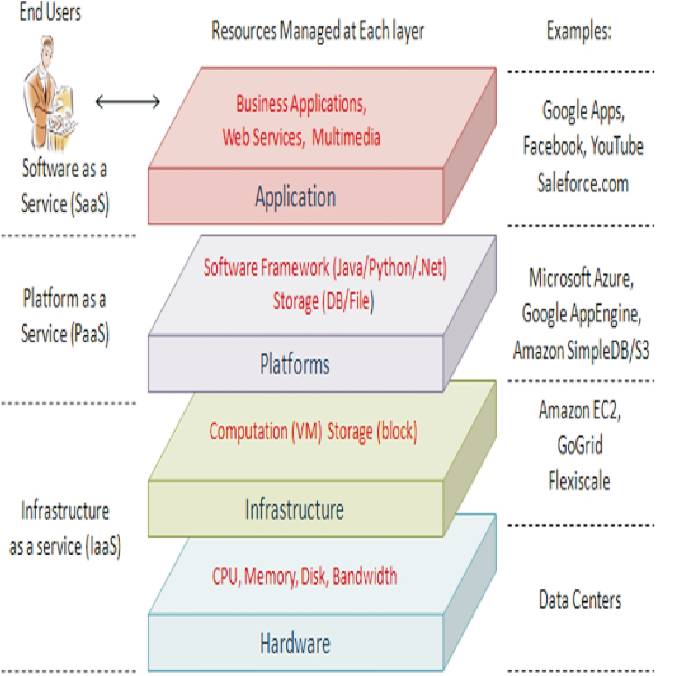

This section describes the architecture of a cloud computing environment, which can be divided into four layers: the hardware/datacenter layer, the infrastructure layer, the platform layer and the application layer [1, 14], as shown in Figure 2.

Figure 2. Cloud Computing Architecture

The hardware layer is responsible for managing the physical resources of the cloud, including physical servers, routers, switches, power and cooling systems.

Also known as the virtualization layer, the infrastructure layer creates a pool of storage and computing resources by partitioning the physical resources using virtualization technologies.

Built on top of the infrastructure layer, the platform layer consists of operating systems and application frameworks. The purpose of the platform layer is to minimize the burden of deploying applications directly into VM containers. For example, Google App Engine operates at the platform layer to support web applications.

At the highest level of the hierarchy, the application layer consists of the actual cloud applications. Unlike traditional applications, cloud applications can leverage the automatic-scaling feature to achieve better performance, higher availability and lower operating cost.

Although cloud computing has been widely adopted by industry, research on cloud computing is still at an early stage. Many existing issues have not been fully addressed, while new challenges keep emerging from industry applications. This section summarizes some of the challenging research issues in cloud computing.

One of the key features of cloud computing is the capability of acquiring and releasing resources on demand. The objective of a service provider in this case is to allocate and deallocate resources from the cloud to satisfy its service level objectives (SLOs) while minimizing its operational cost. However, it is not obvious how a service provider can achieve this objective. In particular, it is not easy to determine how to map SLOs such as QoS requirements to low-level resource requirements such as CPU and memory. Furthermore, to achieve high agility and respond to rapid demand fluctuations such as the flash crowd effect, resource provisioning decisions must be made online. Automated service provisioning is not a new problem. Dynamic resource provisioning for Internet applications has been studied extensively in the past [3, 4]. These approaches typically involve: (i) constructing an application performance model that predicts the number of application instances required to handle demand at each particular level, in order to satisfy QoS requirements; (ii) periodically predicting future demand and determining resource requirements using the performance model; and (iii) automatically allocating resources using the predicted resource requirements. Application performance models can be constructed using various techniques, including queuing theory [5], control theory [6] and statistical machine learning [7]. Additionally, there is a distinction between proactive and reactive resource control. The proactive approach uses predicted demand to periodically allocate resources before they are needed. The reactive approach reacts to immediate demand fluctuations before a periodic demand prediction is available. Both approaches are important and necessary for effective resource control in dynamic operating environments.
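Steps (i) to (iii) and the proactive/reactive distinction can be made concrete with a small sketch. It is one possible instance of the queuing-theory approach, not the method of any cited work. It assumes each application instance behaves as an M/M/1 queue with service rate $\mu$, so that the mean response time is $1/(\mu - \lambda_i)$ for a per-instance arrival rate $\lambda_i$, and it predicts demand with a simple moving average. All function names and parameter values are hypothetical.

```python
import math


def instances_needed(arrival_rate, service_rate, target_response_time):
    """Step (i): performance model mapping demand to an instance count.

    Under the M/M/1 assumption, the mean response time 1 / (mu - lambda_i)
    stays within the target only if lambda_i <= mu - 1 / target.
    """
    max_rate_per_instance = service_rate - 1.0 / target_response_time
    if max_rate_per_instance <= 0:
        raise ValueError("target response time is unattainable at this service rate")
    return max(1, math.ceil(arrival_rate / max_rate_per_instance))


def predict_demand(history, window=3):
    """Step (ii): moving-average forecast of the next arrival rate."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def provision(history, current_rate, service_rate, target_response_time):
    """Step (iii): combine proactive and reactive control.

    The proactive count comes from the forecast; the reactive count comes
    from the demand observed right now. Taking the larger of the two lets
    a sudden spike override a stale forecast.
    """
    proactive = instances_needed(predict_demand(history), service_rate, target_response_time)
    reactive = instances_needed(current_rate, service_rate, target_response_time)
    return max(proactive, reactive)


# Hypothetical numbers: 10 requests/s per instance, 0.5 s response-time target.
history = [40, 48, 56]                    # past arrival rates in requests/s
print(provision(history, 40, 10, 0.5))    # forecast 48 req/s -> 6 instances
print(provision(history, 100, 10, 0.5))   # flash crowd at 100 req/s -> 13 instances
```

A moving average is used here only because it is easy to follow; any forecaster could replace `predict_demand` without changing the rest of the sketch.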

Virtualization can provide significant benefits in cloud computing by enabling virtual machine migration to balance load across the data center. In addition, virtual machine migration enables robust and highly responsive provisioning in data centers. Virtual machine migration has evolved from process migration techniques [7]. More recently, Xen [8] and VMware [9] have implemented “live” migration of VMs that involves extremely short downtimes, ranging from tens of milliseconds to a second. Clark et al. [10] pointed out that migrating an entire OS and all of its applications as one unit avoids many of the difficulties faced by process-level migration approaches, and analyzed the benefits of live migration of VMs. A major benefit of VM migration is the avoidance of hotspots; however, this is not straightforward. Currently, detecting workload hotspots and initiating a migration lacks the agility to respond to sudden workload changes. Moreover, the in-memory state should be transferred consistently and efficiently, with integrated consideration of resources for applications and physical servers.
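The hotspot problem can be illustrated with a simple greedy planner. This is a sketch under strong assumptions and not the algorithm used by Xen, VMware or any cited work: load is a single number per VM (a fraction of host capacity), migration itself is free, and the 0.8 threshold and all names are hypothetical.

```python
def find_hotspots(hosts, threshold=0.8):
    """Return the hosts whose total VM load exceeds the threshold.

    hosts maps a host name to a dict of {vm_name: load}.
    """
    return [h for h, vms in hosts.items() if sum(vms.values()) > threshold]


def plan_migrations(hosts, threshold=0.8):
    """Greedy plan: move the largest VMs off each hotspot first.

    Each VM goes to the currently least-loaded other host, and only if
    that host stays at or below the threshold afterwards.
    Returns a list of (vm, source_host, target_host) tuples.
    """
    loads = {h: sum(vms.values()) for h, vms in hosts.items()}
    plan = []
    for host in find_hotspots(hosts, threshold):
        candidates = sorted(hosts[host].items(), key=lambda kv: kv[1], reverse=True)
        for vm, load in candidates:
            if loads[host] <= threshold:
                break  # the hotspot has been relieved
            target = min((h for h in loads if h != host), key=loads.get, default=None)
            if target is None or loads[target] + load > threshold:
                continue  # no host can absorb this VM; try a smaller one
            plan.append((vm, host, target))
            loads[host] -= load
            loads[target] += load
    return plan


hosts = {
    "h1": {"a": 0.5, "b": 0.4},  # 0.9 total: a hotspot
    "h2": {"c": 0.2},
    "h3": {"d": 0.1},
}
print(find_hotspots(hosts))    # ['h1']
print(plan_migrations(hosts))  # [('a', 'h1', 'h3')]
```

A practical planner would also weigh the cost of transferring each VM's memory state, which this sketch ignores.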

Improving energy efficiency is another major issue in cloud computing. It has been estimated that the cost of powering and cooling accounts for 53% of the total operational expenditure of data centers. In 2006, data centers in the US consumed more than 1.5% of the total energy generated in that year, and the percentage is projected to grow 18% annually [11]. Hence infrastructure providers are under enormous pressure to reduce energy consumption. The goal is not only to cut energy costs in data centers, but also to meet government regulations and environmental standards. Designing energy-efficient data centers has recently received considerable attention. This problem can be approached from several directions. For example, energy-efficient hardware architectures that allow CPU speeds to be reduced and individual hardware components to be switched off have become commonplace. Energy-aware job scheduling [12] and server consolidation [13] are two other ways to reduce power consumption, by turning off unused machines. Recent research has also begun to study energy-efficient network protocols and infrastructures.
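Server consolidation is commonly treated as a bin-packing problem: VMs are packed onto as few servers as possible so that the remaining machines can be switched off. The sketch below uses the first-fit decreasing heuristic as one simple illustration; it is not claimed to be the approach of [13]. It assumes a single resource dimension, identical servers, and hypothetical loads expressed as a percentage of one server's capacity.

```python
def consolidate(vm_loads, server_capacity=100):
    """Pack VM loads onto servers using first-fit decreasing.

    VMs are considered from largest to smallest, and each one is placed on
    the first server with enough spare capacity. A new server is opened
    only when none fits. Returns one list of loads per active server.
    """
    servers = []
    for load in sorted(vm_loads, reverse=True):
        if load > server_capacity:
            raise ValueError("a single VM exceeds the capacity of one server")
        for placed in servers:
            if sum(placed) + load <= server_capacity:
                placed.append(load)
                break
        else:
            servers.append([load])  # no existing server fits
    return servers


# Six lightly loaded VMs, originally on one server each.
packing = consolidate([50, 20, 70, 30, 10, 20])
print(packing)       # [[70, 30], [50, 20, 20, 10]]
print(len(packing))  # 2 active servers, so 4 of the 6 can be turned off
```

Packing servers completely full leaves no headroom for demand spikes, so a practical policy would likely use a capacity below 100%.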

Cloud computing has recently emerged as a compelling paradigm for managing and delivering services over the Internet. The rise of cloud computing is rapidly changing the landscape of information technology and is ultimately turning the long-held promise of utility computing into a reality. The author therefore believes there is still tremendous opportunity for researchers to make groundbreaking contributions in this field and to have a significant impact on its development in industry. To conclude, this review paper discussed the research that academia has pursued to advance the technological aspects of cloud computing, and highlighted the resulting research directions facing the academic community.