Table 1. A Green Computing Version of the Hello World Program For Coarse Grained (Left) and Fine Grained (Right) Greencomputing

Power consumption has always been a crucial factor in deciding the performance of a computing system. The existing factors that serve as a yardstick to measure the performance include Space complexity and Time complexity. However, towards a Greener era of computing, it is the need of the hour to consider High Performance Systems that are energy efficient and thereby lesser heat and carbon emitters. The conventional approach, on the software grounds, include redefining algorithms that focus on the reduction on the time complexity and Space Complexity of the program .The authors, hereby, propose a novel approach that takes into consideration the Power Optimization, with respect to making an algorithm more efficient, as an equally important issue for a greener solution towards energy efficient programs.

As quoted by Alan M. Eddison, “Modern Technology owes ecology, an Apology”. In the entire sense the Technology providers and its users are equally contributing their own effort to squeeze out the benefits of the contemporary and ubiquitous Technology. However, the failure, to identify the precursors of the Nature, is no more tolerable. Henceforth, the attempt is to “propose a panacea for the calamities of modern technology which so far created chaos to the habitation”.

To begin with, Green computing is expressed as “the study and practice of designing, manufacturing, using, and disposing of computers, servers, and associated subsystems - such as monitors, printers, storage devices, and networking and communications systems -efficiently and effectively with minimal or no impact on the environment.”This was defined by Samir Botros, in Green technology and design for the environment 3rd ed New York: McGraw-Hill, 1996. Internet documents [1].

With the advent to novel technologies to address complex problems in the world of computation ,the focal point, to a larger extent, remains to be the performance factor marring the consequences that it renders to the milieu around ,in the name of carbon and heat emissions affecting the human and the environment equally. Thence is the yearning for ecofriendly alternatives that consume less units of power for execution and also save your pocket.

In the argot used in software industries, the recent developments towards the inclination of energy efficient alternatives at instruction level stimulated a phenomenon for further advancements and thus triggered a novel dimension towards the same. Here is an excerpt of the work in the related domain.

Fine-grained green computing refers to running a program efficiently and effectively via a subtle power control on each computing resources as CPU, memory, registers, peripherals, clock management, and power supply. A simple “power cut” as a whole like coarse green computing yields less leverage on manipulating energy consumption in light of the characteristics and the context of a specific application. Table 1 shows a green computing version of the Hello World program with respect to coarse grained and fine grained green computing methodologies. The code to the left shows a coarse grained green computing in which the program's execution is in a full power mode disregarding to whether the program is using a memory banks or I/O peripherals. Note that the system is set to a full power mode when an external event occurs such as a key press or a peripheral interrupt. Therefore, on the entry of the program, the system is assumed to be in full power mode [3].

With the fine grained green computing (the code on the right hand side in Table I), only the required memory banks and I/O peripherals are activated for the program. How many memory banks should be activated and what I/O peripherals should be turned on, really depends on the underlying application. This approach allows energy consumption for system components to be fine tuned, and will further reduce power consumption as low power modes are to the CPU only.

Table 1. A Green Computing Version of the Hello World Program For Coarse Grained (Left) and Fine Grained (Right) Greencomputing

Components other than CPU will require another control unit in the system. However, this increases the complexity of the design and some algorithms may have to be redesigned to achieve this objective. Note that it seems plausible that the green code at the right hand side in Table 2 involves more instructions, and thus consumes more power. Actually, it is the idle power consumption that makes the non-green code draining more current than the green code.

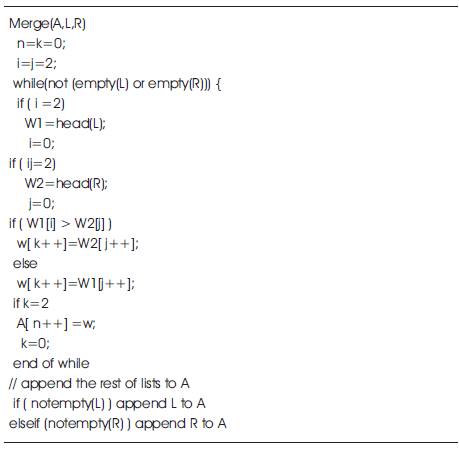

Table 2. A Power Aware Merge Algorithm for Memory Power and Size Reduction

A big portion of power consumption is attributed to memory, in which programs and their data are stored. Thus, a compact design of code and data structures is a key to power reduction. For example, a regular merge sort algorithm will waste about 50% of memory space in storing sub-type elements, say a 32-bit CPU were to sort a 16-bit short integers. Two problems raise. First, it doubles the memory space requirement to store the data, and therefore, it may not take advantage of turning off unused memory banks to save power. Second, it requires two times more memory loads than if data were to be sorted in a compact form, i.e., two short integers are stored in a 32-bit memory space. Table 2 illustrates a power-aware merge algorithm that reduces memor y power consumption and size by 50%,and increase program efficiency by eliminating a half of lengthy memory accesses (assume all auto variables i, j, k, and n can be allocated in registers).

The idea of the power-aware merge algorithm is to pack two short integers into one word, which is a basic unit for CPU to load data into registers. Each load instruction will actually load two short integers. The operations of the merge algorithm are similar to those of a traditional one. The only difference is the three “if” statements to check if the indices reach 2.

The asymptotic time complexity will remain the same, i.e., O(n), but the actual run time will be shorter if m >4 where m is the number of times slower for memory access compared to CPU instructions. The following depicts the time complexity for the merge algorithm of the original version and the power aware version shown in Table 2.

T (n)=nm+ n

T'(n)=nm/2 + 3n

Where T(n) is the time complexity of original algorithm and T'(n) is that of the power_aware algorithm.

However, the energy complexity for the original version is two times as much as that of the power aware version if the data memory is much larger than the program. Therefore, it is necessary to fine grained tuning power consumption of an algorithm. To benefit that, an algorithm will typically be redesigned.

Similarly, when green computing is applied to operating systems, especially, its scheduler would have to handle per process requirements in order to optimally control all peripherals in terms of power consumption. If dynamic voltage scaling (DVS) were to be added, the scheduler would have to consider CPU power supply in light of deadlines and priorities of a task. Often, there are situations where lower voltage may end up consuming more power for completing a task. Perhaps, the most difficult is to find where to sneak in green computing and how it improves system performance.

There are two possible domains in which the study can progress. One is Software, and the other Hardware. On the grounds of Software issues, there are four approaches with reference to a typical C /C++ program ,for instance.

Source code and instruction-level optimizations appear as an alternative in low power consumption analysis (V. Dalal, 2001; J. Oliver, 2003; Y. Yingbiao, 2004].

Firstly, the usage of some special operators in the source code can contribute remarkably, to the lesser power consumption during its execution. This technique is termed as Algorithmic Transformation.

These special operators include

Secondly, the usage of operands from the registers rather than memory will prove an appreciable deal. An instruction using register operands cost upto 300mA of current per cycle whereas the Memory read/write operations cost in the range of 430-530 mA per cycle. Since the register set is limited and hence cannot be used for larger memory required applications, the next alternate is seen in the form of storing operands using Caches. Further, the code transformations available in the recent scenarios help improve the cache hit ratios and make it a better option for use compared to registers. This technique is associated with the Memory Management, as illustrated in Compilation Techniques for low Energy: An Overview: by Vivek Tiwar, Sharad Malik &Andrew Wolfe in a Symposium on Low power Electronics,1994.

Thirdly, the technique of loop unrolling is being considered. Loop unrolling is an optimization technique where the body of a loop is copied several times. This prevents the amount of power consumed in the otherwise overhead caused during the change in the control flow due to loop transformations and iterations. Since loop unrolling has shown good results in terms of low power consumption for the Intel 8051 platform in previous literature, there seems to be a good sign for the validity of this approach, as demonstrated by D.A. Ortiz & N.G. Santiago (2007).

Fourthly, when the use of inline functions is taken into account, it has a significant impact on the power requirements. In function inclining, the body of the function is inserted directly in the code structure where it is used. Also, Variables declaration is used to replace variable types by others which tend to lower power consumption inferred from J. Zambreno, M.T. Kandemir, & A. Choudhary (2002).

Source code-level optimizations for execution time have been studied extensively by Leupers (2000). There are important works in terms of source code-level optimization. Leupers (2000) and Sharma (2000) classified source code optimizations in machine-independent and machinedependent. In terms source code optimization for power reduction, Simunic et al. (2001) classified code optimization techniques in algorithmic, data, and instruction-flow optimizations. In our case we used algorithmic optimizations since they do not take into consideration the target platform. Dalal et al. (2001) studied software-dependent components such as arithmetic circuits, data busses, and memories, as a way to lower power consumption in embedded applications. Sharma and Ravikumar [11] presented a study of the implementation of the ADPCM codec benchmark. In this work, optimization techniques applied at the source code-level were classified into structural and machine dependent optimizations.

The MATLAB software serves as an apposite tool for the analysis of the subject being researched herewith. The following are a few such utilities available in this tool.

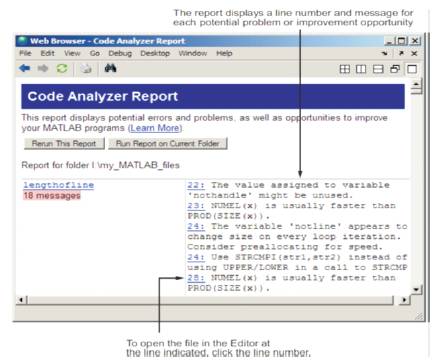

The Code Analyzer Report displays potential errors and problems, as well as opportunities for improvement in the MATLAB programs. It displays a message for each line of MATLAB code and determines how it might be improved. For example, a common message is that a variable is defined but never used is shown Figure 1. A snapshot of the code Analyzer in Matlab tool that exhibits a report of the analysis of every function used in the input code and renders a draft representing the function-wise analysis and suggested modifications to improve the code's performance.

If the suggested transformations in the report are performed in the code, we are close to achieving lesser number of cycles to execute the instructions hence can claim the objective to be satisfied.

Figure 1. Code Analyzer Report

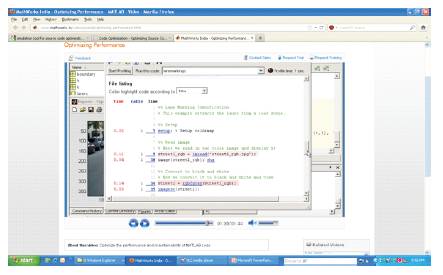

The MATLAB Profiler helps you improve the performance of your MATLAB programs. Run a MATLAB statement or any program file in the Profiler and it produces a report of where the time is being spent. Access the Profiler from the Desktop menu, or use the profile function. This utility in Matlab again renders the time statistics per instruction of the input code.

Here is an indirect approach for power optimization. The segment of the code that exhibits the maximum time for its execution, as is depicted by the profiler, can be modified so that it consumes lesser number of time cycles for execution. This can contribute to the lesser amount of power consumed and henceforth fulfil the above said objective.

Figure 2. Optimizing Performance - MATLAB

The contemporary developments towards a greener epoch of computing have by far gained momentum in an unexpected and remarkable manner. In the near future, the urge for the eco-friendly solutions towards computing shall be on the rise and thus the advancement in the technology as well. In the domain of Software engineering, there's an imperative requirement for the genesis of power aware algorithms and such transformations at the instruction and Source-code level to optimize the energy efficiency of a given software product thereby making it a Green Software.