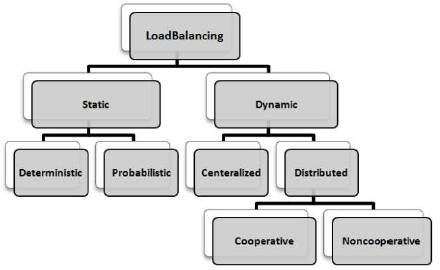

Figure 1. Taxonomy of Load Balancing Algorithms

With elevation in the technologies, parallel computing has become an apparent area with the aspiration of providing adequate and faster result for various applications. Parallel computing focuses on parallel processing between operations in some way. Today, parallel computing is growing at an accelerating rate. Parallel computing emphasizes parallel and concurrent computation among different processors. Parallel computing refers to sub-division of a problem, and executing these sub problems simultaneously. Imbalance in the load is a well-known problem in the region which involves parallelism. One of the challenging tasks of parallel computing is to balance the load among processors. Load balancing optimizes the way processing load is shared among the multiple processors. Load balancing algorithms are broadly categorized as Static and Dynamic. Static load balancing distributes the processes to processors at compile time, while dynamic load balancing bind processes at run time. Static load balancing algorithms may be either deterministic or probabilistic. Dynamic load balancing is further categorized as centralized and distributed. This paper confers about the study and analysis of load balancing approaches in multiprocessor systems. Performances of sender initiated load balancing is observed on the basis of execution time, speedup and steal ratio. To implement load balancing algorithms, scale simulator is used.

Load balancing is a term which is basically materialized from electrical engineering. It is a technique used by electric power stations to store excess electrical power when demand is low, and release that power when demand increases. Load balancing re-allocates the total load among the processors to improve resource utilization and response time of the job, simultaneously eradicates the case in which some of the processors are overloaded while others are underloaded. Load balancing emphasizes the fairness of the processing load among the shared multiple processors. Load balancing resettles the load from the heavy loaded processor to lightly loaded processor. Resettlements require migration; migration of a process is a mechanism which deals with the actual transfer of the process from its source node to its destination node and handling the related messages during and after migration (Sinha, 1998). Load balancing, with its implication of attempting to equalize workload on all the nodes of the system, is not an appropriate goal. This is because, the overhead involved in gathering state information to achieve this objective is normally very large, especially in distributed systems having a large number of nodes. Moreover, load balancing in the strictest sense is not achievable because the number of processes in a node is always fluctuating and the temporal unbalance among the nodes exists at every moment, even if static load is perfectly balanced. Rather, it is necessary and sufficient to prevent the nodes from being idle while some other nodes have more than two processes. Designing a good load balancing algorithm is a difficult task because of the issues involved like Load estimation policy, Process transfer policy, State information exchange policy, Location policy, Priority assignment policy and Migration limiting policies (Sinha, 1998).

Multiprocessor system has more than one processor in close communication, sharing the computer bus, the clock, memory as well as peripheral devices. This system is referred to as tightly coupled system. By increasing the number of processors, more work can be done in lesser time and throughput will be increased. It saves money as compared to multiple single systems because the processors share peripheral devices and power supplies. They also increase reliability, as its function is to distribute tasks properly among several processors, and in this way the failure of one processor will not halt the system, but will only slow it. Based on the processor symmetr y, multiprocessors can be classified as symmetric and asymmetric multiprocessor. Based on memory access of the processors, multiprocessor systems are classified as Uniform Memory Access (UMA) systems and Non-Uniform Memory Access (NUMA). systems Based on the way of connectivity and communication in the system, processor coupling is classified into tightly-coupled multiprocessor systems and loosely coupled multiprocessor systems. Based on memory sharing, it is classified as shared memory and distributed memory. Balancing of load is one of the hardest problem in multiprocessor systems. Multicore is a type of multiprocessor which is designed in such a way that it places multiple processors on a single die. Each processor is called a core (Cameron and Tracy, 2008). As there is increased chip capacity, placing multiple processors on a single chip became practically possible. These designs are known as Chip Multiprocessors (CMPs), because they allow single-chip multiprocessing. Single-chip multiprocessors are popularly known as Multicore processor. A multi-core processor is a single computing component with two or more independent actual processors. The instructions on multi-core are ordinary Central Processing Unit (CPU) instructions, but the multiple cores can run multiple instructions at the same time, increasing the overall speed of the programs. Multicores are becoming popular for both server and desktop processors. By the next decade, it is expected to have processors with hundreds of cores on a chip. In order to efficiently utilize these processing cores and to improve their performance, it is important to assure that the processes are evenly allocated to each core. Otherwise, some cores would be idle while the others are running. The goal of load balancing is to improve the performance of the system, optimize the resource utilization, maximize the throughput of the processor and minimize the task response time of processing. There are some conditions which can change a load of processors at run time like, the arrival of a new process, completion of any running processes and abnormal termination of any existing processes (Thakur and Kumar, 2014).

Load balancing can be done in two ways; centralized or de-centralized. In centralized strategies, the load balancer is located at one master workstation node where all decisions are made, while in decentralized strategies, the load balancer is divided into all workstations. There are various policies which are used during the process of Load Balancing.

The main goal of load balancing algorithms is to balance the workload on all the processors of a system. However, before an algorithm can attempt to balance the workload, it is necessary to decide how to measure the workload of a particular processor. This policy determines how to estimate the workload of a particular node of the system.

The strategy of load balancing algorithm is based on the idea of transferring some processes from heavily loaded processors to the lightly loaded processors for processing. Hence, policy is needed to determine whether a node is lightly loaded or heavily loaded and where to transfer process.

Once a decision has been made through the transfer policy to transfer a process from a node, the next step is to select the destination node for execution of a transferred task. This policy determines which processor will execute the migrated task.

This policy determines how to exchange the system load information among the nodes. This can be done in three ways; periodic, broadcast (when state change) and on demand.

This policy determines the priority of execution of local and remote processes at a particular node.

This policy determines the total number of times, a process can migrate from one node to another.

This policy determines the appropriate period as, when to start the load balancing operation.

As an area of research, load balancing has received considerable attentions since early days of parallel and distributed systems. Load imbalance problem arises in many applications, but most importantly plays a special role in the operation of Parallel and Distributed computing. Load balancing algorithms are broadly categorized as Static and Dynamic. Taxonomy of load balancing algorithms is shown in Figure 1 ( Lu and Lau, 1994; Thakur and Kumar, 2015).

Figure 1. Taxonomy of Load Balancing Algorithms

Static load balancing redistributes the processes to processors at compile time, while dynamic load balancing binds processes at run time. Static load balancing algorithms may be either deterministic or probabilistic. Deterministic algorithms use the information about the properties of nodes and the characteristics of the processes. Probabilistic load balancing uses information regarding static attributes of the system such as number of nodes, the processing capability of each node, the network topology and so on. Dynamic load balancing is further categorized as centralized and distributed. Centralized load balancing technique stores global information at a central location and use this information to balance the load. Centralized load balancing uses the computing and storage resources of one or more processors while in distributed load balancing, the state information is distributed among the processors that are responsible for managing their own resources or allocating tasks residing in their queues to other processors. In non-cooperative approach, each processor has autonomy over its own resource while in cooperative load balancing, processes work together towards a common system-wide global balance. Dynamic load balancing is further classified depending on who initiated the load balancing as sender initiated, receiver initiated and symmetrically initiated (Shivaratri et al., 1992) . In sender initiated approach, the overloaded nodes transfer one or more of their tasks to several underloaded nodes. In receiver-initiated approach, underloaded nodes request tasks to be sent to them from nodes with higher load. In the symmetric approach, both the underloaded as well as the overloaded nodes may initiate load transfers ( Ghanem 2004; Thakur and Kumar, 2015)

Load balancing is required to dispense the load among processors, so that maximum resource utilization and minimum task execution time can be possible. Although there is quite a large number of research been done on load balancing techniques, there are some noteworthy papers which motivated this work. Musoll (2008) studied a thermal friendly load balancing technique for the multicore processor. In this work, they provided low overheads in performance and energy, with respect to the highest performance. The author investigated the effect of power gating, a technique that is very effective in reducing the overall power consumption of the processor. A new load balancing technique had been proposed in this work, which had features of a low hot spot of Round Robin technique and low-performance penalty of Lower index. The author stressed on power saving by using load balancing. Zomaya and Teh (2001) investigated how a genetic algorithm can be employed to solve the dynamic load balancing problem. In their proposed method, a fixed number of tasks, each having a task number and a size, were randomly generated and placed in a task pool from which tasks were assigned to processors. Max span and average utilization were calculated for evaluation of combined fitness function. This approach is effective for large number of tasks Rathore and Chana (2011) provided cognitive analysis of different approaches of load balancing and job migration in a distributed system. The authors presented the classification of dynamic load balancing mechanism and basic steps used for load balancing. Comparative study of various load balancing algorithms was discussed on the basis of various parameters like environment, load monitoring, synchronization, rebalancing criteria and job migration. The authors pinpointed the loopholes of various existing load balancing and job migration techniques. The authors, after an exhaustive review found that, there is a need for innovative load balancing techniques. Srivastava et al. (2011) proposed a dynamic load balancing algorithm for improving the performance of grid computing. In this work, there were four basic steps: monitoring workstation performance and exchanging this information between workstations, calculating new distributions, making the work movement decision and finally, actual data movement. A Load Balancing component had been developed which is executed in grid environment using GridSim toolkit. The application was developed using ASP.NET 3.5 and SQL Server 2005 database server.

In this section, three load balancing algorithms are discussed namely random algorithm, hierarchical algorithm, and cluster-aware algorithm and are compared using a Scale Simulator. In random load balancing, the load is transferred randomly from overloaded node to any underloaded node. Hierarchical load balancing was proposed for divide and conquer approach. Here, systems are organized into a tree of manager and worker. In case of cluster aware load balancing algorithm, if some processor is underloaded then it prefers to take a load from remote cluster rather than from its local cluster. If the remote cluster is not available, then only it takes a load from local cluster Load balancing needs migration of task (Janjic, 2012).

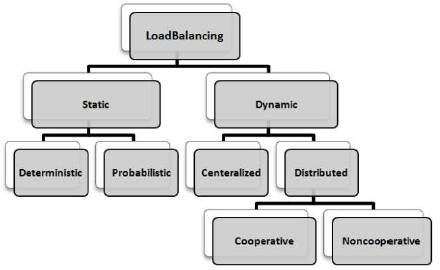

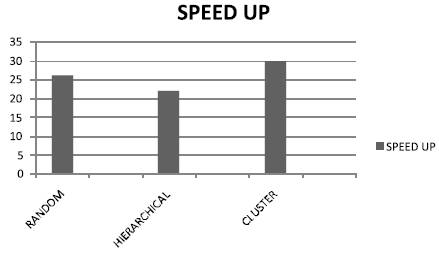

Performance is measured using factors like execution time, speedup and steal ratio for load balancing algorithms. In parallel computing, speedup refers to how much faster a parallel algorithm can run in parallel. Speedup is the ratio of, before improvement to after improvement of the load. Speedup (random-26, Hierarchical-22, Cluster aware-30). Steal ratio is for transferring a number of task to sender nodes. For experimental purpose, a computer system is taken with dual core processor having 1.83 GHz speed, and Linux operating system. In this experiment, load balancing is executed in Scales Simulator. Performance of load balancing algorithms is shown in Table 1.

Table 1. Performance of Load Balancing Algorithm

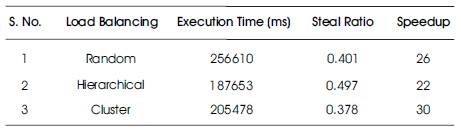

Performance of Random, Hierarchical and Cluster aware load balancing algorithms are obtained and compared. The results of simulation study done to compare the performance of these load balancing algorithms are tabulated in Table 1. In Figures 2, 3, and 4, graphs are plotted using the observation made from Table 1. From Figure 2, it is found that the execution time of a random algorithm is more as compared to other algorithms. Due to random selection of processor and tasks, there may be a chance that already loaded processor can be chosen, while hierarchical takes lesser execution time as it chooses only the parent processor if it is underloaded. Hence, from an execution point of view, Hierarchical load balancing is the best. From Figure 3, it is observed that Steal ratio is more in case of hierarchical and is least for cluster aware load balancing. In hierarchical load balancing, more load is stolen by the victim. Hence, from steal ratio point of view, hierarchical load balancing is the best. From Figure 4, it is clear that speedup is more in case of cluster aware and is least for hierarchical. Cluster aware mostly steals work from remote cluster, so speedup is more in the case of cluster aware. Hence, from speedup point of view, cluster aware load balancing is the best.

Figure 2. Graph of Execution Time for Three Load Balancing Algorithms

Figure 3. Graph of Steal Ratio for Three Load Balancing Algorithms

Figure 4. Graph of Speed-up for Three Load Balancing Algorithms

Whenever a complex program is implemented on a system, only one core is utilized and others are utilized rarely which can be verified experimentally. To avoid such type of situation, load balancing is needed. Load balancing is an important term in computer domain where common features are that, the number of processors is more in this type of computing. Load balancing is a challenging task in this type of computing. It is found from the simulation results that, Hierarchical load balancing is better as compared to cluster load balancing and random load balancing in terms of execution time and steal ratio. Cluster aware is better in terms of speedup.