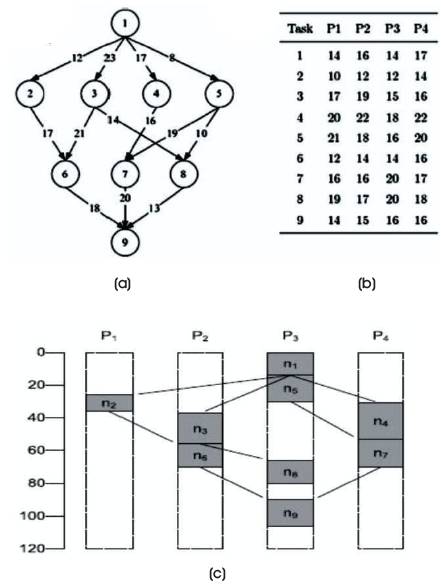

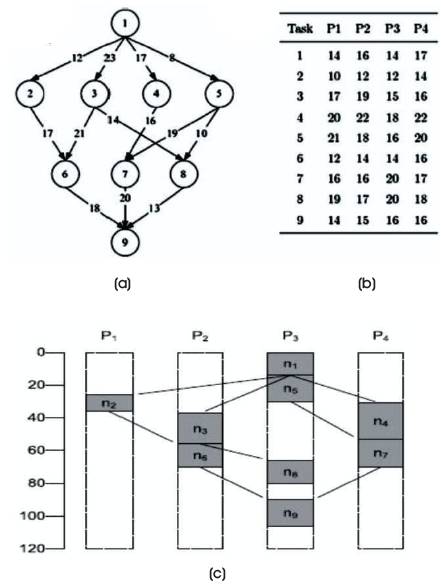

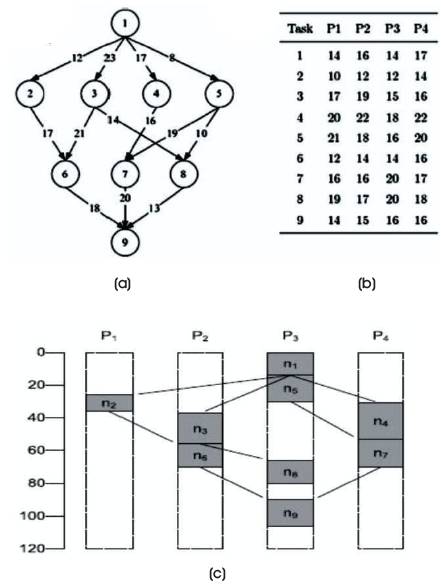

Figure 1. Multiprocessor Scheduling Problem, (a) A Simple Task Graph with Communication Costs, and (b) Computation Costs, (c) A Sample Schedule

Parallelism has been employed for many years, for high performance computing. Parallel computers can be classified according to the level at which the hardware supports parallelism with multi-core and multi-processor computers having multiple processing elements within a single machine, while clusters, Massively Parallel Processors (MPPs), and grids use multiple computer to work on the same task. Scheduling and Mapping of heterogeneous tasks to heterogeneous processor dynamically in a distributed environment has been one of the challenging area of research in the field of Grid Computing System. Several general purpose approaches with some modified techniques has been developed. This paper presents a comparative study of different algorithms such as Directed Search Optimization (DSO) trained Artificial Neural Network (ANN), Parallel Orthogonal Particle Swarm Optimization (POPSO), Lazy Ant Colony Optimization (LACO), and Genetic Algorithms (GA), in the basis of workflow scheduling in grid environment of multiprocessors. It also presents various heuristic based methods used in task scheduling.

Heterogeneous tasks are computed with heterogeneous processors, but number of issues arises in normal distribution process whereas it can be nullified in parallel distributed system. It is a NP-hard (non-deterministic polynomial -time hard) problem. Scheduling of dependent or independent tasks is a promising approach to meet the effectiveness of computational requirements (Tripathy et al., 2015a; 2015b). Dynamic task scheduling has number of advantages, such as efficient computing, lower computational cost, lower communication cost and so on. So, a lot of research is going on in dynamic task scheduling problem.

The main goal of the dynamic task scheduler is to maintain an ordered list of tasks, by assigning priority to each task. Then tasks are assigned to processor dynamically with their priorities. Recent research is focused on to minimize the execution time of a task among different processors. Different heuristic and metaheuristic approaches are defined for task scheduling problems in an efficient manner. This paper provides an overview of current optimization techniques such as:

Task scheduling means assignment of dependent or independent tasks to different processors. In the multiprocessor scheduling problem, the schedule is normally presented as Task Graph (TG). The task graph T=(N,S) consists of a set N of 'n' nodes and a set S of 's' sides, (Figure 1 (a)). Here the tasks are dependent on an entry task and exit task (Tripathy et al., 2015a; 2015b). The task with no predecessor is the entry task and with no successor is the exit task. The weights between the nodes are called as communication costs. Communication cost would be calculated for the dependent tasks with different processors. So it is assigned with null, if the tasks are not dependent and are computed in the same processor. Another term used is ‘computation cost’ of a task which is defined in Figure 1 (b). The task execution time is considered as computation cost in this approach.

Figure 1. Multiprocessor Scheduling Problem, (a) A Simple Task Graph with Communication Costs, and (b) Computation Costs, (c) A Sample Schedule

The aim of this approach is to allocate tasks to different processors with minimum communication cost and computation cost. There are different task scheduling methods available such as List Scheduling Method, Clustering-based Scheduling Method, Task Duplication based Scheduling Method and Guided Random Search Method. This paper presents some scheduling methods in comparison with other schedulers, their advantages and disadvantages. A sample schedule is shown in Figure 1 (c).

There are various methods used for the optimization technique. Hybridization of these techniques are more efficient in the task scheduling problem.

Artificial Neural Network (ANN) is an efficient approach mostly used for function approximation and classification. It is a 3-layer network used for task assignment problem. A Mini-Mental State Estimation (MMSE) method is used in second layer of ANN to train the weights. It is used to estimate the severity and progression of cognitive impairment and also used to find the cognitive changes in an individual, over time. In the third layer, Directed Search Optimization (DSO) is used for the training of data and also to provide an estimate of the input task. In this Neural Network, second layer computes the weights of ANN while the third layer calculates one step prediction. Then the estimated task is fed back to second layer for task assignment.

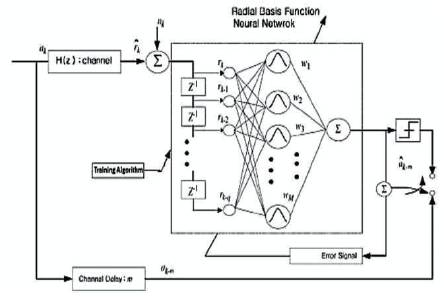

Radial Basis Function Neural Network (RBFNN), shown in Figure 2, is also considered to improve the estimation in task scheduling problem. This network is also trained by DSO to provide the optimal task at the desired processors, and is presented by Tripathy et al. (2015a; 2015b).

Figure 2. Model of Directed Neural Network

Visalakshi and Sivanandam (2009) presented PSO technique which operates based on the behavior of particles. All particles are moved in an open space with a best position. The position is updated itself by comparing the best particles’ position. Orthogonal PSO is an extended PSO, used to refine initial values from discrete values (Visalakshi and Siyanandam, 2009). This algorithm can find the solution with best points and it iterates more to improve the quality of the solution.

Parallel processing is mostly used for simultaneous computation. This method uses master-slave concept with OPSO based on parallelism. Masters allot the populations of particle which is orthogonal, to Slaves. Initial swarm is generated randomly by the Master. Then masters send orthogonal design to all its slaves. Slaves initialize the personal best and global best of entire Swarm using fitness function. The slaves obtain the optimal solution at the end of a specified iteration time and sends it to the master. Then master again decides the best among all the slaves, based on desired output.

ACO is a probabilistic technique for computing, and in general, it is used to find a good path in a directed or nondirected graph. Normally, ants create path towards the desired output using the smell of a chemical secreted by each ant. Some lazy ants are generated which copies a major portion of its path, from the best ant. According to this technique, number of lazy ants are created. Lazy ants also remain alive till the fitter lazy ants have been generated in a successive generation (Tiwari and Vidyarthi, 2016) . So the lazy ants help in faster solution and increases the probability of best solution.

This scheduling method uses grouping of different nodes in a task graph. To reduce the communication cost, this technique is more applicable. It finds the unnecessary communication between the processors. So the total task is divided into clusters using various techniques and each cluster is assigned to virtual processor. This process reduces the communication cost by finding the unnecessary communication. Then in the next step, all the clusters are assigned to actual processor to perform the computation. This technique may require more number of processors because each cluster will be assigned with an individual processor (Fan et al., 2015).

Different clustering algorithms are used for grouping the task graph. Dijkstra's algorithm, K-means, fuzzy C-means, Nearest Neighbor algorithms and many other techniques give better results for grouping.

Page and Naughton (2005) and Omara and Arafa (2010) have done research on Genetic Algorithm (GA) which is an evolutionary algorithm, based on natural selection. Commonly, it is used for high-quality solutions for optimization. Parallelism of genetic algorithm added new feature in case of large population size. The basic idea is to divide the task into subtask and assign it to different processor to execute. So based on parallelism master, slave technique is applied and evolution of fitness function is distributed among different processors. Then, selection and crossover occurred for entire population. In general, master always wait for the slowest slave processor before going to the next generation. Parallelism may avoid such problem and doesn't wait for the slowest slave processor. So the execution time can be minimized. By considering the total execution time and task duplication technique, two modified genetic algorithms have been developed. Two fitness functions have also been developed.

Heterogeneous Earliest Finish Time (HEFT) algorithm is a scheduling algorithm that has a limited number of processors fully connected. In two steps, it completes the operation. First, it determines the task priority and Second, the selection of processor. For every iteration, it finds the task having highest priority and allocates it to the processor. In heterogeneous computing system, HEFT is one of the best heuristic algorithms as discussed by Topcuoglu et al. (2002) and Kafil and Ahmad (1998).

Boeres et al. (2004) says that Combined Heat and Power (CHP) algorithm is considered as the best algorithm if the environment is having unlimited slow processor. It creates clustering tasks using clustering algorithm and allocates the clustering generated in the last step to the limited heterogeneous processor. Its performance is better than the HEFT algorithm.

Multiple Task Allocation (MTA) algorithm is a multi-task scheduling algorithm for a distributed computing system. It is a good load balancing algorithm. It also uses clustering operations for the task and processor (Boeres et al., 2004) .

Hagras and Janecek, (2004) presented Heterogeneous Critical Parents with Fast Duplicator (HCPFD) algorithm performs for a fully connected system with a limited number of processors in computing environment. It determines the task’s priority.

Critical Parents with Fast Duplicator (CPFD) algorithm is also used in limited processors. It performs the operation by creating task duplication techniques as discussed by Ahmad and Kwok (1994) and Liu et al. (2006).

To develop an efficient scheduler in a high speed digital communication, various resources are interconnected with high speed networks. With the advent of the age of big data, it is more important to achieve a high performance computing.

Hence, a good task scheduling method is required to develop an efficient computational environment.

The aim of dynamic task scheduling method is to assign tasks to different processors with a minimal communication cost and minimal computation cost. Various task scheduling methods have been developed, which are listed below.

If the task graph is having limited number of processors, then list scheduling method is suitable for better performance in lower scheduling time. All the processors must estimate the execution time to proceed next node in the task graph. Each processor can only get the result of sub scheduling. It may not give better result as there is no intelligence in task graph.

In large number of processors, clustering based scheduling method has added more advantages like scalability, less load, less energy consumption and more robustness. It is not suitable because more number of processors are required to assign every cluster with a processor.

Task duplication technique also can achieve better performance in comparison with others. It is mostly used to reduce the execution time.

Guided Random search method gives better scheduling performance, however the cost time of their scheduling is always higher than other scheduling algorithms. All these various methods are categorized in Figure 3.

Figure 3. Classification of Task Scheduling Methods

The goal of the Dynamic Task Scheduling in grid computing environment is to perform a task with high computing power, scalability, and reliability. Scheduling of task is the key issue for high performance computing.

Minimum communication costs and minimum computation costs are also required to perform in a high performance computing environment. Here, some heuristic algorithms are described to create an efficient scheduler. Different typical methods on task scheduling problem are also discussed.

Artificial Neural Network (ANN) is discussed with Directed Search Optimization (DSO). ANN is used to allocate the tasks to processor in an efficient manner. Training of the neurons in ANN is done by DSO, which adds some feature to get the optimized solution for task scheduler. Another network discussed here that is Radial Basis Function Neural Network (RBFNN) is also directed by DSO.

Automatic Ant Colony Optimization technique introduced Lazy Ant concept. It performs scheduling of the tasks with minimum communication cost and ver y faster communication.

Parallel Orthogonal Particle Swarm Optimization (POPSO) is also discussed which introduced master-slave concept and gives optimal results.

Different clustering based scheduling methods are also discussed which reduce unnecessary communications and make the system faster. One disadvantage is that, more number of processors may be required for this technique.

Modified Genetic algorithms are discussed in terms of task duplication, priority based scheduling and load balancing system.

All the algorithms are more efficient in task scheduling environment with less complexity. Other schedulers are also developed using different techniques. But these are the recent methodologies which give better results.