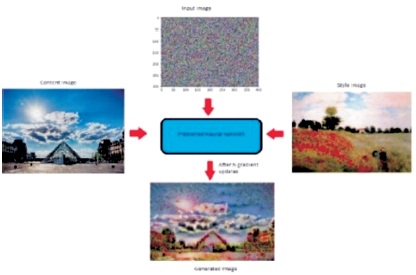

Figure 1. Style Transfer Steps

With the advancement of Artificial Intelligence and Deep Learning techniques, Neural Style Transfer has become a popular method for applying complex styles and effects to images, a task that would have been nearly impossible to do manually a few years ago. After one image had been used successfully to style another, research turned to transferring styles from still images to real-time video. The major problem in doing so is that, unlike styled still images, styled video suffers from inconsistent “popping”, that is, inconsistent stylization from frame to frame, and from "temporal inconsistency", that is, flickering between consecutive stylized frames. Many video style transfer models have succeeded in reducing temporal inconsistency but have failed to guarantee fast processing speed and good perceptual style quality at the same time. In this study, we look to apply multiple styles to a defined region of interest of a video, since many models can transfer styles to a whole frame but not to a specific region. For this, a convolutional neural network with a pre-trained VGG-16 model has been used in a transfer learning approach. Our results show that style can be transferred to a specific region of interest of the video frame. In addition, different tests were conducted to validate the model (pre-trained VGG-16) and to show for which domains it is suitable.

With the advancement of deep learning, the topic of Neural Style Transfer has gained a lot of interest. Here, style transfer means transferring the style of one image to another. For example, given two images, a content image and a style image, the task is to transfer the style of the style image to the content image without losing important content information such as the edges, corners and borders of the image. In this context, style means the textures, patterns, colors and brush strokes that are transferred.

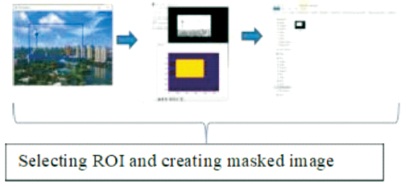

The principle of neural style transfer (Koidan, 2018) is to define two distance functions: one that describes how different the content of two images is (here, the content image and the stylized image), and one that describes how different two images are in terms of style. Then, given three images (a desired style image, a desired content image, and an input image initialized with the content image), as shown in Figure 1, we transform the input image to minimize its content distance from the content image and its style distance from the style image.
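The two distance functions can be made concrete with a small NumPy sketch. It assumes the feature maps of one network layer (in this study, a VGG-16 layer) are already available as `(C, H, W)` arrays; random arrays stand in for them here. The content distance compares raw feature maps, the style distance compares Gram matrices (channel-to-channel correlations). The normalisation constants and the weights `alpha` and `beta` are illustrative assumptions, and practical implementations sum the style distance over several layers rather than one.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-correlation (Gram) matrix of a (C, H, W) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel
    return flat @ flat.T / (c * h * w)  # normalisation constant is an assumption


def content_distance(input_feats: np.ndarray, content_feats: np.ndarray) -> float:
    """How different the content is: mean squared difference of raw feature maps."""
    return float(np.mean((input_feats - content_feats) ** 2))


def style_distance(input_feats: np.ndarray, style_feats: np.ndarray) -> float:
    """How different the style is: mean squared difference of Gram matrices."""
    diff = gram_matrix(input_feats) - gram_matrix(style_feats)
    return float(np.mean(diff ** 2))


def total_loss(input_feats, content_feats, style_feats, alpha=1.0, beta=1e3):
    """Weighted sum minimised with respect to the input image (weights are illustrative)."""
    return (alpha * content_distance(input_feats, content_feats)
            + beta * style_distance(input_feats, style_feats))


# Random arrays stand in for one VGG-16 layer's activations.
rng = np.random.default_rng(0)
content = rng.standard_normal((64, 32, 32))
style = rng.standard_normal((64, 32, 32))
x = content.copy()  # input image initialised with the content image
print(content_distance(x, content), style_distance(x, style) > 0)  # 0.0 True
```

At initialisation only the style term is non-zero, so minimising the total loss pulls the input towards the style statistics while the content term keeps it close to the content image.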


Since 2015, many studies have been conducted on this topic, and it has gained much popularity, especially in mobile image filtering applications such as Prisma. Neural Style Transfer has many applications, such as image filtering, visual effects (VFX), computer-generated art, and finding the right texture for real-world objects such as tables, walls and clothes.

After successful results in image style transfer, studies turned to style transfer for video. The major problem associated with style transfer to video is styling every frame consistently, so that temporal consistency between stylized frames is preserved and the video does not flicker. Many video style transfer models have succeeded in reducing this inconsistency but have failed to guarantee fast and smooth transfer of style to video. In this study, we look at how to blend multiple styles (Mroueh, 2019) into only a specific region of interest in a video frame. For this, a convolutional neural network together with a pre-trained VGG-16 model for transfer learning is used. The main objectives of this research are summarized as follows:

Existing models only provide a method to implement style interpolation transfer for images, not for videos. Therefore, our main goal in this research is to propose a method to blend multiple styles into a specific region of interest of a video using a style interpolation transfer approach.

The findings of Cui et al. (2017) have been used to implement the proposed approach for this study.

The rest of this paper is organized as follows. First, previous studies are discussed, including how they have evolved over time, the different approaches taken, and their strengths and limitations. Next, the proposed approaches, the architectures used and their implementation are described. The results obtained from the proposed approach are then validated and compared with the state-of-the-art method. Finally, the study is summarized and its strengths and limitations are discussed.

Many recent studies have focused on the problem of Neural Style Transfer since its introduction by Gatys et al. (2016) in 2015. Research has evolved towards finding different approaches to transfer styles among images and videos efficiently. Early studies focused on image style transfer, which gradually achieved very good results. Thereafter, studies focused on style transfer to video, where issues such as obstructions and frame distortion were discussed.

Leon Gatys conducted the first study on Neural Style Transfer for images in 2015. This study (Gatys et al., 2015) first showed how to separate the style of an image from its content, so that the style can be applied to other images. They used deep learning, specifically convolutional neural networks and their ability to extract features effectively, to implement the proposed method.

In 2016, Justin Johnson improved the method proposed by Leon Gatys and was able to perform style transfer to images in real time (Johnson et al., 2016). Instead of solving the optimization problem proposed by Gatys separately for every image, they trained a feed-forward neural network to solve it, achieving real-time image style transfer. The feed-forward transformation network is trained with a perceptual loss function that depends on high-level features of a pre-trained network, and it produces state-of-the-art, visually pleasing results.

After the great success of style transfer to images, Manuel Ruder first looked at style transfer to video in 2016 (Ruder et al., 2016).

In this study, they extended the approach of Leon Gatys and discussed how to preserve temporal consistency and achieve smooth style transfer across the frames of a video.

To preserve smooth transitions between frames, they used a temporal constraint that penalizes deviations between consecutive stylized frames. They showed that their method can produce stable and visually attractive videos even in the presence of strong occlusion and fast motion.
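A minimal sketch of a weighted temporal penalty of the kind Ruder et al. (2016) describe is shown below: the current stylized frame is compared with the previous stylized frame warped into the current one, and a per-pixel weight in [0, 1] switches the penalty off where pixels are disoccluded. The optical-flow warping is assumed to be done elsewhere, and the function name and argument layout are hypothetical.

```python
import numpy as np


def temporal_loss(frame, warped_prev, weights):
    """Weighted temporal penalty: (1/D) * sum_k c_k * (x_k - w_k)^2.

    frame:       current stylised frame, shape (H, W, C)
    warped_prev: previous stylised frame warped into the current frame, shape (H, W, C);
                 the optical-flow warping itself is assumed to happen elsewhere
    weights:     per-pixel weights c in [0, 1], shape (H, W); 0 where pixels are
                 disoccluded, so those pixels are not penalised
    """
    if frame.shape != warped_prev.shape or weights.shape != frame.shape[:2]:
        raise ValueError("shape mismatch between frame, warped frame and weights")
    d = frame.size  # D = H * W * C
    return float(np.sum(weights[..., None] * (frame - warped_prev) ** 2) / d)


h, w = 4, 4
frame = np.ones((h, w, 3))
warped = np.zeros((h, w, 3))
print(temporal_loss(frame, warped, np.ones((h, w))))   # 1.0: every value deviates by 1
print(temporal_loss(frame, warped, np.zeros((h, w))))  # 0.0: all pixels treated as occluded
```

Adding this term to the per-frame style and content losses discourages flicker without forcing pixels to match where the previous frame carries no valid information.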

In 2017, Brandon Cui extended the technique of Fast Neural Style Transfer to multiple styles (Cui et al., 2017). In the proposed approach, they implemented a single style transfer network and connected it to a pre-trained VGG-16 network. They showed that the proposed method produced promising preliminary results for multiple style transfer.

In 2017, Yijun Li aimed to transfer arbitrary visual styles to content images (Li et al., 2017). The key ingredient of their method is a pair of feature transforms, whitening and coloring, which are embedded in an image reconstruction network. They demonstrated the effectiveness of their algorithm by generating high-quality stylized images and comparing them with a number of recent methods.
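The whitening and coloring pair can be illustrated on plain feature matrices. The sketch below assumes `(C, N)` matrices (C channels, N spatial positions): whitening removes the correlations of the content features so that their covariance becomes the identity, and coloring imposes the covariance and mean of the style features. It omits the encoder/decoder network and the content-style blending used in the full method, and the small `eps` is an assumption added for numerical stability.

```python
import numpy as np


def _cov_eig(centered, eps):
    """Eigen-decomposition of the covariance of a centred (C, N) feature matrix."""
    n = centered.shape[1]
    cov = centered @ centered.T / (n - 1)
    vals, vecs = np.linalg.eigh(cov)  # covariance is symmetric, so eigh applies
    return np.maximum(vals, 0.0) + eps, vecs


def whiten_color(content_feats, style_feats, eps=1e-8):
    """Whitening-and-colouring transform on (C, N) feature matrices."""
    mc = content_feats.mean(axis=1, keepdims=True)
    ms = style_feats.mean(axis=1, keepdims=True)
    fc = content_feats - mc
    fs = style_feats - ms
    # Whitening: remove the content feature correlations (covariance -> identity).
    dc, ec = _cov_eig(fc, eps)
    whitened = ec @ np.diag(dc ** -0.5) @ ec.T @ fc
    # Colouring: impose the style covariance, then add back the style mean.
    ds, es = _cov_eig(fs, eps)
    colored = es @ np.diag(ds ** 0.5) @ es.T @ whitened
    return colored + ms


rng = np.random.default_rng(0)
content = rng.standard_normal((4, 500))
style = rng.standard_normal((4, 4)) @ rng.standard_normal((4, 500)) + 3.0
out = whiten_color(content, style)
print(np.allclose(np.cov(out), np.cov(style), atol=1e-6))
```

Because only second-order statistics are matched, no training on a particular style is required, which is what makes the transfer arbitrary-style.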

In 2017, Dongdong Chen proposed StyleBank for neural image style transfer (Chen et al., 2017). StyleBank is composed of multiple convolution filter banks, each of which explicitly represents one style. They report that their method is easy to train, runs in real time, and produces results that are qualitatively better than those of existing methods.

Previous studies have not attempted to transfer multiple styles to a specific region of interest in a video. Therefore, our study combines the concepts of image style transfer, style transfer to video and multiple style transfer to implement multiple style transfer to video for a specific region of interest.

In previous methods for style transfer to video, a single style has been applied to the entire frame of the video. As a result, it was not possible to apply only the styles of interest to only a specific region of interest. In this study, the following two approaches were taken to overcome these limitations.

In the first approach, a masked region corresponding to the region of interest was extracted from each frame of the video using a Python script. The purpose of the masked image is that, unlike usual style transfer where the entire frame is styled, only the masked area is styled.
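The Python script used for this step is not given, so the following is only a hypothetical sketch of what mask extraction can look like with NumPy. A rectangular region is used purely for illustration; any binary mask, for example one produced by a segmentation model, would be applied the same way. The function names and coordinates are assumptions.

```python
import numpy as np


def rectangular_mask(height, width, top, left, bottom, right):
    """Hypothetical helper: binary (H, W) mask, 1 inside the region of interest, 0 elsewhere."""
    mask = np.zeros((height, width), dtype=np.float32)
    mask[top:bottom, left:right] = 1.0
    return mask


def apply_mask(frame, mask):
    """Keep the region of interest of an (H, W, C) frame and zero out everything else."""
    return frame * mask[..., None]  # broadcast the mask over the colour channels


frame = np.full((4, 6, 3), 0.5)
mask = rectangular_mask(4, 6, top=1, left=2, bottom=3, right=5)
masked = apply_mask(frame, mask)
print(masked[..., 0])
```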

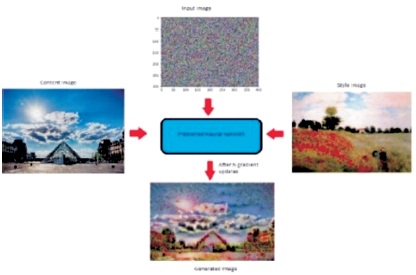

Next, the masked frame, the original video frame and the style image were given as inputs to a convolutional neural network (Marcelino, 2018). Figure 2 shows the steps taken in Approach 1, and Figure 3 shows the results obtained at each step.
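How the network uses the mask is not specified here. One common option, sketched below as an assumption rather than the method used in this study, is to compute the style statistics of the generated frame only over positions inside the mask, so that only the region of interest is pushed towards the style image. The mask is assumed to be already resized to the resolution of the feature map, and both function names are hypothetical.

```python
import numpy as np


def masked_gram(features, mask):
    """Gram matrix of a (C, H, W) feature map using only positions where mask == 1.

    mask is an (H, W) binary mask already resized to the feature-map resolution.
    """
    c = features.shape[0]
    flat = (features * mask[None]).reshape(c, -1)  # positions outside the mask become 0
    n = max(float(mask.sum()), 1.0)                # number of positions inside the mask
    return flat @ flat.T / (c * n)


def masked_style_loss(input_feats, style_feats, mask):
    """Compare the masked region's style statistics with those of the whole style image."""
    full = np.ones(style_feats.shape[1:])
    target = masked_gram(style_feats, full)  # the style image is used unmasked
    return float(np.mean((masked_gram(input_feats, mask) - target) ** 2))


rng = np.random.default_rng(0)
feats = rng.standard_normal((8, 16, 16))
style = rng.standard_normal((8, 16, 16))
mask = np.zeros((16, 16))
mask[4:12, 4:12] = 1.0
print(masked_style_loss(feats, style, mask))
```

A content loss over the whole frame would keep the region outside the mask close to the original frame.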

Figure 2. Steps Followed for First Approach

Figure 3. Approach 1 Steps

The main drawback of this approach was the high computational power required to style each frame using the masked frame, the original frame and the style image at once. In addition, there was no way to style all of the frames at once, which made real-time style transfer impossible.

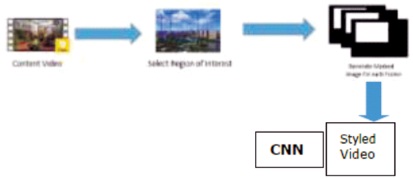

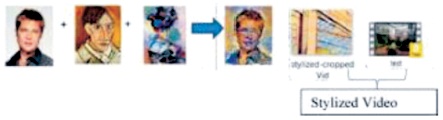

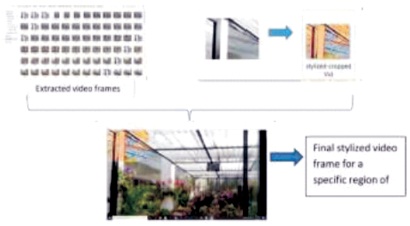

The second approach first styles the video by blending multiple styles (two styles) over the entire frame (Handa et al., 2018). Next, the styled video is cropped to the dimensions of the region of interest to be styled (the region of interest is a rectangular region). Finally, the cropped video and the original source video are combined to give the final stylized video, in which the style is applied only to the cropped region within the whole frame. Figure 4 shows the steps taken in Approach 2, and Figure 5 shows the results obtained.

The steps taken in this approach are as follows:

First, the source video was cropped to the required dimensions using a Python script that extracts each frame, crops it, and finally recombines the frames into the cropped video.
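The cropping step can be sketched with NumPy, assuming the video has already been decoded into a `(T, H, W, C)` array (decoding and re-encoding, for example with OpenCV, are omitted). The function name and the region coordinates are hypothetical.

```python
import numpy as np


def crop_frames(frames, top, left, height, width):
    """Crop each frame of a (T, H, W, C) video to the same rectangular region of interest."""
    _, h, w, _ = frames.shape
    if top < 0 or left < 0 or top + height > h or left + width > w:
        raise ValueError("region of interest lies outside the frame")
    cropped = [frame[top:top + height, left:left + width] for frame in frames]
    return np.stack(cropped)  # recombine the cropped frames into a video array


video = np.zeros((5, 120, 160, 3), dtype=np.uint8)  # 5 dummy frames
print(crop_frames(video, top=20, left=40, height=64, width=80).shape)  # (5, 64, 80, 3)
```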

The cropped video was then styled using style interpolation transfer, that is, transferring style by blending multiple styles together (Johnson et al., 2016).
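The text does not state at which level the styles are blended (losses, Gram-matrix targets, or network parameters). One simple loss-level option, sketched here as an assumption, is to form a convex combination of the Gram matrices of the style images and use it as the style target; a frame that minimises this loss carries a mixture of the styles. All function names are hypothetical, and random arrays stand in for VGG-16 features.

```python
import numpy as np


def gram_matrix(features):
    """Channel-correlation (Gram) matrix of a (C, H, W) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (c * h * w)


def blended_style_target(style_feature_maps, weights):
    """Convex combination of the Gram matrices of several style images."""
    weights = np.asarray(weights, dtype=np.float64)
    if weights.ndim != 1 or len(weights) != len(style_feature_maps):
        raise ValueError("need exactly one weight per style")
    if np.any(weights < 0) or not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights must be non-negative and sum to 1")
    grams = [gram_matrix(f) for f in style_feature_maps]
    return sum(w * g for w, g in zip(weights, grams))


def interpolated_style_loss(input_feats, style_feature_maps, weights):
    """Style loss against the blended target instead of a single style."""
    target = blended_style_target(style_feature_maps, weights)
    return float(np.mean((gram_matrix(input_feats) - target) ** 2))


rng = np.random.default_rng(0)
style_a = rng.standard_normal((16, 8, 8))
style_b = rng.standard_normal((16, 8, 8))
frame_feats = rng.standard_normal((16, 8, 8))
print(interpolated_style_loss(frame_feats, [style_a, style_b], [0.7, 0.3]))
```

Changing the weights moves the target smoothly from one style to the other, which is what allows two styles to be blended in a controlled way.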

For this, a convolutional neural network is used together with the pre-trained VGG-16 model.

Finally, the stylized cropped video and the original source video are combined frame by frame to produce the final stylized video, in which multiple styles are blended into a specific region of interest. This approach was therefore successful in implementing multiple style transfer to a video for a specific region of interest.
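The frame-by-frame recombination can be sketched as writing the stylized crop back into a copy of each original frame at the same offsets used for cropping. The function name and coordinates are hypothetical, and both videos are assumed to be `(T, H, W, C)` arrays with the same number of frames.

```python
import numpy as np


def paste_back(original, stylized_crop, top, left):
    """Write the stylised crop back into a copy of every original (T, H, W, C) frame."""
    t, height, width, _ = stylized_crop.shape
    if original.shape[0] != t:
        raise ValueError("videos must have the same number of frames")
    result = original.copy()  # leave the source video untouched
    result[:, top:top + height, left:left + width, :] = stylized_crop
    return result


original = np.zeros((3, 8, 8, 3))
crop = np.ones((3, 2, 4, 3))
out = paste_back(original, crop, top=1, left=2)
print(out[0, ..., 0])
```

This is a hard paste, so the border of the region of interest may be visible; a soft (feathered) alpha blend near the border is one possible refinement.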

Figure 4. Steps Followed for Second Approach

Figure 5. Approach 2 Steps

Figure 6 illustrates the results for the steps taken in approach 1. The frames of the video were extracted, cropped to required dimensions, styled and recombined to form the final stylized cropped video.

Figure 6. Approach 1 Results


Figure 7 shows the results obtained for each step taken in approach 2.

Figure 7. Approach 2 Results

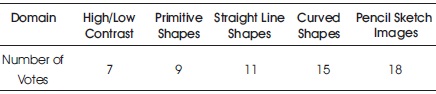

Table 1 shows the votes given by 60 independent people on how well they think the style has been transferred for different domains, such as primitive shapes, straight-line shapes, curved shapes and pencil sketch images. The results show that a majority voted for images with curved shapes and for pencil sketch images as the domains where the pre-trained VGG-16 model successfully transferred the style.

Table 1. Vote Feedback for Specific Domains

The results show that style transfer to video by blending multiple styles into a required region of interest can be performed successfully. Although this approach might not be the most suitable method for optimal results, it is a good starting point for style interpolation transfer to video for a masked region of interest. Further studies can develop more advanced techniques to improve on these results.

A pre-trained VGG-16 model is used in the transfer learning approach to transfer styles, and the biggest challenge is how to validate the model so that we can confirm that the stylized image is what we expect.

For this purpose, a couple of tests were carried out, and opinions from multiple randomly chosen people were collected to provide a more general validation. The tests carried out are as follows:

Style transfer using VGG-16 was tested for specific domains such as primitive shapes, high/low-contrast images, images containing straight-line shapes, images containing curved shapes and pencil sketch images. The results were shown to 60 independent people, who voted on which domains are more suitable for style transfer (Table 1).

The test results show that the VGG-16 model performed style transfer well for some domains, such as pencil sketches (Figure 8) and curved shapes, but not for others, such as low-contrast images.

Figure 8. Model Validation for Pencil Sketch

Therefore, choosing a pre-trained model suited to the domain of concern is important when performing style transfer with a transfer learning approach.

The main purpose of this research is to blend multiple styles into a specific region of interest in a video using style transfer. The results show that, although we achieved the desired goal, this might not be the most effective method for this purpose. Further studies can look at styling regions of interest of arbitrary shapes rather than only rectangular regions, and at performing multiple style transfer to video smoothly and efficiently. In addition, the validation tests show that when using a transfer learning approach, we must be very careful to choose a pre-trained model that suits our purpose.

The validation tests show that the VGG-16 model is suitable for some image domains and unsuitable for others. Other pre-trained models, such as Inception-V3, can be used depending on the domain of interest.