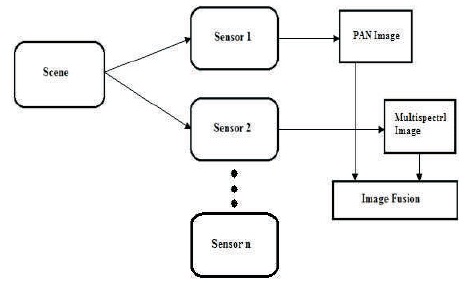

Figure 1. Block Diagram of Image Fusion

Image fusion is the process of combining two or more images of the same scene, captured by different sensors, into a single image that carries more detailed information than any individual input. It is used to improve image quality and is applied in image analysis tasks in fields such as medicine, remote sensing, and robotics. Commonly used fusion methods include Brovey, Multiplicative, and Principal Component Analysis (PCA).

Image fusion combines two or more images, obtained from one sensor or from multiple sensors, into a single image. Methods such as Brovey, Multiplicative, and Principal Component Analysis are used to combine the images. The resulting image is more informative than any single input and offers better visualization and accuracy than the input images. The images captured by the sensors may differ in viewpoint, resolution, and acquisition time. Image fusion is therefore categorized as multiview, multitemporal, multifocus, or multimodal.

Remote sensing is one of the applications of image fusion. Different image fusion methods are used in image analysis tasks such as image classification, change detection, and the study of natural hazards. Much research has been done on merging high resolution panchromatic images with lower resolution multispectral remote sensing data to obtain high resolution multispectral imagery while retaining the spectral characteristics of the multispectral data.

This literature review discusses various image fusion techniques and their importance in different applications. Singh and Gupta (2016), in “Improvement of classification accuracy using image fusion techniques”, explained image fusion methods used for enhancing satellite images. Sarup and Singhai (2011), in “Image fusion techniques for accurate classification of Remote Sensing data”, compared various fusion techniques and their accuracy. Al-Wassai et al. (2012), in “Spatial and spectral quality evaluation based on edges regions of satellite: Image Fusion”, evaluated the quality of fused images by describing the spatial and spectral quality metrics used to assess them.

Image fusion combines two or more images, obtained from one sensor or from multiple sensors, into a single image. A high resolution PAN image and a low resolution multispectral image can be fused using different image fusion methods; the block diagram for image fusion is shown in Figure 1.

Satellite imagery for fusion comes in two types, a panchromatic image (Figure 2) and a multispectral image (Figure 3):

Figure 2. Panchromatic Image (Singh & Gupta, 2016)

Figure 3. Multispectral Image (Singh & Gupta, 2016)

Different image fusion methods are used to combine the images, such as Brovey, Multiplicative, and Principal Component Analysis.

The Brovey fusion method is one of the methods used to combine two or more images captured by multiple sensors. It is used to improve the characteristics of an image. To obtain the fused image for each band of the multispectral (MS) image, the band is first normalized by dividing it by the sum of all MS bands and is then multiplied by the panchromatic (PAN) image (Ehlers et al., 2010; Pandit & Bhiwani, 2015; Suthakar et al., 2014). The mathematical representation of the Brovey fusion method is shown in equation (1).

FusedBand_i = (Band_i MS / (Band1MS + Band2MS + ... + BandnMS)) × PAN    (1)
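The Brovey step can be illustrated with a short NumPy sketch. It assumes the commonly cited form of the transform, in which each MS band is divided by the per-pixel sum of all MS bands and then multiplied by PAN, and it assumes the MS bands have already been resampled to the PAN pixel grid. The function name `brovey_fusion` and the `eps` guard against division by zero are illustrative choices, not taken from the cited papers.

```python
import numpy as np


def brovey_fusion(ms, pan, eps=1e-12):
    """Brovey-style fusion sketch (hypothetical helper, not from the cited papers).

    ms  : array of shape (bands, H, W), MS bands resampled to the PAN grid
    pan : array of shape (H, W), panchromatic band
    eps : small constant (assumption) to avoid division by zero
    """
    ms = np.asarray(ms, dtype=float)
    pan = np.asarray(pan, dtype=float)
    # Per-pixel sum over all multispectral bands.
    band_sum = ms.sum(axis=0)
    # Normalize each band by the band sum, then scale by PAN.
    # Broadcasting applies the (H, W) arrays to every band.
    return ms / (band_sum + eps) * pan
```

In this form the fused bands sum to the PAN value at every pixel, so the spatial detail comes from PAN while the band ratios of the MS image are preserved.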

Multiplicative fusion is the simplest method. To obtain the fused image for each band of the MS image, the MS band to be fused is multiplied by the PAN image (Pandit & Bhiwani, 2015).

FusedBand1 = Band1MS × PAN
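A minimal sketch of the multiplicative rule in NumPy follows. It assumes the MS bands are already resampled to the PAN pixel grid; the function name `multiplicative_fusion` is hypothetical.

```python
import numpy as np


def multiplicative_fusion(ms, pan):
    """Multiplicative fusion sketch (hypothetical helper).

    ms  : array of shape (bands, H, W), MS bands resampled to the PAN grid
    pan : array of shape (H, W), panchromatic band
    """
    ms = np.asarray(ms, dtype=float)
    pan = np.asarray(pan, dtype=float)
    # Pixel-wise product of every MS band with PAN;
    # broadcasting repeats the (H, W) PAN array across the band axis.
    return ms * pan
```

Because the product of two bands can exceed the original value range, the result would usually be rescaled before display; that step is omitted here.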

Principal Component Analysis (PCA) is useful in image processing for image enhancement, image compression, and image fusion. PCA is applied to the multispectral image bands: the covariance matrix of the bands is computed, and its eigenvalues and eigenvectors give the principal components. The first principal component is then replaced with the panchromatic image, and the inverse PCA transform is computed to return to the image domain (Sahu & Parsai, 2012; Wenbo et al., 2008; Rani & Sharma, 2013).
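The PCA steps above can be sketched in NumPy as follows. This is an illustration under stated assumptions rather than the procedure of the cited papers: the MS bands are assumed to be resampled to the PAN grid, at least two bands are assumed, and two details not described in the text are added, namely matching PAN to the mean and standard deviation of the first principal component before substitution, and flipping the sign of the first eigenvector when it is negatively correlated with PAN. The function name `pca_fusion` is hypothetical.

```python
import numpy as np


def pca_fusion(ms, pan):
    """PCA-based fusion sketch (hypothetical helper, needs >= 2 MS bands).

    ms  : array of shape (bands, H, W), MS bands resampled to the PAN grid
    pan : array of shape (H, W), panchromatic band
    """
    ms = np.asarray(ms, dtype=float)
    pan = np.asarray(pan, dtype=float)
    bands, h, w = ms.shape

    # Treat each band as a variable and each pixel as an observation.
    X = ms.reshape(bands, -1)
    mean = X.mean(axis=1, keepdims=True)
    Xc = X - mean

    # Covariance matrix of the bands, then its eigen-decomposition.
    cov = np.cov(Xc)
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]      # largest variance first
    eigvecs = eigvecs[:, order]

    # Forward PCA transform: rows of pcs are the principal components.
    pcs = eigvecs.T @ Xc

    p = pan.reshape(-1)
    # Assumption: eigenvector signs are arbitrary, so align PC1 with PAN.
    if np.dot(pcs[0], p - p.mean()) < 0:
        eigvecs[:, 0] *= -1
        pcs[0] *= -1

    # Assumption: match PAN to the mean and spread of PC1 before substitution.
    p_std = p.std()
    if p_std > 0:
        p = (p - p.mean()) / p_std * pcs[0].std() + pcs[0].mean()

    # Replace the first principal component with the adjusted PAN band.
    pcs[0] = p

    # Inverse PCA transform back to the image domain.
    fused = eigvecs @ pcs + mean
    return fused.reshape(bands, h, w)
```

The statistics matching is a common precaution because PAN and the first principal component generally have different value ranges; without it the inverse transform can distort the band values.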

In image fusion, the fused image combines images captured by different sensors, or by the same sensor at different times, viewing angles, or focal lengths. Hence, image fusion is divided into multimodal, multitemporal, multiview, and multifocus fusion.

In multimodal image fusion, images of the monitored area are captured by different sensors, such as a multispectral sensor and a panchromatic sensor.

For example, the panchromatic image is taken from the IRS-P5 satellite, also called Cartosat-1, which provides a single-band panchromatic image with a spatial resolution of 2.5 m, as shown in Figure 4. The multispectral image is taken from the IRS-P6 satellite, also called Resourcesat-1, whose on-board LISS-III sensor provides an image with a spatial resolution of 23.5 m, as shown in Figure 5. Both images cover Khedla village, part of Pilani town in Jhunjhunu district, Rajasthan State, India (Singh & Gupta, 2016). The Brovey, Multiplicative, and Principal Component Analysis fusion methods are applied to fuse the PAN and multispectral images, as shown in Figure 6 through Figure 8 (Singh & Gupta, 2016).

Figure 4. PAN Image with 2.5 m Resolution (Singh & Gupta, 2016)

Figure 5. LISS-III Image with 23.5 m Resolution (Singh & Gupta, 2016)

Figure 6. Brovey Image Fusion (Singh & Gupta, 2016)

Figure 7. Multiplicative Fusion (Singh & Gupta, 2016)

Figure 8. PCA Image Fusion (Singh & Gupta, 2016)

In multitemporal image fusion, images of the monitored area are captured by a sensor at different times. This fusion type is used to detect changes in a particular area.

For example, the multispectral image is taken from MODIS data (spatial resolution: 250 m) acquired in 2006, as shown in Figure 9, and the panchromatic image, with a spatial resolution of 10 m, is taken from Landsat ETM+ data acquired in 2000, as shown in Figure 10. Both images cover Chongqing City, Southwest China (Dong et al., 2011). To obtain more information, the Brovey fusion method is used to fuse the PAN and multispectral images, as shown in Figure 11.

Figure 9. MODIS Image, 2006 (Dong et al., 2011)

Figure 10. ETM Image, 2000 (Dong et al., 2011)

Figure 11. Brovey Image Fusion Result of Multiple Sources Images of Chongqing City (Dong et al., 2011)

In multiview image fusion, images of the monitored area are captured by a sensor from different angles.

In multifocus image fusion, images of the monitored area are captured by a sensor with different focal lengths.

Different image fusion methods, namely Brovey, Multiplicative, and Principal Component Analysis (PCA), were studied and compared for extracting more information from images in remote sensing applications. Different types of image fusion, such as multimodal, multifocus, and multitemporal fusion, were also studied. These types can be used to fuse images in order to improve the classification of surface areas such as vegetation, agricultural land, and empty plots. The results may vary for different images and for different applications, such as image classification, change detection, and the study of natural hazards.