Table 1. Factors Relating to Computerised and Traditional Assessment, Including the Questionnaire's Questions *

The study examined the advantages and disadvantages of computerised assessment compared with traditional evaluation. It was based on two samples of college students (n=54) who took computerised tests instead of paper-based exams. Students were asked to answer a questionnaire focusing on test effectiveness, experience, flexibility and integrity. For each characteristic, respondents were asked to rate both kinds of evaluation (computerised and traditional). Students were also asked to evaluate computerised exams taken at home and in the classroom.

The research reveals a significant advantage of computerised assessment over paper-based evaluation. The greatest advantage of computer-assisted assessment found in the research is test flexibility. The findings indicate that it is worthwhile to adopt computerised assessment technologies in higher education, including home exams. Such a new method of evaluation is likely to improve institutional educational administration significantly.

The Department of Management at the Neri Bloomfield School of Design and Education prepares students to teach management and accounting in high schools. The department's pedagogical aim is to provide students with relevant tools so that they can deal effectively with the needs of high schools. The department works at different levels, including theoretical and technological knowledge.

In the 2009-10 academic year, a new Computer Assisted Assessment (CAA) system was used for the first time. The system, which is part of the existing LMS (Learning Management System), was intended to replace traditional assessment. The first trial of the new system was undertaken in the Department of Management and included the following courses:

In 2010-11, the new system was examined again in the same courses, except for "scientific and technological literacy", which was replaced by "management of technology" (third year).

To examine the effectiveness of the computerised tests, a research question was formulated focusing on the advantages and disadvantages of computer-assisted assessment compared with traditional evaluation. The intention was to draw general conclusions about the differences between computerised and paper-based exams, based on the attitudes of students in a teacher-training college.

Assessment is a critical catalyst for student learning (Brown, Bull & Pendlebury, 1997), and there is considerable pressure on higher-education institutions to measure learning outcomes more formally (Farrer, 2002; Laurillard, 2002). This has been interpreted as a demand for more frequent assessment. The potential of Information and Communications Technology (ICT) to automate aspects of learning and teaching is widely acknowledged, although the promised productivity benefits have been slow to appear (Conole, 2004; Conole & Dyke, 2004). Computer Assisted Assessment (CAA) has considerable potential both to ease the assessment load and to provide innovative and powerful modes of assessment (Brown et al., 1997; Bull & McKenna, 2004), and as the use of ICT increases there may be 'inherent difficulties in teaching and learning online and assessing on paper' (Bull, 2001; Bennett, 2002a).

CAA is a common term for the use of computers in the assessment of student learning. The term encompasses the use of computers to deliver, mark and analyse assignments or examinations. It also includes the collation and analysis of optically captured data gathered from machines such as Optical Mark Readers (OMR). Another term is 'Computer Based Assessment' (CBA), which refers to an assessment in which the questions or tasks are delivered to a student via a computer terminal. Other terms used to describe CAA activities include computer based testing, computerised assessment, computer aided assessment and web based assessment. The term screen based assessment encompasses both web based and computer based assessment (Bull & McKenna, 2004).

The most common format for items delivered by CAA is objective test questions (such as multiple-choice or true/false), which require a student to choose or provide a response to a question whose correct answer is predetermined. However, other types of questions can also be used with CAA.
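Because the correct answer to an objective item is fixed in advance, marking reduces to comparing each response with a key. The sketch below illustrates this under stated assumptions: one mark per item, case-insensitive matching and unanswered items scored as wrong. `ObjectiveItem` and `mark_script` are hypothetical names, not part of Moodle or any other CAA system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectiveItem:
    """One objective test item with a predetermined correct answer."""
    item_id: str
    correct: str  # e.g. "B" for a multiple-choice item, "T" for true/false


def mark_script(items: list[ObjectiveItem], responses: dict[str, str]) -> tuple[int, dict[str, bool]]:
    """Mark one student's responses against the answer key.

    Unanswered items count as incorrect. Returns the total score
    (one mark per correct item) and a per-item correctness map.
    """
    per_item = {}
    for item in items:
        given = responses.get(item.item_id)
        per_item[item.item_id] = given is not None and given.strip().upper() == item.correct.upper()
    return sum(per_item.values()), per_item


key = [ObjectiveItem("q1", "B"), ObjectiveItem("q2", "T"), ObjectiveItem("q3", "D")]
score, detail = mark_script(key, {"q1": "b", "q2": "F"})
print(score, detail)  # 1 {'q1': True, 'q2': False, 'q3': False}
```

The per-item map is kept alongside the total because item-level results are what any later statistical feedback would be built on.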

CAA can also give academic staff rapid feedback about their students' performance. Automatically marked assessments can offer immediate, evaluative statistical analysis, allowing academics to judge quickly whether their students have understood the material being taught, at both individual and group level. If students have misconceptions about a particular theory or concept, or gaps in their knowledge, these can be identified and addressed before the end of the course or module.
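One simple form of the group-level feedback described above is the facility index: the proportion of students who answered each item correctly. The sketch assumes per-item correctness results are already available for every student; the function names and the 0.4 flagging threshold are hypothetical choices for illustration, not values used in this study.

```python
def item_facility(results: list[dict[str, bool]]) -> dict[str, float]:
    """Facility index per item: proportion of students answering it correctly.

    `results` holds one dict per student, mapping item id -> correct?;
    every student is assumed to have a result for every item.
    """
    if not results:
        return {}
    n = len(results)
    return {item: sum(r[item] for r in results) / n for item in results[0]}


def flag_difficult_items(facility: dict[str, float], threshold: float = 0.4) -> list[str]:
    """Items answered correctly by fewer than `threshold` of the group.

    Such items may point to a shared misconception worth revisiting
    before the end of the course (the threshold is an illustrative assumption).
    """
    return sorted(item for item, p in facility.items() if p < threshold)


results = [
    {"q1": True, "q2": False},
    {"q1": True, "q2": False},
    {"q1": False, "q2": True},
    {"q1": True, "q2": False},
]
facility = item_facility(results)
print(facility)                        # {'q1': 0.75, 'q2': 0.25}
print(flag_difficult_items(facility))  # ['q2']
```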

The format of an assessment affects validity, reliability and student performance, and paper and online assessments may differ in several respects. Studies have compared paper-based assessments with computer-based assessments to explore this (Ward, Frederiksen & Carlson, 1980; Outtz, 1998; Fiddes, Korabinski, McGuire, Youngson & McMillan, 2002). In particular, the Pass-IT project has conducted a large-scale study of schools and colleges in Scotland, across a range of subject areas and levels (Ashton, Schofield & Woodgar, 2003; Ashton, Beavers, Schofield & Youngson, 2004). Findings vary according to item type, subject area and level. Potential causes of mode effects include the attributes of the examinees, the nature of the items, item ordering, local item dependency and the student's test-taking experience. Additionally, there may be cognitive differences and different test-taking strategies adopted for each mode. Understanding these issues is important for developing item-writing strategies, for producing guidelines for appropriate administrative procedures, and for statistically adjusting item parameters.

In contrast to marking essays, marking objective test scripts is a simple repetitive task, and researchers are exploring methods of automating assessment. Objective testing is now well established in the United States and elsewhere for standardized testing in schools, colleges, professional entrance examinations and for psychological testing (Bennett, 2002b; Hambrick, 2002).

The limitations of item types are an ongoing issue. A major concern related to the nature of objective tests is whether Multiple-Choice Questions (MCQs) are really suitable for assessing higher-order learning outcomes in higher-education students (Pritchett, 1999; Davies, 2002), and this is reflected in the opinions of both academics and quality assurance staff (Bull, 1999; Warburton & Conole, 2003). The most optimistic view is that item-based testing may be appropriate for examining the full range of learning outcomes in undergraduates and postgraduates, provided sufficient care is taken in the construction of the items (Farthing & McPhee, 1999; Duke-Williams & King, 2001). MCQs and multiple response questions are still the most frequently used question types (Boyle, Hutchison, O'Hare & Patterson, 2002; Warburton & Conole, 2003), but there is steady pressure towards the use of 'more sophisticated' question types (Davies, 2001). Work is also being conducted on the development of computer-generated items (Mills, Potenza, Fremer & Ward, 2002). This includes the development of item templates precise enough to enable the computer to generate parallel items that do not need to be individually calibrated. Research suggests that some subject areas are easier to replicate than others: lower-level mathematics, for example, in comparison with higher-level content domains.
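The idea of an item template can be illustrated with lower-level mathematics, where parallel items are easier to produce. This is a hypothetical sketch: the template, parameter ranges and distractors are illustrative assumptions, not the approach of any cited study, and in practice generated forms would still need checking for comparable difficulty.

```python
import random


def generate_parallel_item(rng: random.Random) -> dict:
    """Instantiate a hypothetical template 'What is a x b + c?'.

    Every instance tests the same skill (order of operations), and the key is
    computed from the parameters, so no answer is written by hand.
    Distractors model plausible slips; duplicates are removed, so the
    number of options can vary between instances.
    """
    a, b, c = rng.randint(2, 9), rng.randint(2, 9), rng.randint(1, 20)
    correct = a * b + c
    distractors = {
        a * (b + c),  # adds before multiplying
        a + b + c,    # treats 'x' as '+'
        correct + a,  # arithmetic slip
    }
    distractors.discard(correct)
    return {
        "stem": f"What is {a} x {b} + {c}?",
        "options": sorted(distractors | {correct}),
        "answer": correct,
    }


rng = random.Random(2011)  # fixed seed so the same parallel form can be regenerated
for _ in range(3):
    print(generate_parallel_item(rng))
```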

CAA is not an entirely new approach. Over the last decade, it has developed rapidly in terms of its integration into schools, universities and other institutions. Its educational and technical sophistication allows it to offer elements, such as simulations and multimedia-based questions, that are not feasible with paper-based assessments (Bull & McKenna, 2004).

With increasing student numbers and decreasing resources, objective tests may offer a valuable addition to the assessment methods available to lecturers.

Possible advantages of using CAA include the following:

The research questions were derived from the need to examine the advantages and disadvantages of computerised assessment compared with traditional evaluation (paper-based exams) in higher education. A further aim was to examine whether there are differences between home and classroom computerised exams.

The following research questions were formulated with regard to a teacher-training college:

Population: The study population comprised all students at the Neri Bloomfield School of Design and Education.

Samples: Two samples were included, comprising 54 students overall: 33 in 2010 and 21 in 2011. Third- and fourth-year students were examined with Moodle computerised tests throughout the year. At the end of the first semester of each academic year, they were asked to answer a questionnaire about their perceptions of computerised versus traditional exams.

The computerised exams, in which open material was allowed, covered the following courses:

Each computerised exam included 25 multiple-choice questions with four or five answer options each, except for SPSS, which included different types of questions (multiple choice, calculated number and matching lists). Students were allowed to use any support material, and they had to finish the computerised exam within a fixed time limit (110 minutes). When the time was up, the exam was submitted automatically, with no option to start over.
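The timing rule described above (a fixed 110-minute limit, automatic submission and no restart) can be modelled in a few lines. This is a hypothetical sketch assuming the deadline is measured from the moment an attempt starts; `TimedAttempt` is an illustrative name and does not reflect how Moodle implements its quiz timer.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class TimedAttempt:
    """Hypothetical model of one timed exam attempt (not Moodle's implementation)."""
    started_at: datetime
    time_limit: timedelta = timedelta(minutes=110)
    answers: dict[str, str] = field(default_factory=dict)
    submitted: bool = False

    @property
    def deadline(self) -> datetime:
        return self.started_at + self.time_limit

    def enforce_deadline(self, now: datetime) -> bool:
        """Submit automatically once the deadline is reached; return whether submitted."""
        if not self.submitted and now >= self.deadline:
            self.submitted = True
        return self.submitted

    def answer(self, item_id: str, choice: str, now: datetime) -> None:
        """Record or change an answer while time remains; refuse once submitted."""
        if self.enforce_deadline(now):
            raise RuntimeError("attempt already submitted and cannot be restarted")
        self.answers[item_id] = choice


start = datetime(2011, 1, 10, 9, 0)
attempt = TimedAttempt(started_at=start)
attempt.answer("q1", "B", now=start + timedelta(minutes=30))
print(attempt.enforce_deadline(start + timedelta(minutes=110)))  # True
```

Checking the deadline on every interaction, rather than relying only on a client-side countdown, means a late answer is rejected even if the browser timer fails.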

The questionnaires were anonymous, and the response rate was 90% (54 out of 60).

The traditional exams related to other courses held in 2010/2011 (research methods, marketing, accounting, sociology, economics, psychology, management and organizational behaviour).

To examine the effectiveness of computerised evaluation of learners compared with traditional assessment, a questionnaire including 48 closed questions was prepared: 24 items relating to computerised assessment and 24 equivalent items relating to traditional assessment. The questionnaire was given to all students who had taken at least one computerised test. Most students had taken two tests, and some had taken three or even four.

For each question, respondents were asked to indicate their views on the following five-point Likert scale:

The questionnaire was based on the literature review, in order to identify the main variables relating to CAA. The review identified the following areas as principal characteristics of CAA:

In addition to the closed questions, the questionnaire included two open-ended questions, designed to complement the main data gathered by the principal, quantitative part of the questionnaire, as follows:

To examine the questionnaire's internal consistency, the reliability of each factor was calculated (Cronbach's alpha). Item analysis was also undertaken in order to improve reliability. Based on the reliability found, the following 12 factors were constructed (2010 and 2011 together):

Reliability was reasonably high for every factor (Cronbach's alpha ranging from 0.649 to 0.891). Each factor score was calculated as the mean of the items composing it.
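The two computations described above, Cronbach's alpha for a factor and each respondent's factor score as the mean of its items, can be sketched as follows. The sketch assumes complete responses (no missing items) coded on the five-point scale; the function names and sample data are illustrative, and in practice a statistics package would normally be used. The formula is

alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores),

where k is the number of items in the factor.

```python
from statistics import mean, variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for one factor.

    `scores` holds one row per respondent and one column per item.
    Sample variances are used for both terms, so their ratio is unaffected
    by the choice of sample versus population variance.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def factor_scores(scores: list[list[float]]) -> list[float]:
    """Each respondent's factor score: the mean of the items composing the factor."""
    return [mean(row) for row in scores]


# Invented responses: four respondents, three items of one factor.
rows = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3]]
print(round(cronbach_alpha(rows), 3))
print(factor_scores(rows))
```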

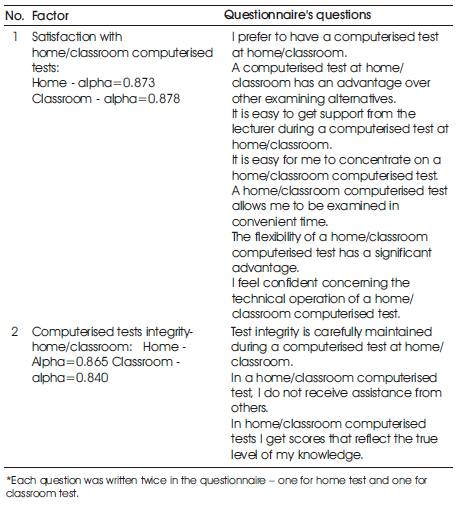

Table 1 summarizes the eight factors (four for CAA and four for traditional assessment), the items composing them and reliability values.

Table 2 summarizes the other four factors (student satisfaction with computerised tests undertaken at home and in the classroom and test integrity relating to both places), the items composing them and reliability values.

The following statistical tests were undertaken (α ≤ 0.05):


Table 2. Factors Relating to Student Satisfaction with Computerised Tests Undertaken at Home and in the Classroom and Test Integrity, Including the Questionnaire's Questions*

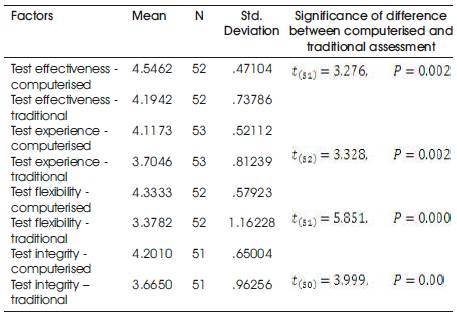

There was no significant difference between 2010 and 2011 in the mean scores of any of the questions or factors relating to either CAA or traditional assessment (ANOVA, α ≤ 0.05). The results of the first year (2010) were thus replicated in the second year (2011), which strengthens the findings. Mean factor scores for both years together are presented in Table 3.

Table 3 shows that, for all four factors, CAA has a significant advantage over traditional assessment.

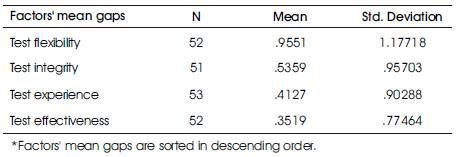

Table 4 presents the gaps for all pairs introduced in Table 3. A comparison of these gaps shows a significant difference between the test flexibility gap (gap=1.17718) and each of the other three gaps (t(50)=-3.777, p<0.01; t(51)=-3.444, p<0.01; t(49)=2.666, p<0.01). By contrast, there is no significant difference among the gaps for the other three factors. These findings mean that computerised tests have a significant advantage over traditional ones on each of the four factors. Furthermore, the advantage of CAA in test flexibility is significantly greater than its advantage on the other three factors.
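The gap analysis described above can be sketched as follows, assuming each student's factor scores are available for both modes. A gap is computed per student, and two gaps are then compared with a paired-samples t-test (presumably the test behind the reported values, since each student contributes both gaps; with df = n - 1, t(50) corresponds to 51 students). The function names and the scores in the usage example are invented for illustration; p-values could be obtained with a routine such as `scipy.stats.ttest_rel`.

```python
from math import sqrt
from statistics import mean, stdev


def gaps(caa: list[float], traditional: list[float]) -> list[float]:
    """Per-student gap for one factor: computerised score minus traditional score."""
    if len(caa) != len(traditional):
        raise ValueError("scores must be paired by student")
    return [c - t for c, t in zip(caa, traditional)]


def paired_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic for mean(x - y) and its degrees of freedom (n - 1)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs))), len(diffs) - 1


# Invented factor scores for four students (not data from this study).
flexibility_gap = gaps([4.5, 4.0, 5.0, 4.5], [3.0, 3.0, 3.5, 3.0])
effectiveness_gap = gaps([4.0, 3.5, 4.0, 4.0], [3.5, 3.0, 3.5, 3.0])
t, df = paired_t(flexibility_gap, effectiveness_gap)
print(round(t, 3), df)
```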

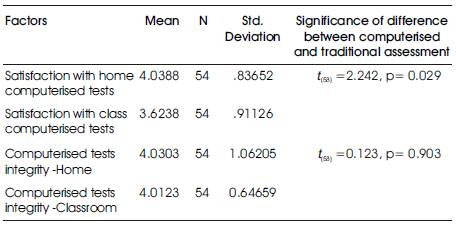

Table 5 presents a comparison between home and classroom computerised tests with regard to satisfaction with the tests and the perceived level of integrity. Satisfaction was significantly higher for home tests than for classroom tests (both computerised). With regard to test integrity, however, there was no significant difference. Therefore, with regard to test integrity, students did not perceive home tests as inferior to classroom exams.

The answers to the open-ended questions supported the results of the closed questions, as the following quotes show:

"The computerised test has no weaknesses: all the questions are clear, accurate and understood. I have no complaints whatsoever."

"I enjoyed the computerised tests and, in my opinion, they are definitely preferable to paper-based exams. A computerised test is much more convenient and interesting. In my view, computerised exams have only advantages."

The results summarized in Tables 3-5, the answers to the open-ended questions and the statistical significance tests formed the basis for answering the research questions, as detailed in the next sections.

Table 3. A Comparison Between Computerised and Traditional Assessment

Table 4. Factors' Gaps: Computerised Test Mean Scores Minus Traditional Test Mean Scores *

Table 5. A Comparison Between Home and Classroom Computerised Tests

The research questions were as follows:

i. What are the advantages and disadvantages of CAA compared with traditional assessment methods, according to students' views?

The results show that, in students' view, computerised assessment has a significant advantage over traditional assessment on the following factors examined:

ii. Are there advantages or disadvantages to computerised exams taken at home compared with classroom tests, according to students' views?

The results show that, in students' view, home computerised exams had no disadvantage compared with classroom exams. Two factors were examined:

The literature review points out many advantages of CAA over traditional assessment. These benefits are mainly organisational and managerial. With computerised assessment, it is much easier to prepare, deliver and mark tests, so a large amount of material can be covered while the burden on faculty and administrative staff is reduced. Whether it is worthwhile to adopt a new computerised system of evaluation depends on the system's reliability and on lecturers' ability to acquire the necessary technological knowledge.

Assuming that computerised assessment has a significant advantage for institutions of higher education, another critical question arises: whether, in the view of the "customers", namely the students, computerised assessment is appropriate, or at least causes no more difficulties than conventional assessment. It was therefore necessary to examine the properties of the two methods of assessment from the students' perspective, in order to learn whether computerised assessment is inferior or, on the contrary, superior.

Since the organisational and administrative advantages are clear, showing that computerised assessment has no disadvantage for examinees would be enough to make the new technology worth adopting. The study shows not only that computerised assessment has no disadvantage on any of the criteria examined, but also that, from the students' perspective, it offers them significant advantages. The most prominent of these is the better service to students made possible by the great flexibility of the computerised system. If so, adopting computerised assessment technology becomes considerably more worthwhile, and it might make a substantial contribution to the educational administration process.

Another important conclusion of the research is that it is feasible to move computerised tests that allow the use of open material from the academic institution to the students' homes. This method has distinct organisational and managerial advantages, but it also has an advantage from the students' perspective: it gives students great flexibility in choosing the test date and spares them the need to travel to the institution. According to the research results, moving to home computerised exams involved neither disadvantages nor problems of test integrity, as perceived by the students.