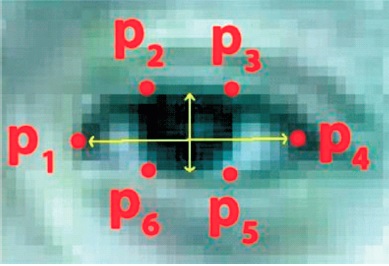

Figure 1. The 6 Facial Landmarks Associated with the Eye

According to the research, approximately 5.4 million people suffer from paralysis. The prototype focuses on a unique system that helps people with LIS paralysis communicate with others in an easy manner. Because these patients cannot move their body parts, the system relies on eye blinks or eye movement. A camera module captures the image, and the detected command is converted to text, which is displayed on an LCD, and to a voice signal. The proposed system is coordinated by a Raspberry Pi.

Individuals with motor paralysis such as Locked-in Syndrome (LIS) have difficulty conveying their intentions, because the motor neurons that control voluntary muscles are affected. There has not been any significant medical advance in treating this type of disability. Although physical exercise and proper medication can benefit the patient in many cases, these are very lengthy processes and the success rate is negligible. The consequence is lifelong physical disability. The only control these patients retain is over their eyes. Therefore, we decided to work on an automation technology that can be controlled easily using the eyes, so that it can be used with minimal effort to communicate with others. Our project is aimed mainly at paralyzed people and develops an eye blink detection system to be used for communication. The sole purpose of our work is to provide a sustainable and effective solution for people with physical disabilities.

Mukherjee and Chatterjee (2015) proposed converting eye blinks to Morse code, which is universally accepted. An IR sensor module records the eye-blinking pattern and feeds it to an Arduino Uno board, which converts the pattern into letters and displays them on the LCD.

Martinez et al. (2017) developed a fatigue detection system based on visual monitoring of drivers. With the help of a camera, the device monitors the driver's eye-blinking pattern, yawning, head posture and hand gestures. If the driver shows symptoms of drowsiness, an alarm alerts the driver.

Zhang et al. (2018) presented a Human Machine Interface (HMI) that lets patients with severe Spinal Cord Injury (SCI) control their smart home. The proposed HMI allows users to control or interact with the smart home environment by blinking their eyes. It is therefore promising for assisting SCI patients in their day-to-day life (Li et al., 2015; Zhang et al., 2017).

Cecotti (2016) introduced the concept of a gaze-controlled virtual keyboard. Zhang et al. (2015) introduced a technology based on a brain-computer interface and automatic navigation, which is used to control a smart wheelchair.

Singh et al. (2015) published a paper on object detection using the Haar Cascade Classifier. It is widely applied in many applications and devices and acts as an interface between human and computer (Meng & Zhao, 2017).

Krafka (2016) suggested the use of a webcam for eye tracking. The webcam is one of the most promising sensors in computer vision, and it is also preferred because of its easy accessibility and low cost. Meng & Zhao (2017) proposed an eye movement analysis model based on the detection of five eye feature points rather than only one.

The entire system is developed so that physically disabled people can interact with others with less effort. The system has two major parts: the camera module and the electrical peripheral control unit.

Our blink detection system is divided into four major parts. In the first part, we discuss the eye aspect ratio and how it can be used to determine whether a person is blinking in a given video frame. In the second part, we write Python, OpenCV, and dlib code to do two things: perform facial landmark detection (Figure 1) and detect blinks in video streams.


Based on this implementation, we apply our method for detecting blinks. Finally, we use the detected eye blinks to trigger commands.

For blink detection, the only facial structures we need are the two eyes.

Each eye is represented by six (x, y) coordinates, starting at the left corner of the eye and then working clockwise around the remainder of the region.

There is a relation between the width and the height of these coordinates.

Building on the work on real-time eye blink detection using facial landmarks, we can derive an equation that reflects this relation, called the Eye Aspect Ratio (EAR):

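$$
\mathrm{EAR} = \frac{\lVert P_2 - P_6 \rVert + \lVert P_3 - P_5 \rVert}{2\,\lVert P_1 - P_4 \rVert}
$$

where $P_1, P_2, \ldots, P_6$ are the facial landmark locations.

The numerator sums the distances between the two pairs of vertical eye landmarks, while the denominator is the distance between the horizontal eye landmarks, weighted by 2 because there is only one horizontal pair.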
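The EAR calculation can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the prototype. It assumes the commonly used form of the EAR, in which the sum of the two vertical distances is divided by twice the horizontal distance between P1 and P4. The function name `eye_aspect_ratio` and the sample coordinates are hypothetical.

```python
import math


def eye_aspect_ratio(eye):
    """Return the EAR for six (x, y) points ordered P1..P6.

    P1 and P4 are the eye corners; (P2, P6) and (P3, P5) are the
    two vertical landmark pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical_a = math.dist(p2, p6)   # ||P2 - P6||
    vertical_b = math.dist(p3, p5)   # ||P3 - P5||
    horizontal = math.dist(p1, p4)   # ||P1 - P4||
    return (vertical_a + vertical_b) / (2.0 * horizontal)


# Hypothetical image coordinates for an open and a nearly closed eye.
open_eye = [(0, 3), (2, 1), (4, 1), (6, 3), (4, 5), (2, 5)]
closed_eye = [(0, 3), (2, 2.8), (4, 2.8), (6, 3), (4, 3.2), (2, 3.2)]

ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)
```

In this example the open eye gives a noticeably larger ratio than the nearly closed eye, which is the property the blink detector relies on.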

We pass the path of the shape predictor file, which contains a pre-trained facial landmark detector.

When the device is switched ON, the patient's eyes are continuously monitored and the video is processed by the Raspberry Pi. Once the eye region is extracted, blinks can be registered using the EAR (Eye Aspect Ratio).
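The landmark step could look roughly as follows. This sketch assumes that the pre-trained model is dlib's 68-point landmark predictor, in which indices 36 to 41 and 42 to 47 describe the two eyes. The text does not name the model file, so `predictor_path` is left as a parameter, and the helper names `load_models`, `detect_landmarks` and `eye_points` are hypothetical.

```python
# Eye index ranges in the 68-point landmark layout (assumption: the
# pre-trained predictor follows this layout).
RIGHT_EYE = range(36, 42)
LEFT_EYE = range(42, 48)


def load_models(predictor_path):
    """Create the face detector and landmark predictor once at start-up."""
    import dlib  # imported here so the helpers below work without dlib
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)
    return detector, predictor


def detect_landmarks(gray_frame, detector, predictor):
    """Return a list of (x, y) landmarks for the first face, or None."""
    faces = detector(gray_frame, 0)
    if len(faces) == 0:
        return None
    shape = predictor(gray_frame, faces[0])
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]


def eye_points(landmarks, indices):
    """Select the six landmarks that belong to one eye."""
    return [landmarks[i] for i in indices]


# Stand-in landmark list so the selection step can be tried without a camera.
fake_landmarks = [(i, i) for i in range(68)]
left_eye = eye_points(fake_landmarks, LEFT_EYE)
```

Loading the detector and predictor once, rather than per frame, matters on a Raspberry Pi, where reading the model file is slow compared with processing a single frame.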

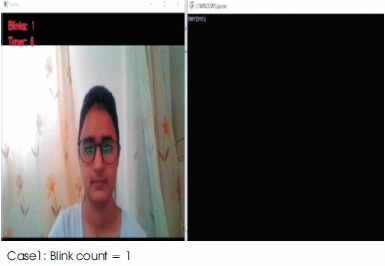

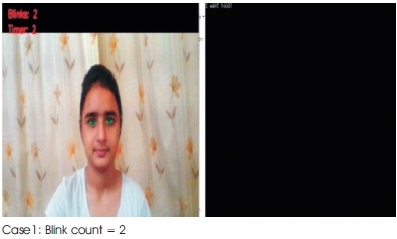

Each time the EAR value falls below a certain threshold, the system detects a blink. By default, the threshold value we have taken lies between 0 and 1. Eye blinking is an involuntary action of the human body, so we need to define a pattern of blinks in order to give them a meaning that helps the user with the respective task. When the EAR remains below the threshold for 4 seconds, the system makes a very short beep, which signals the user to open his/her eyes. If the user keeps the eyes closed for even longer, the system interprets it as resting or sleeping. Otherwise, the system plays a 100 ms long beep to indicate that the BLINK ACTION mode is ready to start.

From this point, the user can blink his/her eyes the required number of times for the desired action. The system beeps to indicate that each blink has been registered. For ten seconds after the action mode starts, the system records all the blinks. When the ten-second counter finishes, the system interprets the blink command: it checks how many times the user blinked and, depending on that, identifies which action has to be performed.

For verification purposes, if the user blinks once, the "EMERGENCY" command is displayed on the LCD, as shown in Figure 2, and the buzzer beeps continuously with a delay of 100 ms. If the user blinks twice, the command "I WANT FOOD" is displayed on the LCD, as shown in Figure 3, and if the user blinks thrice, the command "I WANT WATER" is displayed on the LCD, as shown in Figure 4.
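Counting blinks from a sequence of per-frame EAR values can be sketched as below. The threshold of 0.25 and the requirement of at least two consecutive closed frames are assumptions chosen for illustration; the text only states that a threshold between 0 and 1 is used. The function name `count_blinks` is hypothetical.

```python
def count_blinks(ear_values, threshold=0.25, min_frames=2):
    """Count completed blinks in a sequence of per-frame EAR values.

    A blink is a run of at least `min_frames` consecutive frames with
    EAR below `threshold`, followed by a frame in which the eye is
    open again. Both parameter values are illustrative assumptions.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_values:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    return blinks


# Hypothetical EAR trace: two blinks and one single-frame dip that is ignored.
trace = [0.30, 0.30, 0.10, 0.10, 0.30, 0.10, 0.30, 0.10, 0.10, 0.10, 0.30]
n_blinks = count_blinks(trace)
```

Requiring more than one closed frame is one way to filter out single-frame landmark jitter, at the cost of missing extremely fast blinks at low frame rates.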

Figure 2. An Emergency Note on the LCD

Figure 3. A Food Note on the LCD

Figure 4. A Water Note on the LCD

The output window for others is the LCD, on which the text is displayed. Therefore, a person who was not in the room when the patient blinked can still see what the patient wants.

We described a prototype system that prints text on an LCD display and speaks it aloud, so that others on the same floor can read or hear the patient's request. We quantitatively demonstrated that regression-based facial landmark detectors are precise enough to reliably estimate the level of eye openness. We used the EAR method, which involves a very simple calculation based on the ratio of the distances between the facial landmarks of the eyes. The system takes around four seconds to get ready to take data; it then displays the operation on the LCD and the speaker announces it. It can run 24/7, so that the patient can ask for help at any time. In our tests, the system detected eye blinks and showed the corresponding status as expected. Finally, the message shown on the LCD serves as confirmation.
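The mapping from blink count to LCD message can be sketched as a simple lookup. The entries for two and three blinks come from the description above; treating a single blink as the EMERGENCY trigger is our reading of that description. The names `COMMANDS` and `interpret_blinks` are hypothetical, and the LCD and buzzer drivers are left out.

```python
# Blink count -> message shown on the LCD.
COMMANDS = {
    1: "EMERGENCY",
    2: "I WANT FOOD",
    3: "I WANT WATER",
}


def interpret_blinks(blink_count):
    """Return the message for a blink count, or None if it is not mapped."""
    return COMMANDS.get(blink_count)


message = interpret_blinks(2)
```

Returning `None` for unmapped counts lets the caller decide whether to ignore the input or ask the user to repeat it.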

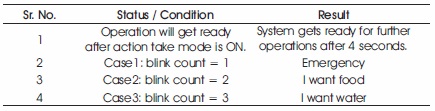

When the eyes are closed, the reflection value is lower than when the eyelids are open. Therefore, we can easily identify when the user closes the eyes for a specific interval, and we can also detect eye blinks. Because blinking is a natural process of the human body, we have specified a pattern to activate the system. Whenever the user closes the eyes for 4 seconds, the system identifies it as the start signal and gets ready to take an action. Otherwise, it treats the eye blink as usual unintentional human behavior and does nothing. Table 1 shows the result analysis of the entire system.

Table 1. Result Analysis
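The activation pattern described above can be sketched as a classification of how long the eyes stay closed. The 4-second activation time comes from the text. The 6-second limit after which a closure is treated as resting, the EAR threshold of 0.25, and the names `first_closure_duration` and `classify_closure` are assumptions made for illustration.

```python
def first_closure_duration(samples, threshold=0.25):
    """Return the length in seconds of the first completed eye closure.

    `samples` is a list of (timestamp_s, ear) pairs. A closure starts
    when EAR drops below `threshold` and ends at the first later sample
    where the eye is open again. Returns 0.0 if no closure completes.
    """
    start = None
    for timestamp, ear in samples:
        if ear < threshold:
            if start is None:
                start = timestamp
        elif start is not None:
            return timestamp - start
    return 0.0


def classify_closure(duration_s, activate_after=4.0, rest_after=6.0):
    """Map a closure duration to 'ignore', 'activate' or 'resting'."""
    if duration_s < activate_after:
        return "ignore"      # ordinary, unintentional blink
    if duration_s < rest_after:
        return "activate"    # deliberate closure: enter action mode
    return "resting"         # eyes stayed closed: user is resting or asleep


# Hypothetical samples: eyes close at 0.5 s and reopen at 5.0 s.
samples = [(0.0, 0.30), (0.5, 0.10), (2.0, 0.10), (4.8, 0.10), (5.0, 0.30)]
state = classify_closure(first_closure_duration(samples))
```

Using timestamps rather than frame counts keeps the 4-second rule independent of the camera frame rate, which can vary on a Raspberry Pi.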

The key aim of this project is to assist these people and to make them more confident in managing their daily needs by themselves. The primary advantage is that the device is portable and lightweight.

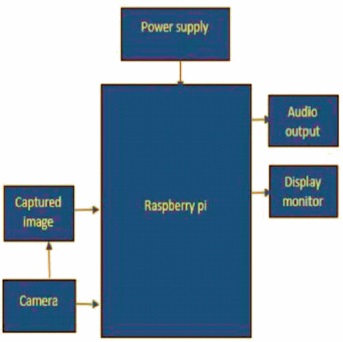

The block diagram (Figure 5) consists of a Raspberry Pi built around the BCM2837 system-on-chip (quad-core ARM Cortex-A53), a USB camera, a power supply, flash memory, SRAM and speakers or an earphone. The USB camera module, placed in front of the user, captures the image.

Figure 5. Block Diagram of the Proposed System

The HDMI interface is used to connect the Raspberry Pi to the monitor. SRAM is used for temporary storage and the flash memory for permanent storage of the data. The BCM2837 processor performs the required action on the captured image and provides audio output through the earphone. Power is supplied by connecting the Raspberry Pi to an adapter and the adapter to a wall socket.

In this work, an implementation of an eye-blink-based communication device for paralyzed people has been presented. Experimental results show that it can be used by disabled patients to communicate with the people around them via eye blinks. The obtained results show that the algorithm used allows precise detection of eye blinks. The performed tests demonstrate that the designed eye-blink sensor device is a useful tool for communication. This research work gives people with complete paralysis the advantage of communicating with the outside world. It will help them to meet their personal needs and give them the chance to finally interact with the world. This will make them more self-dependent, and they will not feel isolated anymore.

The advancement of technology always fascinates us. We found that there has been no significant research on communication devices for deaf, hard-of-hearing, or paralyzed individuals affected by LIS. We therefore started to look into the newsletters and published papers available to us. Medical science is improving day by day, and medical operations are getting easier. Newly developed high-tech gadgets are being introduced for paralysed patients who suffer greatly from their physical disabilities. One of the team members is part of an NGO, where he has seen people aged over 40 suffering from paralysis or other disabilities. To meet their needs, it is time to digitize their daily life with simple eye movements.

To advance this system further, eye movement tracking can be improved so that the patient can communicate fully using the pupil.

For this, we will refer to the book 'BLINK TO SPEAK - Ashaek Hope' (available at http://www.ashaekhope.com/blink-to-speak). With the help of this book, we expect to make progress on our proposed system.