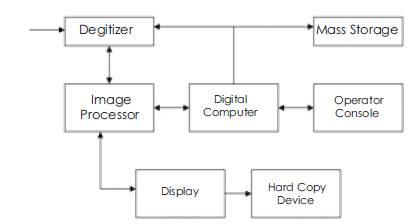

Figure 1. Block Diagram of Typical Image Processing

Images are captured at low contrast in a number of different scenarios. Image enhancement algorithms are used in a variety of image processing applications, primarily to improve the visual quality of an image by accentuating certain features. Image processing modifies pictures to improve them (enhancement, restoration) and to prepare suitable images for various applications from raw, unprocessed images. Image enhancement improves the quality (clarity) of images for human viewing. Increasing contrast and revealing details are examples of enhancement operations, whereas removing blur and noise falls under the category of image restoration.

This paper compares different image enhancement algorithms (histogram equalization, adaptive histogram equalization, continuous histogram equalization, decorrelation stretching, median filtering, negative image and intensity adjustment). Of these, the combination of decorrelation stretching, median filtering and intensity adjustment gives the best results, because it not only increases the intensity values but also removes salt-and-pepper noise.

Digital image processing is concerned primarily with extracting useful information from images. Ideally, this is done by computers, with little or no human intervention. Image processing algorithms may be placed at three levels. At the lowest level are those techniques which deal directly with the raw, possibly noisy pixel values, with denoising and edge detection being good examples. In the middle are algorithms which utilise low level results for further means, such as segmentation and edge linking. At the highest level are those methods which attempt to extract semantic meaning from the information provided by the lower levels, for example, handwriting recognition [2].

A typical digital image processing system [2, 3] is given in Figure 1.

Image processing has been developed in response to three major problems concerned with pictures:

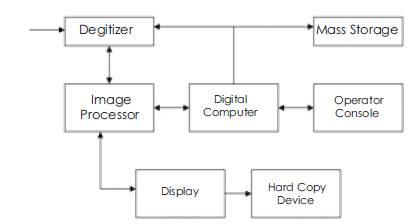

An image may be described as a two-dimensional function f(x, y) [3] as shown in Figure 2.

Here x and y are spatial coordinates. The amplitude of f(x, y) at any pair of coordinates (x, y) is called the intensity or gray value of the image at that point. When the spatial coordinates and amplitude values are all finite, discrete quantities, the image is called a digital image. A digital image f(x, y) is represented by a single two-dimensional integer array for a grayscale image and by a series of three two-dimensional arrays, one per colour band, for a colour image.


Figure 2. Two-Dimensional Distribution of image Points (Pixel)

Following is the list of different image processing functions based on the above two classes [1].

Image Acquisition

For the first seven functions, the inputs and outputs are images, whereas for the remaining three the outputs are attributes extracted from the input images. With the exception of image acquisition and display, most image processing functions are implemented in software.

Images are captured at low contrast in a number of different scenarios. The main reason for this problem is poor lighting conditions (e.g., pictures taken at night or against the sun). As a result, the image is too dark or too bright and is unsuitable for visual inspection or simple observation. Image enhancement algorithms are used in a variety of image processing applications, primarily to improve the visual quality of an image by accentuating certain features. Image processing modifies pictures to improve them (enhancement, restoration) and to prepare suitable images for various applications from raw, unprocessed images. Images can be processed by optical, photographic, and electronic means, but image processing using digital computers is the most common method because digital methods are fast, flexible, and precise. Image enhancement improves the quality (clarity) of images for human viewing. Increasing contrast and revealing details are examples of enhancement operations, whereas removing blur and noise falls under the category of image restoration [2, 3].

Doctors frequently use this technology to manipulate CT scans, MRI and X-ray images. Areas like forensic science use image sharpening (enhancement) techniques for criminal detection [4]. Enhancement algorithms are used extensively to enhance biometric (fingerprint, iris) images in airport and banking security systems. Palm print manuscripts contain religious texts and treatises on a host of subjects such as astronomy, astrology, architecture, law, medicine and music. Enhancement algorithms are an indispensable part of preservation projects that protect such valuable historical documents.

Enhancement techniques are applied to degraded documents so that the written text can be retrieved from them. Printing technology also makes extensive use of enhancement schemes to produce high quality photographic prints. Remote sensing, the acquisition of information about an object or phenomenon (e.g., forest, vegetation, land utilization, sea changes) using sensing devices that are not in physical or intimate contact with it, is another application: various image processing techniques are involved in analyzing the acquired data. Image restoration and enhancement are usually used together rather than individually. This class of image processing algorithms includes image sharpening, contrast enhancement and edge enhancement. Among the enhancement algorithms, contrast enhancement is the most important because it plays a fundamental role in the overall appearance of an image to a human observer. Human perception is sensitive to contrast rather than to the absolute values themselves, so it is justified to increase the contrast of an image for better perception. Reference [5] provides a detailed classification of conventional enhancement schemes under the heading of contrast enhancement.

Types of Image Enhancement Algorithms used in this work

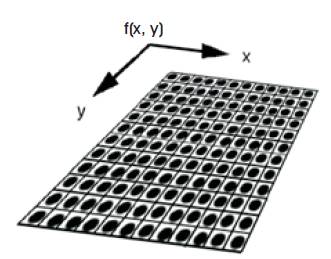

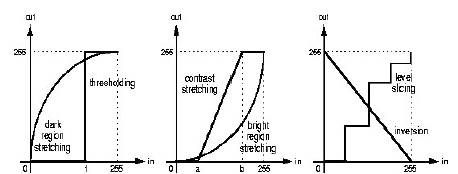

Apart from geometrical transformations, some preliminary grey level adjustments may be indicated to take into account imperfections in the acquisition system. This can be done pixel by pixel, calibrating against the output for an image with constant brightness. Frequently, space-invariant grey value transformations are also applied for contrast stretching, range compression, etc. The critical distribution is the relative frequency of each grey value, the grey value histogram, as shown in Figure 3. Examples of simple grey level transformations in this domain are:

Image enhancement usually employs various contrast enhancement schemes to increase the amount of visual perception. Different enhancement schemes emphasize different properties or components of images. Contrast enhancement techniques can be broadly classified into two categories. For the first category, the gray value of each pixel is modified based on the statistical information of the image. Power law transform, log transform, histogram equalization belong to this category. In the second category the contrast is enhanced by first separating the high and/or low frequency components of the image, manipulating them separately and then recombining them together with the different weights.
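As an illustration of the first category, the log and power law transforms mentioned above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions: it uses the textbook forms s = c log(1 + r) and s = (L - 1)(r / (L - 1))^γ, the function names and the `levels` parameter are hypothetical, and the input is assumed to be an unsigned 8-bit grayscale array.

```python
import numpy as np

def log_transform(img, levels=256):
    """Log transform s = c * log(1 + r); expands dark values, compresses bright ones."""
    r = img.astype(np.float64)
    # Assumed scaling: choose c so that the maximum level (levels - 1) maps to itself.
    c = (levels - 1) / np.log(levels)
    return np.round(c * np.log1p(r)).astype(np.uint8)

def power_law_transform(img, gamma, levels=256):
    """Power law (gamma) transform applied to intensities normalised to [0, 1]."""
    r = img.astype(np.float64) / (levels - 1)
    return np.round((levels - 1) * r ** gamma).astype(np.uint8)
```

With `gamma` below 1 the power law transform brightens dark regions, and with `gamma` above 1 it darkens them; `gamma = 1` leaves the image unchanged.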

Figure 3. Relative Frequency of each grey value

The negative of an image with gray levels in the range [0, L-1] is obtained by using the negative transformation, which is given by Equation 1, $s = L - 1 - r$.

Here r and s denote the values of a pixel before and after processing, and L is the number of gray levels of the input image, so that L-1 is the maximum gray level intensity. Reversing the intensity levels of an image in this manner produces the equivalent of a photographic negative. This type of processing is particularly suited for enhancing white or gray detail embedded in dark regions of an image.

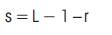

The luminance histogram of a typical natural scene that has been linearly quantized is usually highly skewed toward the darker levels; a majority of the pixels possess a luminance less than the average. In such images, detail in the darker regions is often not perceptible. One means of enhancing these types of images is a technique called histogram modification, in which the original image is rescaled so that the histogram of the enhanced image follows some desired form. This method also assumes that the information carried by an image is related to the probability of occurrence of each gray level. To maximize the information, the transformation should redistribute the probabilities of occurrence of the gray levels to make them uniform. In this way, the contrast at each gray level is proportional to the height of the image histogram. Various modifications of histogram equalization are also available, which increase its potential for contrast enhancement. Adaptive histogram equalization (AHE) and contrast limited adaptive histogram equalization (CLAHE) belong to that category; they apply histogram equalization locally on the image and provide better contrast.

Nowadays, digital cameras are certainly the most used devices to capture images. They are everywhere, including mobile phones, personal digital assistants (PDAs, a.k.a. pocket computers or palmtop computers), robots, and surveillance and home security systems. There is no doubt that the quality of the images obtained by digital cameras, regardless of the context in which they are used, has improved a lot since the early days of digital cameras. Part of this improvement is due to the higher processing capability and memory availability of the systems they are built into.

However, there are still a variety of problems which need to be tackled regarding the quality of the images obtained, including [6]:

Among the seven problems listed above, some depend more on the quality of the capture devices used, whereas others are related to the conditions in which the image was captured. When working on the latter, the time required to correct a contrast problem is a big issue. This is because the methods developed to correct these problems may be applied to an image on a mobile phone with very low processing capability or on a powerful computer. Moreover, in real-time applications, the efficiency of such methods is usually favored over the quality of the images obtained [5].

A fast method generating images with medium enhancement on image contrast is worth more than a slow method with outstanding enhancement.

This work proposes two methodologies for contrast enhancement in digital imaging using histogram equalization (HE). Although there has been a lot of research in the image enhancement area for 40 years, there is still a lot of room for improvement concerning the quality of the enhanced image obtained and the time necessary to obtain it [1]. HE is a histogram specification process which consists of generating an output image with a uniform histogram (i.e., uniform distribution). In image processing, the idea of equalizing a histogram is to stretch and/or redistribute the original histogram using the entire range of discrete levels of the image, in such a way that an enhancement of image contrast is achieved [3]. HE is a technique commonly used for image contrast enhancement, since it is computationally fast and simple to implement. The main motivation is to preserve the best features of the HE methods and to introduce some modifications which overcome the drawbacks associated with them.
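The redistribution of gray levels performed by classical HE can be sketched with the cumulative histogram used as the mapping function. The following is a minimal sketch, assuming an unsigned 8-bit grayscale image held in a NumPy array; the function name `histogram_equalize` and the `levels` parameter are hypothetical.

```python
import numpy as np

def histogram_equalize(img, levels=256):
    """Classical global HE for a 2-D unsigned-integer grayscale image."""
    # Relative frequency of each gray level (the gray value histogram).
    hist = np.bincount(img.ravel(), minlength=levels)
    # Cumulative distribution function, with values in (0, 1].
    cdf = np.cumsum(hist) / img.size
    # Map each input level to an output level spread over [0, levels - 1].
    mapping = np.round((levels - 1) * cdf).astype(np.uint8)
    # Apply the lookup table pixel by pixel.
    return mapping[img]
```

Because the mapping is a single lookup table built from one pass over the histogram, the method is fast, which matches the motivation given above.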

In the case of gray-level image contrast enhancement, methods based on HE have been the most used. Despite its success for image contrast enhancement, this technique has a well-known drawback: it does not preserve the brightness of the input image in the output one. This drawback makes classical HE techniques unsuitable for image contrast enhancement in consumer electronic products, such as video surveillance, where preserving the input brightness is essential to avoid generating non-existent artifacts in the output image. In order to overcome this problem, variations of the classic HE technique have proposed to first decompose the input image into two sub-images and then perform HE independently on each sub-image (Bi-HE). These works show mathematically that dividing the image into two raises the expectation of preserving the brightness [6].
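The Bi-HE idea of equalizing two sub-images independently can be sketched as follows. This is only an illustrative sketch: the text does not say how the image is split, so the threshold is assumed here to be the mean gray level of the input (one common choice), and the function name `bi_histogram_equalize` is hypothetical.

```python
import numpy as np

def bi_histogram_equalize(img, levels=256):
    """Sketch of Bi-HE: split at a threshold, equalize each part in its own range."""
    t = int(img.mean())  # assumed split point: mean gray level of the input
    hist = np.bincount(img.ravel(), minlength=levels).astype(np.float64)
    mapping = np.arange(levels, dtype=np.float64)
    lower, upper = hist[: t + 1], hist[t + 1 :]
    if lower.sum() > 0:
        # Levels 0..t are equalized into the output range [0, t].
        mapping[: t + 1] = t * np.cumsum(lower) / lower.sum()
    if upper.sum() > 0:
        # Levels t+1..levels-1 are equalized into the range [t + 1, levels - 1].
        mapping[t + 1 :] = (t + 1) + (levels - 2 - t) * np.cumsum(upper) / upper.sum()
    return np.round(mapping).astype(np.uint8)[img]
```

Since pixels below the threshold stay below it and pixels above stay above it, the output brightness cannot drift as far from the input brightness as it can with global HE.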

Although Bi-HE successfully performs image contrast enhancement and also preserves the input brightness to some extent, it might generate images which do not look as natural as the input ones. Unnatural images are unacceptable for use in consumer electronics products. Hence, in order to enhance contrast, preserve brightness and produce natural looking images, the authors propose a generic Multi-HE (MHE) method that first decomposes the input image into several sub-images and then applies the classical HE process to each of them. They present two discrepancy functions to decompose the image, yielding two variants of the generic MHE method for image contrast enhancement, i.e., Minimum Within-Class Variance MHE (MWCVMHE) and Minimum Middle Level Squared Error MHE (MMLSEMHE). Moreover, a cost function, which takes into account both the discrepancy between the input and enhanced images and the number of decomposed sub-images, is used to automatically determine how many sub-images the input image will be decomposed into, as shown in Figure 4.

Image adjustment is an image enhancement technique which is used to improve an image, where "improve" is sometimes defined objectively [2, 3] (e.g., increase the signal-to-noise ratio) and sometimes subjectively (e.g., make certain features easier to see by modifying the colors or intensities).

The image can be adjusted using different methods. They are:

Figure 4. Original and CLAHE images

Figure 5. Contrast Adjustment by its limit

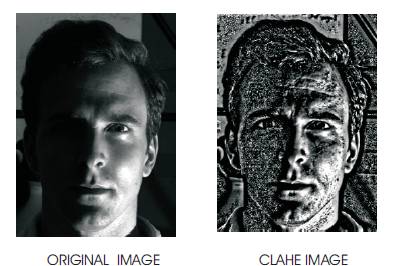

Image contrast is adjusted by linearly scaling pixel values. The Contrast Adjustment block adjusts the contrast of an image by linearly scaling the pixel values between upper and lower limits, as shown in Figure 5. Pixel values that are above or below this range are saturated to the upper or lower limit value, respectively [5].

Mathematically, the contrast adjustment operation is described by the following equation, where the input limits are [low_in high_in] and the output limits are [low_out high_out]:

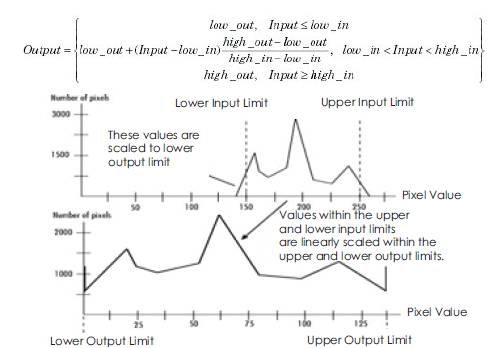
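The linear scaling with saturation described above can be sketched in Python with NumPy. Because the equation itself is available only as a figure, the mapping below is the usual linear form and should be read as an assumption rather than as the block's exact definition; the function name `adjust_contrast` and its parameters are hypothetical.

```python
import numpy as np

def adjust_contrast(img, in_limits=None, out_limits=(0.0, 255.0)):
    """Linearly map [low_in, high_in] to [low_out, high_out], saturating outside."""
    x = img.astype(np.float64)
    # Default input limits: the full input data range [min max].
    low_in, high_in = (x.min(), x.max()) if in_limits is None else in_limits
    low_out, high_out = out_limits
    if high_in == low_in:
        # Degenerate range: nothing to stretch (assumed behaviour for this sketch).
        return np.full_like(x, low_out)
    # Values outside the input limits are saturated to the nearest limit.
    x = np.clip(x, low_in, high_in)
    return low_out + (x - low_in) * (high_out - low_out) / (high_in - low_in)
```

Calling `adjust_contrast(img)` with the defaults stretches the image over the full range [0, 255], while passing `in_limits=(low, high)` corresponds to a user-defined input range.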

Use the Adjust pixel values from and Adjust pixel values to parameters to specify the upper and lower input and output limits. All options are described below.

Use the Adjust pixel values from parameter to specify the upper and lower input limits.

If we select Full input data range [min max], the block uses the minimum input value as the lower input limit and the maximum input value as the upper input limit.

If we select User-defined, the Range [low high] parameter associated with this option appears. Enter a two-element vector of scalar values, where the first element corresponds to the lower input limit and the second element corresponds to the upper input limit.

If we select Range determined by saturating outlier pixels, the Percentage of pixels to saturate [low high] (in %), Specify number of histogram bins (used to calculate the range when outliers are eliminated), and Number of histogram bins parameters appear on the block. The block uses these parameter values to calculate the input limits in this three-step process:

Use the Adjust pixel values to parameter to specify the upper and lower output limits.

If we select Full data type range, the block uses the minimum value of the input data type as the lower output limit and the maximum value of the input data type as the upper output limit.

If we select User-defined range, the Range [low high] parameter appears on the block. Enter a two-element vector of scalar values, where the first element corresponds to the lower output limit and the second element corresponds to the upper output limit.

Table 1. Blocks Handling Pixel Values

Figure 6. Image with Histogram Equalisation

If any input pixel value is either INF or -INF, the Contrast Adjustment block will change the pixel value according to how the parameters are set. Table 1 shows how the block handles these pixel values.

If any input pixel has a NaN value, the block maps the pixels with valid numerical values according to the user-specified method. It maps the NaN pixels to the lower limit of the Adjust pixel values to parameter.

Image enhancement techniques are used to improve an image, where "improve" is sometimes defined objectively (e.g., increase the signal-to-noise ratio), and sometimes subjectively (e.g., make certain features easier to see by modifying the colors or intensities) [4].

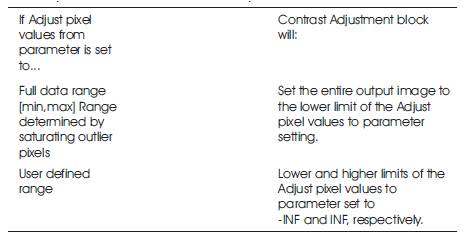

Intensity adjustment is an image enhancement technique that maps an image's intensity values to a new range. To illustrate, Figure 6 shows a low-contrast image with its histogram. Notice in the histogram how all the values gather in the center of the range.

If we remap the data values to fill the entire intensity range [0, 255], we can increase the contrast of the image.

The functions described in this section apply primarily to grayscale images. However, some of these functions can be applied to color images as well. For information about how these functions work with color images, see the reference pages for the individual functions.

Correlation is a signal-matching technique. It is an important component of radar, sonar, digital communications and many other systems. Correlation is a mathematical operation that is similar to convolution.

Decorrelation is the inverse operation of correlation. Here it is used to bring out the differences between two signals. Decorrelation stretching is applied to multichannel images. It does not change the size, mean or variance of the image. A decorrelation stretch enhances the image by increasing contrast.
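One common way to realise a decorrelation stretch is through the eigendecomposition of the band covariance matrix. The sketch below follows that formulation; it is an assumption, since the text does not state which variant was used, and the function name `decorrelation_stretch` is hypothetical. The bands are whitened and then rescaled so that each band keeps its original mean and variance.

```python
import numpy as np

def decorrelation_stretch(img):
    """Remove inter-band correlation of an (H, W, C) image, keeping band means/variances."""
    h, w, c = img.shape
    x = img.reshape(-1, c).astype(np.float64)
    mean = x.mean(axis=0)
    cov = np.cov(x, rowvar=False)
    sigma = np.sqrt(np.diag(cov))            # original per-band standard deviations
    eigvals, eigvecs = np.linalg.eigh(cov)   # covariance matrix is symmetric
    eigvals = np.maximum(eigvals, 1e-12)     # guard against division by zero
    # Whitening matrix: rotate to principal axes, equalize variances, rotate back.
    whiten = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    # Decorrelate, then restore each band's original spread and mean.
    y = (x - mean) @ whiten * sigma + mean
    # Result is floating point and not clipped to the display range.
    return y.reshape(h, w, c)
```

The output has the same size as the input, and for display it would typically be clipped or linearly stretched to the valid intensity range afterwards.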

A median filter is a non-linear filter and is efficient in removing salt-and-pepper noise. Variants of median filters are

The median ξ of a set of values is such that half of the values in the set are less than or equal to ξ and half are greater than or equal to ξ. In order to perform median filtering at a point in an image, first sort the values of the pixels in the neighborhood, then determine their median and assign that value to the corresponding pixel in the filtered image.

For example, in a 3 x 3 neighborhood the median is the 5th largest value, and so on. In fact, isolated clusters of pixels that are light or dark with respect to their neighbors, and whose area is less than $m^2/2$ (one-half the filter area), are eliminated by an m x m median filter. In this case "eliminated" means forced to the median intensity of the neighbors. Larger clusters are affected considerably less [4].
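The procedure described above can be sketched with NumPy as follows. This is a minimal sketch, assuming an odd filter size m and border pixels handled by replicating the nearest edge value (the text does not specify border handling); the function name `median_filter` is hypothetical.

```python
import numpy as np

def median_filter(img, m=3):
    """Replace each pixel by the median of its m x m neighborhood (m odd)."""
    pad = m // 2
    # Assumed border handling: replicate edge pixels so the output keeps the input size.
    padded = np.pad(img, pad, mode="edge")
    # View of every m x m neighborhood, shape (H, W, m, m).
    windows = np.lib.stride_tricks.sliding_window_view(padded, (m, m))
    # Median over the two window axes.
    return np.median(windows, axis=(-2, -1)).astype(img.dtype)
```

A single bright pixel on a uniform background has area 1, which is less than $m^2/2 = 4.5$ for m = 3, so a 3 x 3 filter removes it completely.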

The inverse transformation reverses light and dark. An example of inverse transformation is an image negative. A negative image is obtained by subtracting each pixel from the maximum pixel value. For an 8-bit image, the negative image can be obtained by reverse scaling of the gray levels, according to the transformation

$g(m, n) = 255 - f(m, n)$

Where, f (m, n) is the input image and g (m, n) is the output image.
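As a short illustration, this transformation can be written in Python with NumPy. The sketch assumes an unsigned integer grayscale image; the function name `negative` and the `levels` parameter (256 for an 8-bit image) are hypothetical.

```python
import numpy as np

def negative(img, levels=256):
    """Image negative: subtract each pixel from the maximum gray level."""
    # Compute in a wider signed type so the subtraction cannot wrap around.
    return ((levels - 1) - img.astype(np.int64)).astype(img.dtype)
```

Applying the function twice returns the original image, since the transformation is its own inverse.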

Negative images are useful in the display of medical images and in producing negative prints of images. The negative of an image sometimes gives more information for interpretation than the original image.

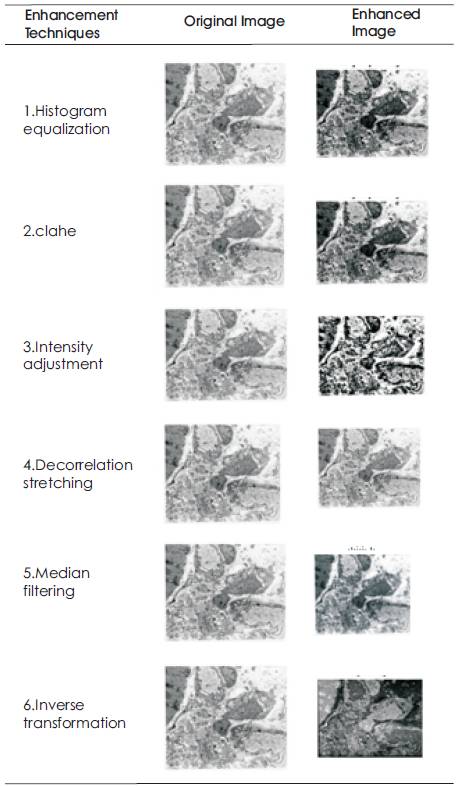

Table 2. Different Enhancement Techniques Implemented on an Image of a Cardiogram from a Diabetic Patient

Table 3. Different Enhancement Techniques Implemented on an Image of the Moon Taken from a Satellite

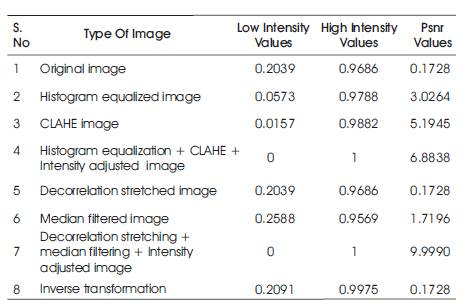

Table 4. Comparison of Intensity values for the different techniques used

The different enhancement techniques implemented on the image of a cardiogram from a diabetic patient are presented in Table 2.

Algorithms were written using eight different techniques, and their outputs are shown in Table 2 and Table 3. In this paper the authors have proposed seven enhancement algorithms which are effective for enhancing medical images.

The details of the image such as width, length and pixel values are also displayed.

From Table 4, method 7 and method 4 give the same intensity values, with PSNR values of 4.8913 and 3.0264, respectively. Based on these PSNR values, the authors conclude that method 7 is the more effective of the two visually.
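The text does not state how the PSNR values were computed. For reference, the conventional definition, PSNR = 10 log10(MAX² / MSE), can be sketched as follows; the function name `psnr` and the default peak value of 255 (8-bit images) are assumptions.

```python
import numpy as np

def psnr(reference, processed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(processed, dtype=np.float64)
    mse = np.mean((ref - out) ** 2)   # mean squared error
    if mse == 0:
        return float("inf")           # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)
```

Under this definition a higher PSNR means the processed image is numerically closer to the reference.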

The techniques used here for enhancing images can be extended to the frequency domain. These techniques are usually used for electron micrographs, and they can be extended to dichromic images and color images.