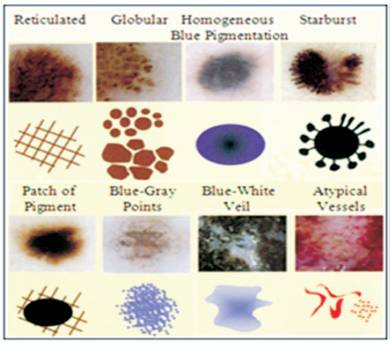

Figure 1. Dermoscopic Patterns[7]

Malignant melanoma is a deadly form of skin cancer in humans. It develops quickly and metastasizes readily. Late detection of melanoma is responsible for 75% of deaths related to skin tumors. Because the disease progresses rapidly, early diagnosis is an important factor in increasing the chances of a successful cure. Computer analysis and image processing are efficient tools that support quantitative medical diagnosis, so it is worthwhile to develop computer-based methods for dermatological images. To obtain effective results and information about the distinct stages of the affected area, the features of that particular area are needed to decide the stage. The feature extraction phase therefore depends heavily on the detected diseased region, and an appropriate segmentation algorithm is required that can effectively detect the melanoma pixels in the input image. In this work, an algorithm is presented that can reliably separate the pixels of the melanoma region from those of normal skin. The proposed work uses a hybrid technique in which the space complexity of intensity values is reduced by taking pre-segmentation results from a Gaussian mixture posterior algorithm. The algorithm first chooses candidates from different regions of the image that have distinct intensity values, and then Gaussian models are built from the chosen locations using their neighborhood pixels. Posterior testing is then carried out to obtain pre-segmented results. Finally, neural-network-based training and testing are performed to obtain the final segmentation. Experimental results show that the proposed algorithm achieves 98% accuracy on the tested database images.

Computer-aided diagnosis (CAD) systems for skin cancer classification have developed rapidly over the past decade. An important role of CAD systems is to give dermatologists a “second opinion” that supports a successful diagnosis. In dermatology, skin cancer lesions are divided into two main types: malignant melanoma and non-melanoma. Compared with current melanoma CAD systems, the recognition rate for non-melanoma skin lesions is below 75%, possibly because of the wide variety of lesion appearances. A substantial number of studies have shown that measuring tissue lesion features may be of crucial importance in clinical practice, because different tissue lesions can be identified from measurable features derived from an image. In recent years, computer vision-based diagnostic systems have been used in several hospitals and dermatology clinics, mostly aimed at the early detection of malignant melanoma. Melanoma is also among the most frequent types of skin cancer. It is of particular concern because its incidence has increased faster than that of all other cancers, with annual increases on the order of 3–7% in fair-skinned populations [1]. Advanced cutaneous melanoma is still incurable, but when diagnosed at an early stage it can be cured without complications. Distinguishing early melanoma from other, non-malignant pigmented skin lesions is not trivial, even for experienced dermatologists, and in some cases primary care physicians misjudge melanoma in its early stage [2]. This paper addresses skin image segmentation using a hybrid approach to separate pigmented skin lesions from normal skin, together with feature extraction from the separated regions.

The analysis of digital dermoscopy images can follow various methodologies, guided either by medical expert knowledge or by a machine learning point of view. All methodologies share the same basic processing steps: segmentation, feature extraction, and classification. The different dermoscopic patterns are displayed in Figure 1.


Two important approaches can be distinguished in dermoscopy digital image analysis for melanoma diagnosis, as follows:

In the first approach, a large number of (more or less) low-level features is computed, typically a few hundred. The features that best discriminate between melanoma and benign pigmented skin lesions (PSLs) are then selected automatically by a dedicated procedure, which reduces the set to a few tens, and a classifier is built on these features. In general, it is not obvious to a human user what such features actually measure or how the classifier arrives at its decision.

In the second approach, a small number of high-level diagnostic features used by medical experts for visual assessment of the images are modeled mathematically. Semi-quantitative diagnostic algorithms such as the ABCD rule are then automated using the computed features instead of human scores. Usually, the user can easily understand the meaning of the features and how the classification algorithm arrives at the proposed diagnosis.

In practice, the two approaches only occasionally appear in pure form; more often a mixed approach is followed. A system developer may extend the number of features (e.g., by introducing variants) and then tune the classification algorithm if this improves system performance and acceptance by users. Conversely, when the black box approach is applied, the number and type of features and the choice of classifier can be restricted to gain transparency. An example of the black box approach was given by Blum et al. [3], who did not follow a predefined strategy (e.g., the ABCD rule) but instead applied a large number of vision-algebra algorithms to the image; specifically, they used 64 analytical parameters to build the classifier. In this work, feature design for automated diagnosis of skin lesions is based on the ABCD rule of dermatology. The ABCD rule, which forms the basis of diagnosis by a dermatologist [4], stands for Asymmetry, Border irregularity, Color variation, and Diameter of the skin lesion. Feature extraction is a computation on the pixels that represent a segmented object, which allows features that are not visible to the eye to be recorded. Several studies have also demonstrated the effectiveness of border shape descriptors for identifying malignant melanoma in both clinical and computer-based assessment [5].
Three types of features are used in this study: border features, which correspond to the A and B parts of the ABCD rule of dermatology; color features, which cover the C rule; and textural features, which are based on the D rule.

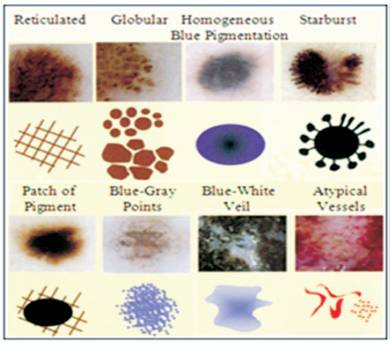

The ABCD rule is a medical algorithm intended to facilitate the diagnosis of melanoma by less experienced observers. It is based on the assessment of four criteria [6], listed in Table 1.

Table 1. Criteria and Scoring of the “ABCD Rule”

For asymmetry (A), the pigmented lesion is divided by two perpendicular axes placed so as to obtain the greatest possible symmetry, and asymmetry in shape, color, and structure is evaluated on both sides of each axis. A score of 0 is assigned if there is no asymmetry about either axis, 1 if there is asymmetry about one axis, and 2 if there is asymmetry about both axes, so the lesion receives a score of 0–2.
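The asymmetry criterion can be approximated on a segmented lesion mask. The Python sketch below is a shape-only simplification under stated assumptions: it ignores the color and structure components of the criterion, assumes the mask has already been rotated so that the two perpendicular axes are aligned with the image rows and columns through the lesion centroid, and uses a hypothetical mismatch tolerance (`max_mismatch`). The name `asymmetry_score` is illustrative, not taken from this work.

```python
import numpy as np

def asymmetry_score(mask: np.ndarray, max_mismatch: float = 0.1) -> int:
    """Shape-only ABCD asymmetry score (0, 1 or 2) for a binary lesion mask.

    Assumption: the mask is already rotated so that its two perpendicular
    axes of best symmetry are aligned with the image rows and columns.
    max_mismatch is a hypothetical tolerance, not a value from this paper.
    """
    mask = mask.astype(bool)
    area = int(mask.sum())
    if area == 0:
        raise ValueError("empty lesion mask")
    h, w = mask.shape
    rows, cols = np.nonzero(mask)
    # Both axes pass through the (rounded) lesion centroid.
    cr, cc = int(round(rows.mean())), int(round(cols.mean()))
    score = 0
    # Mirror every lesion pixel about the horizontal axis, then the vertical axis.
    for mr, mc in ((2 * cr - rows, cols), (rows, 2 * cc - cols)):
        inside = (mr >= 0) & (mr < h) & (mc >= 0) & (mc < w)
        matched = np.zeros(area, dtype=bool)
        matched[inside] = mask[mr[inside], mc[inside]]
        # Fraction of lesion pixels whose mirror image falls outside the lesion.
        mismatch = 1.0 - matched.sum() / area
        if mismatch > max_mismatch:
            score += 1
    return score
```

A disk scores 0, a T-shape (symmetric about its vertical axis only) scores 1, and an L-shape scores 2.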

For border (B), the lesion is divided into eight segments, and each segment that shows an abrupt cut-off of the pigment pattern at the border scores 1. The minimum score is therefore 0 and the maximum is 8.
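A similarly hedged sketch of the border criterion splits the lesion border into eight angular segments around the centroid and counts the segments whose mean intensity gradient exceeds a threshold. Treating a sharp gradient as a proxy for an abrupt cut-off, and the value of `grad_threshold` (which assumes intensities scaled to [0, 1]), are assumptions for illustration; `border_score` is a hypothetical helper.

```python
import numpy as np

def border_score(image: np.ndarray, mask: np.ndarray, grad_threshold: float = 0.2) -> int:
    """Count how many of 8 angular border segments show a sharp intensity edge."""
    mask = mask.astype(bool)
    gy, gx = np.gradient(image.astype(float))
    grad = np.hypot(gx, gy)
    # Border pixels: lesion pixels with at least one 4-neighbor outside the lesion.
    padded = np.pad(mask, 1, constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    border = mask & ~interior
    rows, cols = np.nonzero(mask)
    cr, cc = rows.mean(), cols.mean()
    br, bc = np.nonzero(border)
    # Angle of each border pixel around the centroid, mapped to segments 0..7.
    angles = np.arctan2(br - cr, bc - cc)
    segment = ((angles + np.pi) / (2 * np.pi) * 8).astype(int) % 8
    score = 0
    for s in range(8):
        sel = segment == s
        if sel.any() and grad[br[sel], bc[sel]].mean() > grad_threshold:
            score += 1
    return score
```

A dark disk on a bright background scores 8 (sharp everywhere), while the same mask on a flat image scores 0.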

For color (C), the presence of six colors (white, light brown, dark brown, blue-gray, red, and black) is counted, so the maximum score is 6 and the minimum is 1. White is scored only if the area is lighter than the surrounding skin, particularly in white areas of regression.
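Counting colors can be sketched as nearest-prototype assignment: every lesion pixel is mapped to the closest of six reference colors, and a color counts as present if it covers at least a minimum fraction of the lesion. The RGB prototypes in `ABCD_COLORS` and the 5% `min_fraction` are hypothetical placeholders that a real system would calibrate, and the condition that white must be lighter than the surrounding skin is not modeled.

```python
import numpy as np

# Hypothetical RGB prototypes for the six ABCD colors (placeholders only).
ABCD_COLORS = {
    "white": (255, 255, 255),
    "light brown": (181, 134, 84),
    "dark brown": (93, 52, 24),
    "blue-gray": (112, 128, 144),
    "red": (200, 40, 40),
    "black": (20, 20, 20),
}

def color_score(lesion_pixels, palette=ABCD_COLORS, min_fraction=0.05):
    """ABCD color score (1-6): palette colors covering >= min_fraction of the lesion."""
    pixels = np.asarray(lesion_pixels, dtype=float).reshape(-1, 3)
    protos = np.array(list(palette.values()), dtype=float)
    # Distance from every pixel to every prototype; keep the nearest one.
    dists = np.linalg.norm(pixels[:, None, :] - protos[None, :, :], axis=2)
    nearest = dists.argmin(axis=1)
    share = np.bincount(nearest, minlength=len(protos)) / len(pixels)
    # The rule's minimum score is 1, since any lesion shows at least one color.
    return max(1, int((share >= min_fraction).sum()))
```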

In the clinical ABCD rule applied to macroscopic images, “D” stands for diameter, and a diameter greater than 6 mm is considered a sign of malignancy. Here, the dermoscopic ABCD rule is described, in which “D” refers to dermoscopic structures, a concept similar to the “pattern” considered in “Pattern Analysis”. Five structures are assessed, each scoring 1, so the maximum score is 5 and the minimum is 1. The dermoscopic structures are: a pigment network, whether typical or atypical; homogeneous (structureless) areas, which must cover more than 10% of the lesion; dots, if more than two are present; globules, if at least two are present; and streaks (linear extensions or pseudopods), if more than two are present.
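The four criteria are usually combined into a single number. A minimal sketch, assuming the weighting commonly used for the dermoscopic ABCD rule (the Total Dermoscopy Score of Stolz et al., TDS = 1.3·A + 0.1·B + 0.5·C + 0.5·D, with TDS below 4.75 read as benign, 4.75 to 5.45 as suspicious, and above 5.45 as highly suspicious for melanoma). These weights and cut-offs are not stated in this section, and the function names are illustrative.

```python
def total_dermoscopy_score(a: int, b: int, c: int, d: int) -> float:
    """Weighted ABCD total (assumed Stolz weights), using the ranges in the text."""
    if not (0 <= a <= 2 and 0 <= b <= 8 and 1 <= c <= 6 and 1 <= d <= 5):
        raise ValueError("score outside the ABCD criterion ranges")
    return 1.3 * a + 0.1 * b + 0.5 * c + 0.5 * d

def interpret_tds(tds: float) -> str:
    """Map a TDS value to the commonly cited diagnostic bands."""
    if tds < 4.75:
        return "benign"
    if tds <= 5.45:
        return "suspicious"
    return "likely melanoma"
```

For example, A = 1, B = 4, C = 3, D = 4 gives 1.3 + 0.4 + 1.5 + 2.0 = 5.2, which falls in the suspicious band.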

When the dermoscopic technique is used, the complete life cycle of an automated system for melanoma detection consists of the following stages, shown in Figure 2 [16].

Figure 2. Tasks of the Life Cycle of an Automated System for the Detection of Melanoma [16]

First, the dermoscopic image is acquired.

Second, the image is preprocessed. The first priority is to improve image quality while keeping the image as close as possible to the original. This is also where the computerized processing of a software tool actually begins: the detection and treatment of “noise” in the images is addressed. Examples include hair, bubbles, light reflections, shadows, ink marks on the skin, electronic marks (generally digital identifiers or copyright data), black frames, and measuring devices such as rulers.

Third, the skin lesion is segmented. This is usually done automatically, although semi-automated systems exist in which the expert may intervene at this stage by providing information that improves the segmentation.

Fourth, the selected indicators are automatically identified and characterized in order to attempt a diagnosis.

Finally, a quantitative score of the degree of malignancy is computed according to the medical algorithm.

Amir et al. [8] proposed segmenting skin lesions in dermoscopic images with a Wavelet Network (WN). The proposed WN belongs to the class of fixed-grid WNs and requires no training. Experimental results show that this method performs more efficiently than several modern techniques that have been used successfully in many medical imaging problems. Maryam et al. [9] combine a physics-based flux model with a machine-learning approach to obtain a novel melanoma feature that identifies streaks in dermoscopic images and captures the turbulence in color dermoscopic images; the approach is sensitive to the radial features of streaks. Glaister et al. [10] proposed a novel multistage illumination modeling algorithm to correct illumination variation in skin lesion photographs, which gave better visual, segmentation, and classification results than three other illumination correction algorithms. Shubhangi and Nagaraj [11] proposed a technique for automatic segmentation of skin lesions in conventional macroscopic images based on Stochastic Region Merging (SRM), in which a Region Adjacency Graph (RAG) is used to segment the lesion based on the Discrete Wavelet Transform (DWT). Nilkamal and Jain [12] proposed a new approach for skin cancer detection and analysis from an image of the patient's lesion area, using the wavelet transform for image enhancement and denoising. Alessia Amelio and Clara Pizzuti [13] proposed a genetic algorithm for color image segmentation; experimental results show that it detects lesion borders accurately. Mehta et al. [14] presented an image classification system for early skin cancer detection.
Their work compares different segmentation methods for dermatoscopic images, with segmentation carried out between a preprocessing and a postprocessing stage: preprocessing addresses speed and noise filtering, and postprocessing addresses image quality. Jeffrey et al. [15] proposed a texture-based skin lesion segmentation algorithm. A set of representative texture distributions is learned from a skin lesion image, and a texture distinctiveness metric is computed for each distribution. Regions of the image are then classified as normal skin or lesion based on the occurrence of the representative texture distributions.

Biomedical image processing is a very broad field that covers biomedical signal acquisition, image formation, image processing, and image display for medical diagnosis. Features are then extracted from the images. Medical imaging is the technique and process of creating visual representations of the interior of the body for clinical analysis and medical intervention. It seeks to reveal internal structures hidden by the skin and bones, and to diagnose and treat disease. Medical imaging also establishes a database of normal anatomy and physiology that makes it possible to identify abnormalities. Clinical imaging devices combine hardware and software. The skin is the largest organ of the human body. Cancer is a group of diseases characterized by the uncontrolled growth and spread of abnormal cells; if the spread is not controlled, it can result in death. In this paper, an effective algorithm for border detection of the skin melanoma region is presented.

The flowchart for the proposed algorithm is shown in Figure 3.

Figure 3. Flowchart of the Techniques Used

An ordinary digital camera is not suitable for imaging subsurface skin structures, so a dermoscope is required. Images of melanoma are captured using dermoscopy, also called epiluminescence microscopy, which uses an instrument called a dermatoscope to observe and capture the skin lesion closely. In this work, dermoscopy images of melanoma were collected from the internet.

The L*a*b* color space is considered approximately perceptually uniform, which means that distances computed in this space correspond to color differences as perceived by the human visual system. The image is therefore converted from the RGB color space to the L*a*b* color space (also known as CIELAB or CIE L*a*b*), which makes it possible to quantify these visual differences. The L*a*b* space consists of a luminosity layer L*, a chromaticity layer a* indicating where the color falls along the red-green axis, and a chromaticity layer b* indicating where the color falls along the blue-yellow axis. Most of the color information is in the a* and b* layers. The difference between two colors can be measured using the Euclidean distance metric.
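For reference, the RGB to L*a*b* conversion and the Euclidean color distance can be written out directly. The sketch below assumes 8-bit sRGB input and the D65 white point, neither of which this section specifies; library routines such as scikit-image's `skimage.color.rgb2lab` perform the same conversion on whole images. The Euclidean distance in L*a*b* is the CIE76 color difference (ΔE*ab).

```python
import math

def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIE L*a*b* (D65 reference white)."""
    def linearize(v):
        # Undo the sRGB gamma curve.
        v = v / 255.0
        return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in rgb)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 white point

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

def delta_e76(lab1, lab2):
    """Euclidean distance in L*a*b* (CIE76 color difference)."""
    return math.dist(lab1, lab2)
```

White maps to roughly (100, 0, 0) and black to (0, 0, 0), so their distance is about 100; a lesion pixel can be compared with surrounding skin in the same way.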

Fuzzy c-means (FCM) is a clustering technique that allows one data point to belong to two or more clusters. The main difference between traditional hard clustering and fuzzy clustering is that in hard clustering an element belongs to only one cluster, whereas in fuzzy clustering an element may belong to several clusters with different degrees of membership. The fuzzy c-means method is the most common fuzzy clustering technique and is widely used in image processing applications. In this work, FCM is used as a pre-clustering step to obtain texture candidates, which are then used by the Gaussian mixtures.
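A minimal NumPy sketch of fuzzy c-means, alternating the standard center update c_i = Σ_j u_ij^m x_j / Σ_j u_ij^m and membership update u_ij = 1 / Σ_k (d_ij / d_kj)^(2/(m−1)), is shown below. The fuzzifier `m = 2`, the tolerance, and the random initialization are common defaults assumed here, not parameters reported in this work. Candidate pixels for the Gaussian stage could, for instance, be taken as those with the highest membership in each cluster.

```python
import numpy as np

def fuzzy_c_means(x, c=2, m=2.0, max_iter=100, tol=1e-5, seed=0):
    """Fuzzy c-means on samples x of shape (n,) or (n, n_features).

    Returns (centers, u) where u[i, j] is the membership of sample j in cluster i.
    """
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    rng = np.random.default_rng(seed)
    u = rng.random((c, len(x)))
    u /= u.sum(axis=0, keepdims=True)  # memberships of each sample sum to 1
    for _ in range(max_iter):
        um = u ** m
        centers = um @ x / um.sum(axis=1, keepdims=True)
        # Distance of every sample to every center, floored to avoid division by zero.
        d = np.linalg.norm(x[None, :, :] - centers[:, None, :], axis=2)
        d = np.fmax(d, 1e-10)
        # ratio[i, k, j] = (d_ij / d_kj) ** (2 / (m - 1)); sum over k gives 1 / u_ij.
        ratio = (d[:, None, :] / d[None, :, :]) ** (2.0 / (m - 1.0))
        new_u = 1.0 / ratio.sum(axis=1)
        converged = np.abs(new_u - u).max() < tol
        u = new_u
        if converged:
            break
    return centers, u
```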

A Gaussian mixture model (GMM) is a parametric probability density function represented as a weighted sum of Gaussian component densities. GMMs are commonly used as a parametric model of the probability distribution of continuous measurements or features in a biometric system, for example vocal-tract-related spectral features in a speaker recognition system. Their parameters are estimated from training data using the iterative Expectation-Maximization (EM) algorithm or Maximum A Posteriori (MAP) estimation from a well-trained prior model. In the Gaussian mixture stage of this work, candidates whose neighborhood intensity values differ from one another are chosen, and a Gaussian model is built from each. Segmentation is then performed to obtain a rough separation of skin cancer pixels from normal skin pixels, which is further refined by the ANN.
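The candidate-based Gaussian stage can be sketched as follows, under assumptions this section leaves open: a grayscale intensity image, one 1-D Gaussian per candidate estimated from a square neighborhood (`half_window`), and equal priors unless given. Each pixel is assigned to the component with the largest posterior probability. This mirrors the description above rather than a full EM fit, and `gaussian_posterior_segment` is a hypothetical name.

```python
import numpy as np

def gaussian_posterior_segment(image, seeds, half_window=3, priors=None):
    """Pre-segment a grayscale image with one Gaussian per candidate seed.

    Returns (labels, posteriors): labels[r, c] is the index of the most
    probable component, posteriors has shape (n_seeds, h, w).
    """
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    means, variances = [], []
    for r, c in seeds:
        # Neighborhood of the candidate, clipped at the image border.
        patch = img[max(r - half_window, 0):min(r + half_window + 1, h),
                    max(c - half_window, 0):min(c + half_window + 1, w)]
        means.append(patch.mean())
        variances.append(max(patch.var(), 1e-6))  # floor avoids zero variance
    means = np.array(means)[:, None, None]
    variances = np.array(variances)[:, None, None]
    k = len(seeds)
    priors = np.full(k, 1.0 / k) if priors is None else np.asarray(priors, float)
    # Log prior + Gaussian log-likelihood of every pixel under every component.
    log_post = (np.log(priors)[:, None, None]
                - 0.5 * np.log(2 * np.pi * variances)
                - (img[None, :, :] - means) ** 2 / (2 * variances))
    # Normalize to posterior probabilities (numerically stable softmax).
    log_post -= log_post.max(axis=0, keepdims=True)
    post = np.exp(log_post)
    post /= post.sum(axis=0, keepdims=True)
    return post.argmax(axis=0), post
```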

Artificial neural networks (ANNs) are an active area of research and application. The main difficulty in using an ANN is finding the most suitable combination of training, learning, and transfer functions for classifying data sets with a growing number of features and classes. Here the ANN is studied as a classifier, and different combinations of functions, their effect, and their accuracy are evaluated for different kinds of datasets. Real-world problems represented by multidimensional datasets are taken from a medical background, and the classification and clustering of these datasets are important. The dataset is divided into a training set and a testing set, and the testing set is not used in the training procedure. Two thirds of the dataset are used as the training set and the remainder as the test set, and accuracy is estimated by testing against the held-out data. The network is then simulated with the same data. The network is trained with the back-propagation algorithm, using gradient descent to minimize the mean squared error between the network output and the target output. The following parameters are considered to evaluate the efficiency of the network:

With a suitable combination of training, learning, and transfer functions, the back-propagation neural network is a highly effective tool for dataset classification.

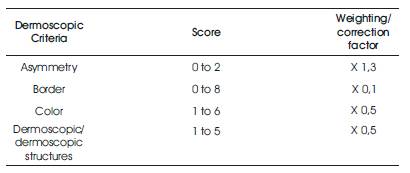

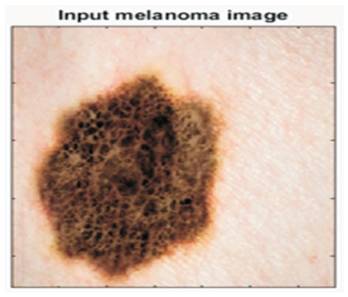
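The training procedure described above (back-propagation, gradient descent on the mean squared error, and a 2/3 training split) can be sketched with a one-hidden-layer network in NumPy. The layer size, learning rate, epoch count, sigmoid transfer function, and input features are assumptions for illustration; the section does not report the network configuration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def split_two_thirds(x, y, seed=0):
    """Random split: 2/3 of the samples for training, the rest held out for testing."""
    idx = np.random.default_rng(seed).permutation(len(x))
    cut = (2 * len(x)) // 3
    return x[idx[:cut]], y[idx[:cut]], x[idx[cut:]], y[idx[cut:]]

def train_mlp(x, y, hidden=8, lr=1.0, epochs=2000, seed=0):
    """One-hidden-layer sigmoid network trained by back-propagation with
    batch gradient descent on the mean squared error."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(0.0, 0.5, (x.shape[1], hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.5, (hidden, 1))
    b2 = np.zeros(1)
    t = y.reshape(-1, 1).astype(float)
    n = len(x)
    for _ in range(epochs):
        h = sigmoid(x @ w1 + b1)    # forward pass: hidden layer
        out = sigmoid(h @ w2 + b2)  # forward pass: output in (0, 1)
        # Backward pass: gradient of mean((out - t)^2) through both sigmoids.
        d_out = 2.0 / n * (out - t) * out * (1.0 - out)
        d_hid = (d_out @ w2.T) * h * (1.0 - h)
        w2 -= lr * (h.T @ d_out)
        b2 -= lr * d_out.sum(axis=0)
        w1 -= lr * (x.T @ d_hid)
        b1 -= lr * d_hid.sum(axis=0)
    return w1, b1, w2, b2

def predict(params, x):
    """Class 1 (e.g. lesion) where the network output exceeds 0.5."""
    w1, b1, w2, b2 = params
    return (sigmoid(sigmoid(x @ w1 + b1) @ w2 + b2) > 0.5).ravel().astype(int)
```

In a segmentation setting, each row of `x` would hold per-pixel features (for example intensity and the Gaussian-stage posterior, a hypothetical choice) and `y` the ground-truth label.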

This section presents experimental results of the proposed scheme on skin melanoma images. Final outputs are given for several images at the end, but intermediate outputs at the different stages are shown for a single image only. The input melanoma image is shown in Figure 4. The sensitivity and specificity of each image were computed against ground-truth lesion pixels marked manually by an observer, as shown in Figure 5.

Figure 4. Image Used for Experimental Results

Figure 5. Ground Truth of the above Image from Observer's Point of View

Below are the results for a few images from the database tested with this algorithm. Figure 6 shows the segmented results for the input images.

Figure 6. Segmented Results for the Input Images

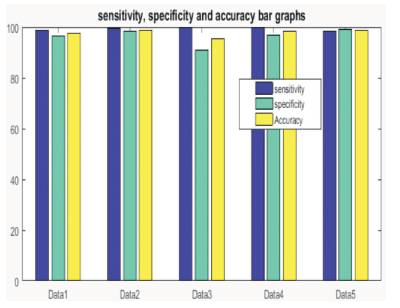

Sensitivity is the proportion of subjects with the disease who test positive, out of all subjects with the disease: TP/(TP + FN).

Specificity, a measure of diagnostic-test accuracy complementary to sensitivity, is the proportion of subjects without the disease who test negative, out of all subjects without the disease: TN/(TN + FP). Here TP, FN, TN, and FP denote the true positive, false negative, true negative, and false positive pixels found by the ANN with respect to the ground truth.

Accuracy is computed here as the mean of sensitivity and specificity. Figure 7 shows bar graphs of these measures, computed from the ground-truth images and the segmentation results of the proposed technique.

Figure 7. Bar Graphs for the Database Images with Sensitivity, Specificity and Accuracy Parameters
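The three measures can be computed directly from a predicted mask and a ground-truth mask. Note that the text defines accuracy as the mean of sensitivity and specificity, which is often called balanced accuracy; it differs from the pixel accuracy (TP + TN)/(TP + TN + FP + FN). The sketch below follows the text's definition, and `segmentation_metrics` is an illustrative name.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """Sensitivity, specificity and their mean for binary masks (True = lesion)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)
    tn = np.sum(~pred & ~truth)
    fp = np.sum(pred & ~truth)
    fn = np.sum(~pred & truth)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    # "Accuracy" as defined in the text: mean of sensitivity and specificity.
    accuracy = (sensitivity + specificity) / 2
    return float(sensitivity), float(specificity), float(accuracy)
```

For a ground truth with 4 lesion and 6 background pixels where the prediction finds 3 lesion pixels and marks 2 background pixels as lesion, sensitivity is 3/4, specificity is 4/6, and the reported accuracy is their mean.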

The overall accuracy of the proposed algorithm on the tested images was found to be 98%.

Malignant melanoma is a deadly form of skin cancer in humans. Because the disease progresses rapidly, early diagnosis is an important factor in increasing the chances of a successful cure. Computer analysis and image processing are effective tools that support quantitative medical diagnosis, so it is worthwhile to develop computer-based methods for dermatological images. Because the stage of the disease must be determined as accurately as possible, feature extraction from the affected area is extremely important, and it depends heavily on the detected diseased region. This requires a suitable segmentation algorithm that effectively separates skin and melanoma pixels in the input image. Segmentation groups pixels that share common features, here color and texture. In the proposed work, the space complexity of intensity values is reduced by taking pre-segmentation results from a Gaussian mixture posterior algorithm. The algorithm first chooses candidates from different regions of the image that have different intensity values, and then Gaussian models are built from the chosen locations using their neighborhood pixels. Posterior testing is then carried out to obtain pre-segmented results, and neural-network-based training and testing are performed to obtain the final segmentation. Experimental results show that the proposed algorithm achieves 98% accuracy on the tested database images. In future work, the method can be extended to classify the different stages of melanoma from the collected images, which would help dermatologists detect melanoma at an early stage. Data collection will be the main step, since no standard stage-wise database exists.