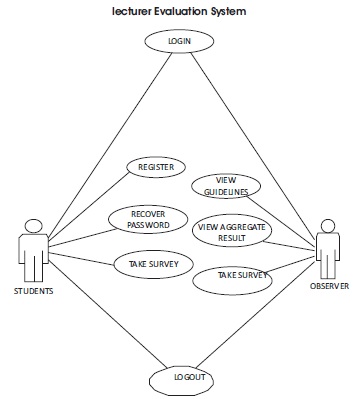

Figure 1. Lecturer Evaluation System Use Case Diagram

The evaluation of lecturers by students in higher institutions is important for monitoring and controlling academic quality. This work was conducted to evaluate lecturers' teaching methods at the Federal University of Technology, Minna (FUT Minna) using a survey evaluation technique implemented on the JavaFX platform. The scope of this work covers all undergraduate students in FUT Minna and the quality assurance staff. The Lecturer Evaluation System (LES) was developed by integrating various components of computer science. The participants for the LES were drawn from various departments in the institution and consisted of 20 students each from the 100 to 500 levels. The LES was evaluated using the System Usability Scale (SUS), and aggregations were obtained from students' reviews. Results showed that 90% of 400 level students and 60% of 200 level students preferred the system, 40% of 100 level students found the system’s usage tedious, and 50% of 300 level students and 30% of 500 level students found the system cumbersome to operate. It was concluded that the system was easy to interact with, that its operating process was not complex, and that it could be used to assess lecturers' teaching methods.

Quality assurance is a process of planning and systematic checking to see whether the requirements specified for a service in development are achieved (Jani, 2011). Evaluation of lecturers for the purpose of improving the quality of learning, which includes lecturers' credibility, work process, and efficiency, is a fundamental concern for all institutions of learning (Kassim, Johari, Rahim, & Buniyamin, 2017).

The diversity and complexity of learning in higher institutions is a major source of concern for many institutions. The importance of lecturers' competency and of their ability to mentor and motivate students towards academic and moral excellence cannot be overemphasized. Evaluation provides a beneficial report on strengths, weaknesses, and potential in any learning process (Chua & Kho, 2013). Richardson (2005) showed that lecturers' perceptions of their teaching became more consistent with their students' perceptions as a result of feedback in the form of students' evaluations. In other words, students' evaluations may change a teacher's self-perception.

In a technologically thriving world, the need for competitive teaching and learning becomes paramount both during the period of study and beyond the undergraduate level. The effectiveness of allowing students to evaluate their lecturers' performance has been widely debated, and many scholars deem this method controversial and inappropriate for measuring instructional effectiveness (Emery, Kramer, & Tian, 2003). To ensure that intellectual capacity building progresses, certain standards must be attained in the educational system (Jani, 2011), and these standards are related to the guidelines followed in the development of the Lecturer Evaluation System (LES).

In recent times, information can be transferred to any place in the world within seconds. Higher institutions must implement modern techniques to enable students to cope with changes in technology and the working environment (Jani, 2011). The traditional method either uses printed questionnaires, which students of various departments fill in manually, or uses SurveyMonkey to send surveys to students by email. Neither method is time and cost effective: the quality assurance budget may not always cover the software-as-a-service subscription on time, and the manual process lowers the integrity of the evaluation because printed questionnaires may not reach a large audience, which leaves insufficient data from all actors. Based on this, the Lecturer Evaluation System was developed to improve lecturer performance by obtaining regular, specific, and conceptual reports from the evaluations done by students and quality assurance staff (Winarno, 2017). The response and feedback of students are important in this case; otherwise, the experience of teaching and learning will be uninspiring for all involved (McLoone, O'Keeffe, Villing, & Brennan, 2014). The function of the LES is to monitor and control academic quality. The system facilitates the collection of individual surveys taken by students and their storage in a database before the commencement of examinations.

Therefore, the objective of this work is to evaluate lecturers' teaching methods at the Federal University of Technology, Minna (FUT Minna).

The use of student perception to evaluate lecturers is not new; it has existed for the past four decades, even though it cannot be concluded to be the best available technique for obtaining information about lecturing efficiency. Over the years, a lot of research has been carried out on the reliability of this technique (Emery et al., 2003).

Balachandran and Kirupananda (2017) developed a web-based evaluation system for higher institutions that uses online reviews gathered through Application Programming Interfaces (APIs), extracts the aspects of the reviews that concern the institution, and aggregates the sentiment of the reviews. The work showed that analyzing the quality of an institution through student reviews on an online platform is more efficient than any existing evaluation system used in higher institutions. The major drawback of the distributed system is fake reviews, which make judgment and aggregation tedious. The research achieved an accuracy of 72.56% using the Stanford CoreNLP Java language analysis library, which validates users’ entries using natural language processing and finds the ones that do not correlate. For an initial work, this is a good percentage.

The web-based educational evaluation system structure uses MySQL as the database for storing and retrieving data. This allows easy retrieval, reading, selection, and deletion of data, as well as the creation of relationships in the database. The front end is viewed through a web page implemented using JavaScript and PHP. PHP provides a platform for gathering, altering, and scrambling information in the database. Owing to its popularity, it is compatible with various platforms, such as Unix, Windows, and Macintosh. JavaScript is a progressive language that makes web pages intuitive and expressive (David, 2016). Security is the major concern captured in this paper because PHP is an open source programming language, which allows anyone to see the source code. The methodology used is free, which allows solid support for the application, and the PHP platform gives the application great portability. The overall objective is to help students gain adequate knowledge of developing educational websites using PHP (David, 2016).

Nasrudin et al. (2015) presented a peer evaluation system for assessing skills in team work, developed to solve problems in soft skill evaluation, which include non-transparency and complexity in the grading process. Apache 1.8.2-4 was used as the web server, PHP 5.3 as the programming language, MySQL 5.6 as the database, Macromedia Dreamweaver CS5.5 as the integrated development environment, and phpMyAdmin as the database management system. The application provides a platform for transparent evaluation owing to the web-based nature of the software. In addition, the system saves time in generating aggregates from the evaluation data. However, the application contains various steps that must be completed before an evaluation can be made, which makes it less time effective. In view of this result, the system can be a tool for improving student understanding and productivity.

The use of quality assurance and management in improving intellectual capacity in higher education by Jani (2011) is based on three standards: comprehensive coverage of quality management, specification of the standards met, and management of the standard's outlined steps. Quality assurance aims to produce consistent products that meet applicable requirements, coupled with constant improvement of the system. The objective of quality assurance and management is to improve student learning in different disciplines, with the expectation of producing high quality graduates and providing them with the skills required to solve problems in a constantly changing world. Consequently, new inventions contribute directly to intellectual capacity building in higher education (Jani, 2011).

Foy (1969) suggested a possible checklist of 43 statements, to each of which students could assign a value between 1 and 5, to compare an ideal lecturer with an actual one. The survey was carried out on a Lecturer X, who taught the same subject to two different groups of students within an interval of two and a half years. The correlation obtained was r = 0.93 in the first year and r = 0.71 in the second year. The result shows that the two groups of students had the same view about what they look for in an ideal lecturer and what they found in an actual one.

Sok-Foon, Sze-Yin, & Yin-Fah (2012) carried out a questionnaire survey to evaluate the effectiveness of lecturers in private universities in Malaysia. The survey focused on the following factors: Lecturer and Tutor Characteristics, Subject Characteristics, Studentship, Learning Resources and Facilities, and Overall Performance. The questionnaire had 33 items, and students responded on a 5-point scale (with 1 representing strongly disagree and 5 representing strongly agree). The 33 items were spread over the five factors measured: Lecturer and Tutor Characteristics (13 items), Subject Characteristics (6 items), Studentship (7 items), Learning Resources and Facilities (4 items), and Overall Performance (2 items). The reliability test on the 33 items gave a Cronbach alpha score of 0.961 (variance = 371.331). The survey indicated that lecturer and tutor characteristics (the characteristics and quality of the lecturer) were the most important factor in determining students' satisfaction with lecturers. Learning resources and learning facilities were ranked as the second most important factor influencing lecturer performance. Shevlin, Banyard, Davies, & Griffiths (2000) carried out a similar survey in the UK on Student Evaluation of Teaching (SET), with 213 undergraduate students. The survey focused on determining whether other factors, such as student characteristics and the physical environment, influenced how students judge their lecturers. They also highlighted that some factors cannot be measured when evaluating actual teaching performance. Most students consider the intellectual excitement and interpersonal rapport of their lecturers, while others consider respect for students, organization and presentation skills, and the ability to challenge students. It was concluded that 'Charisma' is the most important factor that students use when judging, and this can lead to biased results because the actual teaching performance of the lecturer is not considered.
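For reference, the reliability figure reported by Sok-Foon et al. can be read against the standard definition of Cronbach's alpha. The symbols below are not from the source: $k$ is the number of items (33 in that questionnaire), $s_i^2$ is the variance of item $i$ across respondents, and $s_T^2$ is the variance of the respondents' total scores. The source does not state which quantity its reported variance refers to, so no values are substituted here.

```latex
\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_{i=1}^{k} s_i^{2}}{s_T^{2}}\right)
```

Values of $\alpha$ close to 1 indicate that the items vary together, which is why a score of 0.961 is read as high internal consistency.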

Makondo and Ndebele (2014) investigated lecturers' reactions to the Student Evaluation of Teaching (SET) process. SET is an important process in a university: it can assist in making decisions, such as contract renewal and promotions, and can serve as evidence of teaching ability. It can be used as a guide for lecturers who wish to improve their lecturing skills and know their shortcomings. It was noted that results from SET should be examined carefully because, as Shevlin et al. (2000) stated, they can be biased: students are more likely to vote for a lecturer based on his/her popularity than on teaching skills. Students therefore need proper training on how to carry out SET surveys.

Foy (1969) criticized the 'lecture system' adopted by most lecturers in the university. The 'lecture system' is simply how lecturers expound their subject to students through a series of theoretical classes. There has been serious debate over the years as to how effectively lecturers can perform, owing to the lack of a scheduled training system for lecturers and, more importantly, the lack of a feedback mechanism to determine whether they are doing their work effectively. However, a feedback mechanism has always existed (Foy, 1969). The problem is that it is very rarely used, and most times very few students are willing to give feedback on their lecturers, out of fear and because they believe that their opinions are worthless.

The evaluation of lecturers in higher institutions of learning cannot be overemphasized: it promotes accountability and productivity, contributes to the development of our economy, and helps produce graduates of a global standard who can compete internationally. The creativity of students can only be fully harnessed if lecturers are dedicated to their duties and if different learning strategies are used to strengthen lecturers and equip them in areas where they are lacking. Higher institutions of learning, including Federal University of Technology, Minna, can use the LES for effective evaluation of lecturers, strengthening the lecturer-student relationship, maximizing student potential, improving learning skills, and enhancing creative thinking and innovation.

In the development of the Lecturer Evaluation System, requirements for the system were elicited from the Quality Assurance department and the Servicom Unit of Federal University of Technology, Minna. The oral interview method of requirements gathering was used. The requirements were translated into the use case diagram shown in Figure 1 below.

Figure 1. Lecturer Evaluation System Use Case Diagram

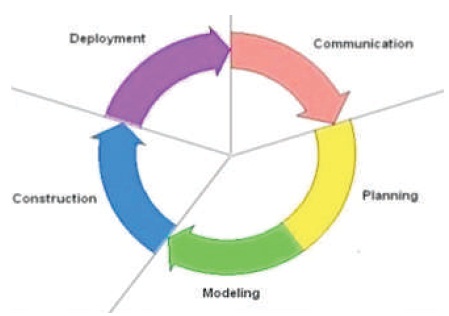

The context diagram, also known as a level 0 DFD, was used to show the high-level process of the LES, as shown in Figure 3.

The system was designed and developed using various tools and libraries: Scene Builder for the graphical user interface, the NetBeans IDE for coding the system functionalities, the JFoenix library (Google Material Design) for customizing the look and feel of user interface widgets, the Font Awesome library for icons, the ControlsFX library, and a Derby database to store the surveys completed by the students and by the quality assurance staff. The system was implemented using Java controller classes, following the prototyping model of the System Development Life Cycle (SDLC). Figure 2 shows how the development life cycle of the LES keeps improving on the current prototype based on client requirements (Bennett & Coleman, 2018). The Model-View-Controller (MVC) architecture and strict software engineering principles were followed.
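The separation of concerns implied by the MVC architecture can be illustrated with a minimal sketch in plain Java. This is not the LES source code: the class names `SurveyResponse`, `SurveyStore`, `InMemorySurveyStore`, and `SurveyController` are hypothetical, the 1 to 5 rating range for survey answers is an assumption, and an in-memory list stands in for the Derby database so that the example runs without a JDBC driver or JavaFX.

```java
import java.util.ArrayList;
import java.util.List;

// Model: one answer sheet for a course. All names in this sketch are hypothetical.
final class SurveyResponse {
    final String courseCode;
    final int[] ratings; // one rating per question, assumed to lie in 1..5

    SurveyResponse(String courseCode, int[] ratings) {
        this.courseCode = courseCode;
        this.ratings = ratings.clone();
    }
}

// Storage boundary; a real system could back this with a Derby table.
interface SurveyStore {
    void save(SurveyResponse response);
    int count();
}

// In-memory stand-in so the sketch runs without a database driver.
final class InMemorySurveyStore implements SurveyStore {
    private final List<SurveyResponse> rows = new ArrayList<>();

    @Override public void save(SurveyResponse response) { rows.add(response); }
    @Override public int count() { return rows.size(); }
}

// Controller: validates input arriving from the view, then updates the store.
final class SurveyController {
    private final SurveyStore store;

    SurveyController(SurveyStore store) { this.store = store; }

    /** Returns true if the response was valid and stored, false otherwise. */
    boolean submit(String courseCode, int[] ratings) {
        if (courseCode == null || courseCode.trim().isEmpty()) return false;
        if (ratings == null || ratings.length == 0) return false;
        for (int r : ratings) {
            if (r < 1 || r > 5) return false; // reject out-of-range answers
        }
        store.save(new SurveyResponse(courseCode, ratings));
        return true;
    }
}
```

Keeping storage behind an interface means the view and controller do not change if the in-memory list is replaced by a database-backed implementation.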

Figure 2. Evolution of LES using the Prototype Model (Musthafa, 2013)

Figure 3. Lecturer Evaluation System Data Flow Diagram (DFD)

The user is required to register on the system’s home page by providing his/her matric number, email, department, level, and password. These details do not appear on the reviewers' page, for fear of victimization; however, they are still required to avoid fake reviews. After registering, the user logs in using the text field and password field provided, as shown in Figure 4, to take a survey. After a successful login, a warning message (Figure 5) pops up to guide the student on how to take the survey. The guideline reminds the student of the purpose of the survey and of the need to take it without prejudice or bias. Lastly, no survey is accepted from one week before the commencement of the semester exams. The criteria used for the evaluation range from:

Figure 4. Lecturer Evaluation System Login and Register Page

Figure 5. Lecturer Evaluation System Home Page

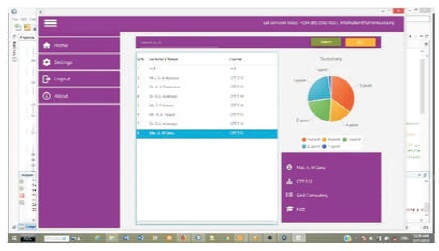
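The rule that surveys are rejected from one week before the semester exams can be expressed as a simple date check. The sketch below is illustrative only: the class name `SurveyWindow` and the method `isOpen` are hypothetical, and it assumes that a submission made exactly seven days before the exam start date is already rejected, which the source does not specify.

```java
import java.time.LocalDate;

// Hypothetical helper; not part of the LES source code.
final class SurveyWindow {
    private SurveyWindow() {}

    /**
     * Returns true if a survey submitted on `today` is still accepted.
     * Assumption: the window closes at the start of the day that lies
     * exactly one week before `examStart`.
     */
    static boolean isOpen(LocalDate today, LocalDate examStart) {
        LocalDate cutoff = examStart.minusWeeks(1);
        return today.isBefore(cutoff);
    }
}
```

For example, with a made-up exam start date of 17 June, a submission on 9 June is accepted, while submissions on 10 June or later are rejected.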

After taking the survey, the student submits it by clicking the submit button. The other user of the system is the quality assurance staff, who have access to the evaluation interface and the evaluation guideline, together with the aggregate result of all the surveys taken by students on each course and its lecturer. Figure 6 shows how the executive summary is displayed to the quality assurance staff on the system.
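The aggregate result per course described above could be computed along the following lines. This is a sketch under assumptions rather than the LES implementation: the class `SurveyAggregator`, the nested `Rating` type, and the choice of the arithmetic mean as the aggregate are all hypothetical, since the source does not say how the executive summary is calculated.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical aggregation helper; not part of the LES source code.
final class SurveyAggregator {
    private SurveyAggregator() {}

    /** One submitted rating for a course; both fields are illustrative. */
    static final class Rating {
        final String courseCode;
        final int value;

        Rating(String courseCode, int value) {
            this.courseCode = courseCode;
            this.value = value;
        }
    }

    /** Mean rating per course, keyed by course code in sorted order. */
    static Map<String, Double> meanByCourse(List<Rating> ratings) {
        // For each course keep {sum of ratings, number of ratings}.
        Map<String, int[]> sumAndCount = new TreeMap<>();
        for (Rating r : ratings) {
            int[] acc = sumAndCount.computeIfAbsent(r.courseCode, k -> new int[2]);
            acc[0] += r.value;
            acc[1]++;
        }
        Map<String, Double> means = new TreeMap<>();
        for (Map.Entry<String, int[]> e : sumAndCount.entrySet()) {
            means.put(e.getKey(), (double) e.getValue()[0] / e.getValue()[1]);
        }
        return means;
    }
}
```

The same grouping step could feed a chart instead of a mean, for instance by counting how many ratings of each value a course received.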

Figure 6. Lecturer Evaluation System Executive Summary of Survey taken by Students

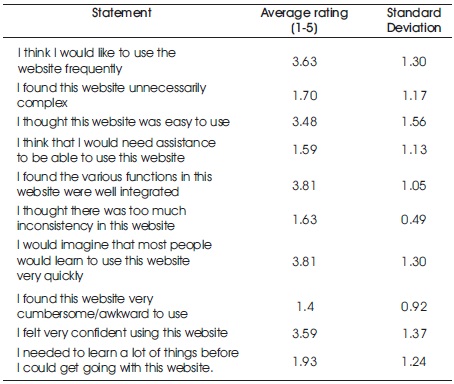

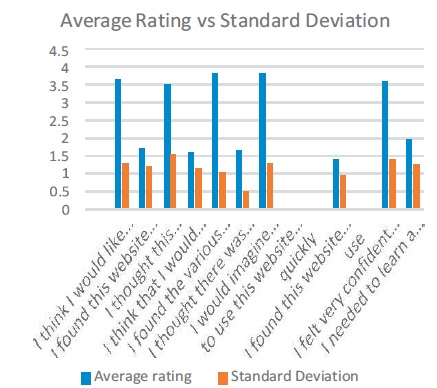

The evaluation was conducted to determine the usability of the system at Federal University of Technology, Minna. The participants for the evaluation were drawn from various departments in the institution and consisted of 8 students each from the 100 to 500 levels. The evaluation was carried out using a 5-point Likert scale questionnaire. The System Usability Scale, developed by John Brooke in 1986 for fast and efficient evaluation of software, was adopted (Thomas, 2015). All sets of students were surveyed using paper questionnaires to obtain their reviews of the application's user interactivity. Table 1 shows the average and standard deviation of the ratings given by the students who responded to the survey for each statement. Each student rated each statement on a scale of 1 (strongly disagree) to 5 (strongly agree).
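The statistics involved can be sketched as follows. The source reports per-statement averages and standard deviations, not a composite score, so the `score` method below shows only the conventional SUS scoring for a ten-item questionnaire with alternating positively and negatively worded statements; whether the LES questionnaire had exactly this form is an assumption. The class name `SusScore` is hypothetical, and the sample (n - 1) form of the standard deviation is also an assumption, since the source does not say which form was used.

```java
// Hypothetical helper illustrating the statistics; not part of the LES source code.
final class SusScore {
    private SusScore() {}

    /** Conventional SUS score (0 to 100) for one respondent's ten answers, each in 1..5. */
    static double score(int[] responses) {
        if (responses.length != 10) {
            throw new IllegalArgumentException("SUS expects ten responses");
        }
        int sum = 0;
        for (int i = 0; i < 10; i++) {
            int r = responses[i];
            if (r < 1 || r > 5) {
                throw new IllegalArgumentException("response out of range: " + r);
            }
            // Items 1, 3, 5, 7, 9 (even indices) contribute r - 1;
            // items 2, 4, 6, 8, 10 (odd indices) contribute 5 - r.
            sum += (i % 2 == 0) ? r - 1 : 5 - r;
        }
        return sum * 2.5;
    }

    /** Arithmetic mean of the ratings given to one statement. */
    static double mean(double[] xs) {
        double sum = 0;
        for (double x : xs) sum += x;
        return sum / xs.length;
    }

    /** Sample standard deviation (n - 1 denominator); needs at least two values. */
    static double sampleStdDev(double[] xs) {
        double m = mean(xs);
        double squares = 0;
        for (double x : xs) squares += (x - m) * (x - m);
        return Math.sqrt(squares / (xs.length - 1));
    }
}
```

Under this scoring, a respondent who answers 3 to every statement scores 50, and the most favourable possible answer pattern scores 100.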

Table 1. LES Evaluation Results

The results presented in Table 1 and Figure 7 show that the system was easy to use and that its operating process was not complex, which made it easy to interact with. The application functions were found to be well integrated. Responses from the participants showed that 90% of 400 level students and 60% of 200 level students preferred the system, 40% of 100 level students found the system’s usage tedious, and 50% of 300 level students and 30% of 500 level students found the system cumbersome to operate. The majority of the respondents believed that the anonymity of the system allowed them to express their feelings without fear. Overall, the students enjoyed using the system to contribute their views to the lecturer evaluation process.

Figure 7. Average Rating and Standard Deviation of Results

This paper shows the need to check the inadequate performance of some lecturers and to recommend a proper line of action by institutional management. A computerized, rich Internet lecturer evaluation system that uses students' responses as data in the evaluation process can serve as an alternative solution to assist the traditional evaluation process. The research also evaluated the system using samples of students in FUT Minna, Nigeria, and found that a large percentage of students preferred this online evaluation system to the manual system. The system allows the quality assurance unit to view the lecturer evaluation reviews in a generated pie chart.

Future work involves extending the evaluation to all university units, including the Health Service Unit, Sport Services, Cafeteria, and non-academic staff.

The authors want to thank the Quality Assurance Unit of Federal University of Technology, Minna, for providing useful information used for this project. The authors also want to thank all the students who partook in the surveys of our LES.