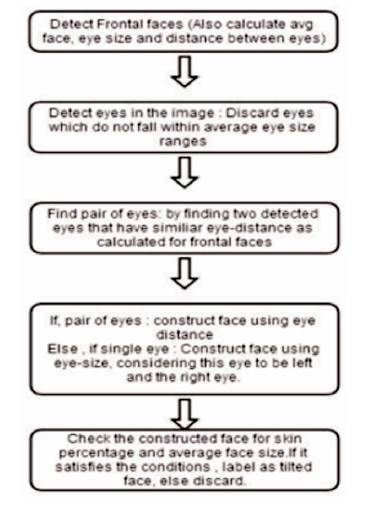

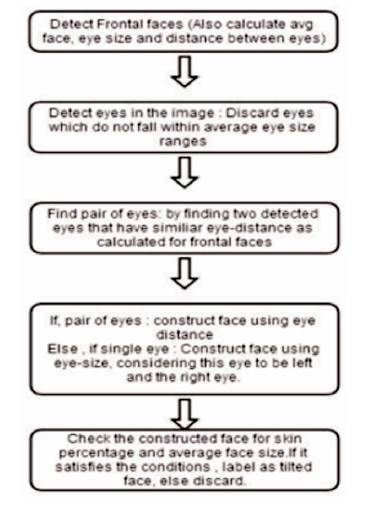

Figure 1. Steps for ViolaJones Algorithm

A popular feature of many Online Social Networks (OSNs) is photo tagging and sharing, which allows users to annotate uploaded images with the people who appear in them. To protect users' privacy, a Facial Recognition (FR) system has been designed for use during the sharing, posting and liking of photos. An increasing number of personal photographs are uploaded to OSNs, and these photos do not exist in isolation. The FR system is superior to some other possible approaches in terms of recognition ratio and efficiency. To achieve this, the OSN specifies a privacy policy and an exposure policy. Under these policies, individuals who appear in a photo are asked for permission before a co-photo is posted [11]. Exploring computational techniques that take advantage of these trends while keeping training sets confidential seems a worthwhile endeavor. To share photos safely we need an effective FR system that can recognize everyone in the photo. We also use users' private photos to design an adaptive FR system specifically for sharing a photo with the permission of the people in it. Finally, the system protects users' privacy in photo sharing over OSNs.

Facebook is the largest photo sharing site on the Internet, with 1 billion photos uploaded monthly. Integrating photo sharing within social network sites has also provided the opportunity for user tagging: annotating images and linking them to the identities of the people in them. Online Social Networks (OSNs) have become an integral part of our daily life and have profoundly changed the way we interact with each other, fulfilling our social needs: the needs for social interaction, information sharing, appreciation and respect.

“Photo Tagging” is a popular feature of many social network sites that allows users to annotate uploaded images with the people who are in them, explicitly linking the photo to each person's profile. It is in the nature of social media that people put more content, including photos, on OSNs without much thought about that content. The act of labeling identities on personal photos is called “Face Annotation” or “Name Tagging”. There is no restriction on the sharing of co-photos; on the contrary, social network service providers like Facebook encourage users to post co-photos and tag their friends in order to get more people involved.

Facial recognition systems are commonly used for security purposes but are increasingly being used in a variety of other applications. Most current facial recognition systems work with numeric codes called face prints. These systems work by capturing data for nodal points on a digital image of an individual's face and storing the resultant data as a face print.

Facebook uses Facial Recognition software to help automate user tagging in photographs. Each time an individual is tagged in a photograph, the software stores information about that person's facial characteristics. When enough data has been collected to identify a person, the system uses that information to identify the same face in different photographs and subsequently suggests tagging those pictures with that person's name.

OSNs play an important role in sourcing image annotations and provide information about social interactions among individuals for automatic image understanding. Nowadays innumerable photos and their context are drawn from OSNs such as Facebook. Many Facebook photos contain people, and these photos are an extreme challenge for the datasets used in face recognition systems. The main use of an OSN is to share personal photos and videos online. OSNs enable a user to maintain a list of contacts and to share image and video content with other OSN members. The key idea is that the members of an OSN have strongly correlated real-world activities when they are friends, family members, or co-workers. By computing the correlation between the personal context models of the OSN members, the accuracy of event-based image annotation can be significantly improved. In an OSN, fusion methods operating at the level of feature extractors or features are less suited from an implementation point of view. Indeed, FR engines that belong to different members of the OSN may use different FR techniques [12].

Privacy management is not about setting rules and enforcing them; rather, it is the continual management of boundaries between different spheres of action and degrees of disclosure within those spheres. Boundaries move dynamically as the context changes. These boundaries reflect tensions between conflicting goals; boundaries occur at points of balance and resolution. The significance of information technology, in this view, lies in its ability to disrupt or destabilize the regulation of boundaries. Information technology plays multiple roles. It can form part of the context in which the process of boundary maintenance is conducted; transform the boundaries; be a means of managing boundaries; mediate representations of action across boundaries; and so forth. However, to better understand the role of technology, additional precision about these boundaries is needed.

A mutually accepted privacy policy determines which information should be posted and shared. To achieve this, OSN users are asked to specify two policies: a privacy policy and an exposure policy. Together, these two policies specify how a co-photo can be accessed. However, before examining these policies, finding the identities in co-photos is the first and probably the most important step. A game-theoretic scheme is defined in which the privacy policies are collaboratively enforced over the shared data. Each user is able to define his/her privacy policy and exposure policy, and a photo can be posted only once it has been processed with the owner's privacy policy and the co-owners' exposure policies. However, the co-owners of a co-photo cannot be determined automatically; instead, potential co-owners can only be identified by using the tagging features of current OSNs.

The privacy policy defines the group of users who are able to access a photo when the user is its owner. The request is sent when a friend request is accepted: if the accepted friend is a close friend, they can accept this privacy policy request once, and the owner can then share their photos at any time without sending a request each time a photo is posted.

The exposure policy defines the group of users who are able to access a photo when the user is a co-owner. The exposure policy is treated as private data that shall not be revealed, and a secure set intersection protocol is used to find the access policy. With this type of policy, a request is sent each time a photo is posted on the OSN.
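As a rough illustration of how the two policies combine, the access policy for a co-photo can be sketched as the intersection of the owner's privacy policy with each co-owner's exposure policy. The sketch below uses a plain set intersection only; it is not the secure set intersection protocol mentioned above and does not keep the exposure policies private. The function name `access_policy` and the user names are hypothetical.

```python
def access_policy(privacy_policy, exposure_policies):
    """Return the users allowed to view a co-photo.

    privacy_policy: set of users chosen by the photo owner.
    exposure_policies: iterable of sets, one per co-owner.
    Assumption: a viewer must appear in every policy (plain intersection).
    """
    allowed = set(privacy_policy)
    for policy in exposure_policies:
        allowed &= set(policy)  # keep only users this co-owner also accepts
    return allowed


# Hypothetical example data, not taken from the paper.
owner_privacy = {"alice", "bob", "carol", "dave"}
co_owner_exposures = [{"bob", "carol", "erin"}, {"carol", "bob", "frank"}]
print(sorted(access_policy(owner_privacy, co_owner_exposures)))
```

A real deployment would replace the `&=` step with a privacy-preserving protocol so that no party learns another party's full policy.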

Traditional image search systems rely on user-specified words to describe the images being looked for. A facial recognition system is a computer application for automatically identifying or verifying a person from a digital image or a video frame from a video source. One way to do this is by comparing selected facial features from the image against a facial database. The majority of facial recognition solutions are based on a number of common approaches or algorithms. A common approach is to measure the relative distances between the eyes, ears and nose of a face detected in an image. These measurements are then used in an algorithm to find other faces with similar features. Another approach is to use an image as a yardstick and then apply template matching techniques.

The challenges in an FR system are as follows.

Facial recognition systems require an understanding of the features of the person whom they are supposed to recognize and identify. This is usually referred to as the reference point for that person. Some facial recognition systems require only 1 sample image to start with, whilst others require more, some 30 to 40 sample images. In practice, the smaller the set of sample images, the less accurate the recognition and identification of the faces in the processed image will be.

The Viola-Jones object detection framework was proposed by Paul Viola and Michael Jones in 2001. It was one of the first methods to provide object detection at very fast rates, achieving rapid and accurate object detection through AdaBoost machine learning. The Viola-Jones face detection algorithm was used for detecting face images in personal photos. The accuracy of the Viola-Jones face detection algorithm may be problematic depending on the targeted application as well as the associated parameter setup. Hence, more advanced face detection techniques could be used in our face annotation framework (such as techniques that are robust against severe pose variation), allowing for more accurate face detection results. Viola-Jones is a widely used method for real-time object detection, in which each image contains 10 to 50 thousand locations and scales to examine. The detected face images of individuals who appear at least ten times in each photo collection used were labelled manually. Also, all of the detected face images were individually rotated and rescaled to 86x86 pixels using the center coordinates of the eyes. The algorithm has four main stages: Haar feature selection, creation of the integral image, AdaBoost training and cascaded classifiers.

The Major features of Viola Jones Algorithm are

When faces are detected using OpenCV's implementation of the Viola-Jones algorithm, tilted faces usually go undetected. Our proposed methodology focuses on the detection of tilted faces using skin detection and eye detection. First, normal faces are detected using the basic Viola-Jones face detector, and false positives are removed using the skin detection method. From these correctly detected faces, the average face size and the normal distance between the eyes are calculated, as shown in Figure 1.

Skin detection can be done using two colour spaces, HSV (Hue, Saturation and Value) and YCrCb (Y is the luma component, Cr the red difference and Cb the blue difference), and eyes are used to identify the tilted faces. As a result, it is found that skin and eyes together make it possible to detect tilted faces. Our proposed method has an accuracy of about 88.89% for tilted faces. To detect tilted faces, eye detection is performed over the image. Pairs of eyes are chosen in accordance with the data calculated before, and face areas are constructed. All these face areas are then checked against the skin percentage threshold and, if they pass, marked as tilted faces.

The detected face region of interest is converted from the RGB colour space to the HSV and YCrCb colour spaces for detecting the skin percentage. Locating and tracking human faces is a prerequisite for face recognition and/or facial expression analysis, although it is often assumed that a normalized face image is available. In order to locate a human face, the system needs to capture an image using a camera and a frame-grabber, process the image, search it for important features and then use these features to determine the location of the face. For detecting faces, various algorithms such as skin-color-based algorithms are available. Color is an important feature of human faces. Using skin color as a feature for tracking a face has several advantages.

Skin color classification has gained increasing attention in recent years due to the active research in content-based image representation. For instance, the ability to locate an image object as a face can be exploited for image coding, editing, indexing or other user interactivity purposes. Moreover, face localization also provides a good stepping stone in facial expression studies. It would be fair to say that the most popular approach to face localization is the use of color information, whereby estimating areas with skin color is often the first vital step of such a strategy. Hence, skin color classification has become an important task. Much of the research in skin-color-based face localization and detection is based on the RGB, YCbCr and HSV color spaces.

The RGB color space consists of the three additive primaries: red, green and blue. Spectral components of these colors combine additively to produce a resultant color. The RGB model is represented by a 3-dimensional cube with red green and blue at the corners. Black is at the origin. White is at the opposite end of the cube. The gray scale follows the line from black to white. In a 24-bit color graphics system with 8 bits per color channel, red is (255, 0, 0). On the color cube, it is (1, 0, 0).

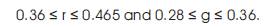

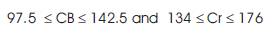

The RGB model simplifies the design of computer graphics systems but is not ideal for all applications. The red, green and blue color components are highly correlated. This makes it difficult to execute some image processing algorithms. Many processing techniques, such as histogram equalization, work on the intensity component of an image only. The color level value ranges in the skin area as follows (R: red, G: green, B: blue):

The YCbCr color space has been defined in response to the increasing demands of digital algorithms. The family includes others such as YUV and YIQ. YCbCr is a digital color system, while YUV and YIQ are analog spaces for the PAL and NTSC systems respectively. These color spaces separate RGB (Red-Green-Blue) into luminance and chrominance information and are useful in compression applications; however, the specification of colors is somewhat unintuitive. Recommendation 601 specifies 8-bit (i.e. 0 to 255) coding of YCbCr, whereby the luminance component Y has an excursion of 219 and an offset of +16. This coding places black at code 16 and white at code 235. In doing so, it reserves the extremes of the range for signal processing footroom and headroom. The chrominance components Cb and Cr, on the other hand, have excursions of ±112 and an offset of +128, producing a range from 16 to 240 inclusive. The rules for YCbCr skin color levels (Cb, Cr: two chroma components from R, G and B levels):
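As a hedged illustration of the Recommendation 601 coding described above (not of the skin-color rules, which are not reproduced here), the following sketch converts an 8-bit RGB triple to 8-bit YCbCr codes. It assumes the standard Rec. 601 luma weights 0.299, 0.587 and 0.114; the function name `rgb_to_ycbcr601` is hypothetical.

```python
def rgb_to_ycbcr601(r, g, b):
    """Convert 8-bit R, G, B (0-255) to 8-bit Rec. 601 Y, Cb, Cr codes."""
    rn, gn, bn = r / 255.0, g / 255.0, b / 255.0
    # Luma from the Rec. 601 weights (assumed, not stated in the text).
    y = 0.299 * rn + 0.587 * gn + 0.114 * bn
    # Colour differences scaled to the range [-0.5, 0.5].
    pb = (bn - y) / 1.772  # 1.772 = 2 * (1 - 0.114)
    pr = (rn - y) / 1.402  # 1.402 = 2 * (1 - 0.299)
    # Y: excursion 219, offset 16. Cb/Cr: excursion +/-112, offset 128.
    return (round(16 + 219 * y),
            round(128 + 224 * pb),
            round(128 + 224 * pr))


print(rgb_to_ycbcr601(0, 0, 0))        # black
print(rgb_to_ycbcr601(255, 255, 255))  # white
```

Black and white land on codes 16 and 235 for Y, and saturated blue and red reach the chroma maximum of 240, matching the ranges quoted in the text.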

In the HSV color model, H, S and V stand for Hue, Saturation and Value respectively. The HSV color domain for skin color lies in the following range:

The detected region of interest is taken from the image and converted into the YCrCb and HSV colour spaces. For each colour space, the pixels that fall in the skin domain are converted to white and the pixels that do not are converted to black. The skin ratio of the region of interest is the ratio of the number of white pixels to the total number of pixels in the face. If the ratio is above 70% (the threshold value), the detected part is considered a face; otherwise, it is a falsely detected region. From our observations, 70% is a good threshold value because a face detection includes background, hair, eyes, etc., which do not fall in the skin colour range. Also, whenever an image is 100% skin in both colour spaces, it is discarded, because this is possible only when the detection contains no colour outside the skin colour range, which is not possible for faces.
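The skin-ratio check can be sketched as follows. This is a minimal illustration under stated assumptions: the binary masks are taken as already computed (the skin ranges themselves are not reproduced here), both colour spaces are required to pass the 70% threshold, and the names `skin_ratio` and `is_face` are hypothetical.

```python
def skin_ratio(mask):
    """Fraction of white (True) pixels in a 2-D binary skin mask."""
    total = sum(len(row) for row in mask)
    white = sum(1 for row in mask for px in row if px)
    return white / total if total else 0.0


def is_face(mask_ycrcb, mask_hsv, threshold=0.70):
    """Accept a detected region as a face based on its skin ratio.

    Assumption: the region must exceed the threshold in both colour spaces.
    A region that is 100% skin in both is discarded, as described above.
    """
    r_ycrcb = skin_ratio(mask_ycrcb)
    r_hsv = skin_ratio(mask_hsv)
    if r_ycrcb == 1.0 and r_hsv == 1.0:
        return False  # no hair, eyes or background: not a plausible face
    return r_ycrcb > threshold and r_hsv > threshold


# Hypothetical 2x5 masks: 8 of 10 pixels classified as skin.
mask = [[True, True, True, True, False],
        [True, True, True, True, False]]
print(is_face(mask, mask))
```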

The stages of the Viola-Jones algorithm involve the following processes:

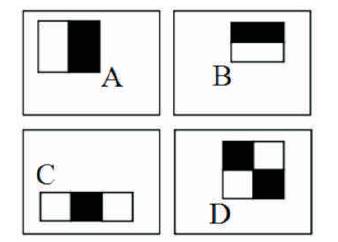

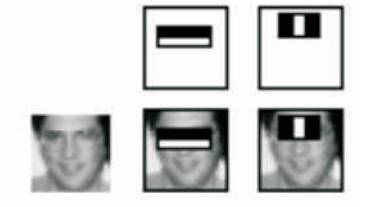

The Viola-Jones face detector basically detects only frontal, non-tilted faces through the classifier. The classifier cannot be trained for every tilted face, and classification is done on the basis of Figure 2.

Figure 2. Haar Feature Selection

It is observed that eyes are always present whether the face is frontal or tilted. First, the average eye width and average eye distance are calculated with the help of the correct faces already detected. After the eyes are detected, the following parameters are used for detecting the tilted faces and forming their regions of interest. For these distances, the following equations are used.

Where,

hface = height of the face,

deye = distance between eyes,

heye = height of eyes and

weye = width of eyes.

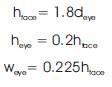

Rapid detection of objects requires the computation of Haar features, and computing them requires an integral image, as shown in Figure 3. The integral image is obtained using a few operations per pixel. After this computation, Haar features of any type can be computed in constant time.

Figure 3. Creation of Integral Images

The integral image computes a value at each pixel (x,y) that is the sum of the pixel values above and to the left of (x,y), inclusive. This can quickly be computed in one pass through the image.
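The one-pass computation and the constant-time rectangle sum can be sketched as below. This is an illustrative sketch, not the implementation used in this work: it pads the integral image with a leading row and column of zeros so that no bounds checks are needed, and the function names are hypothetical.

```python
def integral_image(img):
    """Return a (h+1) x (w+1) integral image of a 2-D list of pixel values.

    ii[y + 1][x + 1] is the sum of img over all rows <= y and columns <= x.
    """
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0  # running sum of the current row
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the w x h rectangle with top-left corner (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Two-rectangle Haar-like feature: left half minus right half."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2))  # 5 + 6 + 8 + 9 = 28
```

Each rectangle sum needs only four array lookups regardless of the rectangle's size, which is why a Haar feature built from a few rectangles costs constant time.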

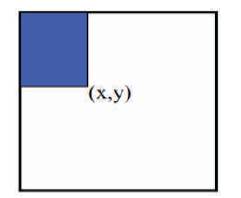

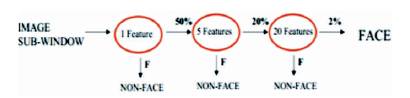

The AdaBoost learning algorithm creates efficient classifiers by extracting important visual features from the feature set. For fast classification, as illustrated in Figure 4, learning must exclude the majority of the features available in the image. The algorithm extracts critical features while discarding all other, unimportant features.

Figure 4. Adaboost Training method
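To make the feature selection idea concrete, the sketch below shows textbook discrete AdaBoost with threshold "stumps" as weak classifiers: each round picks the single feature and threshold with the lowest weighted error, so only a few features end up in the final classifier. This is a generic illustration, not the exact weight-update variant of the Viola-Jones paper; all names and the toy data are hypothetical.

```python
import math


def stump(f, j, thr, pol):
    """Weak classifier: +pol if feature j is at least thr, otherwise -pol."""
    return pol if f[j] >= thr else -pol


def train_adaboost(features, labels, rounds):
    """features[i][j] is feature j of sample i; labels are +1 or -1."""
    n, m = len(features), len(features[0])
    weights = [1.0 / n] * n
    strong = []  # list of (alpha, feature index, threshold, polarity)
    for _ in range(rounds):
        best = None
        for j in range(m):
            for thr in sorted({f[j] for f in features}):
                for pol in (1, -1):
                    err = sum(w for w, f, y in zip(weights, features, labels)
                              if stump(f, j, thr, pol) != y)
                    if best is None or err < best[0]:
                        best = (err, j, thr, pol)
        err, j, thr, pol = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        strong.append((alpha, j, thr, pol))
        # Increase the weight of misclassified samples, then renormalise.
        weights = [w * math.exp(-alpha * y * stump(f, j, thr, pol))
                   for w, f, y in zip(weights, features, labels)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return strong


def predict(strong, f):
    """Sign of the weighted vote of the selected weak classifiers."""
    score = sum(a * stump(f, j, thr, pol) for a, j, thr, pol in strong)
    return 1 if score >= 0 else -1


# Toy data: feature 0 separates the classes, feature 1 does not.
X = [[1, 5], [2, 1], [3, 4], [4, 2]]
y = [-1, -1, 1, 1]
model = train_adaboost(X, y, rounds=1)
print([predict(model, f) for f in X])
```

On this toy data the single selected stump uses feature 0, illustrating how uninformative features are simply never chosen.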

The “cascade” classifier focuses on object-like regions and discards the background. A cascade is a mechanism that knows its region of attention; the discarded regions are not likely to contain any object. This makes real-time detection very fast, as illustrated in Figure 5.

Figure 5. Cascaded Classifiers

A single-feature classifier, shown in Figure 6, achieves a 100% detection rate and about a 50% false positive rate. A 5-feature classifier achieves a 100% detection rate and a 40% false positive rate (20% cumulative) using data from the previous stage. A 20-feature classifier achieves a 100% detection rate with a 10% false positive rate (2% cumulative). From the experimental results and analysis, it is concluded that the Viola-Jones algorithm was improved by applying skin detection, skin percentage and eye detection.

Figure 6. Feature Classifiers
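The cumulative figures quoted for the three stages follow from multiplying the per-stage false positive rates, since a background window must pass every stage to survive. The sketch below reproduces that arithmetic and shows the early-rejection behaviour of a cascade; the function names and the stage representation (a list of callables) are assumptions made for illustration.

```python
def cumulative_rates(stage_rates):
    """Running product of per-stage rates through the cascade."""
    cumulative, running = [], 1.0
    for rate in stage_rates:
        running *= rate
        cumulative.append(running)
    return cumulative


def cascade_accepts(window, stages):
    """True only if every stage accepts the window.

    all() stops at the first rejecting stage, so most background
    windows are discarded after evaluating only the cheap early stages.
    """
    return all(stage(window) for stage in stages)


# Per-stage false positive rates from the text: 50%, 40%, 10%.
fp = cumulative_rates([0.50, 0.40, 0.10])
print([round(rate, 4) for rate in fp])  # [0.5, 0.2, 0.02]
```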

Skin and eyes play an important role in enhancing the efficiency of the Viola-Jones algorithm. As a result, tilted as well as frontal faces are detected. The efficiency of the algorithm is 88.89% when noise is present in an image; otherwise detection is almost 100%, which is not achieved by plain Viola-Jones face detection.

Face detection can also count the number of detected faces in an image. To each face detected in the image it assigns a dominance factor, i.e. how dominant the face is in the image relative to the other faces. When an image is processed, the number of faces is counted and each detected face's dominance factor is calculated. These are then treated as attributes of the image, just like any other extracted attributes, e.g. Colour Layout, Colour Histogram, Edging Information or Texture Information. These attributes are used when searching for images that are similar to a sample image. The benefits of this face detection feature are:

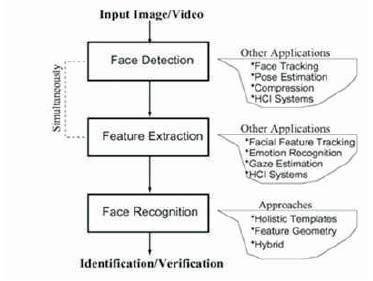

The problem of automatic face recognition involves three key steps/subtasks: (1) detection and rough normalization of faces, (2) feature extraction and accurate normalization of faces, (3) identification and/or verification. Sometimes, different subtasks are not totally separated. For example, the facial features (eyes, nose, mouth) used for face recognition are often used in face detection. Depending on the nature of the application, for example, the sizes of the training and testing databases, clutter and variability of the background, noise, occlusion, and speed requirements, some of the subtasks can be very challenging. Figure 7 shows the Identification of Image.

Figure 7. Identification of images

The first step in any automatic face recognition system is the detection of faces in images. After a face has been detected, the task of feature extraction is to obtain features that are fed into a face classification system. Depending on the type of classification system, the features can be local features such as lines or fiducial points, or facial features such as eyes, nose, and mouth. Face detection may also employ features, in which case features are extracted simultaneously with face detection. Feature extraction is also key to animation and to the recognition of facial expressions. Without considering feature locations, face detection is declared successful if the presence and rough location of a face have been correctly identified. However, without accurate face and feature location, noticeable degradation in recognition performance is observed.

After faces are located, the faces and their features can be tracked. Face tracking and feature tracking are critical for reconstructing a face model (depth) through structure from motion (SfM), and feature tracking is essential for facial expression recognition and gaze recognition. Tracking also plays a key role in spatiotemporal-based recognition methods. In its most general form, tracking is essentially motion estimation. However, general motion estimation has fundamental limitations such as the aperture problem. In general, tracking and modeling are dual processes: tracking is constrained by a generic 3D model or a learned statistical model under deformation, and individual models are refined through tracking.

The private information of a user consists of his/her privacy and exposure policies, friend list and private training data sets. This private information is protected from a semi-honest adversary. Privacy is not monotonic; that is, more privacy is not necessarily better. Indeed, both “crowding” and “isolation” are the result of privacy regulation gone wrong. Privacy states are relative to what is desired and what is achieved; one can be in the presence of others but feel isolated or crowded depending on the degree of sociability sought. The goal of privacy regulation is to modify and optimize behaviors for the situation to achieve the desired state along the spectrum of openness and closedness. To that end, people employ “a network of behavioral mechanisms”; privacy regulation includes much more than just the physical environment in the management of social interaction.

The application is developed with the PHP framework on a WAMP server, with SQLYOG as the backend for connectivity. SQLYOG acts as the database for storing all the details. The framework of this system involves (i) User Login, (ii) Add Friends, (iii) Photo Sharing and (iv) Photo Posting [11].

A log in/out button is used to log in or out with Facebook. After logging in, a greeting message and the profile picture are shown. Users can log in if they already have a Facebook account; if not, they have to sign up for one. The login and signup page has been developed on the basis of the Facebook front page: a new user can sign up, and an existing user can use the login menu to log in to the Facebook account. A new user's details are stored in the database once the signup button is clicked. The user logs in with a username and password through the login menu; if the given password and the stored password do not match, the user cannot log in.

Friends are added to the Facebook circle by clicking the “Add Friends” menu. Facebook allows us to create lists of friends such as “close friends” or “Acquaintances”. We can share a photo only with friends on a list. The friend list should be the intersection of the owner's privacy policy and the co-owners' exposure policies.

Photos are shared once the “post” button is clicked. Notifications are sent along with the permission requests.

The photo is posted only after the privacy or exposure policy has been accepted; otherwise, the photo is shown blurred.

One study examines the statistics of photo sharing on social networks and proposes a three-realm model: “a social realm, in which identities are entities, and friendship a relation; second, a visual sensory realm [8], of which faces are entities, and co-occurrence in images a relation; and third, a physical realm, in which bodies belong, with physical proximity being a relation [4].” The authors show that any two realms are highly correlated. Given information in one realm, we can give a good estimate of the relationships in another realm [1]. For the first time, the authors propose to use the contextual information in the social realm and the co-photo relationship to do automatic FR. They define a pairwise Conditional Random Field (CRF) model [2] to find the optimal joint labeling by maximizing the conditional density [7]. Specifically, they use the existing labeled photos as training samples and combine the photo co-occurrence statistics with the baseline FR score to improve the accuracy of face annotation [5]. Other work discusses the difference between the traditional FR system and an FR system designed specifically for OSNs [3], pointing out that a customized FR system for each user is expected to be much more accurate on his/her own photo collections [9]. In similar work, Choi et al. [6] proposed using multiple personal FR engines that work collaboratively to improve the recognition ratio. Specifically, they use the social context to select the suitable FR engines that contain the identity of the queried face image with high probability [10].

The application is implemented with PHP as the front end and SQLYOG as the backend, with Facebook as the platform.

The system is evaluated against two criteria: network-wide performance and facial recognition performance. The former captures the real-world performance of our design on large-scale OSNs in terms of computation cost, while the latter is an important factor for the user experience. Face detection and the Eigenface method are carried out by the Face Recognition (FR) system [11]. Figure 8 shows the Graphical User Interface (GUI): a log in/out button is used to log in or out with Facebook, and after logging in, a greeting message and the profile picture are shown. Our prototype works in three modes: a setup mode, a sleeping mode and a working mode.

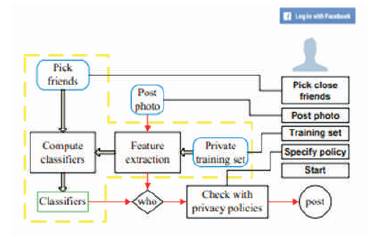

Figure 8. System Structure of the Application

In the setup mode, the program works towards the establishment of the decision tree. For this purpose, the private training set and the neighborhood need to be specified. When the “private training set” button is pressed, photos in the specified galleries can be selected and added. To set up the neighborhood at this stage, a user needs to manually specify the set of “close friends” among their Facebook friends with the “Pick friends” button. According to Facebook statistics, a user has 130 friends on average; we assume only a small portion of them are “close friends”. In this application, each user picks up to 30 “close friends”. Note that all the selected friends are required to install the application in order to carry out the collaborative training. The setup mode is activated by pressing the “Start” button. Key operations and the data flow in this mode are enclosed by a yellow dashed box in the system architecture of Figure 8.

After the classifiers are obtained, the decision tree is constructed and the program switches from the setup mode to the sleeping mode. Facebook allows us to create lists of friends such as “close friends” or “Acquaintances”, and we can share a photo only with the friends on a list. The friend list should be the intersection of the owner's privacy policy and the co-owners' exposure policies. That means we cannot customize a friend list to share a co-photo. Currently, when the “Post Photo” button is pressed, the co-owners are identified and notifications are sent to them to request permission. If they all agree to post, the owner shares the photo on the owner's page like a normal photo. In this sense, users can specify their privacy policy, but their exposure policies are either everybody on earth or nobody, depending on their attitude. The data flow for a photo posting activity is illustrated by the solid arrows. After the requests are sent out, the program goes back to the sleeping mode.
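The posting decision described above can be sketched in a few lines. This is only an illustration of the control flow: it assumes that an unanswered request counts as a refusal and that a photo without unanimous consent is shown blurred, and the function name, return values and user names are hypothetical.

```python
def post_photo(co_owners, responses):
    """Decide how a co-photo is shown once permission requests are answered.

    co_owners: users identified in the photo by the FR system.
    responses: dict mapping a co-owner to True (agree) or False (refuse).
    Assumption: a missing response is treated as a refusal.
    """
    if all(responses.get(user, False) for user in co_owners):
        return "posted"   # shared on the owner's page like a normal photo
    return "blurred"      # at least one co-owner has not agreed


print(post_photo(["bob", "carol"], {"bob": True, "carol": True}))
print(post_photo(["bob", "carol"], {"bob": True}))
```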

Photo sharing is one of the most popular features of online social networks such as Facebook. Unfortunately, careless photo posting may compromise the privacy of individuals in a posted photo. To curb this privacy leakage, we proposed enabling the individuals potentially in a photo to give their permission before a co-photo is posted. We designed a privacy-preserving FR system to identify individuals in a co-photo. The proposed system features low computation cost and confidentiality of the training set. The proposed scheme will be very useful in protecting users' privacy in photo/image sharing over online social networks. However, there always exists a trade-off between privacy and utility.

Photo sharing on social network sites has grown tremendously, to over a billion new photos a month. Yet the tagging of photos on social network sites such as Facebook has caused users to lose control over their identity and information disclosures. The main objective of the paper is to make photo sharing on a social network like Facebook secure. We proposed a technique that lets individuals give permission before a photo is shared. For that we need a privacy-preserving face recognition system to identify the people in the images. Users are forced to accept the resulting problems because of a strong desire to participate in photo sharing. Personal photos are highly variable in appearance but are increasingly shared online in social networks that contain a great deal of information about the photographers, the people who are photographed, and their various relationships. Finally, the privacy-preserving FR system identifies individuals in the co-photo and protects users' privacy in photo sharing over Online Social Networks. The results show that the proposed scheme is important to everyone who uses Facebook to share their photos securely.